Anton de Ruiter

Analytical Solution for Inverse Kinematics

Oct 29, 2024Abstract:This paper introduces a closed-form analytical solution for the inverse kinematics (IK) of a 6 Degrees of Freedom (DOF) serial robotic manipulator arm, configured with six revolute joints and utilized within the Lunar Exploration Rover System (LERS). As a critical asset for conducting precise operations in the demanding lunar environment, this robotic arm relies on the IK solution to determine joint parameters required for precise end-effector positioning, essential for tasks such as sample collection, infrastructure assembly, and equipment deployment. By applying geometric principles, the proposed method offers a highly efficient and accurate approach to solving the IK problem, significantly reducing computational demands compared to traditional numerical methods. This advancement not only enhances real-time operational capabilities but is also optimized for space robotics, where precision and speed are critical. Additionally, the paper explores the integration of the LERS robotic system, underscoring the importance of this work in supporting autonomous lunar exploration within the ARTEMIS program and future missions

A Novel Optimization-Based Collision Avoidance For Autonomous On-Orbit Assembly

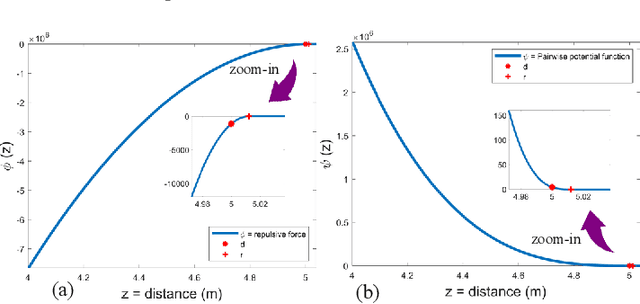

Apr 15, 2024Abstract:The collision avoidance constraints are prominent as non-convex, non-differentiable, and challenging when defined in optimization-based motion planning problems. To overcome these issues, this paper presents a novel non-conservative collision avoidance technique using the notion of convex optimization to establish the distance between robotic spacecraft and space structures for autonomous on-orbit assembly operations. The proposed technique defines each ellipsoidal- and polyhedral-shaped object as the union of convex compact sets, each represented non-conservatively by a real-valued convex function. Then, the functions are introduced as a set of constraints to a convex optimization problem to produce a new set of differentiable constraints resulting from the optimality conditions. These new constraints are later fed into an optimal control problem to enforce collision avoidance where the motion planning for the autonomous on-orbit assembly takes place. Numerical experiments for two assembly scenarios in tight environments are presented to demonstrate the capability and effectiveness of the proposed technique. The results show that this framework leads to optimal non-conservative trajectories for robotic spacecraft in tight environments. Although developed for autonomous on-orbit assembly, this technique could be used for any generic motion planning problem where collision avoidance is crucial.

Multi-agent Motion Planning for Dense and Dynamic Environments via Deep Reinforcement Learning

Jan 18, 2020

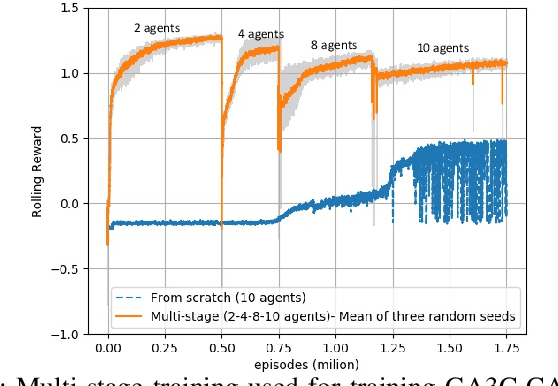

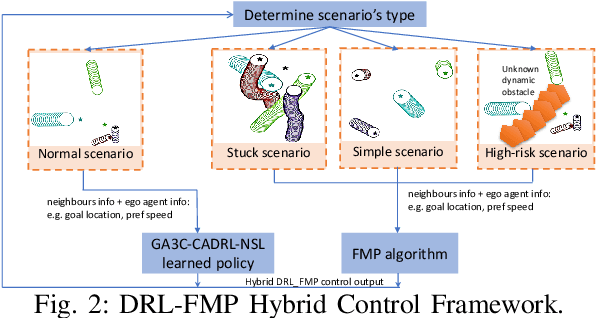

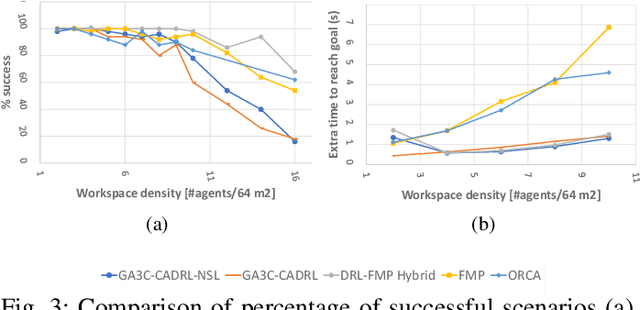

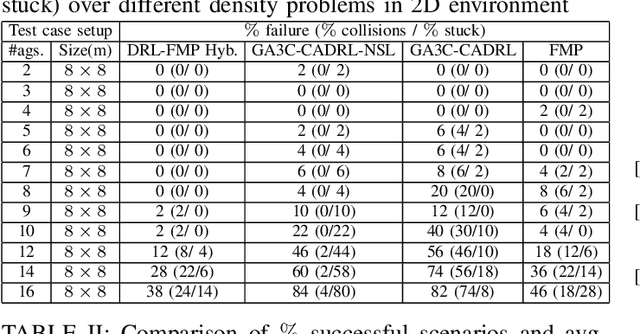

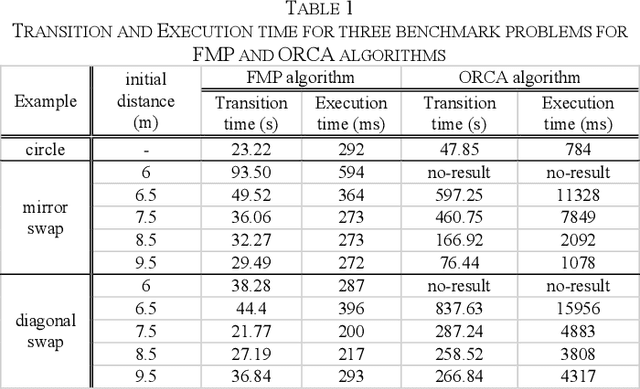

Abstract:This paper introduces a hybrid algorithm of deep reinforcement learning (RL) and Force-based motion planning (FMP) to solve distributed motion planning problem in dense and dynamic environments. Individually, RL and FMP algorithms each have their own limitations. FMP is not able to produce time-optimal paths and existing RL solutions are not able to produce collision-free paths in dense environments. Therefore, we first tried improving the performance of recent RL approaches by introducing a new reward function that not only eliminates the requirement of a pre supervised learning (SL) step but also decreases the chance of collision in crowded environments. That improved things, but there were still a lot of failure cases. So, we developed a hybrid approach to leverage the simpler FMP approach in stuck, simple and high-risk cases, and continue using RL for normal cases in which FMP can't produce optimal path. Also, we extend GA3C-CADRL algorithm to 3D environment. Simulation results show that the proposed algorithm outperforms both deep RL and FMP algorithms and produces up to 50% more successful scenarios than deep RL and up to 75% less extra time to reach goal than FMP.

Force-based Algorithm for Motion Planning of Large Agent Teams

Sep 10, 2019

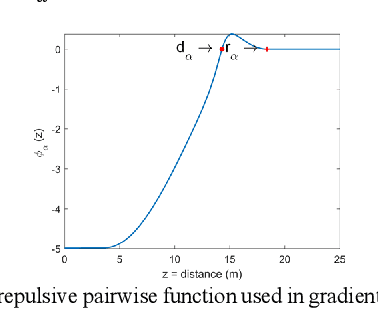

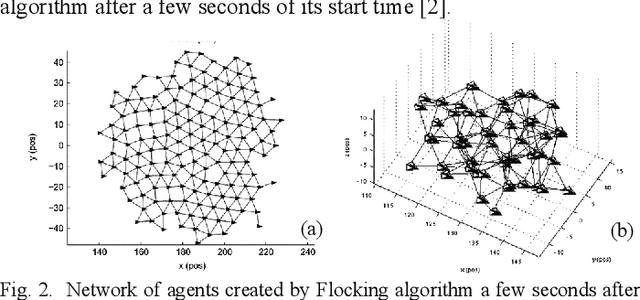

Abstract:This paper presents a distributed, efficient, scalable and real-time motion planning algorithm for a large group of agents moving in 2 or 3-dimensional spaces. This algorithm enables autonomous agents to generate individual trajectories independently with only the relative position information of neighboring agents. Each agent applies a force-based control that contains two main terms: collision avoidance and navigational feedback. The first term keeps two agents separate with a certain distance, while the second term attracts each agent toward its goal location. Compared with existing collision-avoidance algorithms, the proposed force-based motion planning (FMP) algorithm is able to find collision-free motions with lower transition time, free from velocity state information of neighbouring agents. It leads to less computational overhead. The performance of proposed FMP is examined over several dense and complex 2D and 3D benchmark simulation scenarios, with results outperforming existing methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge