Alex Levering

Contrastive Pretraining for Visual Concept Explanations of Socioeconomic Outcomes

Apr 15, 2024

Abstract:Predicting socioeconomic indicators from satellite imagery with deep learning has become an increasingly popular research direction. Post-hoc concept-based explanations can be an important step towards broader adoption of these models in policy-making as they enable the interpretation of socioeconomic outcomes based on visual concepts that are intuitive to humans. In this paper, we study the interplay between representation learning using an additional task-specific contrastive loss and post-hoc concept explainability for socioeconomic studies. Our results on two different geographical locations and tasks indicate that the task-specific pretraining imposes a continuous ordering of the latent space embeddings according to the socioeconomic outcomes. This improves the model's interpretability as it enables the latent space of the model to associate urban concepts with continuous intervals of socioeconomic outcomes. Further, we illustrate how analyzing the model's conceptual sensitivity for the intervals of socioeconomic outcomes can shed light on new insights for urban studies.

Cross-Modal Learning of Housing Quality in Amsterdam

Mar 13, 2024

Abstract:In our research we test data and models for the recognition of housing quality in the city of Amsterdam from ground-level and aerial imagery. For ground-level images we compare Google StreetView (GSV) to Flickr images. Our results show that GSV predicts the most accurate building quality scores, approximately 30% better than using only aerial images. However, we find that through careful filtering and by using the right pre-trained model, Flickr image features combined with aerial image features are able to halve the performance gap to GSV features from 30% to 15%. Our results indicate that there are viable alternatives to GSV for liveability factor prediction, which is encouraging as GSV images are more difficult to acquire and not always available.

Time Series Analysis of Urban Liveability

Sep 01, 2023

Abstract:In this paper we explore deep learning models to monitor longitudinal liveability changes in Dutch cities at the neighbourhood level. Our liveability reference data is defined by a country-wide yearly survey based on a set of indicators combined into a liveability score, the Leefbaarometer. We pair this reference data with yearly available high-resolution aerial images, which creates yearly timesteps at which liveability can be monitored. We deploy a convolutional neural network trained on an aerial image from 2016 and the Leefbaarometer score to predict liveability at new timesteps 2012 and 2020. The results in a city used for training (Amsterdam) and one never seen during training (Eindhoven) show some trends which are difficult to interpret, especially in light of the differences in image acquisitions at the different time steps. This demonstrates the complexity of liveability monitoring across time periods and the necessity for more sophisticated methods compensating for changes unrelated to liveability dynamics.

* Accepted at JURSE 2023

Geo-Information Harvesting from Social Media Data

Nov 01, 2022

Abstract:As unconventional sources of geo-information, massive imagery and text messages from open platforms and social media form a temporally quasi-seamless, spatially multi-perspective stream, but with unknown and diverse quality. Due to its complementarity to remote sensing data, geo-information from these sources offers promising perspectives, but harvesting is not trivial due to its data characteristics. In this article, we address key aspects in the field, including data availability, analysis-ready data preparation and data management, geo-information extraction from social media text messages and images, and the fusion of social media and remote sensing data. We then showcase some exemplary geographic applications. In addition, we present the first extensive discussion of ethical considerations of social media data in the context of geo-information harvesting and geographic applications. With this effort, we wish to stimulate curiosity and lay the groundwork for researchers who intend to explore social media data for geo-applications. We encourage the community to join forces by sharing their code and data.

Detecting Unsigned Physical Road Incidents from Driver-View Images

Apr 24, 2020

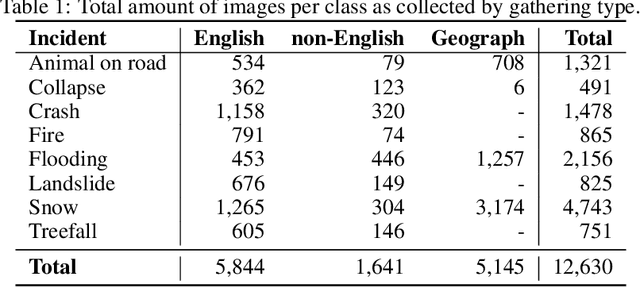

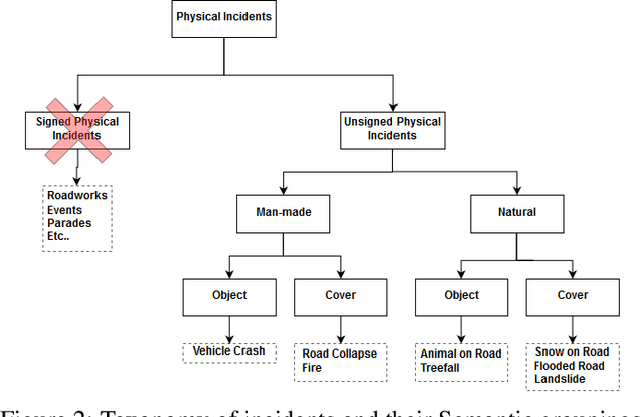

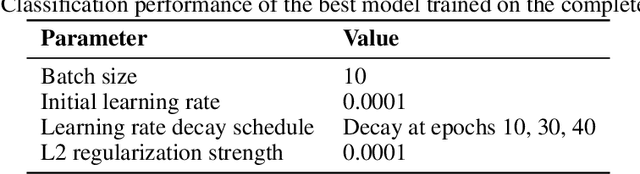

Abstract:Safety on roads is of utmost importance, especially in the context of autonomous vehicles. A critical need is to detect and communicate disruptive incidents early and effectively. In this paper we propose a system based on an off-the-shelf deep neural network architecture that is able to detect and recognize types of unsigned (not placarded, for example by traffic signs), physical (visible in images) road incidents. We develop a taxonomy for unsigned physical incidents to provide a means of organizing and grouping related incidents. After selecting eight target types of incidents, we collect a dataset of twelve thousand images gathered from publicly available web sources. We subsequently fine-tune a convolutional neural network to recognize the eight types of road incidents. The proposed model is able to recognize incidents with a high level of accuracy (higher than 90%). We further show that while our system generalizes well across spatial context by training a classifier on geostratified data in the United Kingdom (with an accuracy of over 90%), the translation to visually less similar environments requires spatially distributed data collection. Note: this is a pre-print version of work accepted in IEEE Transactions on Intelligent Vehicles (T-IV; in press). The paper is currently in production, and the DOI link will be added soon.
