Abhishek Singh

Microsoft

AdaSplit: Adaptive Trade-offs for Resource-constrained Distributed Deep Learning

Dec 02, 2021

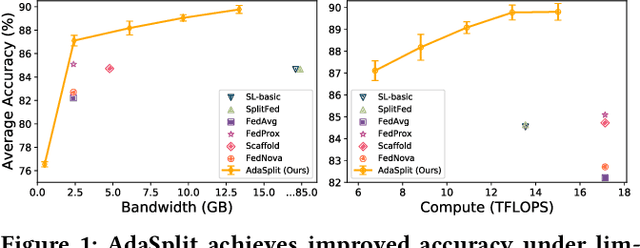

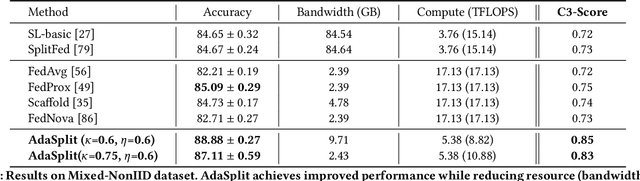

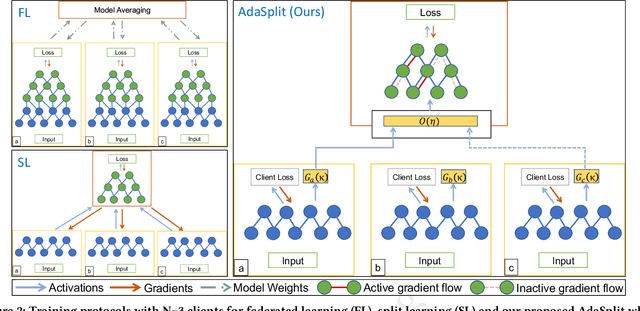

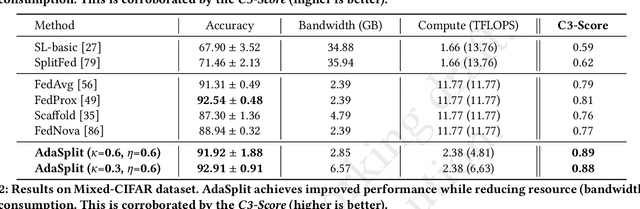

Abstract: Distributed deep learning frameworks like federated learning (FL) and its variants are enabling personalized experiences across a wide range of web clients and mobile/IoT devices. However, FL-based frameworks are constrained by computational resources at clients due to the exploding growth of model parameters (e.g., billion-parameter models). Split learning (SL), a recent framework, reduces client compute load by splitting the model training between client and server. This flexibility is extremely useful for low-compute setups but is often achieved at the cost of increased bandwidth consumption and may result in sub-optimal convergence, especially when client data is heterogeneous. In this work, we introduce AdaSplit, which enables efficient scaling of SL to low-resource scenarios by reducing bandwidth consumption and improving performance across heterogeneous clients. To capture and benchmark this multi-dimensional nature of distributed deep learning, we also introduce C3-Score, a metric to evaluate performance under resource budgets. We validate the effectiveness of AdaSplit under limited resources through extensive experimental comparison with strong federated and split learning baselines. We also present a sensitivity analysis of key design choices in AdaSplit, which validates its ability to provide adaptive trade-offs across variable resource budgets.
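
As a rough illustration of the split learning setup the abstract describes, the sketch below divides a two-layer network between a client and a server, so that only activations and their gradients cross the boundary. It shows plain split learning, not AdaSplit itself. The class names, layer sizes, learning rate, synthetic data, and the choice to hold labels on the server are all assumptions made for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

class Client:
    """Holds the first layer; raw inputs never leave the client."""
    def __init__(self, in_dim, hidden_dim):
        self.w = rng.normal(scale=0.1, size=(in_dim, hidden_dim))

    def forward(self, x):
        self.x = x
        self.pre = x @ self.w
        return np.maximum(self.pre, 0.0)  # ReLU activations sent to the server

    def backward(self, grad_act, lr):
        # grad_act is the gradient w.r.t. the activations, received from the server
        grad_pre = grad_act * (self.pre > 0)
        self.w -= lr * self.x.T @ grad_pre

class Server:
    """Holds the second layer and (by assumption) the labels."""
    def __init__(self, hidden_dim, out_dim):
        self.w = rng.normal(scale=0.1, size=(hidden_dim, out_dim))

    def step(self, act, y, lr):
        pred = act @ self.w
        err = pred - y
        loss = float(np.mean(err ** 2))
        grad_pred = 2.0 * err / err.size
        grad_act = grad_pred @ self.w.T      # computed before the weight update
        self.w -= lr * act.T @ grad_pred
        return loss, grad_act                # grad_act is sent back to the client

def train(steps=500, lr=0.02):
    # Synthetic regression data, purely for illustration
    x = rng.normal(size=(64, 5))
    y = x @ rng.normal(size=(5, 1))
    client, server = Client(5, 16), Server(16, 1)
    losses = []
    for _ in range(steps):
        act = client.forward(x)                    # client -> server traffic
        loss, grad_act = server.step(act, y, lr)   # server-side compute
        client.backward(grad_act, lr)              # server -> client traffic
        losses.append(loss)
    return losses

if __name__ == "__main__":
    losses = train()
    print("first loss:", losses[0], "last loss:", losses[-1])
```

Every training step here costs one activation upload and one gradient download, which is the bandwidth overhead the abstract refers to.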

MedPerf: Open Benchmarking Platform for Medical Artificial Intelligence using Federated Evaluation

Oct 08, 2021

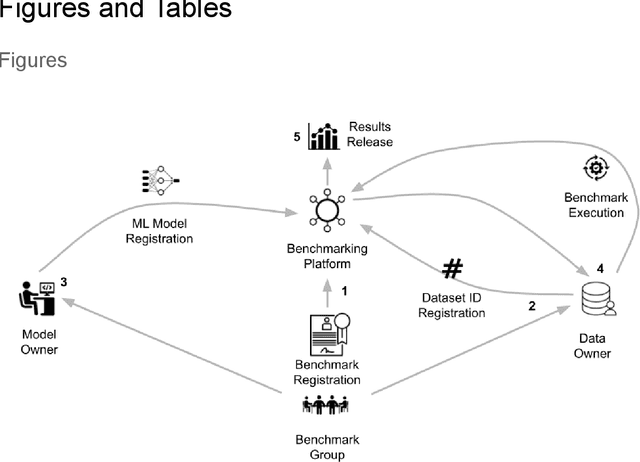

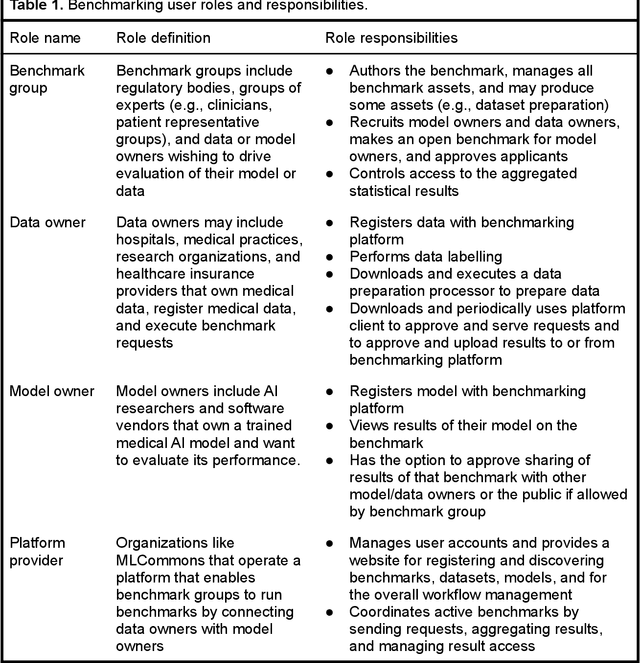

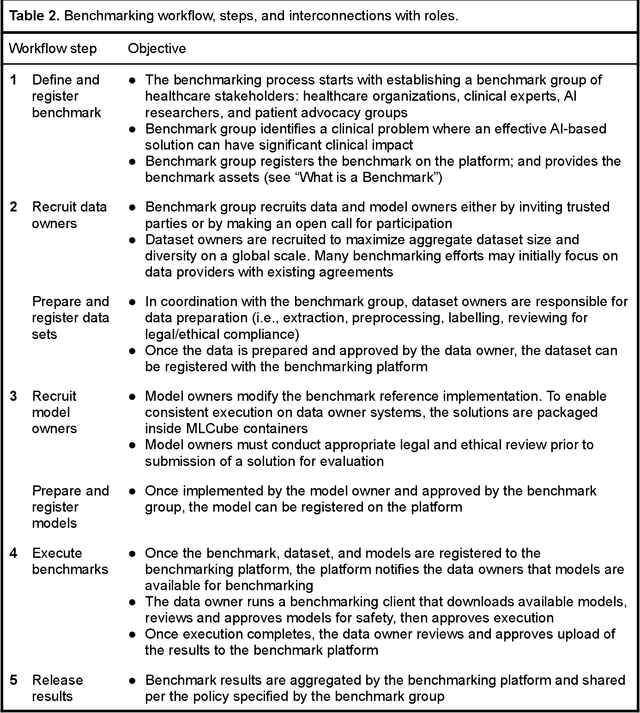

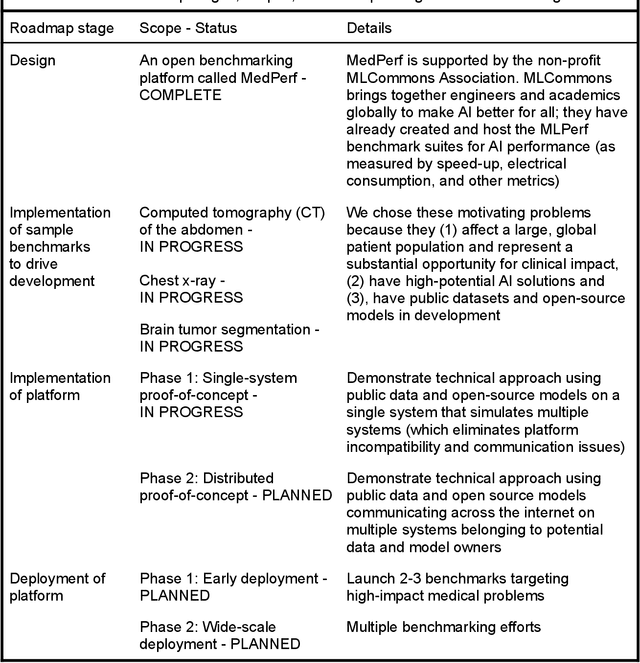

Abstract: Medical AI has tremendous potential to advance healthcare by supporting the evidence-based practice of medicine, personalizing patient treatment, reducing costs, and improving provider and patient experience. We argue that unlocking this potential requires a systematic way to measure the performance of medical AI models on large-scale heterogeneous data. To meet this need, we are building MedPerf, an open framework for benchmarking machine learning in the medical domain. MedPerf will enable federated evaluation in which models are securely distributed to different facilities for evaluation, thereby empowering healthcare organizations to assess and verify the performance of AI models in an efficient and human-supervised process, while prioritizing privacy. We describe the current challenges healthcare and AI communities face, the need for an open platform, the design philosophy of MedPerf, its current implementation status, and our roadmap. We call for researchers and organizations to join us in creating the MedPerf open benchmarking platform.

An In-Memory Analog Computing Co-Processor for Energy-Efficient CNN Inference on Mobile Devices

May 24, 2021

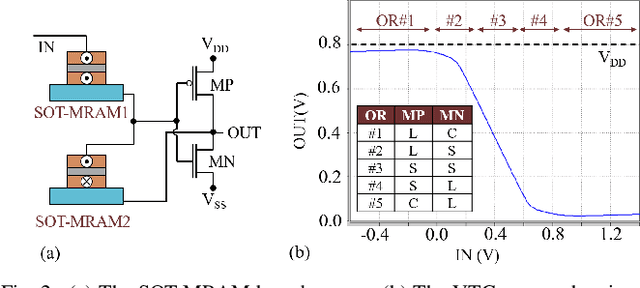

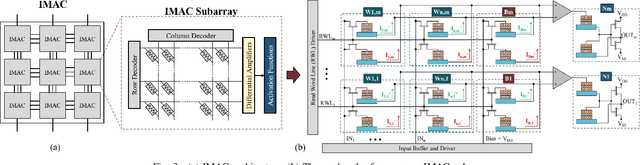

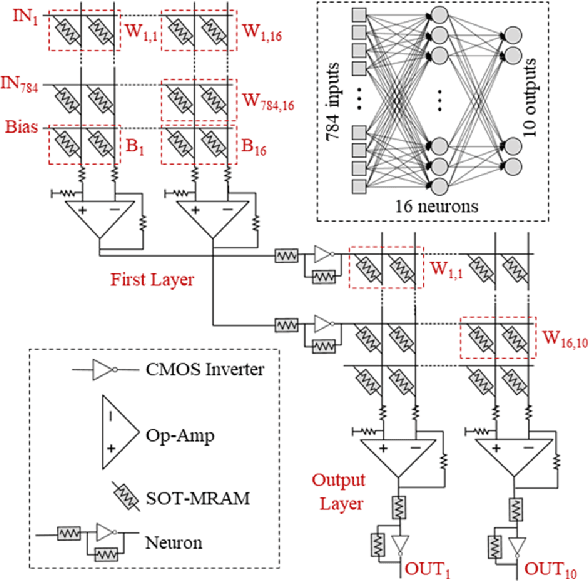

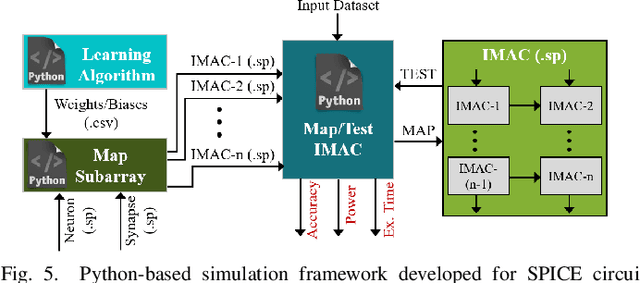

Abstract: In this paper, we develop an in-memory analog computing (IMAC) architecture realizing both synaptic behavior and activation functions within non-volatile memory arrays. Spin-orbit torque magnetoresistive random-access memory (SOT-MRAM) devices are leveraged to realize sigmoidal neurons as well as binarized synapses. First, it is shown that the proposed IMAC architecture can be utilized to realize a multilayer perceptron (MLP) classifier achieving orders of magnitude performance improvement compared to previous mixed-signal and digital implementations. Next, a heterogeneous mixed-signal and mixed-precision CPU-IMAC architecture is proposed for convolutional neural network (CNN) inference on mobile processors, in which IMAC is designed as a co-processor to realize fully-connected (FC) layers, whereas convolution layers are executed on the CPU. Architecture-level analytical models are developed to evaluate the performance and energy consumption of the CPU-IMAC architecture. Simulation results exhibit 6.5% and 10% energy savings for CPU-IMAC based realizations of LeNet and VGG CNN models, for MNIST and CIFAR-10 pattern recognition tasks, respectively.

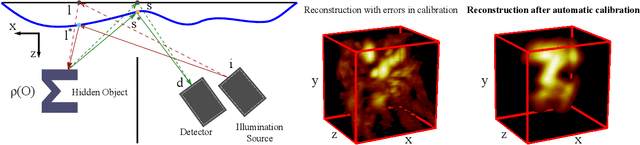

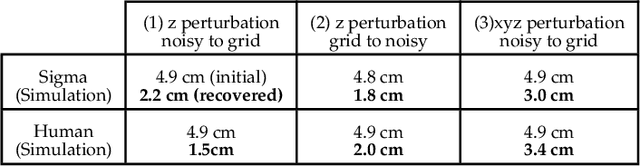

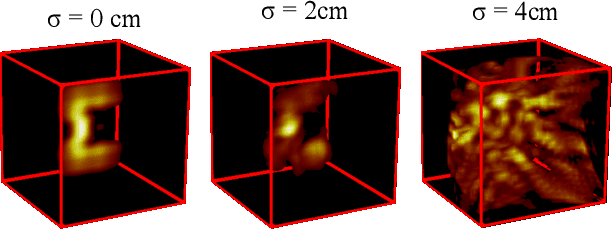

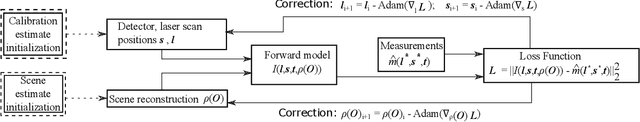

Automatic calibration of time of flight based non-line-of-sight reconstruction

May 21, 2021

Abstract: Time-of-flight based non-line-of-sight (NLOS) imaging approaches require precise calibration of illumination and detector positions on the visible scene to produce reasonable results. If the calibration error is sufficiently high, reconstruction can fail entirely without any indication to the user. In this work, we highlight the necessity of building autocalibration into NLOS reconstruction in order to handle mis-calibration. We propose a forward model of NLOS measurements that is differentiable with respect to both the hidden scene albedo and the virtual illumination and detector positions. With only a mean squared error loss and no regularization, our model enables joint reconstruction and recovery of calibration parameters by minimizing the measurement residual using gradient descent. We demonstrate that our method is able to produce robust reconstructions using simulated and real data where the calibration error applied causes other state-of-the-art algorithms to fail.

Can Self Reported Symptoms Predict Daily COVID-19 Cases?

May 18, 2021

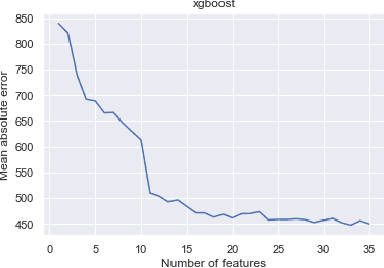

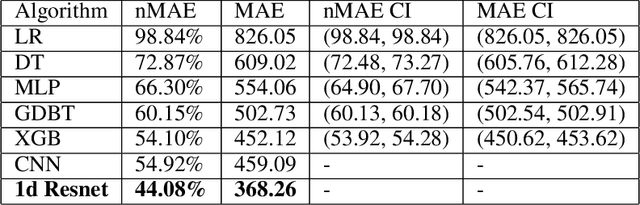

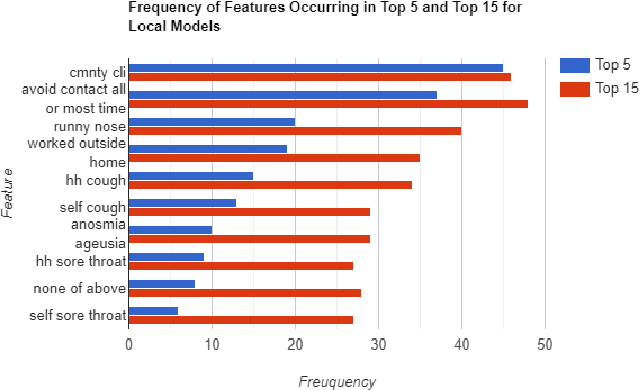

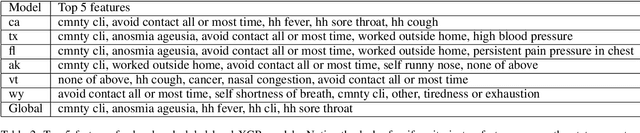

Abstract: The COVID-19 pandemic has impacted lives and economies across the globe, leading to many deaths. While vaccination is an important intervention, its roll-out is slow and unequal across the globe. Therefore, extensive testing remains one of the key methods to monitor and contain the virus. Testing on a large scale is expensive and arduous. Hence, we need alternative methods to estimate the number of cases. Online surveys have been shown to be an effective method for data collection amidst the pandemic. In this work, we develop machine learning models to estimate the prevalence of COVID-19 using self-reported symptoms. Our best model predicts the daily cases with a mean absolute error (MAE) of 226.30 (normalized MAE of 27.09%) per state, which demonstrates the possibility of predicting the actual number of confirmed cases by utilizing self-reported symptoms. The models are developed at two levels of data granularity: local models, which are trained at the state level, and a single global model, which is trained on the combined data aggregated across all states. Our results indicate a lower error on the local models as opposed to the global model. In addition, we show that the most important symptoms (features) vary considerably from state to state. This work demonstrates that models developed on crowd-sourced data, curated via online platforms, can complement the existing epidemiological surveillance infrastructure in a cost-effective manner.
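
To make the two error figures quoted above concrete, here is a minimal sketch of how MAE and a normalized MAE could be computed. The abstract does not say how the MAE is normalized; dividing by the mean of the actual values is an assumption made here, and the daily case counts are invented for illustration.

```python
import numpy as np

def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.mean(np.abs(actual - predicted)))

def normalized_mae(actual, predicted):
    """MAE as a percentage of the mean actual value.

    Assumption: normalization by the mean of the actual series; the
    source does not specify which normalization it uses.
    """
    actual = np.asarray(actual, dtype=float)
    return 100.0 * mean_absolute_error(actual, predicted) / float(np.mean(actual))

# Hypothetical daily case counts for one state
actual = [800, 900, 1000, 700]
predicted = [750, 950, 900, 800]

if __name__ == "__main__":
    print(mean_absolute_error(actual, predicted))  # 75.0
    print(normalized_mae(actual, predicted))
```

Normalizing by the mean makes errors comparable across states with very different case volumes, which matters when local models are compared with a global one.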

COVID-19 Outbreak Prediction and Analysis using Self Reported Symptoms

Dec 21, 2020

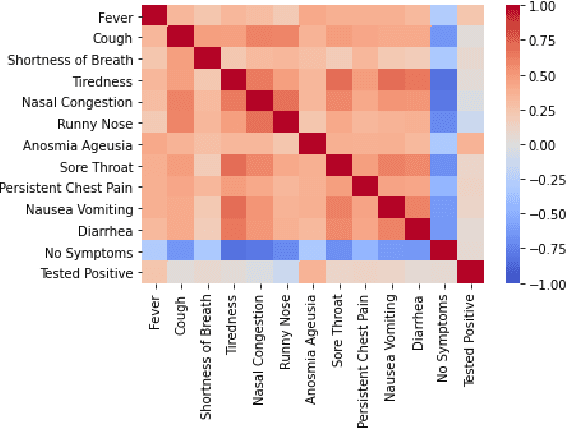

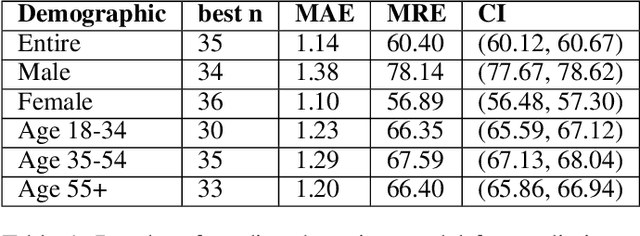

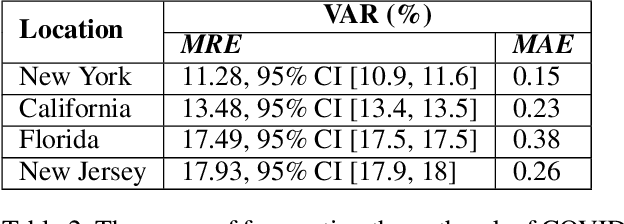

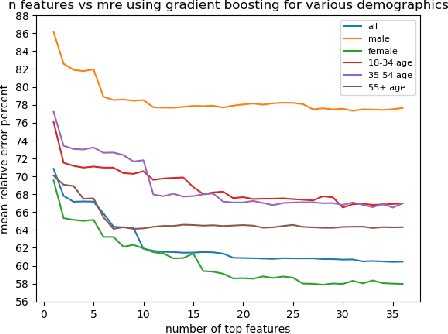

Abstract: The COVID-19 pandemic has challenged scientists and policy-makers internationally to develop novel approaches to public health policy. Furthermore, it has been observed that the prevalence and spread of COVID-19 vary across spatial, temporal, and demographic dimensions. Despite ramped-up testing, we are still not at the required level in most parts of the globe. Therefore, we utilize self-reported symptoms survey data to understand trends in the spread of COVID-19. The aim of this study is to segment populations that are highly susceptible. In order to understand such populations, we perform exploratory data analysis, outbreak prediction, and time-series forecasting using public health and policy datasets. From our studies, we try to predict the likely percentage of the population that tested positive for COVID-19 based on self-reported symptoms. Our findings reaffirm the predictive value of symptoms such as anosmia and ageusia. We also forecast the percentage of the population with COVID-19-like illness (CLI) and the percentage that tested positive, with absolute errors of 0.15% and 1.14%, respectively. These findings could aid faster development of public health policy, particularly in areas with low levels of testing and a greater reliance on self-reported symptoms. Our analysis sheds light on identifying clinical attributes of interest across different demographics. We also provide insights into the effects of various policy enactments on COVID-19 prevalence.

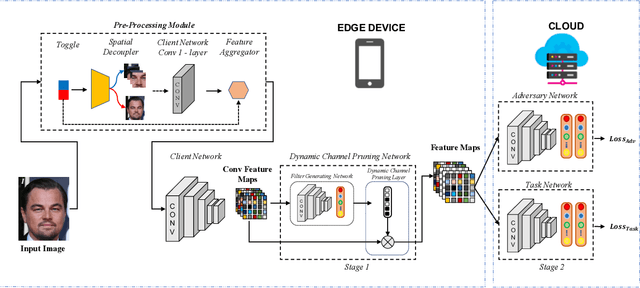

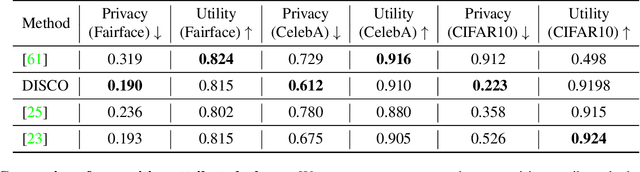

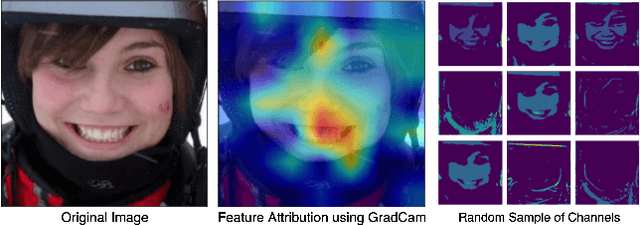

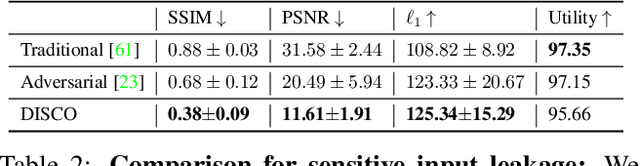

DISCO: Dynamic and Invariant Sensitive Channel Obfuscation for deep neural networks

Dec 20, 2020

Abstract: Recent deep learning models have shown remarkable performance in image classification. While these deep learning systems are getting closer to practical deployment, the common assumption made about data is that it does not carry any sensitive information. This assumption may not hold for many practical cases, especially in domains where an individual's personal information is involved, like healthcare and facial recognition systems. We posit that selectively removing features in the latent space can protect the sensitive information and provide a better privacy-utility trade-off. Consequently, we propose DISCO, which learns a dynamic and data-driven pruning filter to selectively obfuscate sensitive information in the feature space. We propose diverse attack schemes for sensitive inputs and attributes and demonstrate the effectiveness of DISCO against state-of-the-art methods through quantitative and qualitative evaluation. Finally, we also release an evaluation benchmark dataset of 1 million sensitive representations to encourage rigorous exploration of novel attack schemes.

PoinT-5: Pointer Network and T-5 based Financial Narrative Summarisation

Oct 12, 2020

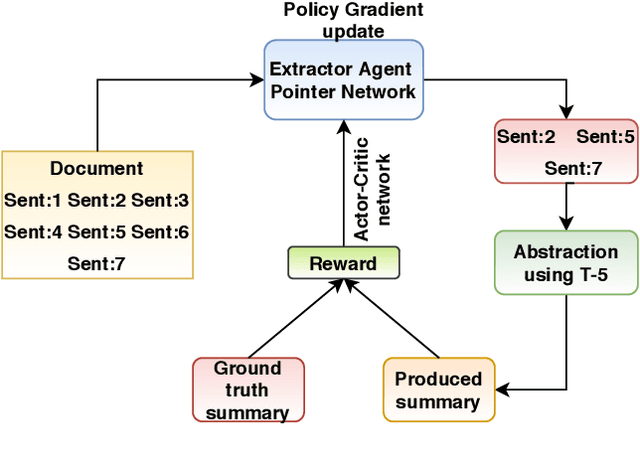

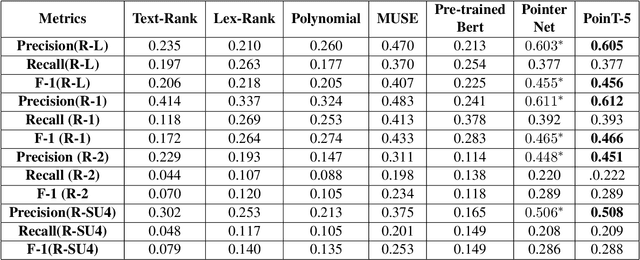

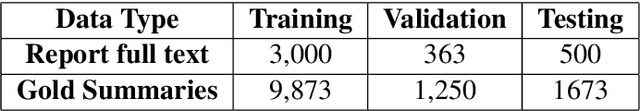

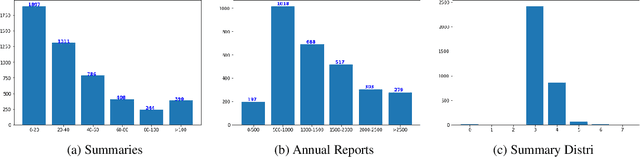

Abstract: Companies provide annual reports to their shareholders at the end of the financial year that describe their operations and financial conditions. These reports average 80 pages in length and may extend up to 250 pages. In this paper, we propose our methodology PoinT-5 (the combination of Pointer Network and T-5 (Text-to-Text Transfer Transformer) algorithms) that we used in the Financial Narrative Summarisation (FNS) 2020 task. The proposed method uses pointer networks to extract important narrative sentences from the report, and then T-5 is used to paraphrase the extracted sentences into a concise yet informative sentence. We evaluate our method using ROUGE-N (1,2), L, and SU4. The proposed method achieves the highest precision scores in all the metrics and the highest F1 scores in ROUGE-1 and LCS, and it is the only solution to cross the MUSE solution baseline in ROUGE-LCS metrics.
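
For readers unfamiliar with the ROUGE-N scores mentioned above, the following minimal sketch computes n-gram precision, recall, and F1 between a candidate summary and a single reference. It uses whitespace tokenization and lowercasing as simplifying assumptions and is not the official ROUGE toolkit or the evaluation script used in the FNS 2020 task; the example sentences are invented.

```python
from collections import Counter

def ngrams(tokens, n):
    """Count the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def rouge_n(candidate, reference, n=1):
    """Return (precision, recall, f1) of n-gram overlap.

    Assumption: simple lowercased whitespace tokenization, one reference.
    """
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())  # clipped n-gram matches
    cand_total = sum(cand.values())
    ref_total = sum(ref.values())
    precision = overlap / cand_total if cand_total else 0.0
    recall = overlap / ref_total if ref_total else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

# Hypothetical candidate and reference summaries
candidate = "revenue grew strongly this year"
reference = "revenue grew this year despite costs"

if __name__ == "__main__":
    print(rouge_n(candidate, reference, n=1))
    print(rouge_n(candidate, reference, n=2))
```

Precision rewards summaries that contain little beyond what the reference says, while recall rewards coverage of the reference, so a system can lead on one without leading on the other.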

Proximity Sensing for Contact Tracing

Sep 04, 2020

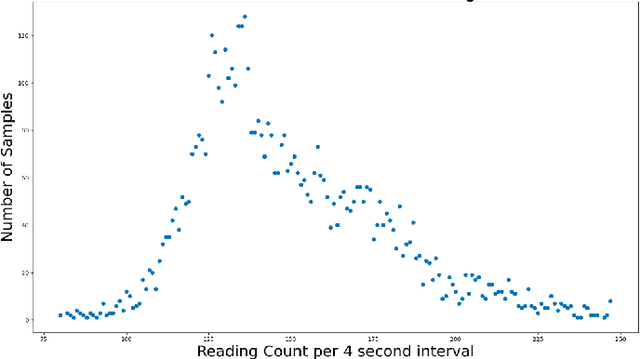

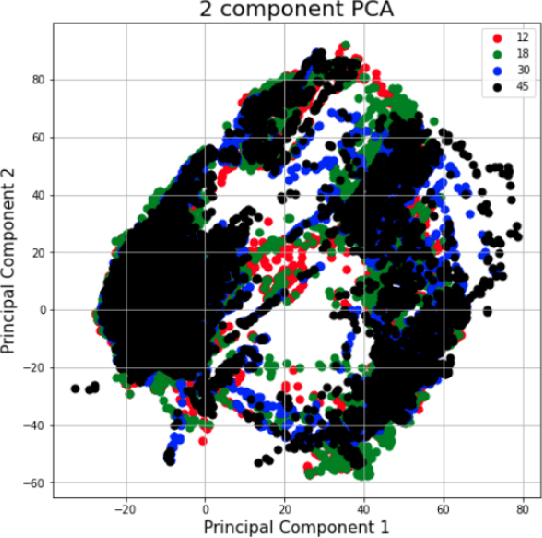

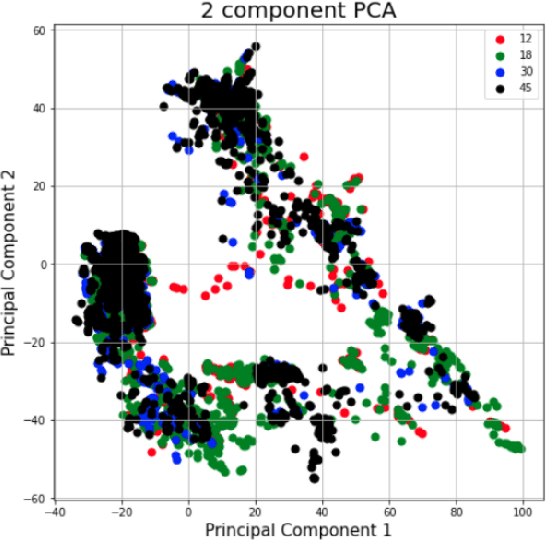

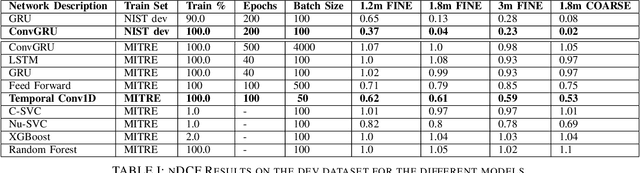

Abstract: The TC4TL (Too Close For Too Long) challenge aims to design an effective proximity sensing algorithm that can accurately provide exposure notifications. In this paper, we describe our approach to modeling sensor and other device-level data to estimate the distance between two phones. We also present our research and data analysis on the TC4TL challenge and discuss various limitations associated with the task and the dataset used for this purpose.

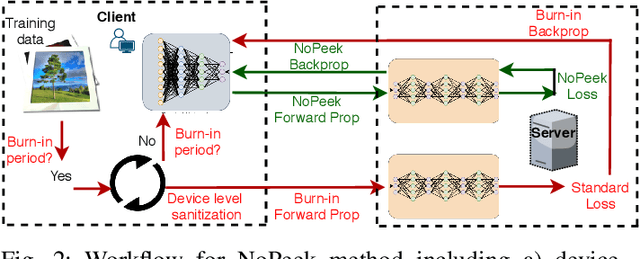

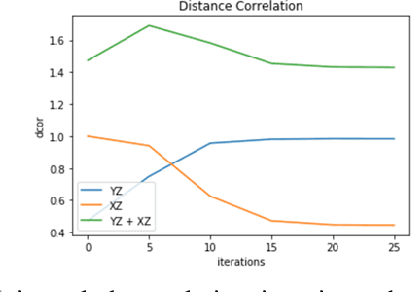

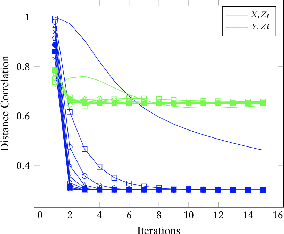

NoPeek: Information leakage reduction to share activations in distributed deep learning

Aug 20, 2020

Abstract: For distributed machine learning with sensitive data, we demonstrate how minimizing distance correlation between raw data and intermediary representations reduces leakage of sensitive raw data patterns across client communications while maintaining model accuracy. Leakage (measured using distance correlation between input and intermediate representations) is the risk associated with the invertibility of raw data from intermediary representations. This can prevent client entities that hold sensitive data from using distributed deep learning services. We demonstrate that our method is resilient to such reconstruction attacks and is based on reduction of distance correlation between raw data and learned representations during training and inference with image datasets. We prevent such reconstruction of raw data while maintaining information required to sustain good classification accuracies.
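
Since the abstract measures leakage with distance correlation, a small NumPy sketch of the standard sample distance correlation may help. This is the textbook biased (V-statistic) estimator, not necessarily the exact form used as a training loss in the paper; the function names and the synthetic data are assumptions made for illustration.

```python
import numpy as np

def _centered_distances(x):
    """Pairwise Euclidean distance matrix, double-centered."""
    x = np.asarray(x, dtype=float)
    x = x.reshape(len(x), -1)               # flatten each sample to a vector
    diff = x[:, None, :] - x[None, :, :]
    d = np.sqrt((diff ** 2).sum(axis=-1))
    return (d - d.mean(axis=0, keepdims=True)
              - d.mean(axis=1, keepdims=True) + d.mean())

def distance_correlation(x, z):
    """Sample distance correlation between paired samples x and z, in [0, 1]."""
    a = _centered_distances(x)
    b = _centered_distances(z)
    dcov2 = (a * b).mean()                  # squared sample distance covariance
    denom = np.sqrt((a * a).mean() * (b * b).mean())
    if denom == 0.0:
        return 0.0
    return float(np.sqrt(max(dcov2, 0.0) / denom))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    raw = rng.normal(size=(200, 2))         # stand-in for raw inputs
    leaky = 2.0 * raw + 1.0                 # representation that is an affine copy
    unrelated = rng.normal(size=(200, 2))   # representation independent of raw
    print("affine copy:", distance_correlation(raw, leaky))      # close to 1
    print("independent:", distance_correlation(raw, unrelated))  # much smaller
```

A representation that is an affine copy of the input scores close to 1, while one that is statistically independent of the input scores near 0, which is why lowering this quantity is used as a proxy for lowering leakage.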
