Renato Umeton

AI Benchmark Democratization and Carpentry

Dec 12, 2025

Abstract: Benchmarks are a cornerstone of modern machine learning, enabling reproducibility, comparison, and scientific progress. However, AI benchmarks are increasingly complex, requiring dynamic, AI-focused workflows. Rapid evolution in model architectures, scale, datasets, and deployment contexts makes evaluation a moving target. Large language models often memorize static benchmarks, creating a gap between benchmark results and real-world performance. Beyond traditional static benchmarks, continuous adaptive benchmarking frameworks are needed to align scientific assessment with deployment risks. This calls for skills and education in AI Benchmark Carpentry. Drawing on our experience with MLCommons, educational initiatives, and programs such as the DOE's Trillion Parameter Consortium, we identify key barriers: high resource demands, limited access to specialized hardware, lack of benchmark design expertise, and uncertainty in relating results to application domains. Current benchmarks often emphasize peak performance on top-tier hardware, offering limited guidance for diverse, real-world scenarios. Benchmarking must become dynamic, incorporating evolving models, updated data, and heterogeneous platforms while maintaining transparency, reproducibility, and interpretability. Democratization requires both technical innovation and systematic education across levels, building sustained expertise in benchmark design and use. Benchmarks should support application-relevant comparisons, enabling informed, context-sensitive decisions. Dynamic, inclusive benchmarking will ensure that evaluation keeps pace with AI evolution and supports responsible, reproducible, and accessible AI deployment. Community efforts can provide a foundation for AI Benchmark Carpentry.

Multiplex Imaging Analysis in Pathology: a Comprehensive Review on Analytical Approaches and Digital Toolkits

Nov 01, 2024

Abstract: Conventional histopathology has long been essential for disease diagnosis, relying on visual inspection of tissue sections. Immunohistochemistry aids in detecting specific biomarkers but is limited by its single-marker approach, which restricts its ability to capture the full tissue environment. The advent of multiplexed imaging technologies, such as multiplexed immunofluorescence and spatial transcriptomics, allows simultaneous visualization of multiple biomarkers in a single section, enriching morphological data with molecular and spatial information. This provides a more comprehensive view of the tissue microenvironment, cellular interactions, and disease mechanisms, which is crucial for understanding disease progression, prognosis, and treatment response. However, the extensive data from multiplexed imaging necessitates sophisticated computational methods for preprocessing, segmentation, feature extraction, and spatial analysis. These tools are vital for managing large, multidimensional datasets and converting raw imaging data into actionable insights. By automating labor-intensive tasks and enhancing reproducibility and accuracy, computational tools are pivotal in diagnostics and research. This review explores the current landscape of multiplexed imaging in pathology, detailing workflows and key technologies such as PathML, an AI-powered platform that streamlines image analysis and makes the interpretation of complex datasets accessible in clinical and research settings.

MedPerf: Open Benchmarking Platform for Medical Artificial Intelligence using Federated Evaluation

Oct 08, 2021

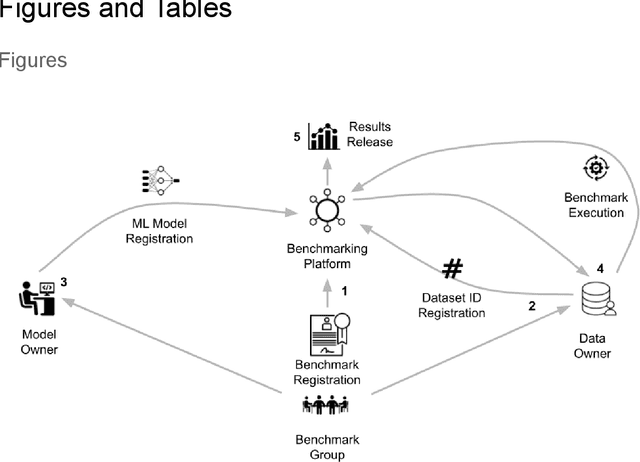

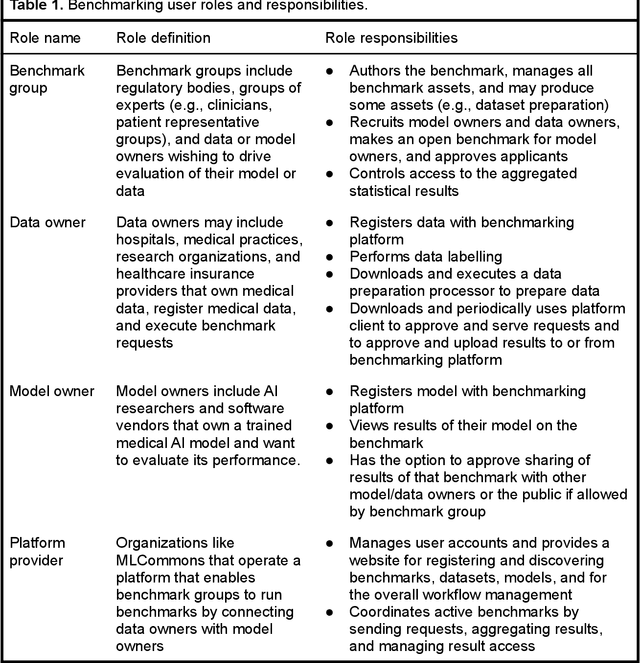

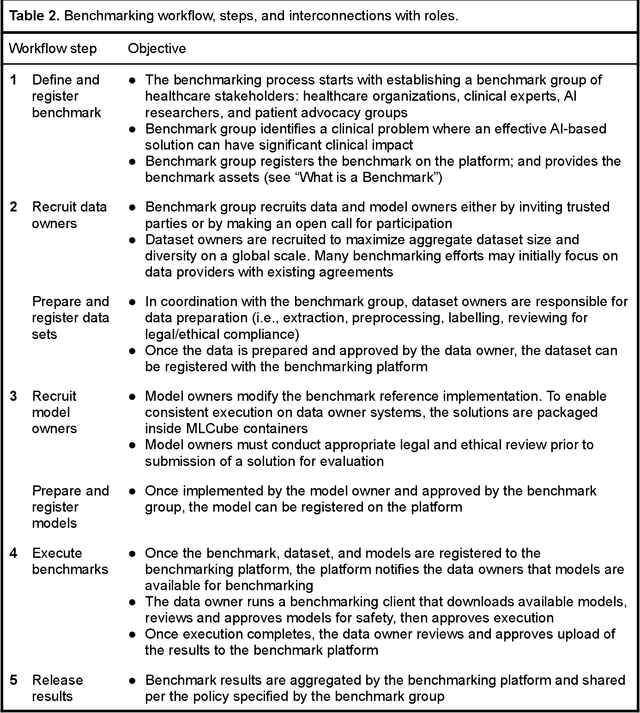

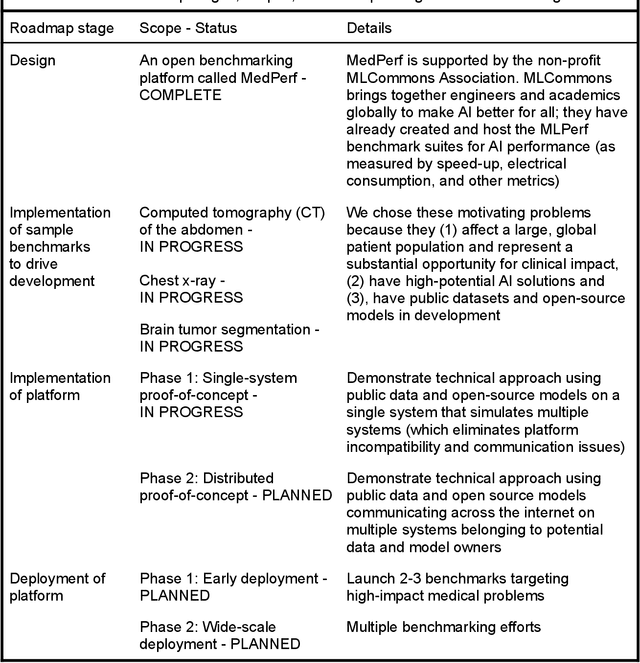

Abstract: Medical AI has tremendous potential to advance healthcare by supporting the evidence-based practice of medicine, personalizing patient treatment, reducing costs, and improving provider and patient experience. We argue that unlocking this potential requires a systematic way to measure the performance of medical AI models on large-scale heterogeneous data. To meet this need, we are building MedPerf, an open framework for benchmarking machine learning in the medical domain. MedPerf will enable federated evaluation in which models are securely distributed to different facilities for evaluation, thereby empowering healthcare organizations to assess and verify the performance of AI models in an efficient and human-supervised process, while prioritizing privacy. We describe the current challenges healthcare and AI communities face, the need for an open platform, the design philosophy of MedPerf, its current implementation status, and our roadmap. We call for researchers and organizations to join us in creating the MedPerf open benchmarking platform.