Abhishek Kashyap

Single-View Shape Completion for Robotic Grasping in Clutter

Dec 18, 2025

Abstract: In vision-based robot manipulation, a single camera view can capture only one side of the objects of interest, and occlusions in cluttered scenes restrict visibility further. As a result, the observed geometry is incomplete, and grasp estimation algorithms perform suboptimally. To address this limitation, we leverage diffusion models to perform category-level 3D shape completion from partial depth observations obtained from a single view, reconstructing complete object geometries to provide richer context for grasp planning. Our method focuses on common household items with diverse geometries, generating full 3D shapes that serve as input to downstream grasp inference networks. Unlike prior work, which primarily considers isolated objects or minimal clutter, we evaluate shape completion and grasping in realistic clutter scenarios with household objects. In preliminary evaluations on a cluttered scene, our approach improves the grasp success rate by 23% over a naive baseline without shape completion and by 19% over a recent state-of-the-art shape completion approach. Our code is available at https://amm.aass.oru.se/shape-completion-grasping/.

Exploiting Radiance Fields for Grasp Generation on Novel Synthetic Views

May 16, 2025

Abstract: Vision-based robot manipulation uses cameras to capture one or more images of a scene containing the objects to be manipulated. Taking multiple images can help if an object is occluded from one viewpoint but more visible from another. However, the camera has to be moved to a sequence of suitable positions to capture multiple images, which takes time and may not always be possible due to reachability constraints. So while additional images can produce more accurate grasp poses thanks to the extra information available, the time cost grows with the number of additional views sampled. Scene representations like Gaussian Splatting are capable of rendering accurate photorealistic virtual images from user-specified novel viewpoints. In this work, we show initial results which indicate that novel view synthesis can provide additional context for generating grasp poses. Our experiments on the GraspNet-1Billion dataset show that novel views contributed force-closure grasps in addition to those obtained from sparsely sampled real views, while also improving grasp coverage. In the future, we hope this work can be extended to improve grasp extraction from radiance fields constructed from a single input image, using, for example, diffusion models or generalizable radiance fields.

The First WARA Robotics Mobile Manipulation Challenge -- Lessons Learned

May 11, 2025

Abstract: The first WARA Robotics Mobile Manipulation Challenge, held in December 2024 at ABB Corporate Research in Västerås, Sweden, addressed the automation of task-intensive and repetitive manual labor in laboratory environments, specifically the transport and cleaning of glassware. Designed in collaboration with AstraZeneca, the challenge invited academic teams to develop autonomous robotic systems capable of navigating human-populated lab spaces and performing complex manipulation tasks, such as loading items into industrial dishwashers. This paper presents an overview of the challenge setup, its industrial motivation, and the four distinct approaches proposed by the participating teams. We summarize lessons learned from this edition and propose design improvements to enable a more effective second iteration in 2025. The initiative bridges an important gap in academia-industry collaboration within the domain of autonomous mobile manipulation systems by promoting the development and deployment of applied robotic solutions in real-world laboratory contexts.

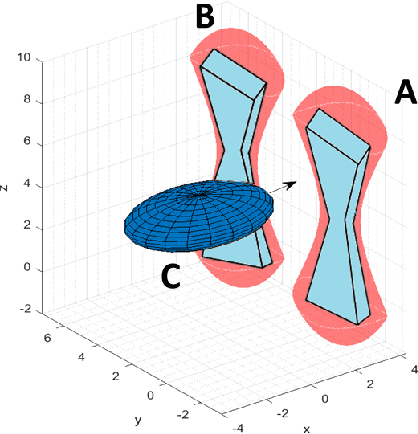

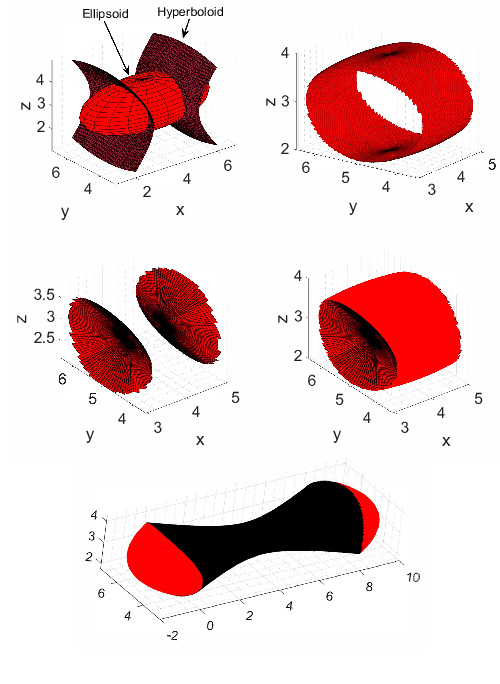

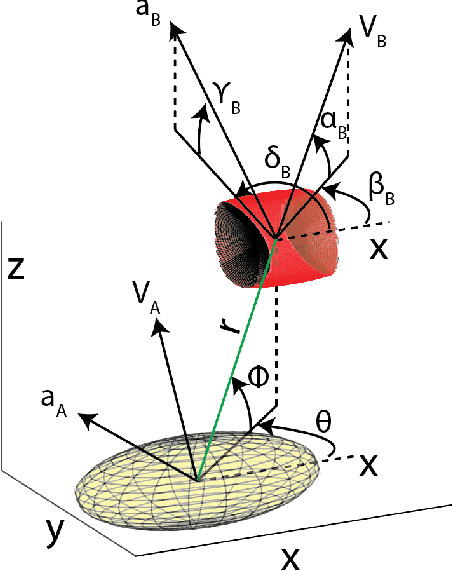

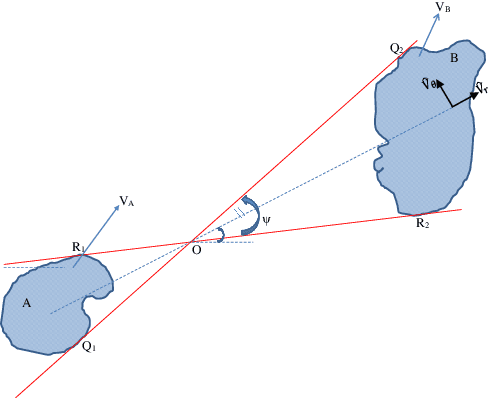

Collision Avoidance of 3-Dimensional Objects in Dynamic Environments

Mar 17, 2022

Abstract: Achieving collision avoidance between moving objects is an important objective when determining robot trajectories. In performing collision avoidance maneuvers, the relative shapes of the objects play an important role. The literature largely models the shapes of the objects as spheres, which can make the avoidance maneuvers very conservative, especially when the objects are elongated and/or non-convex. In this paper, we model the shapes of the objects using suitable combinations of ellipsoids and one-sheeted/two-sheeted hyperboloids, and employ a collision cone approach to achieve collision avoidance. We present a method to construct the 3-D collision cone, and present simulation results demonstrating the working of the collision avoidance laws.
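For context, the spherical baseline that the abstract describes as conservative can be sketched in a few lines. This is not the paper's ellipsoid/hyperboloid construction. It is a minimal illustration of a collision cone test for two spheres, assuming both objects keep a constant velocity. The function name `in_collision_cone` and its parameters are hypothetical.

```python
def in_collision_cone(rel_pos, rel_vel, combined_radius):
    """Sphere-sphere collision cone test (illustrative assumption, not the paper's method).

    rel_pos: position of object B minus position of object A (3-tuple).
    rel_vel: velocity of object B minus velocity of object A (3-tuple).
    combined_radius: sum of the two sphere radii.
    Returns True if, under constant velocities, the spheres will touch or overlap.
    """
    px, py, pz = rel_pos
    vx, vy, vz = rel_vel
    dist_sq = px * px + py * py + pz * pz
    speed_sq = vx * vx + vy * vy + vz * vz
    radius_sq = combined_radius * combined_radius

    if dist_sq <= radius_sq:
        return True   # already touching or overlapping
    if speed_sq == 0.0:
        return False  # no relative motion, separation never changes
    closing = px * vx + py * vy + pz * vz
    if closing >= 0.0:
        return False  # objects are moving apart (or tangentially)

    # Squared miss distance at closest approach: |p|^2 - (p.v)^2 / |v|^2
    miss_sq = dist_sq - closing * closing / speed_sq
    return miss_sq < radius_sq


if __name__ == "__main__":
    print(in_collision_cone((10.0, 0.0, 0.0), (-1.0, 0.0, 0.0), 1.0))  # head-on approach
    print(in_collision_cone((10.0, 0.0, 0.0), (-1.0, 0.5, 0.0), 1.0))  # passes with a wide miss
```

Enclosing an elongated object in a sphere inflates `combined_radius`, so this test flags many relative velocities that would in fact miss the true shape, which is the conservatism the abstract refers to.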

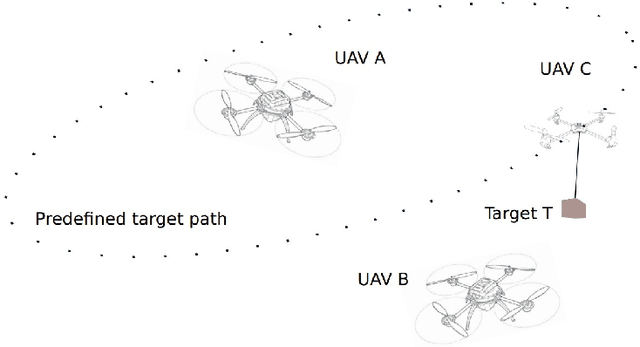

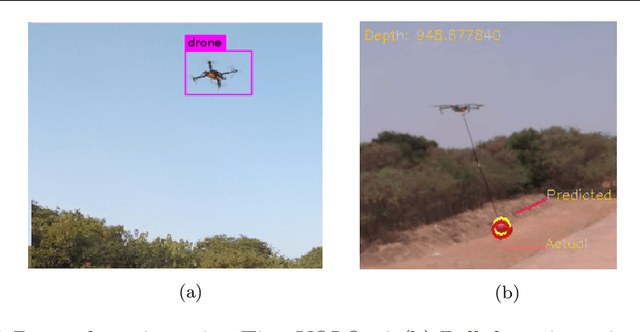

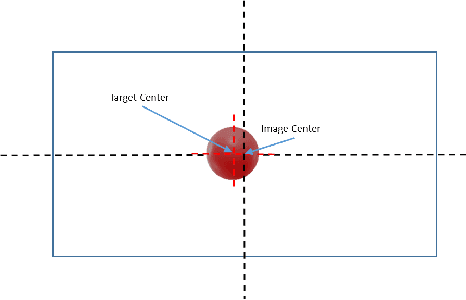

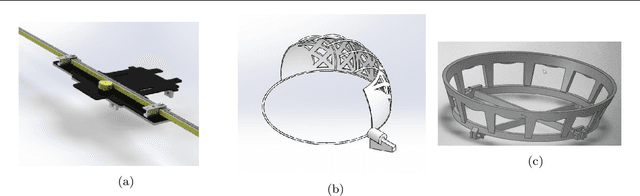

Collaborative Tracking and Capture of Aerial Object using UAVs

Oct 04, 2020

Abstract: This work details the problem of aerial target capture using multiple UAVs. The problem is motivated by Challenge 1 of the Mohammed Bin Zayed International Robotic Challenge 2020. The UAVs use visual feedback to autonomously detect the target, approach it, and capture it without disturbing the vehicle that carries it. Multi-UAV collaboration improves the efficiency of the system and increases the chance of capturing the ball robustly in a short span of time. In this paper, the proposed architecture is validated through simulation in a ROS-Gazebo environment and is further implemented on hardware.
