Zuzeng Lin

AnimeColor: Reference-based Animation Colorization with Diffusion Transformers

Jul 27, 2025

Abstract: Animation colorization plays a vital role in animation production, yet existing methods struggle to achieve color accuracy and temporal consistency. To address these challenges, we propose AnimeColor, a novel reference-based animation colorization framework leveraging Diffusion Transformers (DiT). Our approach integrates sketch sequences into a DiT-based video diffusion model, enabling sketch-controlled animation generation. We introduce two key components: a High-level Color Extractor (HCE) to capture semantic color information and a Low-level Color Guider (LCG) to extract fine-grained color details from reference images. These components work synergistically to guide the video diffusion process. Additionally, we employ a multi-stage training strategy to maximize the utilization of reference image color information. Extensive experiments demonstrate that AnimeColor outperforms existing methods in color accuracy, sketch alignment, temporal consistency, and visual quality. Our framework not only advances the state of the art in animation colorization but also provides a practical solution for industrial applications. The code will be made publicly available at https://github.com/IamCreateAI/AnimeColor.

Collaborative Neural Rendering using Anime Character Sheets

Jul 12, 2022

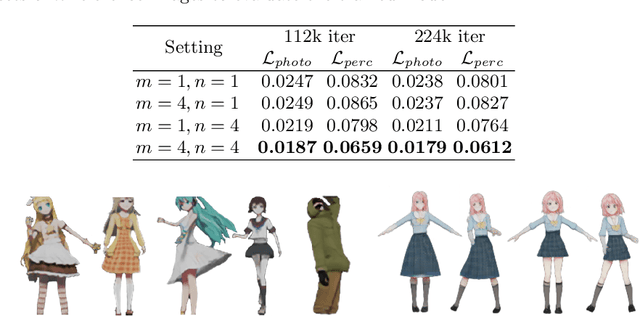

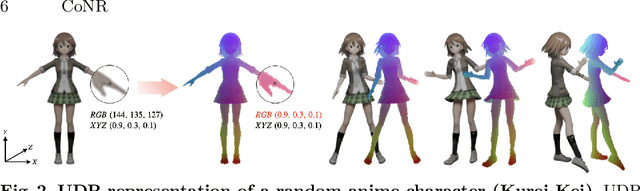

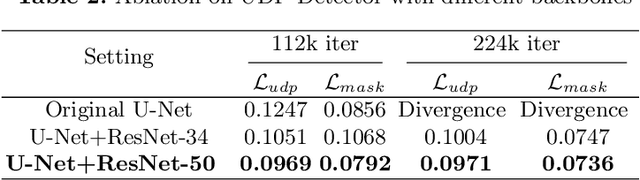

Abstract: Drawing images of characters in desired poses is an essential but laborious task in anime production. In this paper, we present the Collaborative Neural Rendering (CoNR) method to create new images from a few arbitrarily posed reference images available in character sheets. In general, the high diversity of body shapes of anime characters defies the employment of universal body models for real-world humans, like SMPL. To overcome this difficulty, CoNR uses a compact and easy-to-obtain landmark encoding to avoid creating a unified UV mapping in the pipeline. In addition, CoNR's performance can be significantly increased when multiple reference images are available, by using feature-space cross-view dense correspondence and warping in a specially designed neural network construct. Moreover, we collect a character sheet dataset containing over 700,000 hand-drawn and synthesized images of diverse poses to facilitate research in this area.
