Zhuoyu Li

Accelerating PDE-Constrained Optimization by the Derivative of Neural Operators

Jun 16, 2025

Abstract: PDE-Constrained Optimization (PDECO) problems can be accelerated significantly by employing gradient-based methods with surrogate models like neural operators compared to traditional numerical solvers. However, this approach faces two key challenges: (1) **Data inefficiency**: Lack of efficient data sampling and effective training for neural operators, particularly for optimization purposes. (2) **Instability**: High risk of optimization derailment due to inaccurate neural operator predictions and gradients. To address these challenges, we propose a novel framework: (1) **Optimization-oriented training**: We leverage data from full steps of traditional optimization algorithms and employ a specialized training method for neural operators. (2) **Enhanced derivative learning**: We introduce a *Virtual-Fourier* layer to enhance derivative learning within the neural operator, a crucial aspect for gradient-based optimization. (3) **Hybrid optimization**: We implement a hybrid approach that integrates neural operators with numerical solvers, providing robust regularization for the optimization process. Our extensive experimental results demonstrate the effectiveness of our model in accurately learning operators and their derivatives. Furthermore, our hybrid optimization approach exhibits robust convergence.
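The abstract does not spell out how the hybrid scheme combines the two components, so the following is only a toy sketch of one possible reading: take a step along a cheap but biased surrogate gradient, check it with the trusted solver, and fall back to the solver's own gradient when the surrogate step fails to reduce the objective. Every name here (`solver_objective`, `solver_gradient`, `surrogate_gradient`, `hybrid_descent`) is hypothetical, and the quadratic objective merely stands in for a PDE solve.

```python
# Toy sketch of a hybrid surrogate/solver descent loop.
# Assumption: this is NOT the paper's algorithm, only an illustration
# of guarding surrogate gradients with a trusted solver.

def solver_objective(x):
    """Stand-in for an expensive numerical solve: J(x) = sum((x_i - 1)^2)."""
    return sum((xi - 1.0) ** 2 for xi in x)

def solver_gradient(x):
    """Exact gradient of J, standing in for an adjoint solve."""
    return [2.0 * (xi - 1.0) for xi in x]

def surrogate_gradient(x):
    """Stand-in for a neural-operator gradient: the exact gradient plus a fixed bias."""
    return [2.0 * (xi - 1.0) + 0.3 for xi in x]

def hybrid_descent(x0, step=0.1, iters=100):
    """Descend with the surrogate gradient; fall back to the solver gradient
    whenever the surrogate step does not lower the solver objective."""
    x = list(x0)
    j = solver_objective(x)
    fallbacks = 0
    for _ in range(iters):
        g = surrogate_gradient(x)
        candidate = [xi - step * gi for xi, gi in zip(x, g)]
        j_new = solver_objective(candidate)
        if j_new >= j:
            # The surrogate step did not help, so use the trusted gradient.
            fallbacks += 1
            g = solver_gradient(x)
            candidate = [xi - step * gi for xi, gi in zip(x, g)]
            j_new = solver_objective(candidate)
        x, j = candidate, j_new
    return x, j, fallbacks

x_opt, j_opt, n_fallbacks = hybrid_descent([2.0, 3.0])
```

In this toy setting the biased surrogate alone would stall away from the minimizer at `x = 1`, while the solver check lets the loop keep converging. A real PDECO pipeline would replace both stand-ins with an actual neural operator and numerical solver.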

Hunyuan-TurboS: Advancing Large Language Models through Mamba-Transformer Synergy and Adaptive Chain-of-Thought

May 21, 2025

Abstract: As Large Language Models (LLMs) rapidly advance, we introduce Hunyuan-TurboS, a novel large hybrid Transformer-Mamba Mixture of Experts (MoE) model. It synergistically combines Mamba's long-sequence processing efficiency with Transformer's superior contextual understanding. Hunyuan-TurboS features an adaptive long-short chain-of-thought (CoT) mechanism, dynamically switching between rapid responses for simple queries and deep "thinking" modes for complex problems, optimizing computational resources. Architecturally, this 56B activated (560B total) parameter model employs 128 layers (Mamba2, Attention, FFN) with an innovative AMF/MF block pattern. Faster Mamba2 ensures linear complexity, Grouped-Query Attention minimizes KV cache, and FFNs use an MoE structure. Pre-trained on 16T high-quality tokens, it supports a 256K context length and is the first industry-deployed large-scale Mamba model. Our comprehensive post-training strategy enhances capabilities via Supervised Fine-Tuning (3M instructions), a novel Adaptive Long-short CoT Fusion method, Multi-round Deliberation Learning for iterative improvement, and a two-stage Large-scale Reinforcement Learning process targeting STEM and general instruction-following. Evaluations show strong performance: overall top 7 rank on LMSYS Chatbot Arena with a score of 1356, outperforming leading models like Gemini-2.0-Flash-001 (1352) and o4-mini-2025-04-16 (1345). TurboS also achieves an average of 77.9% across 23 automated benchmarks. Hunyuan-TurboS balances high performance and efficiency, offering substantial capabilities at lower inference costs than many reasoning models, establishing a new paradigm for efficient large-scale pre-trained models.

PAT-CNN: Automatic Segmentation and Quantification of Pericardial Adipose Tissue from T2-Weighted Cardiac Magnetic Resonance Images

Nov 09, 2022

Abstract: Background: Increased pericardial adipose tissue (PAT) is associated with many types of cardiovascular disease (CVD). Although cardiac magnetic resonance images (CMRI) are often acquired in patients with CVD, there are currently no tools to automatically identify and quantify PAT from CMRI. The aim of this study was to create a neural network to segment PAT from T2-weighted CMRI and explore the correlations between PAT volumes (PATV) and CVD outcomes and mortality. Methods: We trained and tested a deep learning model, PAT-CNN, to segment PAT on T2-weighted cardiac MR images. Using the segmentations from PAT-CNN, we automatically calculated PATV on images from 391 patients. We analysed correlations between PATV and CVD diagnosis and 1-year mortality post-imaging. Results: PAT-CNN was able to accurately segment PAT with Dice score/Hausdorff distance of 0.74 ± 0.03/27.1 ± 10.9 mm, similar to the values obtained when comparing the segmentations of two independent human observers (0.76 ± 0.06/21.2 ± 10.3 mm). Regression models showed that, independently of sex and body-mass index, PATV is significantly positively correlated with a diagnosis of CVD and with 1-year all-cause mortality (p-value < 0.01). Conclusions: PAT-CNN can segment PAT from T2-weighted CMR images automatically and accurately. Increased PATV as measured automatically from CMRI is significantly associated with the presence of CVD and can independently predict 1-year mortality.

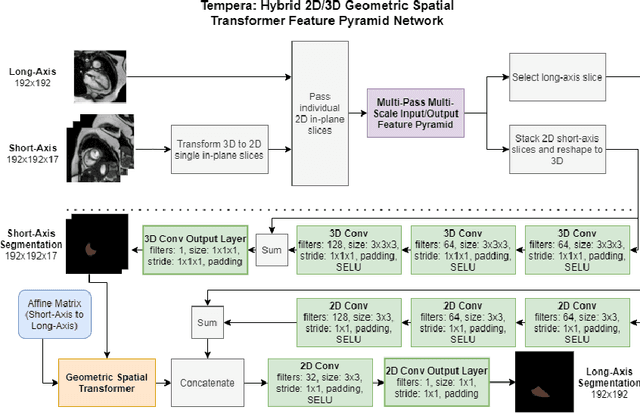

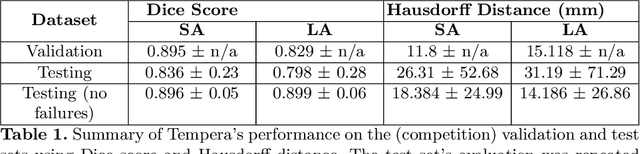

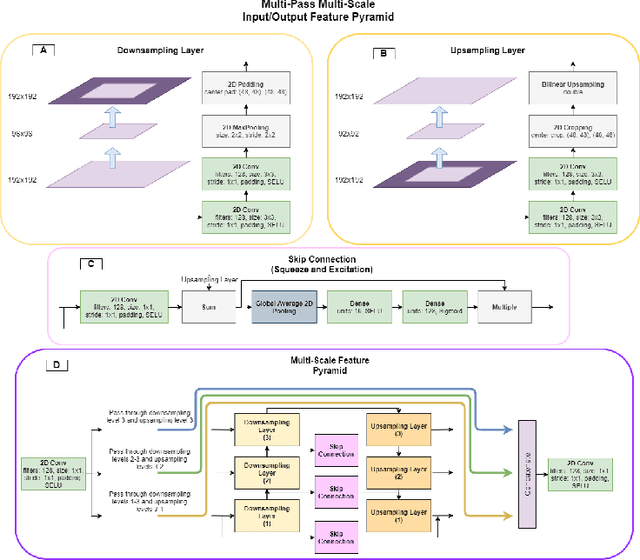

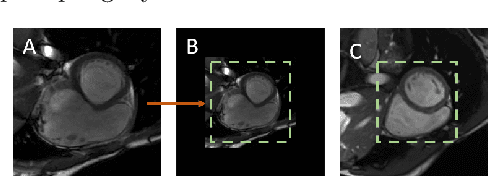

Tempera: Spatial Transformer Feature Pyramid Network for Cardiac MRI Segmentation

Mar 01, 2022

Abstract: Assessing the structure and function of the right ventricle (RV) is important in the diagnosis of several cardiac pathologies. However, it remains more challenging to segment the RV than the left ventricle (LV). In this paper, we focus on segmenting the RV in both short-axis (SA) and long-axis (LA) cardiac MR images simultaneously. For this task, we propose a new multi-input/output architecture, hybrid 2D/3D geometric spatial TransformEr Multi-Pass fEature pyRAmid (Tempera). Our feature pyramid extends current designs by allowing not only a multi-scale feature output but multi-scale SA and LA input images as well. Tempera transfers learned features between SA and LA images via layer weight sharing and incorporates a geometric target transformer to map the predicted SA segmentation to LA space. Our model achieves average Dice scores of 0.836 and 0.798 for the SA and LA, respectively, with Hausdorff distances of 26.31 mm and 31.19 mm. This opens up the potential for the incorporation of RV segmentation models into clinical workflows.
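For readers unfamiliar with the two segmentation metrics reported in these abstracts, here is a minimal sketch of how a Dice score and a symmetric Hausdorff distance can be computed for a pair of 2D binary masks. It assumes masks given as nested lists of 0/1 and an isotropic pixel `spacing` in mm (both hypothetical choices), and it measures the Hausdorff distance over all foreground pixels. The papers may instead use contour points, 3D volumes, or a library implementation, so this is illustrative only.

```python
import math

def foreground(mask):
    """Return the set of (row, col) coordinates where the mask is non-zero."""
    return {(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v}

def dice_score(pred, truth):
    """Dice = 2 * |A intersect B| / (|A| + |B|) for two binary masks."""
    a, b = foreground(pred), foreground(truth)
    if not a and not b:
        return 1.0  # convention: two empty masks agree perfectly
    return 2.0 * len(a & b) / (len(a) + len(b))

def hausdorff_distance(pred, truth, spacing=1.0):
    """Symmetric Hausdorff distance between foreground pixel sets,
    scaled by an assumed isotropic pixel spacing (e.g. in mm)."""
    a, b = foreground(pred), foreground(truth)
    if not a or not b:
        raise ValueError("Hausdorff distance is undefined for an empty mask")

    def directed(p, q):
        # Largest distance from any point in p to its nearest point in q.
        return max(min(math.dist(u, v) for v in q) for u in p)

    return spacing * max(directed(a, b), directed(b, a))

# Tiny example: the prediction has one extra pixel compared with the truth.
pred = [[0, 1, 1],
        [0, 1, 1],
        [0, 0, 0]]
truth = [[0, 1, 1],
         [0, 1, 0],
         [0, 0, 0]]
print(dice_score(pred, truth))          # 6/7, about 0.857
print(hausdorff_distance(pred, truth))  # 1.0
```

Dice rewards overall overlap, while the Hausdorff distance is driven by the single worst-placed pixel, which is why the two numbers are usually reported together.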
