Zhaofeng Si

Tamper-evident Image using JPEG Fixed Points

Apr 24, 2025

Abstract: An intriguing phenomenon in JPEG compression has been observed for two decades: repeated JPEG compression and decompression eventually yields a stable image that no longer changes, that is, a fixed point. In this work, we prove the existence of fixed points in the essential JPEG procedures. We analyze the JPEG compression and decompression processes and show that these fixed points can be reached within a few iterations. The fixed points are diverse and preserve the image's visual quality with minimal distortion. We use this result to develop a method that creates a tamper-evident image from the original authentic image; tampering operations are exposed as deviations from the fixed-point image.
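The fixed-point iteration described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a single 8x8 grayscale block, one uniform quantization step `q` in place of a real JPEG quantization table, and it omits chroma handling and entropy coding. The names `jpeg_round_trip` and `find_fixed_point` are hypothetical.

```python
import math

N = 8
# Orthonormal DCT-II basis matrix, C[u][x].
C = [[(math.sqrt(1 / N) if u == 0 else math.sqrt(2 / N))
      * math.cos((2 * x + 1) * u * math.pi / (2 * N))
      for x in range(N)] for u in range(N)]


def transpose(m):
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(N)) for j in range(N)]
            for i in range(N)]


CT = transpose(C)


def round_half_up(v):
    # Deterministic rounding (Python's round() uses banker's rounding).
    return math.floor(v + 0.5)


def jpeg_round_trip(block, q):
    """One simplified compress/decompress pass (assumption: uniform step q)."""
    shifted = [[p - 128 for p in row] for row in block]       # level shift
    coeffs = matmul(matmul(C, shifted), CT)                   # forward 2D DCT
    dequant = [[round_half_up(c / q) * q for c in row] for row in coeffs]
    pixels = matmul(matmul(CT, dequant), C)                   # inverse 2D DCT
    # Round to integers and clip to the valid 8-bit range.
    return [[min(255, max(0, round_half_up(p + 128))) for p in row]
            for row in pixels]


def find_fixed_point(block, q, max_iters=100):
    """Repeat the round trip until the block stops changing.

    Returns (block, passes_applied, converged).
    """
    current = block
    for i in range(max_iters):
        nxt = jpeg_round_trip(current, q)
        if nxt == current:
            return current, i, True
        current = nxt
    return current, max_iters, False


# Synthetic test block (hypothetical data, not taken from the paper).
block = [[(17 * r + 31 * c) % 256 for c in range(N)] for r in range(N)]
fixed, passes, converged = find_fixed_point(block, q=16)
print(passes, converged)
```

Under these assumptions, a block that has reached a fixed point satisfies `jpeg_round_trip(fixed, q) == fixed`, so any later edit that makes this equality fail is detectable, which is the tamper-evidence idea the abstract describes.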

Meta-Learning with Heterogeneous Tasks

Oct 24, 2024

Abstract: Meta-learning is a general approach for equipping machine learning models to handle few-shot scenarios across many tasks. Most existing meta-learning methods assume that all tasks are equally important. However, real-world applications often present heterogeneous tasks that vary in difficulty, contain noisy training samples, or differ markedly from most other tasks. In this paper, we introduce Heterogeneous Tasks Robust Meta-learning (HeTRoM), a novel meta-learning method that manages such heterogeneous tasks through rank-based task-level learning objectives. HeTRoM handles heterogeneous tasks effectively and prevents easy tasks from overwhelming the meta-learner. The approach admits an efficient iterative optimization algorithm based on bi-level optimization, which is further improved by integrating statistical guidance. Our experimental results demonstrate that the method is flexible, allowing users to adapt it to diverse task settings, and that it improves the meta-learner's overall performance.
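The abstract does not spell out the rank-based objective, so the following is only an illustrative sketch of one way a rank-based task-level loss could be formed: sort the per-task losses, set aside a chosen number of the largest (possibly noisy or outlier tasks) and the smallest (easy tasks), and average the rest. The function name `ranked_range_loss` and its parameters are hypothetical and are not claimed to match HeTRoM's actual formulation.

```python
def ranked_range_loss(task_losses, drop_hardest, drop_easiest):
    """Average the task losses that remain after trimming by rank.

    Assumption (not from the paper): trimming a fixed number of tasks
    from each end of the sorted loss list stands in for a rank-based
    task-level objective.
    """
    if drop_hardest < 0 or drop_easiest < 0:
        raise ValueError("drop counts must be non-negative")
    ordered = sorted(task_losses, reverse=True)  # largest loss first
    kept = ordered[drop_hardest:len(ordered) - drop_easiest]
    if not kept:
        raise ValueError("no tasks left after trimming")
    return sum(kept) / len(kept)


# Hypothetical per-task losses: one outlier (5.0) and two easy tasks.
losses = [5.0, 0.1, 1.0, 2.0, 0.2]
print(ranked_range_loss(losses, drop_hardest=1, drop_easiest=2))
```

In this sketch the two drop counts are the knobs a user would tune to a given task setting; the easy tasks contribute nothing to the averaged loss, so they cannot dominate the update.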

Automatic Block-wise Pruning with Auxiliary Gating Structures for Deep Convolutional Neural Networks

May 07, 2022

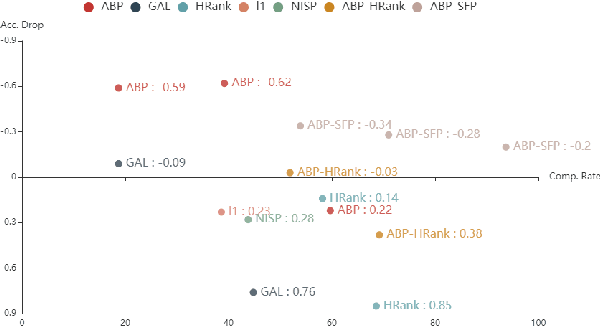

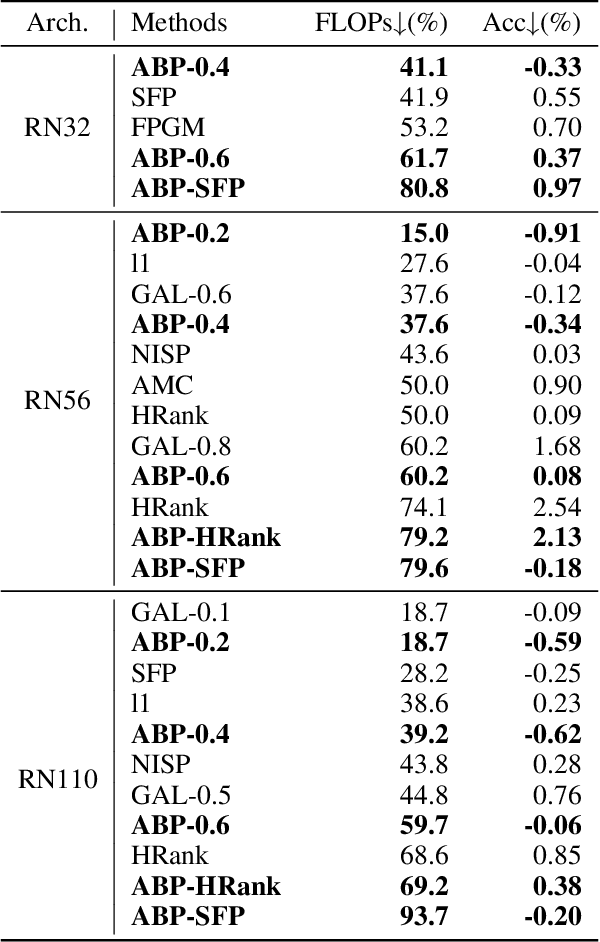

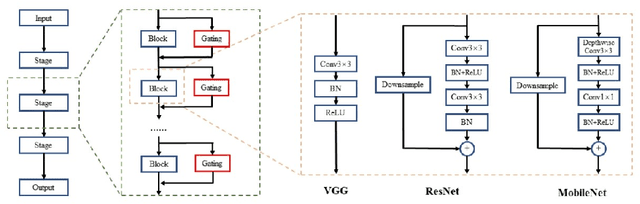

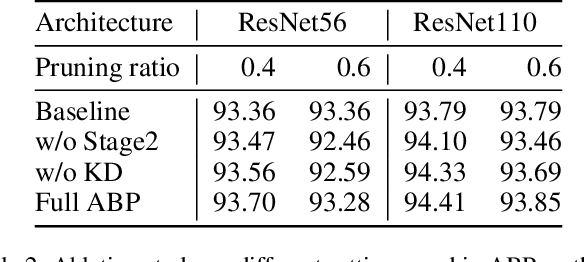

Abstract: Convolutional neural networks are prevalent in deep learning tasks. However, their heavy computation and memory costs make them difficult to run on mobile devices. Network pruning is an effective model compression method for addressing this problem. This paper presents a novel structured network pruning method with auxiliary gating structures that assign importance marks to blocks in the backbone network, which serve as the pruning criterion. Block-wise pruning is then realized by the proposed voting strategy, in contrast to prevailing methods that prune a model at a small granularity, such as channel-wise. We further develop a three-stage training schedule for the proposed architecture that incorporates knowledge distillation for better performance. Our experiments demonstrate that the method achieves state-of-the-art compression performance on classification tasks. In addition, our approach can be combined with other pruning methods by providing pretrained models, achieving better performance than the unpruned model with over 93% of FLOPs removed.
