Yves Le Traon

SnT, CSC

On The Empirical Effectiveness of Unrealistic Adversarial Hardening Against Realistic Adversarial Attacks

Feb 07, 2022

Abstract: While the literature on security attacks and defense of Machine Learning (ML) systems mostly focuses on unrealistic adversarial examples, recent research has raised concern about the under-explored field of realistic adversarial attacks and their implications on the robustness of real-world systems. Our paper paves the way for a better understanding of adversarial robustness against realistic attacks and makes two major contributions. First, we conduct a study on three real-world use cases (text classification, botnet detection, malware detection) and five datasets in order to evaluate whether unrealistic adversarial examples can be used to protect models against realistic examples. Our results reveal discrepancies across the use cases, where unrealistic examples can either be as effective as the realistic ones or may offer only limited improvement. Second, to explain these results, we analyze the latent representation of the adversarial examples generated with realistic and unrealistic attacks. We shed light on the patterns that discriminate which unrealistic examples can be used for effective hardening. We release our code, datasets and models to support future research in exploring how to reduce the gap between unrealistic and realistic adversarial attacks.

Robust Active Learning: Sample-Efficient Training of Robust Deep Learning Models

Dec 05, 2021

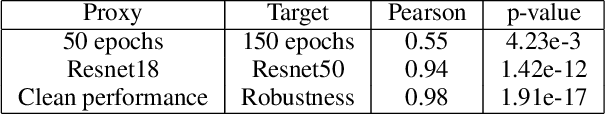

Abstract: Active learning is an established technique to reduce the labeling cost to build high-quality machine learning models. A core component of active learning is the acquisition function that determines which data should be selected to annotate. State-of-the-art acquisition functions (and, more broadly, active learning techniques) have been designed to maximize the clean performance (e.g. accuracy) and have disregarded robustness, an important quality property that has received increasing attention. Active learning, therefore, produces models that are accurate but not robust. In this paper, we propose *robust active learning*, an active learning process that integrates adversarial training, the most established method to produce robust models. Via an empirical study on 11 acquisition functions, 4 datasets, 6 DNN architectures, and 15105 trained DNNs, we show that robust active learning can produce models with robustness (accuracy on adversarial examples) ranging from 2.35% to 63.85%, whereas standard active learning systematically achieves negligible robustness (less than 0.20%). Our study also reveals, however, that the acquisition functions that perform well on accuracy are worse than random sampling when it comes to robustness. We, therefore, examine the reasons behind this and devise a new acquisition function that targets both clean performance and robustness. Our acquisition function, named density-based robust sampling with entropy (DRE), outperforms the other acquisition functions (including random) in terms of robustness by up to 24.40% (3.84% more than random sampling in particular), while remaining competitive on accuracy. Additionally, we prove that DRE is applicable as a test selection metric for model retraining and stands out from all compared functions by up to 8.21% robustness.

A Unified Framework for Adversarial Attack and Defense in Constrained Feature Space

Dec 02, 2021

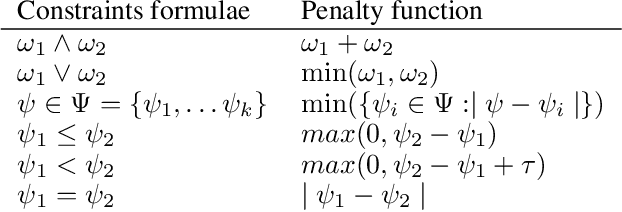

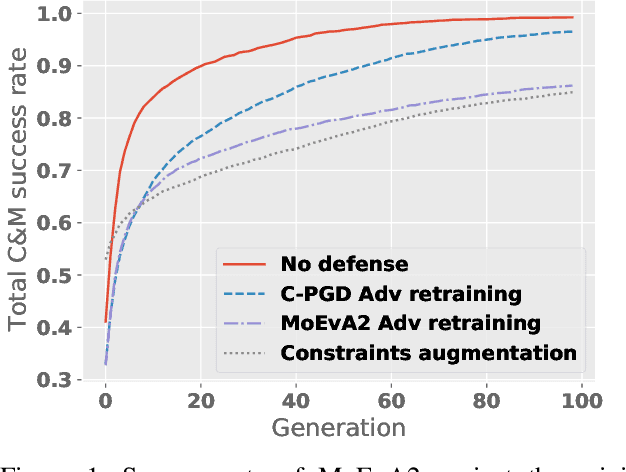

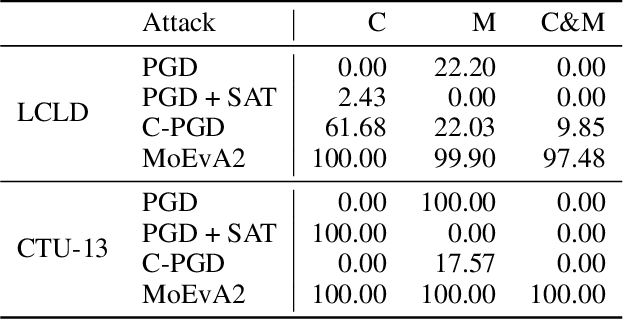

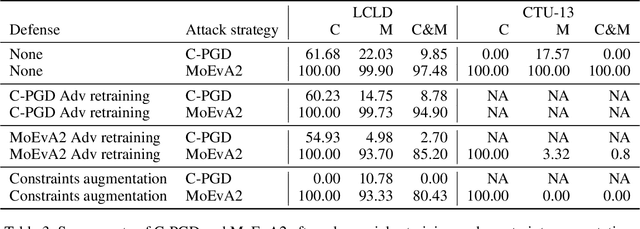

Abstract: The generation of feasible adversarial examples is necessary for properly assessing models that work on constrained feature space. However, it remains a challenging task to enforce constraints into attacks that were designed for computer vision. We propose a unified framework to generate feasible adversarial examples that satisfy given domain constraints. Our framework supports the use cases reported in the literature and can handle both linear and non-linear constraints. We instantiate our framework into two algorithms: a gradient-based attack that introduces constraints in the loss function to maximize, and a multi-objective search algorithm that aims for misclassification, perturbation minimization, and constraint satisfaction. We show that our approach is effective on two datasets from different domains, with a success rate of up to 100%, where state-of-the-art attacks fail to generate a single feasible example. In addition to adversarial retraining, we propose to introduce engineered non-convex constraints to improve model adversarial robustness. We demonstrate that this new defense is as effective as adversarial retraining. Our framework forms the starting point for research on constrained adversarial attacks and provides relevant baselines and datasets that future research can exploit.
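
The idea of introducing constraints into the loss that a gradient-based attack maximizes can be illustrated with a penalty term. The following is a minimal sketch under stated assumptions, not the framework's actual implementation: the feature layout, the two constraints, the toy loss, and the names `constraint_violation` and `penalized_objective` are all hypothetical.

```python
def constraint_violation(x, constraints):
    """Total violation, where each constraint g is satisfied when g(x) <= 0."""
    return sum(max(0.0, g(x)) for g in constraints)


def penalized_objective(model_loss, x, constraints, weight=1.0):
    """Objective for the attacker to maximize: model loss minus a violation penalty."""
    return model_loss(x) - weight * constraint_violation(x, constraints)


# Hypothetical domain constraints over features x = [total, part_a, part_b].
constraints = [
    lambda x: x[1] + x[2] - x[0],   # linear: part_a + part_b <= total
    lambda x: x[1] * x[2] - 10.0,   # non-linear: part_a * part_b <= 10
]

# Hypothetical stand-in for the classifier's loss on x.
toy_loss = lambda x: 0.5 * x[1]

feasible = [10.0, 3.0, 2.0]
infeasible = [4.0, 3.0, 2.0]

print(penalized_objective(toy_loss, feasible, constraints, weight=2.0))
print(penalized_objective(toy_loss, infeasible, constraints, weight=2.0))
```

With this shape, an infeasible candidate scores lower than a feasible one with the same model loss, so maximizing the objective steers the search toward examples that respect the domain constraints.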

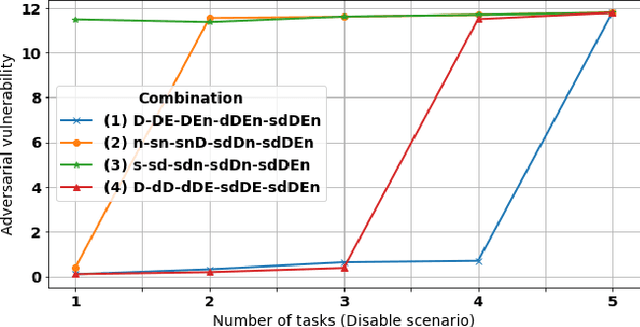

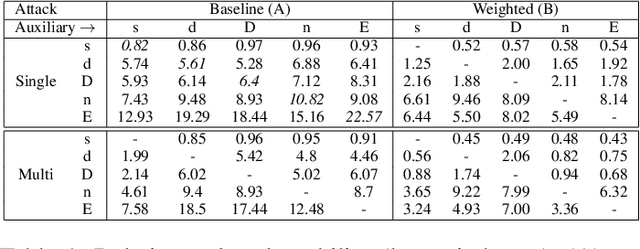

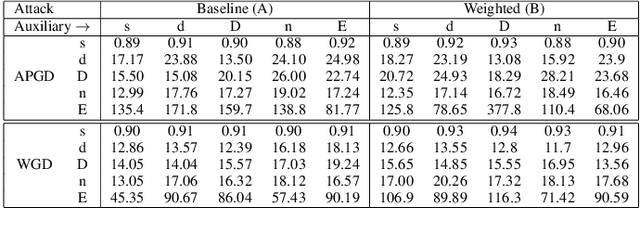

Adversarial Robustness in Multi-Task Learning: Promises and Illusions

Oct 26, 2021

Abstract: Vulnerability to adversarial attacks is a well-known weakness of deep neural networks. While most studies focus on single-task neural networks with computer vision datasets, very little research has considered complex multi-task models that are common in real applications. In this paper, we evaluate the design choices that impact the robustness of multi-task deep learning networks. We provide evidence that blindly adding auxiliary tasks or weighting the tasks provides a false sense of robustness. Thereby, we tone down the claim made by previous research and study the different factors which may affect robustness. In particular, we show that the choice of the tasks to incorporate in the loss function is an important factor that can be leveraged to yield more robust models.

MUTEN: Boosting Gradient-Based Adversarial Attacks via Mutant-Based Ensembles

Sep 27, 2021

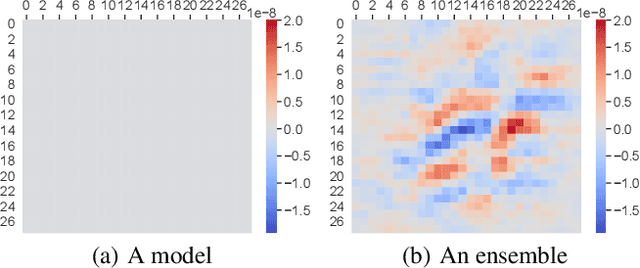

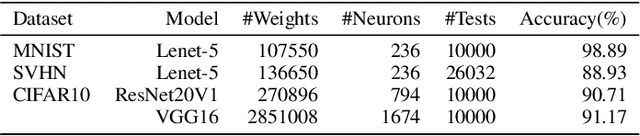

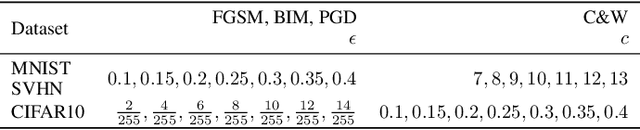

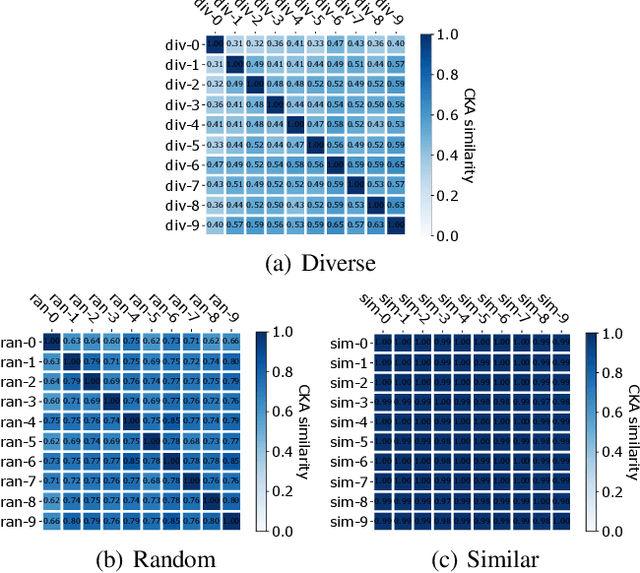

Abstract: Deep Neural Networks (DNNs) are vulnerable to adversarial examples, which pose serious threats to security-critical applications. This has motivated much research on providing mechanisms to make models more robust against adversarial attacks. Unfortunately, most of these defenses, such as gradient masking, are easily overcome through different attack means. In this paper, we propose MUTEN, a low-cost method to improve the success rate of well-known attacks against gradient-masking models. Our idea is to apply the attacks on an ensemble model which is built by mutating the original model elements after training. Because we found that mutant diversity is a key factor in improving success rate, we design a greedy algorithm for generating diverse mutants efficiently. Experimental results on MNIST, SVHN, and CIFAR10 show that MUTEN can increase the success rate of four attacks by up to 0.45.
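
The general shape of attacking an ensemble of mutants can be sketched on a toy linear model. This is an illustration under loud assumptions: Gaussian weight noise stands in for the paper's mutation operators, the greedy diversity-driven mutant generation is not reproduced, and every name below (`make_mutants`, `ensemble_gradient`, `fgsm_step`) is hypothetical.

```python
import random


def make_mutants(weights, n_mutants, scale, seed=0):
    """Create mutants by adding small Gaussian noise to trained weights (assumed operator)."""
    rng = random.Random(seed)
    return [[w + rng.gauss(0.0, scale) for w in weights] for _ in range(n_mutants)]


def ensemble_score(mutants, x):
    """Average the linear score w . x over all mutants."""
    scores = [sum(w * xi for w, xi in zip(m, x)) for m in mutants]
    return sum(scores) / len(scores)


def ensemble_gradient(mutants):
    """Gradient of the averaged linear score with respect to x: the mean weight vector."""
    n = len(mutants)
    return [sum(m[j] for m in mutants) / n for j in range(len(mutants[0]))]


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_step(x, grad, eps):
    """One FGSM-style step that lowers the score by moving against the gradient sign."""
    return [xi - eps * sign(g) for xi, g in zip(x, grad)]


trained_weights = [1.0, -2.0, 0.5]  # hypothetical trained model
mutants = make_mutants(trained_weights, n_mutants=5, scale=0.05)
x = [0.2, 0.4, 0.6]
x_adv = fgsm_step(x, ensemble_gradient(mutants), eps=0.1)
print(ensemble_score(mutants, x), ensemble_score(mutants, x_adv))
```

The attack step uses the gradient of the averaged mutant output rather than that of the single original model, which is the part of the idea this sketch is meant to show.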

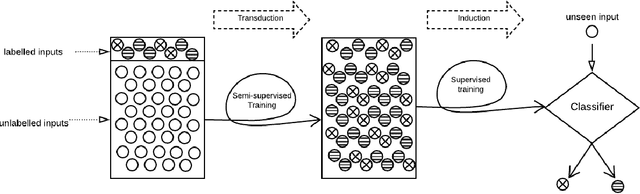

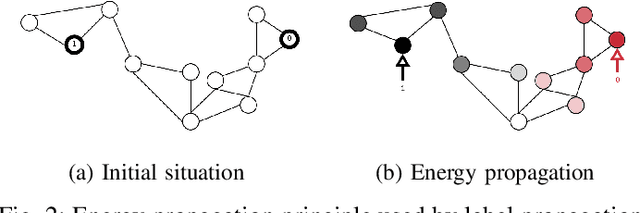

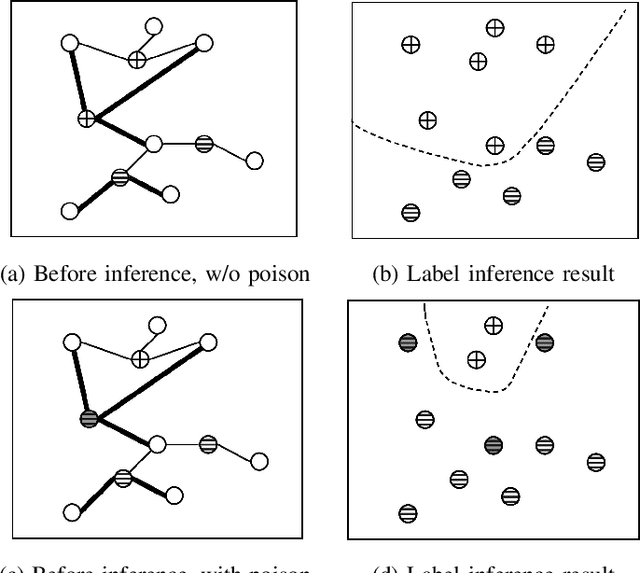

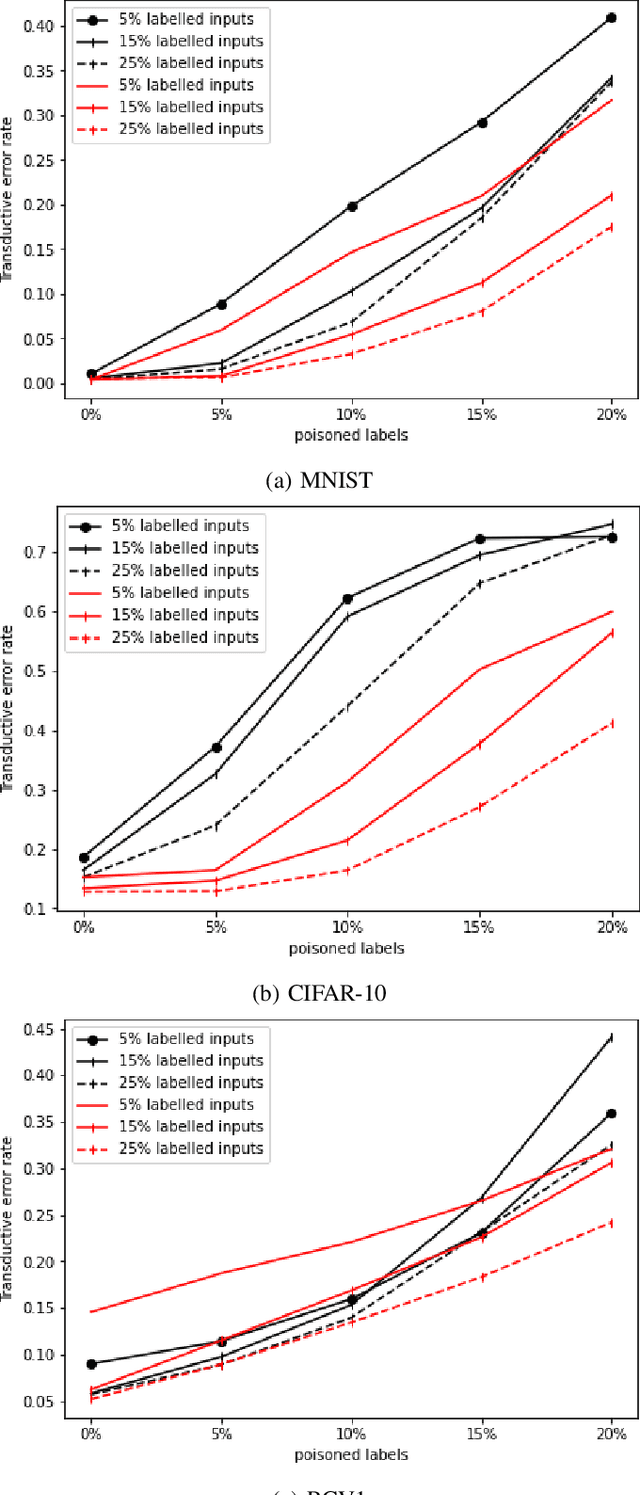

Effective and Efficient Data Poisoning in Semi-Supervised Learning

Dec 14, 2020

Abstract: Semi-Supervised Learning (SSL) aims to maximize the benefits of learning from a limited amount of labelled data together with a vast amount of unlabelled data. Because they rely on the known labels to infer the unknown labels, SSL algorithms are sensitive to data quality. This makes it important to study the potential threats related to the labelled data, more specifically, label poisoning. However, data poisoning of SSL remains largely understudied. To fill this gap, we propose a novel data poisoning method which is both effective and efficient. Our method exploits mathematical properties of SSL to approximate the influence of labelled inputs on unlabelled ones, which allows the identification of the inputs that, if poisoned, would produce the highest number of incorrectly inferred labels. We evaluate our approach on three classification problems under 12 different experimental settings each. Compared to the state of the art, our influence-based attack produces an average increase in error rate that is 3 times higher, while being faster by multiple orders of magnitude. Moreover, our method can inform engineers of inputs that deserve investigation (relabelling them) before training the learning model. We show that relabelling one-third of the poisoned inputs (selected based on their influence) reduces the poisoning effect by 50%.

Efficient and Transferable Adversarial Examples from Bayesian Neural Networks

Nov 10, 2020

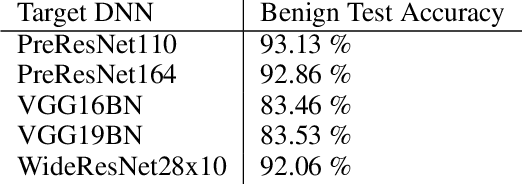

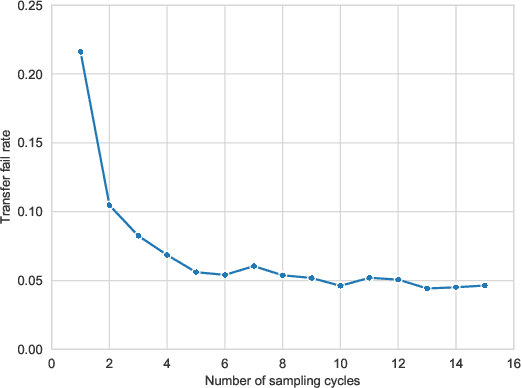

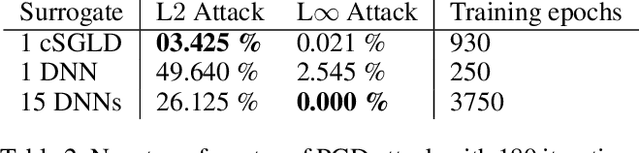

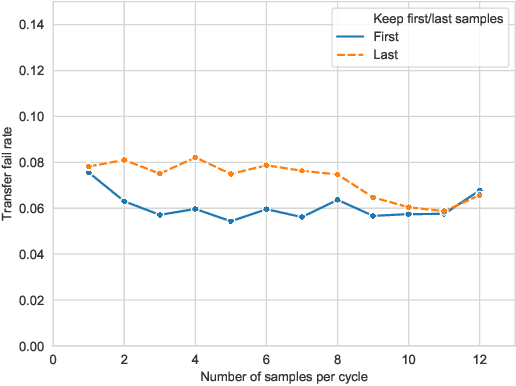

Abstract: Deep neural networks are vulnerable to evasion attacks, i.e., carefully crafted examples designed to fool a model at test time. Attacks that successfully evade an ensemble of models can transfer to other independently trained models, which proves useful in black-box settings. Unfortunately, these methods involve heavy computation costs to train the models forming the ensemble. To overcome this, we propose a new method to generate transferable adversarial examples efficiently. Inspired by Bayesian deep learning, our method builds such ensembles by sampling from the posterior distribution of neural network weights during a single training process. Experiments on CIFAR-10 show that our approach improves the transfer rates significantly at equal or even lower computation costs. Intra-architecture transfer rate is increased by 23% compared to classical ensemble-based attacks, while requiring 4 times fewer training epochs. In the inter-architecture case, we show that we can combine our method with ensemble-based attacks to increase their transfer rate by up to 15% with constant training computational cost.

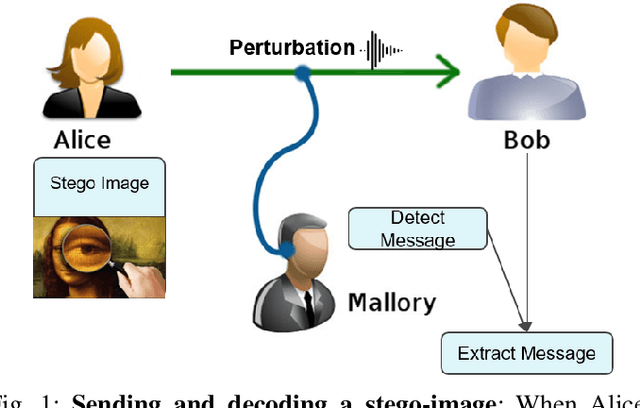

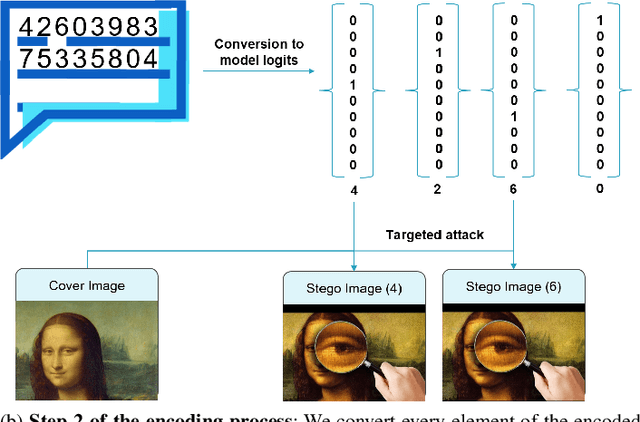

Adversarial Embedding: A robust and elusive Steganography and Watermarking technique

Nov 14, 2019

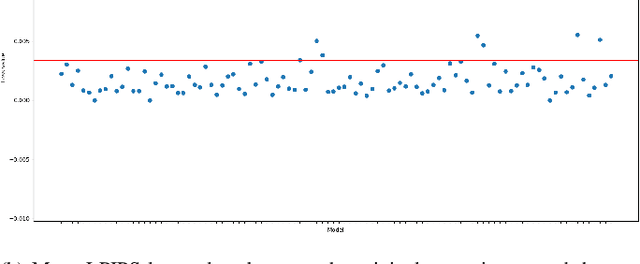

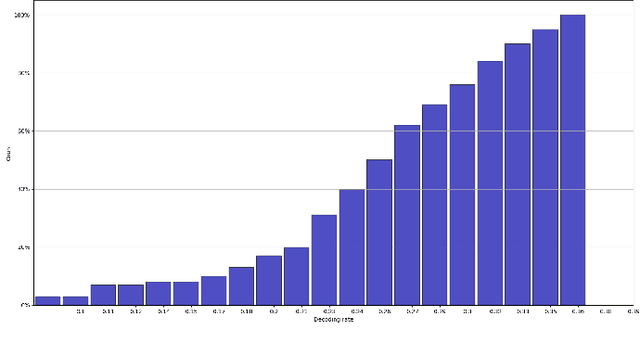

Abstract: We propose adversarial embedding, a new steganography and watermarking technique that embeds secret information within images. The key idea of our method is to use deep neural networks for image classification and adversarial attacks to embed secret information within images. Thus, we use the attacks to embed an encoding of the message within images and the related deep neural network outputs to extract it. The key properties of adversarial attacks (invisible perturbations, nontransferability, resilience to tampering) offer guarantees regarding the confidentiality and the integrity of the hidden messages. We empirically evaluate adversarial embedding using more than 100 models and 1,000 messages. Our results confirm that our embedding passes unnoticed by both humans and steganalysis methods, while at the same time it impedes illicit retrieval of the message (less than 13% recovery rate when the interceptor has some knowledge about our model) and is resilient to soft and (to some extent) aggressive image tampering (up to 100% recovery rate under JPEG compression). We further develop our method by proposing a new type of adversarial attack which improves the embedding density (amount of hidden information) of our method to up to 10 bits per pixel.

Test Selection for Deep Learning Systems

Apr 30, 2019

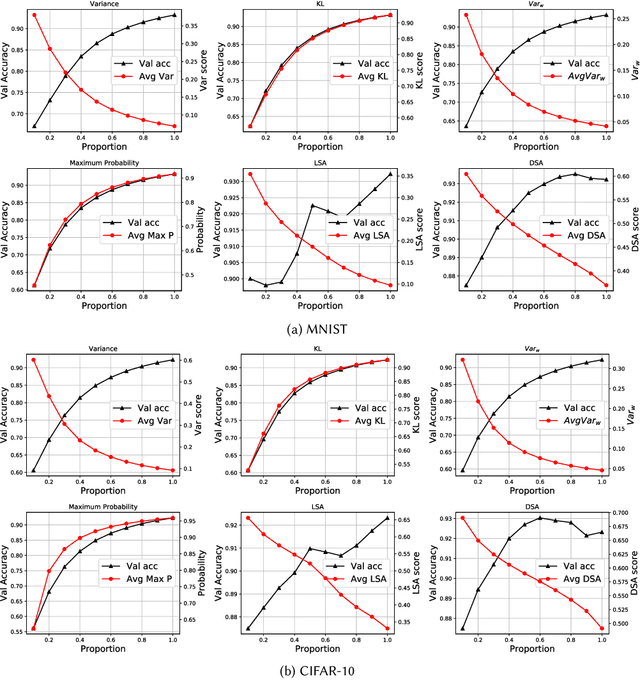

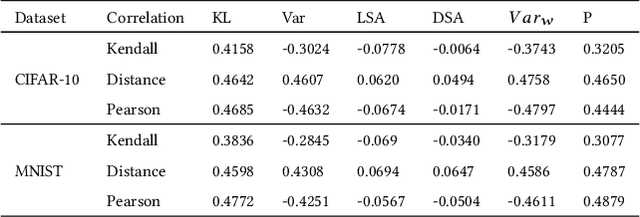

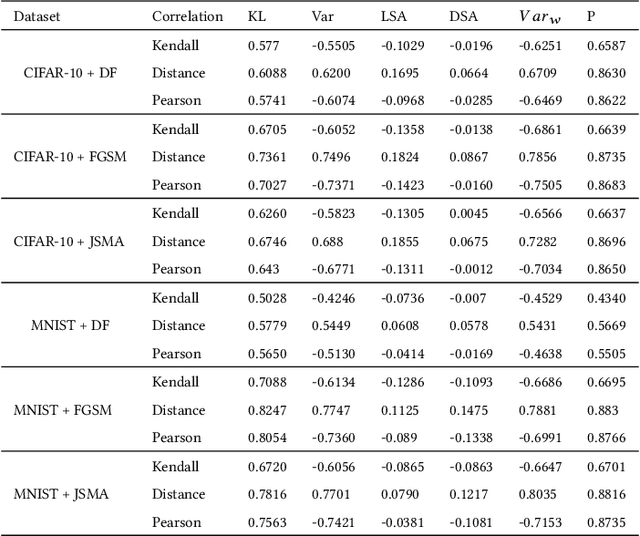

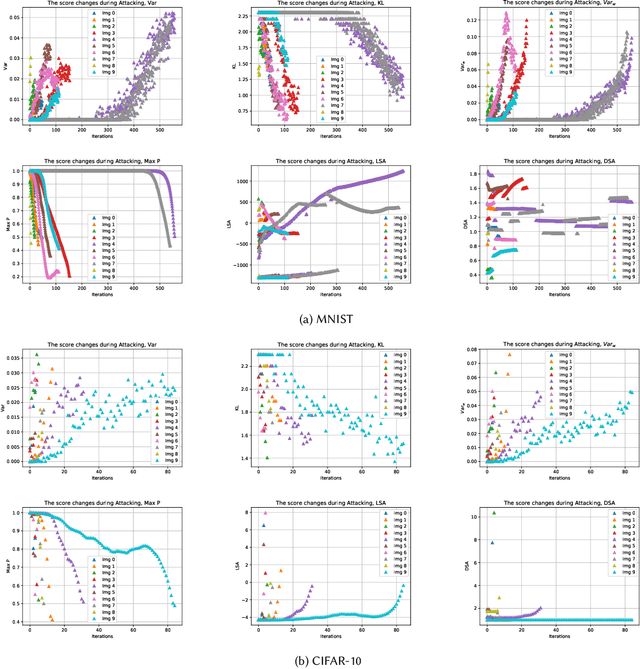

Abstract: Testing of deep learning models is challenging due to the excessive number and complexity of computations involved. As a result, test data selection is performed manually and in an ad hoc way. This raises the question of how we can automatically select candidate test data to test deep learning models. Recent research has focused on adapting test selection metrics from code-based software testing (such as coverage) to deep learning. However, deep learning models have different attributes from code, such as spread of computations across the entire network reflecting training data properties, balance of neuron weights, and redundancy (use of many more neurons than needed). Such differences make code-based metrics inappropriate to select data that can challenge the models (can trigger misclassification). We thus propose a set of test selection metrics based on the notion of model uncertainty (model confidence on specific inputs). Intuitively, the more uncertain we are about a candidate sample, the more likely it is that this sample triggers a misclassification. Similarly, the samples for which we are the most uncertain are the most informative and should be used to improve the model by retraining. We evaluate these metrics on two widely-used image classification problems involving real and artificial (adversarial) data. We show that uncertainty-based metrics have a strong ability to select data that are misclassified and lead to major improvement in classification accuracy during retraining: up to 80% more gain than random selection and other state-of-the-art metrics on one dataset and up to 29% on the other.
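
The intuition that more uncertain samples are better test candidates can be sketched by ranking inputs by the entropy of the model's predicted class probabilities. Predictive entropy is used here as one common uncertainty proxy and is an assumption; the abstract does not say which uncertainty metrics the paper defines. The softmax outputs and function names below are hypothetical.

```python
import math


def predictive_entropy(probs):
    """Shannon entropy (in nats) of one vector of class probabilities."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def select_most_uncertain(prob_rows, budget):
    """Return the indices of the `budget` samples with the highest entropy."""
    ranked = sorted(
        range(len(prob_rows)),
        key=lambda i: predictive_entropy(prob_rows[i]),
        reverse=True,
    )
    return ranked[:budget]


# Hypothetical softmax outputs for four candidate inputs over three classes.
candidates = [
    [0.98, 0.01, 0.01],  # confident prediction
    [0.40, 0.35, 0.25],  # uncertain
    [0.70, 0.20, 0.10],  # moderately confident
    [0.34, 0.33, 0.33],  # almost uniform, most uncertain
]

selected = select_most_uncertain(candidates, budget=2)
print(selected)  # → [3, 1]
```

The selected indices would then be the inputs to label first, either to look for misclassifications or to add to the retraining set.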

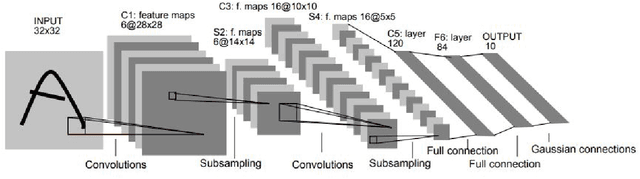

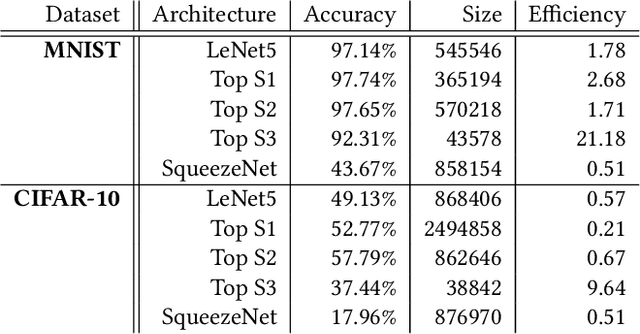

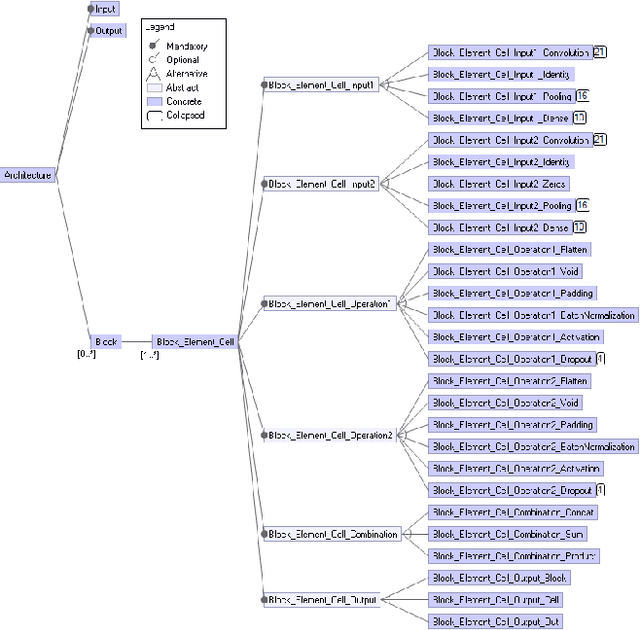

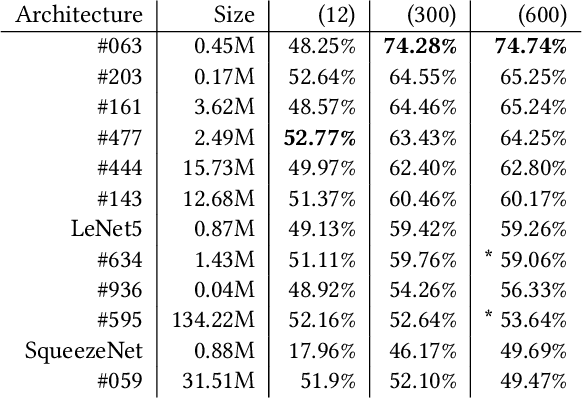

Automated Search for Configurations of Deep Neural Network Architectures

Apr 09, 2019

Abstract: Deep Neural Networks (DNNs) are intensively used to solve a wide variety of complex problems. Although powerful, such systems require manual configuration and tuning. To this end, we view DNNs as configurable systems and propose an end-to-end framework that allows the configuration, evaluation and automated search for DNN architectures. Therefore, our contribution is threefold. First, we model the variability of DNN architectures with a Feature Model (FM) that generalizes over existing architectures. Each valid configuration of the FM corresponds to a valid DNN model that can be built and trained. Second, we implement, on top of TensorFlow, an automated procedure to deploy, train and evaluate the performance of a configured model. Third, we propose a method to search for configurations and demonstrate that it leads to good DNN models. We evaluate our method by applying it on image classification tasks (MNIST, CIFAR-10) and show that, with a limited amount of computation and training, our method can identify high-performing architectures (with high accuracy). We also demonstrate that we outperform existing state-of-the-art architectures handcrafted by ML researchers. Our FM and framework have been released to support replication and future research.
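
The notion that each valid configuration of a feature model maps to a buildable architecture can be illustrated with a toy enumeration. This is only a sketch: the features, their values, and the cross-tree constraint are hypothetical and are not taken from the released FM.

```python
from itertools import product

# Hypothetical features of a small architecture space and their allowed values.
FEATURES = {
    "conv_layers": [1, 2, 3],
    "pooling": ["none", "max"],
    "dense_units": [64, 128],
}


def is_valid(config):
    """Hypothetical cross-tree constraint: max pooling requires at least two conv layers."""
    if config["pooling"] == "max" and config["conv_layers"] < 2:
        return False
    return True


def valid_configurations():
    """Enumerate every combination of feature values that satisfies the constraint."""
    names = list(FEATURES)
    for values in product(*(FEATURES[name] for name in names)):
        config = dict(zip(names, values))
        if is_valid(config):
            yield config


configs = list(valid_configurations())
print(len(configs))  # → 10
```

A search procedure would sample or enumerate from `configs`, build and train the corresponding model, and keep the best-performing configurations.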
