Yutong Guo

GRNN: Recurrent Neural Network based on Ghost Features for Video Super-Resolution

May 14, 2025

Abstract: Modern video super-resolution (VSR) systems based on convolutional neural networks (CNNs) incur huge computational costs. Feature redundancy is present in most models in many domains, but it is rarely discussed in VSR. We experimentally observe that many features in VSR models are also similar to each other, so we propose to use "Ghost features" to reduce this redundancy. We also analyze the so-called "gradient disappearance" phenomenon that arises in the conventional recurrent convolutional network (RNN) model, and combine the Ghost module with an RNN to model the time series. The current frame is fed to the model together with the next frame, the output of the previous frame, and the hidden state. Extensive experiments on several benchmark models and datasets show that our proposed method improves PSNR and SSIM to some extent. Some texture details in the video are also better preserved.

AMPCliff: quantitative definition and benchmarking of activity cliffs in antimicrobial peptides

Apr 15, 2024

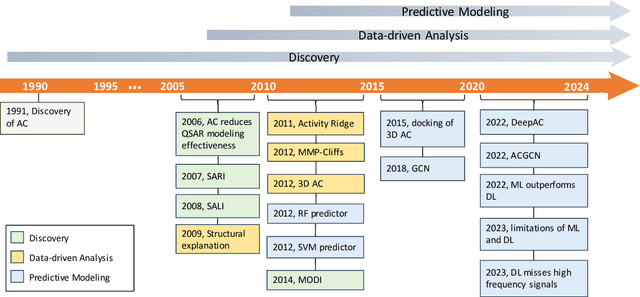

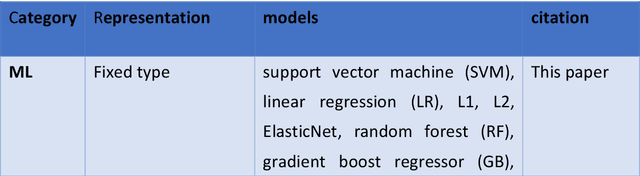

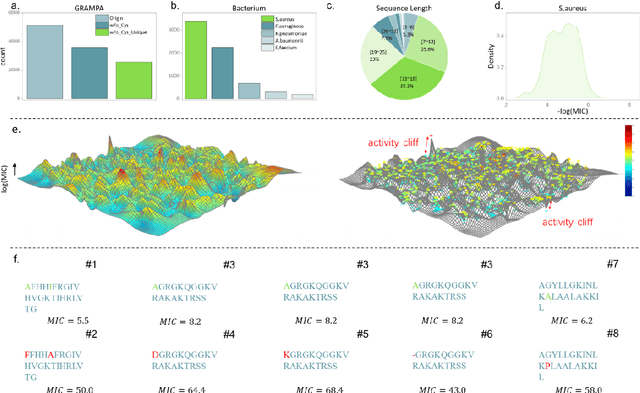

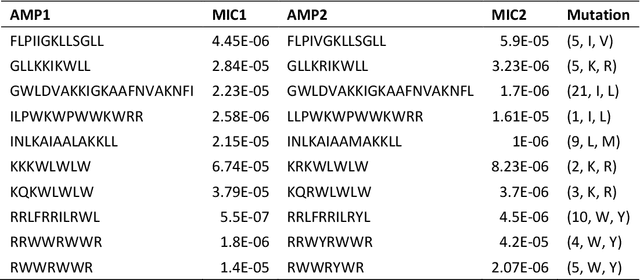

Abstract: An activity cliff (AC) is a phenomenon in which a pair of similar molecules differ by a small structural alteration but exhibit a large difference in their biochemical activities. The AC of small molecules has been extensively investigated, but limited knowledge has been accumulated about the AC phenomenon in peptides with canonical amino acids. This study introduces AMPCliff, a quantitative definition and benchmarking framework for the AC phenomenon in antimicrobial peptides (AMPs) composed of canonical amino acids. A comprehensive analysis of the existing AMP dataset reveals a significant prevalence of AC within AMPs. AMPCliff quantifies the activities of AMPs by the minimum inhibitory concentration (MIC), and defines 0.9 as the minimum threshold for the normalized BLOSUM62 similarity score between a pair of aligned peptides with at least two-fold MIC changes. This study establishes a benchmark dataset of paired AMPs in Staphylococcus aureus from the publicly available AMP dataset GRAMPA, and conducts a rigorous procedure to evaluate various AMP AC prediction models, including nine machine learning algorithms, four deep learning algorithms, four masked language models, and four generative language models. Our analysis reveals that these models are capable of detecting AMP AC events, and that the pre-trained protein language model ESM2 demonstrates superior performance across the evaluations. The predictive performance on AMP activity cliffs remains to be further improved, considering that ESM2 with 33 layers achieves a Spearman correlation coefficient of only 0.50 for the regression task on MIC values on the benchmark dataset. Source code and additional resources are available at https://www.healthinformaticslab.org/supp/ or https://github.com/Kewei2023/AMPCliff-generation.
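
The pair-level criterion stated in the abstract (normalized BLOSUM62 similarity of at least 0.9 together with an at-least two-fold MIC change) can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function name `is_activity_cliff` and its parameters are hypothetical, and the similarity score is assumed to be precomputed, since the abstract does not spell out the alignment or normalization procedure.

```python
def is_activity_cliff(similarity, mic_a, mic_b,
                      min_similarity=0.9, min_fold_change=2.0):
    """Return True if a peptide pair meets the AC criterion sketched here.

    similarity: precomputed normalized BLOSUM62 similarity of the aligned
                pair (assumed to be supplied by the caller).
    mic_a, mic_b: MIC values of the two peptides, in the same unit.
    """
    if mic_a <= 0 or mic_b <= 0:
        raise ValueError("MIC values must be positive")
    # Fold change is taken as larger MIC over smaller MIC, so it is always >= 1.
    fold_change = max(mic_a, mic_b) / min(mic_a, mic_b)
    return similarity >= min_similarity and fold_change >= min_fold_change


# Illustrative values only, not taken from the benchmark dataset.
print(is_activity_cliff(0.95, 4.0, 16.0))  # similar pair, four-fold MIC change
print(is_activity_cliff(0.95, 4.0, 6.0))   # similar pair, 1.5-fold MIC change
```

Taking the fold change as a ratio of the larger to the smaller MIC makes the check symmetric in the two peptides, which matches the pairwise nature of the definition.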