Yupeng Han

Route to Reason: Adaptive Routing for LLM and Reasoning Strategy Selection

May 26, 2025

Abstract: The inherent capabilities of a language model (LM) and the reasoning strategies it employs jointly determine its performance in reasoning tasks. While test-time scaling is regarded as an effective approach to tackling complex reasoning tasks, it incurs substantial computational costs and often leads to "overthinking", where models become trapped in "thought pitfalls". To address this challenge, we propose Route-To-Reason (RTR), a novel unified routing framework that dynamically allocates both LMs and reasoning strategies according to task difficulty under budget constraints. RTR learns compressed representations of both expert models and reasoning strategies, enabling their joint and adaptive selection at inference time. This method is low-cost, highly flexible, and can be seamlessly extended to arbitrary black-box or white-box models and strategies, achieving true plug-and-play functionality. Extensive experiments across seven open-source models and four reasoning strategies demonstrate that RTR achieves the best trade-off between accuracy and computational efficiency among all baselines, with higher accuracy than the best single model and over 60% lower token usage.

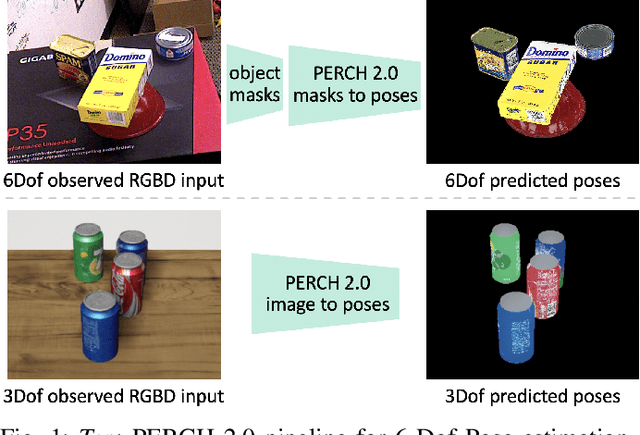

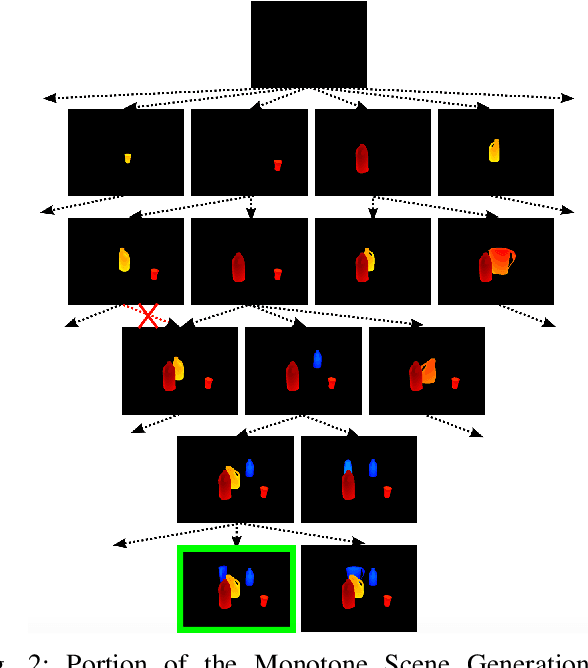

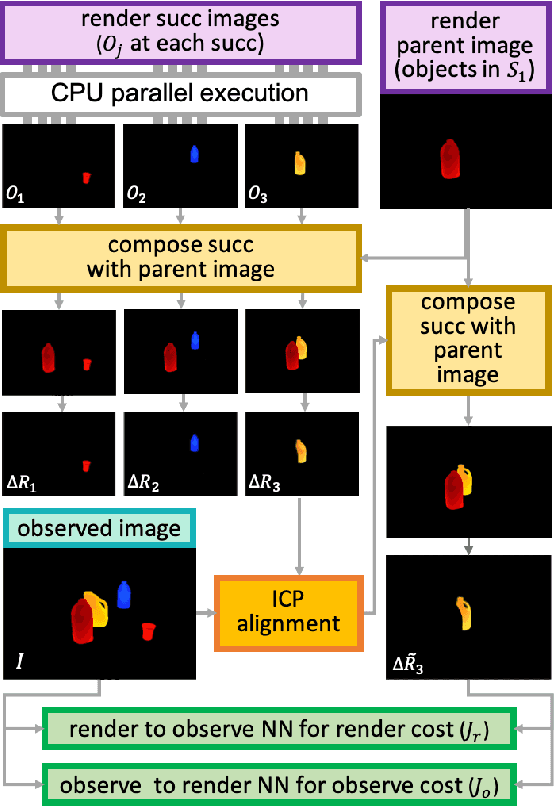

PERCH 2.0 : Fast and Accurate GPU-based Perception via Search for Object Pose Estimation

Aug 01, 2020

Abstract: Pose estimation of known objects is fundamental to tasks such as robotic grasping and manipulation. The need for reliable grasping imposes stringent accuracy requirements on pose estimation in cluttered, occluded scenes in dynamic environments. Modern methods employ large sets of training data to learn features in order to find correspondences between 3D models and observed data. However, these methods require extensive annotation of ground truth poses. An alternative is to use algorithms that search for the best explanation of the observed scene in a space of possible rendered scenes. A recently developed algorithm, PERCH (PErception Via SeaRCH), does so by using depth data to converge to a globally optimal solution through a search over a specially constructed tree. While PERCH offers strong guarantees on accuracy, the current formulation scales poorly owing to its high runtime. In addition, the sole reliance on depth data for pose estimation restricts the algorithm to scenes where no two objects have the same shape. In this work, we propose PERCH 2.0, a novel perception-via-search strategy that takes advantage of GPU acceleration and RGB data. We show that our approach can achieve a speedup of 100x over PERCH, as well as better accuracy than the state-of-the-art data-driven approaches on 6-DoF pose estimation, without the need for annotating ground truth poses in the training data. Our code and video are available at https://sbpl-cruz.github.io/perception/.
