Young-Sik Shin

Uni-Mapper: Unified Mapping Framework for Multi-modal LiDARs in Complex and Dynamic Environments

Jul 28, 2025

Abstract: The unification of disparate maps is crucial for enabling scalable robot operation across multiple sessions and collaborative multi-robot scenarios. However, achieving a unified map robust to sensor modalities and dynamic environments remains a challenging problem. Variations in LiDAR types and dynamic elements lead to differences in point cloud distribution and scene consistency, hindering the reliable descriptor generation and loop closure detection that accurate map alignment depends on. To address these challenges, this paper presents Uni-Mapper, a dynamic-aware 3D point cloud map merging framework for multi-modal LiDAR systems. It comprises dynamic object removal, dynamic-aware loop closure, and multi-modal LiDAR map merging modules. A voxel-wise free space hash map is built in a coarse-to-fine manner to identify and reject dynamic objects via temporal occupancy inconsistencies. The removal module is integrated with a LiDAR global descriptor, which encodes preserved static local features to ensure robust place recognition in dynamic environments. In the final stage, centralized anchor-node-based pose graph optimization is performed over both intra-session and inter-map loop closures, mitigating intra-session drift and producing a globally consistent merged map. Our framework is evaluated on diverse real-world datasets with dynamic objects and heterogeneous LiDARs, showing superior performance over existing methods in loop detection across sensor modalities, robust mapping in dynamic environments, and accurate multi-map alignment. Project Page: https://sparolab.github.io/research/uni_mapper.
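The idea of rejecting dynamic points through temporal occupancy inconsistencies in a voxel hash map can be illustrated with a minimal sketch. This is not the Uni-Mapper implementation: the voxel size, the sampled ray traversal, the `min_free_hits` threshold, and all function names are assumptions made for illustration, and the coarse-to-fine construction described in the abstract is omitted. A voxel is counted as free space when a ray passes through it, and a point is treated as dynamic when its voxel was observed as free in at least `min_free_hits` scans.

```python
import math
from collections import defaultdict

VOXEL = 0.5  # hypothetical voxel edge length in metres


def voxel_key(p, size=VOXEL):
    """Hash key of the voxel containing point p."""
    return tuple(math.floor(c / size) for c in p)


def free_voxels(origin, endpoint, size=VOXEL):
    """Voxels crossed by the ray origin -> endpoint, excluding the endpoint voxel.

    The ray is sampled at half-voxel steps, which approximates an exact
    voxel traversal and may miss voxels that are only clipped at a corner.
    """
    length = math.dist(origin, endpoint)
    steps = max(1, int(length / (size * 0.5)))
    end_key = voxel_key(endpoint, size)
    keys = set()
    for i in range(steps):
        t = i / steps
        p = tuple(o + t * (e - o) for o, e in zip(origin, endpoint))
        k = voxel_key(p, size)
        if k != end_key:
            keys.add(k)
    return keys


def remove_dynamic(scans, size=VOXEL, min_free_hits=1):
    """scans: list of (sensor_origin, points). Returns the static points per scan."""
    free_count = defaultdict(int)  # voxel key -> number of scans that saw it as free
    for origin, points in scans:
        seen = set()
        for p in points:
            seen |= free_voxels(origin, p, size)
        for k in seen:
            free_count[k] += 1
    return [
        [p for p in points if free_count[voxel_key(p, size)] < min_free_hits]
        for _, points in scans
    ]


# Scan 1 sees a car at x = 5 m; in scan 2 the car has left and the wall behind it is visible.
origin = (0.0, 0.0, 0.0)
scans = [
    (origin, [(5.0, 0.0, 0.0), (10.0, 3.0, 0.0)]),
    (origin, [(10.0, 0.0, 0.0), (10.0, 3.0, 0.0)]),
]
static = remove_dynamic(scans)
```

In this toy input the car point is dropped because the ray to the wall in the second scan passes through its voxel, while both wall points are kept.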

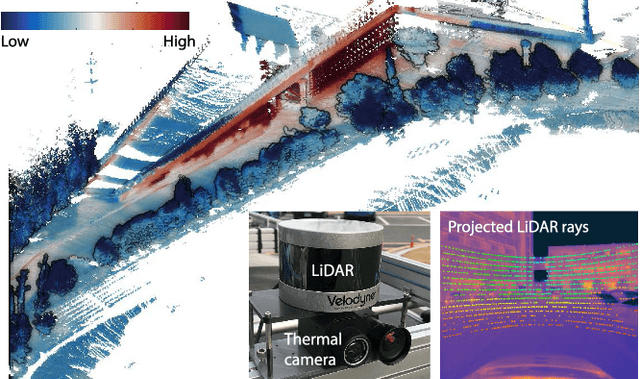

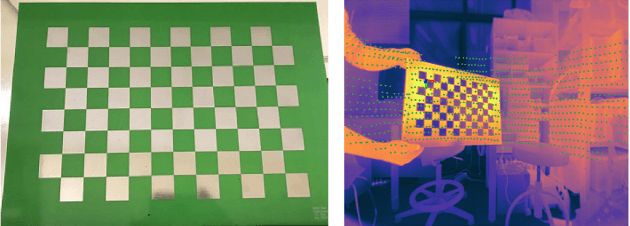

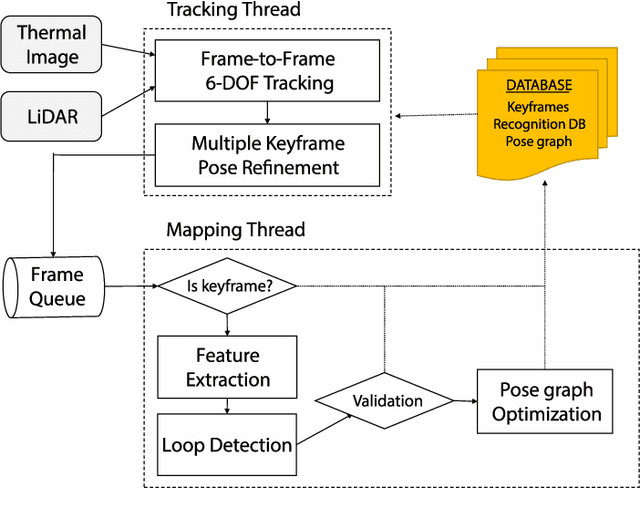

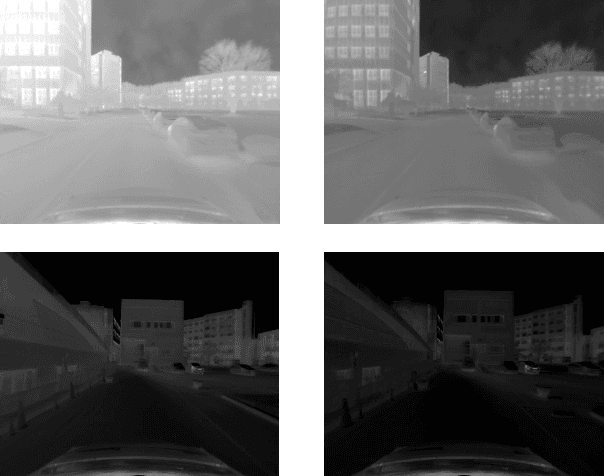

Sparse Depth Enhanced Direct Thermal-infrared SLAM Beyond the Visible Spectrum

Feb 28, 2019

Abstract: In this paper, we propose a thermal-infrared simultaneous localization and mapping (SLAM) system enhanced by sparse depth measurements from Light Detection and Ranging (LiDAR). Thermal-infrared cameras are relatively robust against fog, smoke, and dynamic lighting conditions compared to RGB cameras operating in the visible spectrum. Because of these advantages, exploiting thermal-infrared cameras for motion estimation and mapping is highly appealing. However, using a thermal-infrared camera directly in existing vision-based methods is difficult because of the modality difference. This paper proposes a method that uses sparse depth measurements for 6-DOF motion estimation by tracking directly on the 14-bit raw measurements of the thermal camera. In addition, we perform a refinement to improve the local accuracy and include a loop closure to maintain global consistency. The experimental results demonstrate that the system is not only robust under various lighting conditions such as day and night, but also overcomes the scale problem of monocular cameras. The video is available at https://youtu.be/oO7lT3uAzLc.
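Direct tracking with sparse depth generally rests on a photometric residual: a pixel with known depth is back-projected, moved by a candidate pose, re-projected into the current frame, and the raw intensities are compared. The sketch below shows that residual for one pixel under stated assumptions: a pinhole model with hypothetical intrinsics `K = (fx, fy, cx, cy)`, nearest-neighbour lookup instead of interpolation, and images stored as nested lists of raw 14-bit values. It is not the paper's pipeline, which also includes refinement and loop closure.

```python
def transform(R, t, p):
    """Apply the rigid transform p' = R p + t (R is a 3x3 nested list)."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def photometric_residual(img_ref, img_cur, pixel, depth, R, t, K):
    """Raw-intensity difference for one reference pixel with known depth.

    Returns None when the point falls behind the camera or outside the image.
    """
    fx, fy, cx, cy = K
    u, v = pixel
    # Back-project the reference pixel using its sparse depth measurement.
    p_ref = ((u - cx) / fx * depth, (v - cy) / fy * depth, depth)
    # Move the point into the current camera frame with the candidate pose.
    x, y, z = transform(R, t, p_ref)
    if z <= 0:
        return None
    # Project into the current image (nearest pixel, no interpolation).
    ui, vi = round(fx * x / z + cx), round(fy * y / z + cy)
    if not (0 <= vi < len(img_cur) and 0 <= ui < len(img_cur[0])):
        return None
    return img_cur[vi][ui] - img_ref[v][u]


# Toy 4x4 frames holding raw 14-bit values (0..16383); all numbers are made up.
img_ref = [[8000] * 4 for _ in range(4)]
img_cur = [[8000] * 4 for _ in range(4)]
img_cur[1][1] = 8120

identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
K = (2.0, 2.0, 0.0, 0.0)  # hypothetical fx, fy, cx, cy

r_static = photometric_residual(img_ref, img_cur, (1, 1), 2.0, identity, (0, 0, 0), K)
r_shifted = photometric_residual(img_ref, img_cur, (1, 1), 2.0, identity, (1, 0, 0), K)
```

A tracker would minimise the sum of squared residuals over all pixels that have depth, with respect to the six pose parameters. Working on the raw 14-bit values avoids the information loss of rescaling to 8 bits.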

Complex Urban LiDAR Data Set

Mar 16, 2018

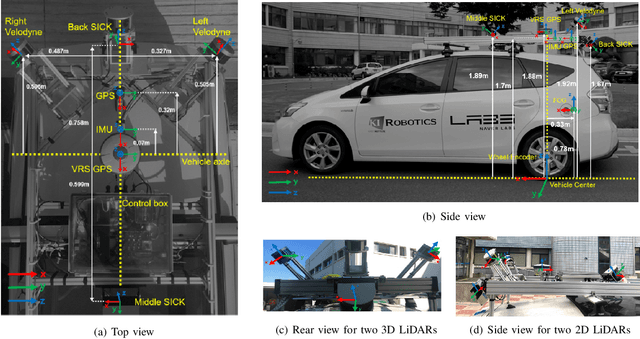

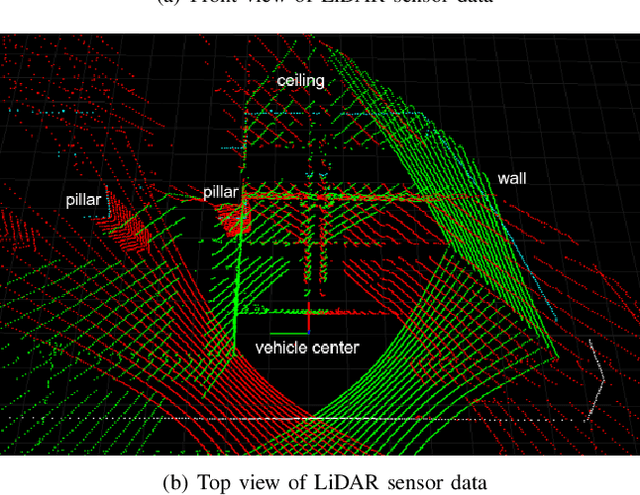

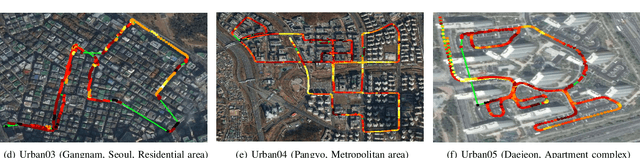

Abstract: This paper presents a Light Detection and Ranging (LiDAR) data set that targets complex urban environments. Urban environments with high-rise buildings and congested traffic pose a significant challenge for many robotics applications. The presented data set is unique in that it captures the genuine features of an urban environment (e.g. metropolitan areas, large building complexes and underground parking lots). Data from two-dimensional (2D) and three-dimensional (3D) LiDARs, which are typical types of LiDAR sensors, are provided in the data set. The two 16-ray 3D LiDARs are tilted on both sides for maximal coverage. One 2D LiDAR faces backward while the other faces forward to collect data of roads and buildings, respectively. Raw sensor data from a Fiber Optic Gyro (FOG), an Inertial Measurement Unit (IMU), and the Global Positioning System (GPS) are presented in a file format for vehicle pose estimation. The pose information of the vehicle, estimated at 100 Hz, is also presented after applying the graph simultaneous localization and mapping (SLAM) algorithm. For the convenience of development, the file player and data viewer for the Robot Operating System (ROS) environment were also released via the web page. The full data sets are available at: http://irap.kaist.ac.kr/dataset. On this website, a 3D preview of each data set is provided using WebGL.
