Yijun Gu

Active Hierarchical Imitation and Reinforcement Learning

Dec 14, 2020

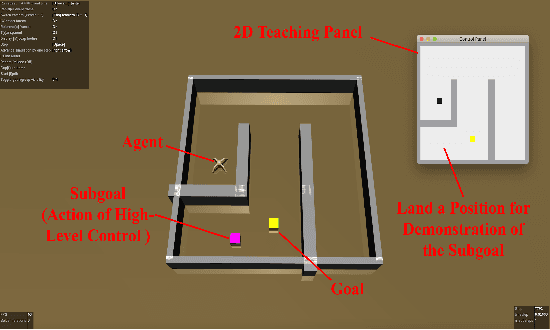

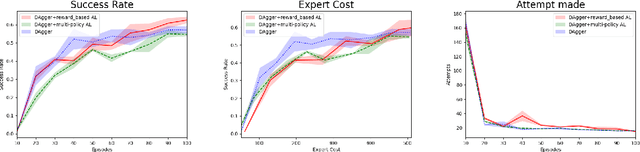

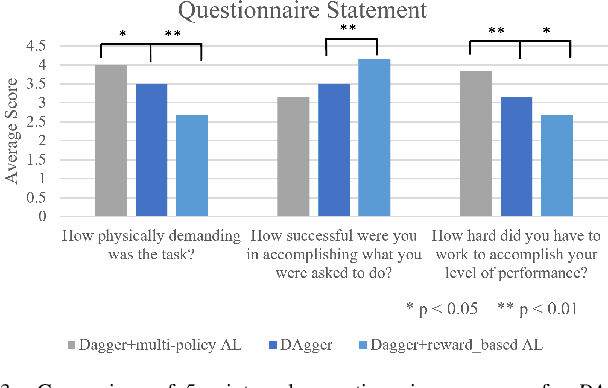

Abstract:Humans can leverage hierarchical structures to split a task into sub-tasks and solve problems efficiently. Both imitation and reinforcement learning or a combination of them with hierarchical structures have been proven to be an efficient way for robots to learn complex tasks with sparse rewards. However, in the previous work of hierarchical imitation and reinforcement learning, the tested environments are in relatively simple 2D games, and the action spaces are discrete. Furthermore, many imitation learning works focusing on improving the policies learned from the expert polices that are hard-coded or trained by reinforcement learning algorithms, rather than human experts. In the scenarios of human-robot interaction, humans can be required to provide demonstrations to teach the robot, so it is crucial to improve the learning efficiency to reduce expert efforts, and know human's perception about the learning/training process. In this project, we explored different imitation learning algorithms and designed active learning algorithms upon the hierarchical imitation and reinforcement learning framework we have developed. We performed an experiment where five participants were asked to guide a randomly initialized agent to a random goal in a maze. Our experimental results showed that using DAgger and reward-based active learning method can achieve better performance while saving more human efforts physically and mentally during the training process.

Assistive VR Gym: Using Interactions with Real People to Improve Virtual Assistive Robots

Jul 09, 2020

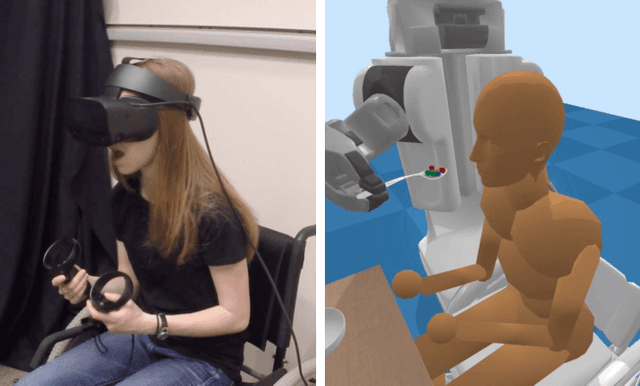

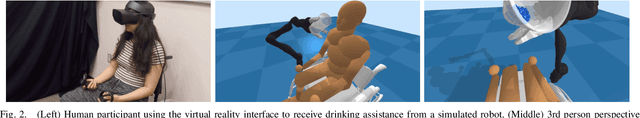

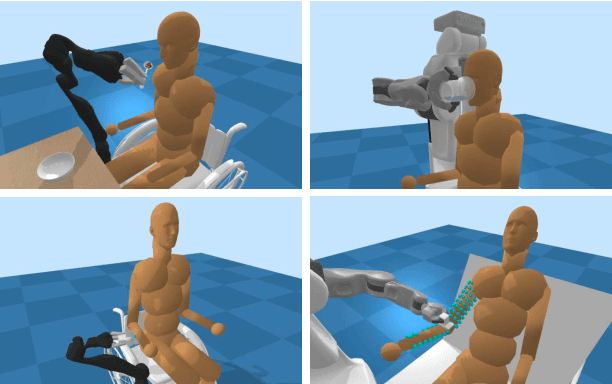

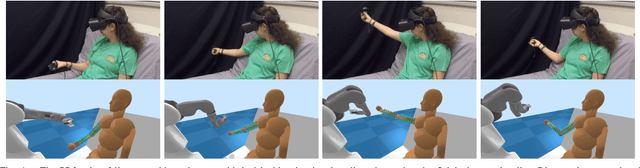

Abstract:Versatile robotic caregivers could benefit millions of people worldwide, including older adults and people with disabilities. Recent work has explored how robotic caregivers can learn to interact with people through physics simulations, yet transferring what has been learned to real robots remains challenging. Virtual reality (VR) has the potential to help bridge the gap between simulations and the real world. We present Assistive VR Gym (AVR Gym), which enables real people to interact with virtual assistive robots. We also provide evidence that AVR Gym can help researchers improve the performance of simulation-trained assistive robots with real people. Prior to AVR Gym, we trained robot control policies (Original Policies) solely in simulation for four robotic caregiving tasks (robot-assisted feeding, drinking, itch scratching, and bed bathing) with two simulated robots (PR2 from Willow Garage and Jaco from Kinova). With AVR Gym, we developed Revised Policies based on insights gained from testing the Original policies with real people. Through a formal study with eight participants in AVR Gym, we found that the Original policies performed poorly, the Revised policies performed significantly better, and that improvements to the biomechanical models used to train the Revised policies resulted in simulated people that better match real participants. Notably, participants significantly disagreed that the Original policies were successful at assistance, but significantly agreed that the Revised policies were successful at assistance. Overall, our results suggest that VR can be used to improve the performance of simulation-trained control policies with real people without putting people at risk, thereby serving as a valuable stepping stone to real robotic assistance.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge