Xingzhe He

Soft Multicopter Control using Neural Dynamics Identification

Sep 02, 2020

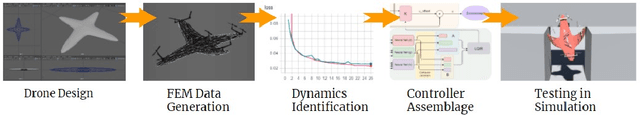

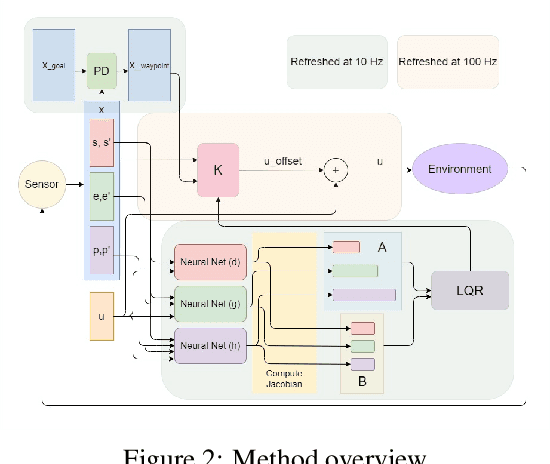

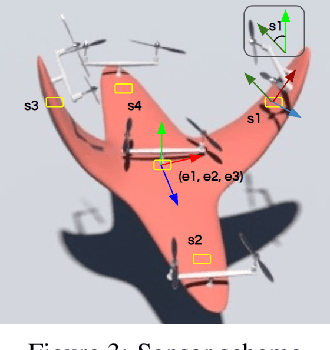

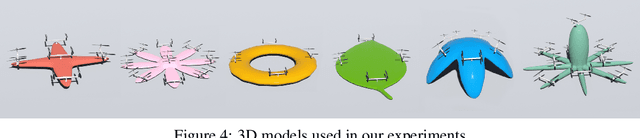

Abstract: Dynamic control of a soft-body robot to deliver complex behaviors with low-dimensional actuation inputs is challenging. In this paper, we present a computational approach to automatically generate versatile, underactuated control policies that drive soft-bodied machines with complicated structures and nonlinear dynamics. Our target application is the autonomous control of a soft multicopter, characterized by its elastic material components, non-conventional shapes, and asymmetric rotor layouts, to precisely deliver compliant deformation and agile locomotion. The central piece of our approach is a lightweight neural surrogate model that identifies and predicts the temporal evolution of a set of geometric variables characterizing an elastic soft body. This physics-based learning model is further integrated into a Linear Quadratic Regulator (LQR) control loop enhanced by a novel online fixed-point relinearization scheme to accommodate the dynamic body balance, allowing an aggressive reduction of the computational overhead caused by the conventional full-scale sensing-simulation-control workflow. We demonstrate the efficacy of our approach by generating controllers for a broad spectrum of customized soft multicopter designs and testing them in a high-fidelity physics simulation environment. The control algorithm enables the multicopters to perform a variety of tasks, including hovering, trajectory tracking, cruising, and active deforming.
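The following is a minimal sketch of LQR control around a fixed point, for readers unfamiliar with the idea. It uses a hypothetical 1D hover model (`hover_step`, with made-up constants `DT`, `G`, `DRAG`) in place of the paper's neural surrogate. The sketch finds the hover fixed point, linearizes the dynamics there by finite differences, and computes a discrete LQR gain by iterating the Riccati recursion. It is not the authors' controller. Re-running `hover_controller` whenever the operating point shifts would be only a crude analogue of their online relinearization.

```python
import numpy as np

# Hypothetical stand-in dynamics (not the paper's neural surrogate):
# vertical motion of a hovering body, state x = [z, vz], input u = [thrust / mass].
DT, G, DRAG = 0.02, 9.81, 0.5


def hover_step(x, u):
    """One explicit-Euler step of a nonlinear 1D hover model with quadratic drag."""
    z, vz = x
    acc = u[0] - G - DRAG * vz * abs(vz)
    return np.array([z + DT * vz, vz + DT * acc])


def jacobians(f, x0, u0, eps=1e-6):
    """Central finite-difference Jacobians A = df/dx and B = df/du at (x0, u0)."""
    A = np.zeros((x0.size, x0.size))
    B = np.zeros((x0.size, u0.size))
    for i in range(x0.size):
        d = np.zeros(x0.size)
        d[i] = eps
        A[:, i] = (f(x0 + d, u0) - f(x0 - d, u0)) / (2 * eps)
    for i in range(u0.size):
        d = np.zeros(u0.size)
        d[i] = eps
        B[:, i] = (f(x0, u0 + d) - f(x0, u0 - d)) / (2 * eps)
    return A, B


def dlqr(A, B, Q, R, iters=5000):
    """Discrete-time LQR gain K, obtained by iterating the Riccati recursion to convergence."""
    P = Q.copy()
    for _ in range(iters):
        K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
        P = Q + A.T @ P @ (A - B @ K)
    return K


def hover_controller(z_ref):
    """Linearize at the hover fixed point (vz = 0, thrust = gravity) and return an LQR gain."""
    x_star = np.array([z_ref, 0.0])
    u_star = np.array([G])
    A, B = jacobians(hover_step, x_star, u_star)
    K = dlqr(A, B, Q=np.diag([10.0, 1.0]), R=np.array([[0.1]]))
    return x_star, u_star, K, A, B


if __name__ == "__main__":
    x_star, u_star, K, A, B = hover_controller(z_ref=0.0)
    x = np.array([1.0, 0.0])          # start 1 m above the target, at rest
    for _ in range(500):              # 10 s of simulated time
        x = hover_step(x, u_star - K @ (x - x_star))
    print(x)                          # should be close to [0, 0]
```

The control law is `u = u_star - K @ (x - x_star)`: feedforward thrust at the fixed point plus linear feedback on the deviation from it.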

Learning Physical Constraints with Neural Projections

Jun 23, 2020

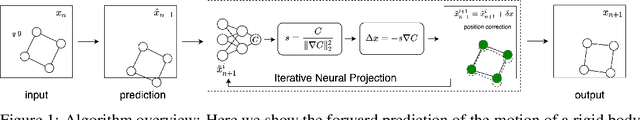

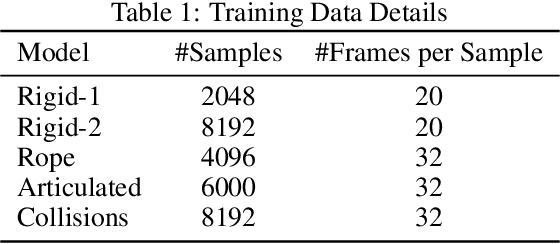

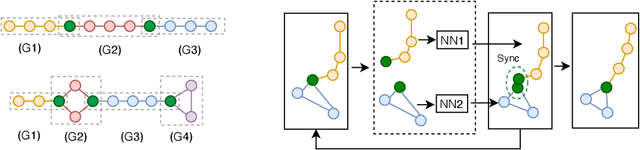

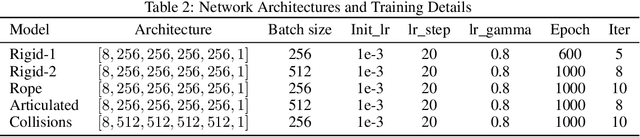

Abstract: We propose a new family of neural networks to predict the behaviors of physical systems by learning their underpinning constraints. A neural projection operator lies at the heart of our approach. It is composed of a lightweight network with an embedded recursive architecture that interactively enforces learned underpinning constraints and predicts the various governed behaviors of different physical systems. Our neural projection operator is motivated by the position-based dynamics model, which has been widely used in the game and visual effects industries to unify various fast physics simulators. Our method can automatically and effectively uncover a broad range of constraints from observed point data without any connectivity priors. These include length, angle, bending, collision, and boundary effects, as well as their arbitrary combinations. We provide a multi-group point representation in conjunction with a configurable network connection mechanism to incorporate prior inputs for processing complex physical systems. We demonstrate the efficacy of our approach by learning a set of challenging physical systems, all in a unified and simple fashion: rigid bodies with complex geometries, ropes with varying length and bending, articulated soft and rigid bodies, and multi-object collisions with complex boundaries.
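The neural projection operator is motivated by position-based dynamics (PBD), so it may help to see the hand-written projection it generalizes. The sketch below is classic PBD distance-constraint projection, with Gauss-Seidel sweeps over edges and inverse-mass weighting. It assumes known edges and rest lengths. The paper's contribution is to learn such constraints instead, and this code does not do that. The function name is illustrative.

```python
import numpy as np


def project_distance_constraints(p, edges, rest, inv_mass, iters=50):
    """Classic PBD projection of pairwise distance constraints (Gauss-Seidel).

    p: (N, dim) particle positions (not modified; a projected copy is returned).
    edges: list of (i, j) index pairs; rest: rest length per edge;
    inv_mass: inverse mass per particle (0 pins a particle in place).
    """
    p = np.array(p, dtype=float)
    for _ in range(iters):
        for (i, j), d0 in zip(edges, rest):
            wi, wj = inv_mass[i], inv_mass[j]
            delta = p[i] - p[j]
            d = np.linalg.norm(delta)
            if d < 1e-12 or wi + wj == 0.0:
                continue  # degenerate pair or both endpoints pinned
            # Constraint C = |p_i - p_j| - d0. Move both ends along the edge,
            # split by inverse mass so that a pinned particle (w = 0) stays put.
            corr = (d - d0) / (d * (wi + wj)) * delta
            p[i] -= wi * corr
            p[j] += wj * corr
    return p


if __name__ == "__main__":
    # A stretched three-particle "rope" whose first particle is pinned.
    rope = np.array([[0.0, 0.0], [1.5, 0.0], [3.5, 0.0]])
    out = project_distance_constraints(rope, [(0, 1), (1, 2)], [1.0, 1.0], [0.0, 1.0, 1.0])
    print(out)  # approaches [[0, 0], [1, 0], [2, 0]]
```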

RoeNets: Predicting Discontinuity of Hyperbolic Systems from Continuous Data

Jun 07, 2020

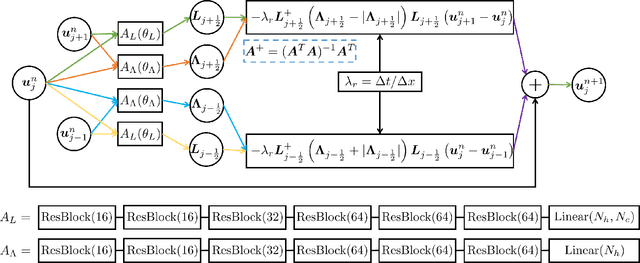

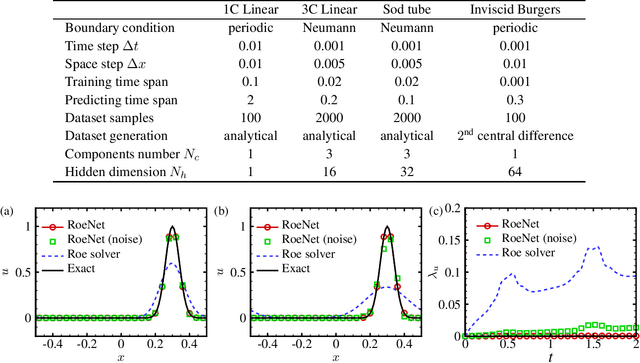

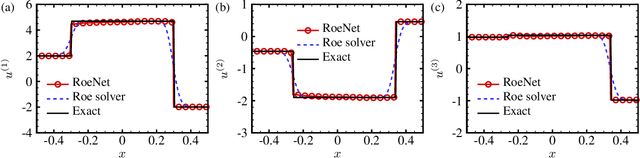

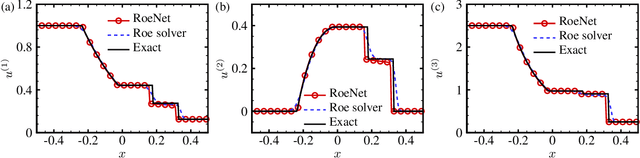

Abstract: We introduce Roe Neural Networks (RoeNets), which can predict the discontinuity of hyperbolic conservation laws (HCLs) from short-term discontinuous and even continuous training data. Our methodology is inspired by the Roe approximate Riemann solver (P. L. Roe, J. Comput. Phys., vol. 43, 1981, pp. 357-372), one of the most fundamental numerical solvers for HCLs. To accurately solve HCLs, Roe argues for the need to construct a Roe matrix that fulfills "Property U". Such a matrix must be diagonalizable with real eigenvalues, consistent with the exact Jacobian, and must preserve conserved quantities. However, no general numerical method can construct such a matrix. Our model makes a breakthrough improvement in solving HCLs by applying the Roe solver from a neural network perspective. To enhance the expressiveness of our model, we incorporate pseudoinverses into a novel context to enable a hidden dimension, which makes the number of parameters flexible. Predicting long-term discontinuity from a short window of continuous training data is generally considered impossible with traditional machine learning approaches. We demonstrate that our model can generate highly accurate predictions of the evolution of convection without dissipation and of the discontinuity of hyperbolic systems from smooth training data.
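The Roe idea is easiest to see in the scalar case. The sketch below is the classical Roe scheme, not RoeNets. It uses the inviscid Burgers equation, for which the arithmetic mean of the two states is a valid scalar Roe "matrix" satisfying Property U. The discretization is a first-order finite-volume scheme on a periodic grid. All function names are illustrative.

```python
import numpy as np


def burgers_flux(u):
    """Flux of the inviscid Burgers equation u_t + (u^2 / 2)_x = 0."""
    return 0.5 * u * u


def roe_speed(uL, uR):
    """Scalar Roe 'matrix' for Burgers: the mean of the two states.

    It satisfies Property U: f(uR) - f(uL) = a * (uR - uL), and a(u, u) = f'(u) = u.
    """
    return 0.5 * (uL + uR)


def roe_flux(uL, uR):
    """Roe numerical flux F = (f(uL) + f(uR)) / 2 - |a| (uR - uL) / 2."""
    a = roe_speed(uL, uR)
    return 0.5 * (burgers_flux(uL) + burgers_flux(uR)) - 0.5 * np.abs(a) * (uR - uL)


def roe_step(u, dx, dt):
    """First-order conservative finite-volume update on a periodic grid."""
    F = roe_flux(u, np.roll(u, -1))      # F[i] is the flux at interface i+1/2
    return u - dt / dx * (F - np.roll(F, 1))


if __name__ == "__main__":
    x = np.linspace(0.0, 1.0, 200, endpoint=False)
    dx = x[1] - x[0]
    u = np.where(x < 0.5, 1.0, 0.5)      # shock at x = 0.5, rarefaction at x = 0
    for _ in range(100):
        u = roe_step(u, dx, dt=0.4 * dx)  # CFL number 0.4 since max|u| = 1
    print(u.min(), u.max(), u.sum() * dx)
```

Without an entropy fix, this plain Roe flux can admit non-physical expansion shocks when a rarefaction crosses u = 0. The usage example keeps all states positive to avoid that case.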

Neural Vortex Method: from Finite Lagrangian Particles to Infinite Dimensional Eulerian Dynamics

Jun 07, 2020

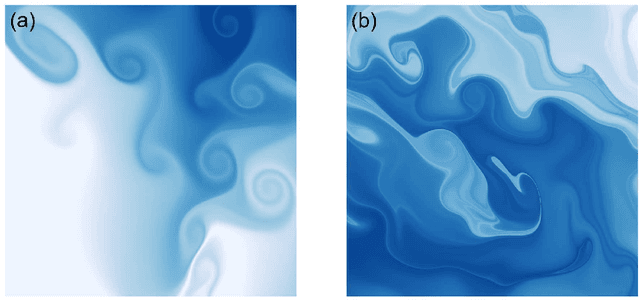

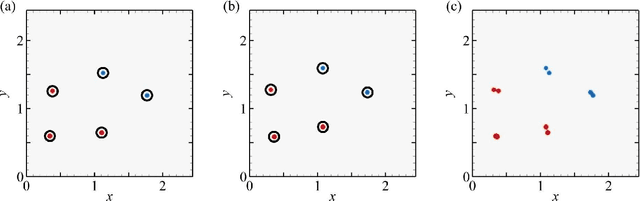

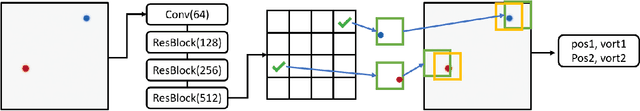

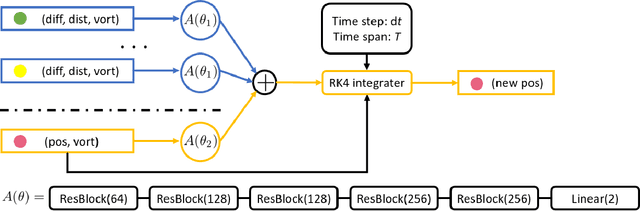

Abstract: Fluid numerical analysis has long lacked a rigorous mathematical tool to map a continuous flow field to discrete vortex particles. This gap prevents Lagrangian particles from inheriting the high resolution of a large-scale Eulerian solver. To tackle this challenge, we propose a novel learning-based framework, the Neural Vortex Method (NVM), which builds a neural-network description of the Lagrangian vortex structures and their interaction dynamics to reconstruct the high-resolution Eulerian flow field in a physically precise manner. Our infrastructure consists of two key networks. A vortex representation network identifies the Lagrangian vortices from a grid-based velocity field, and a vortex interaction network learns the underlying governing dynamics of these finite structures. We embed these two networks with a vorticity-to-velocity Poisson solver and train their parameters on high-fidelity data from high-resolution direct numerical simulation. This lets us predict fluid dynamics at a precision level that was infeasible for all previous conventional vortex methods (CVMs). To the best of our knowledge, our method is the first approach that can use the motions of finite particles to learn infinite-dimensional dynamic systems. We demonstrate that our method generates highly accurate predictions at low computational cost for the leapfrogging vortex rings system, the turbulence system, and systems governed by the Euler equations with different external forces.
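On a periodic domain, the vorticity-to-velocity step is a standard spectral Poisson solve. The sketch below assumes a doubly periodic square grid and uses NumPy's FFT. It is a generic solver for illustration, not necessarily the one embedded in NVM, and the function name is hypothetical. The sign convention is ω = ∂v/∂x - ∂u/∂y with u = ∂ψ/∂y and v = -∂ψ/∂x, which gives ∇²ψ = -ω.

```python
import numpy as np


def velocity_from_vorticity(omega, length=2 * np.pi):
    """Recover a 2D periodic velocity field (u, v) from vorticity omega.

    omega[i, j] is sampled at (x_i, y_j) on an n-by-n grid covering [0, length)^2.
    Solves lap(psi) = -omega in Fourier space, then u = dpsi/dy, v = -dpsi/dx.
    """
    n = omega.shape[0]
    k = 2 * np.pi * np.fft.fftfreq(n, d=length / n)   # angular wavenumbers
    kx, ky = np.meshgrid(k, k, indexing="ij")          # axis 0 is x, axis 1 is y
    k2 = kx**2 + ky**2
    omega_hat = np.fft.fft2(omega)
    psi_hat = np.zeros_like(omega_hat)
    nonzero = k2 > 0                                   # mean of psi is arbitrary; keep it 0
    psi_hat[nonzero] = omega_hat[nonzero] / k2[nonzero]
    u = np.real(np.fft.ifft2(1j * ky * psi_hat))
    v = np.real(np.fft.ifft2(-1j * kx * psi_hat))
    return u, v


if __name__ == "__main__":
    # Taylor-Green-like cell: psi = sin x sin y gives omega = 2 sin x sin y.
    n = 64
    x = np.arange(n) * 2 * np.pi / n
    X, Y = np.meshgrid(x, x, indexing="ij")
    u, v = velocity_from_vorticity(2 * np.sin(X) * np.sin(Y))
    print(np.abs(u - np.sin(X) * np.cos(Y)).max())
```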

Symplectic Neural Networks in Taylor Series Form for Hamiltonian Systems

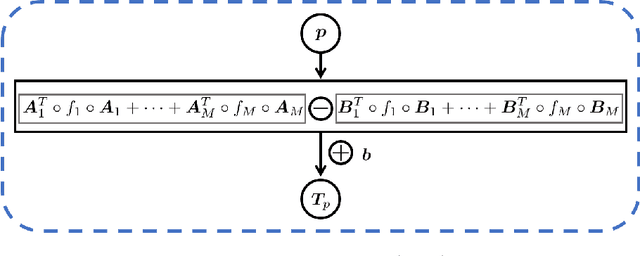

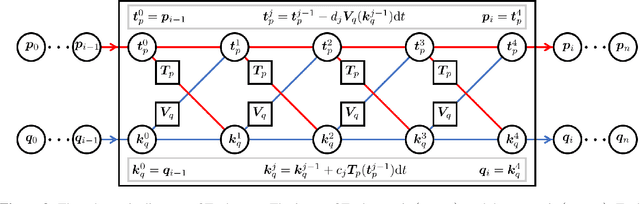

May 13, 2020

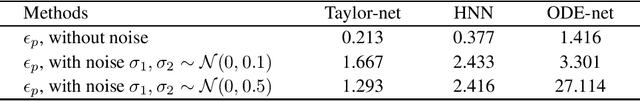

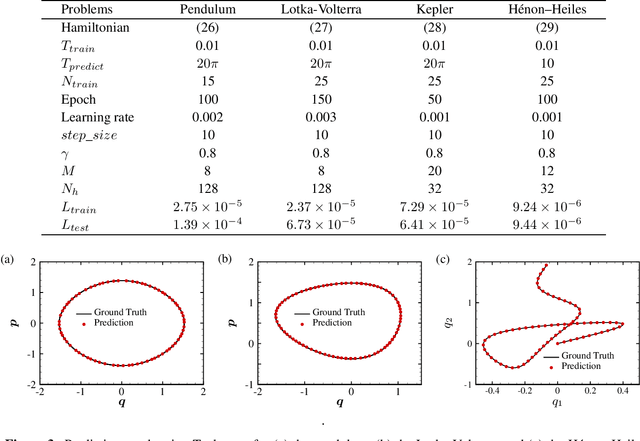

Abstract: We propose an effective and lightweight learning algorithm, Symplectic Taylor Neural Networks (Taylor-nets), to conduct continuous, long-term predictions of a complex Hamiltonian dynamic system based on sparse, short-term observations. At the heart of our algorithm is a novel neural network architecture consisting of two sub-networks. Both are embedded with terms in the form of a Taylor series expansion designed with a symmetric structure. The key mechanism underpinning our infrastructure is the strong expressiveness and special symmetric property of the Taylor series expansion. This structure can inherently accommodate the numerical fitting of the spatial derivatives of the Hamiltonian while preserving its symplectic structure. We further incorporate a fourth-order symplectic integrator, together with the neural ODE framework, into our Taylor-net architecture. This lets the network learn the continuous time evolution of the target systems while preserving their symplectic structures. We demonstrate the efficacy of our Taylor-net in predicting a broad spectrum of Hamiltonian dynamic systems, including the pendulum, the Lotka-Volterra, the Kepler, and the Hénon-Heiles systems. Compared with previous methods, our model has several computational merits. It uses extremely small training data with a short training period (6000 times shorter than the prediction period), small sample sizes (5 times smaller than state-of-the-art methods), and no intermediary data to train the networks. It also substantially outperforms the others in prediction accuracy, convergence rate, and robustness.
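The abstract does not name its fourth-order symplectic integrator. A well-known choice is the Forest-Ruth scheme, which the sketch below assumes, applied to a separable Hamiltonian H(q, p) = T(p) + V(q). In a Taylor-net, the hand-written gradients `dT_dp` and `dV_dq` would presumably be supplied by the learned sub-networks. Here they are given explicitly for a pendulum, H = p²/2 - cos q.

```python
import numpy as np

# Forest-Ruth coefficients: a palindromic composition of three leapfrog steps.
THETA = 1.0 / (2.0 - 2.0 ** (1.0 / 3.0))
C = [THETA / 2, (1 - THETA) / 2, (1 - THETA) / 2, THETA / 2]  # drift (position) weights
D = [THETA, 1 - 2 * THETA, THETA]                             # kick (momentum) weights


def forest_ruth_step(q, p, dt, dT_dp, dV_dq):
    """One fourth-order symplectic step for a separable H(q, p) = T(p) + V(q)."""
    for i in range(3):
        q = q + C[i] * dt * dT_dp(p)
        p = p - D[i] * dt * dV_dq(q)
    q = q + C[3] * dt * dT_dp(p)
    return q, p


def integrate(q, p, dt, n_steps, dT_dp, dV_dq):
    """Apply n_steps Forest-Ruth steps of size dt (dt may be negative)."""
    for _ in range(n_steps):
        q, p = forest_ruth_step(q, p, dt, dT_dp, dV_dq)
    return q, p


if __name__ == "__main__":
    energy = lambda q, p: 0.5 * p**2 - np.cos(q)
    q, p = integrate(1.0, 0.0, 0.01, 10_000, lambda p: p, np.sin)
    print(abs(energy(q, p) - energy(1.0, 0.0)))  # stays small over t = 100
```

Because the coefficient sequence is palindromic, a step with `-dt` exactly undoes a step with `dt` (up to round-off). The energy error also stays bounded rather than drifting.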

AdvectiveNet: An Eulerian-Lagrangian Fluidic reservoir for Point Cloud Processing

Feb 24, 2020

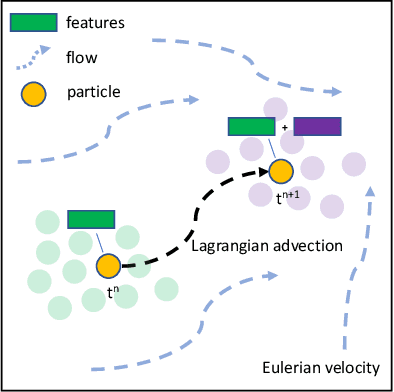

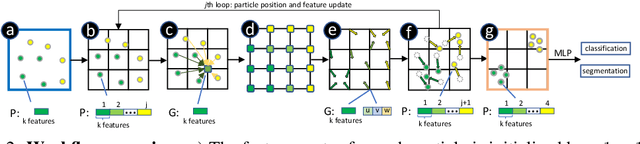

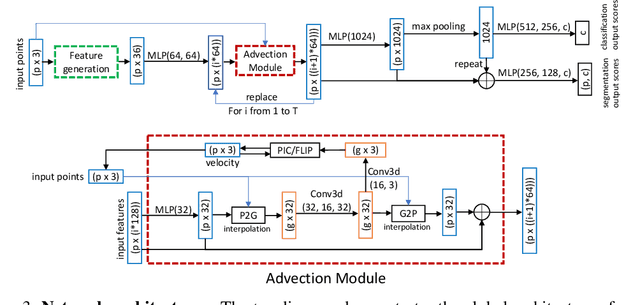

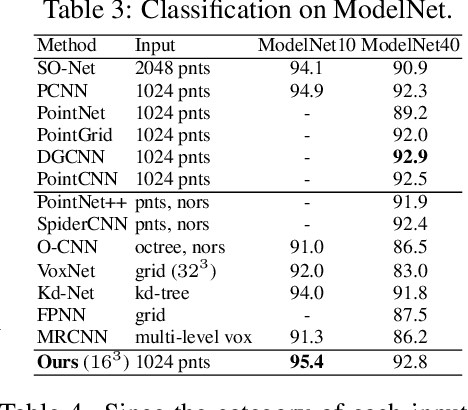

Abstract: This paper presents a novel physics-inspired deep learning approach for point cloud processing, motivated by natural flow phenomena in fluid mechanics. Our learning architecture jointly defines data in an Eulerian world space, using a static background grid, and in a Lagrangian material space, using moving particles. This Eulerian-Lagrangian representation lets us naturally evolve and accumulate particle features using flow velocities generated from a generalized, high-dimensional force field. We demonstrate the efficacy of this system by solving various point cloud classification and segmentation problems with state-of-the-art performance. The entire geometric reservoir and data flow mimic the pipeline of the classic PIC/FLIP scheme for modeling natural flow, bridging the disciplines of geometric machine learning and physical simulation.
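The PIC/FLIP analogy rests on two transfers. Scattering particle features to a background grid moves data from Lagrangian to Eulerian form. Interpolating a grid velocity back to particles to advect them moves data from Eulerian to Lagrangian form. The minimal 1D sketch below shows both. It uses scalar features, linear hat weights, and a non-periodic domain [0, n_cells * dx], and all function names are hypothetical. AdvectiveNet itself works with learned high-dimensional features and force fields, which this sketch does not model.

```python
import numpy as np


def _cell_and_frac(x, dx, n_cells):
    """Index of the grid node left of each particle and the fractional offset t in [0, 1]."""
    s = x / dx
    i = np.clip(np.floor(s).astype(int), 0, n_cells - 1)
    return i, s - i


def particles_to_grid(x, feat, dx, n_cells):
    """Scatter particle features to n_cells + 1 grid nodes with linear (hat) weights."""
    i, t = _cell_and_frac(x, dx, n_cells)
    grid = np.zeros(n_cells + 1)
    weight = np.zeros(n_cells + 1)
    np.add.at(grid, i, (1 - t) * feat)     # np.add.at accumulates repeated indices
    np.add.at(grid, i + 1, t * feat)
    np.add.at(weight, i, 1 - t)
    np.add.at(weight, i + 1, t)
    return grid, weight                    # grid / weight gives a normalized field where weight > 0


def grid_to_particles(x, grid_val, dx, n_cells):
    """Linearly interpolate grid-node values back to particle positions."""
    i, t = _cell_and_frac(x, dx, n_cells)
    return (1 - t) * grid_val[i] + t * grid_val[i + 1]


def advect(x, grid_vel, dx, n_cells, dt):
    """Move particles with the grid velocity interpolated to them, clamped to the domain."""
    v = grid_to_particles(x, grid_vel, dx, n_cells)
    return np.clip(x + dt * v, 0.0, n_cells * dx)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_cells, dx = 16, 1.0 / 16
    x = rng.uniform(0.0, 1.0, size=200)
    grid, weight = particles_to_grid(x, rng.normal(size=200), dx, n_cells)
    x = advect(x, np.full(n_cells + 1, 0.1), dx, n_cells, dt=0.5)
    print(grid.shape, x.min(), x.max())
```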
