Xiao-Yun Zhou

Real-time Surgical Environment Enhancement for Robot-Assisted Minimally Invasive Surgery Based on Super-Resolution

Nov 08, 2020

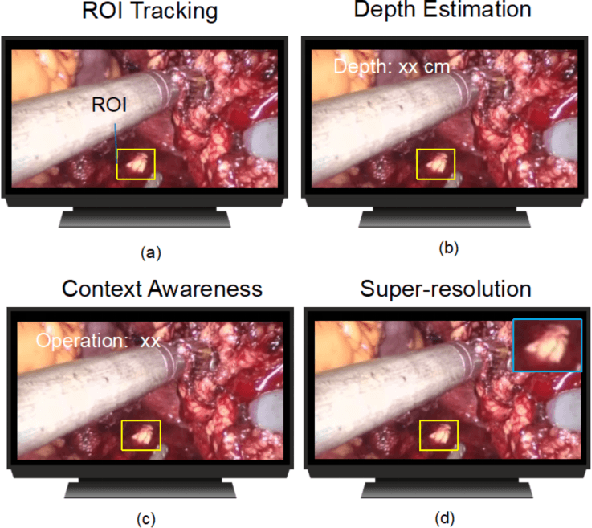

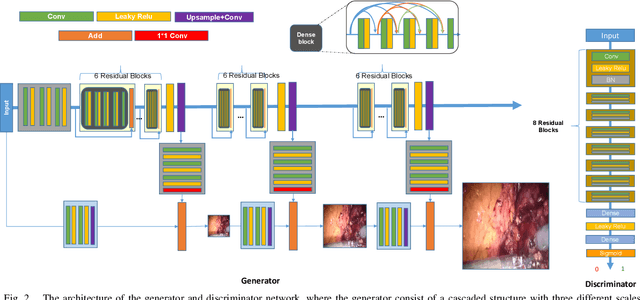

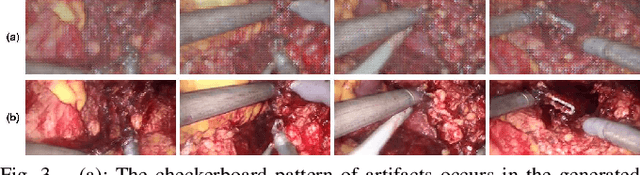

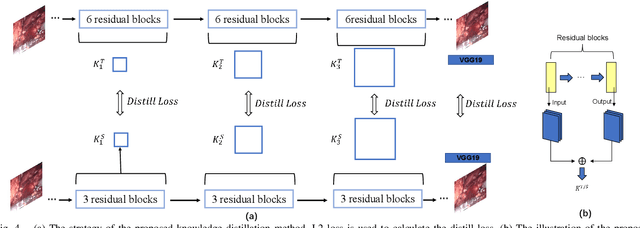

Abstract: In Robot-Assisted Minimally Invasive Surgery (RAMIS), a camera assistant is normally required to control the position and zooming ratio of the laparoscope, following the surgeon's instructions. However, moving the laparoscope frequently may lead to unstable and suboptimal views, while the adjustment of zooming ratio may interrupt the workflow of the surgical operation. To this end, we propose a multi-scale Generative Adversarial Network (GAN)-based video super-resolution method to construct a framework for automatic zooming ratio adjustment. It can provide automatic real-time zooming for high-quality visualization of the Region Of Interest (ROI) during the surgical operation. In the pipeline of the framework, the Kernel Correlation Filter (KCF) tracker is used for tracking the tips of the surgical tools, while the Semi-Global Block Matching (SGBM)-based depth estimation and Recurrent Neural Network (RNN)-based context-awareness are developed to determine the upscaling ratio for zooming. The framework is validated with the JIGSAW dataset and Hamlyn Centre Laparoscopic/Endoscopic Video Datasets, with results demonstrating its practicability.
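As a rough illustration of how a depth estimate could feed a zoom decision, the sketch below converts a stereo disparity into depth with the standard pinhole relation Z = f * B / d and then picks an upscaling ratio from a small set. The function names, the depth thresholds and the candidate ratios are all hypothetical and are not taken from the paper, which additionally uses RNN-based context-awareness that is not modelled here.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_mm):
    """Standard pinhole stereo relation: depth Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_mm / disparity_px


def choose_upscaling_ratio(depth_mm, near_mm=50.0, far_mm=150.0, ratios=(2, 4, 8)):
    """Hypothetical rule: the farther the tracked tool tip, the larger the zoom.

    The thresholds and ratios are placeholders for illustration only.
    """
    if depth_mm <= near_mm:
        return ratios[0]
    if depth_mm >= far_mm:
        return ratios[-1]
    return ratios[1]


if __name__ == "__main__":
    # Example values are made up: 20 px disparity, 1000 px focal length, 5 mm baseline.
    depth = depth_from_disparity(20.0, 1000.0, 5.0)
    print(depth, choose_upscaling_ratio(depth))
```

In a real pipeline the disparity would come from a block-matching step evaluated at the tracked tool-tip location; here it is passed in directly to keep the sketch self-contained.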

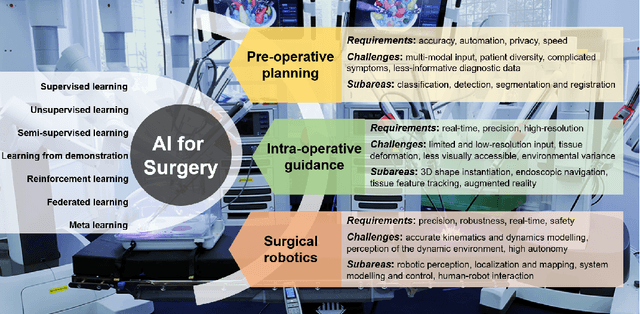

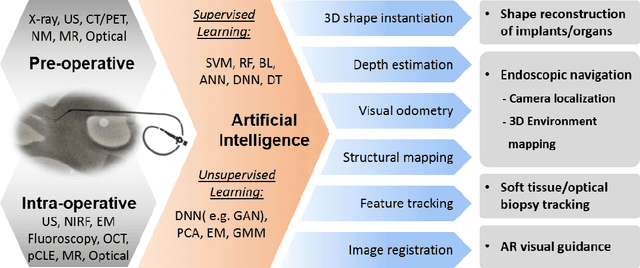

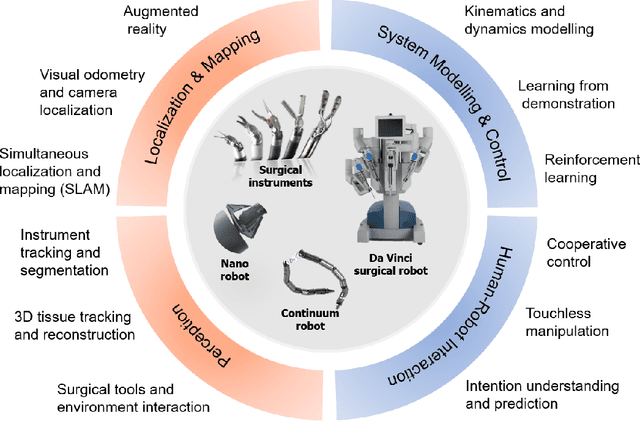

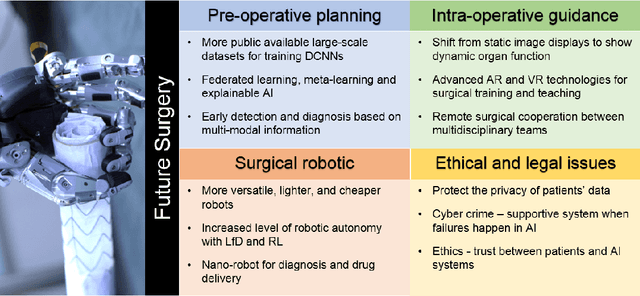

Artificial Intelligence in Surgery

Dec 23, 2019

Abstract: Artificial Intelligence (AI) is gradually changing the practice of surgery with the advanced technological development of imaging, navigation and robotic intervention. In this article, the recent successful and influential applications of AI in surgery are reviewed, from pre-operative planning and intra-operative guidance to the integration of surgical robots. We conclude by summarizing the current state, emerging trends and major challenges in the future development of AI in surgery.

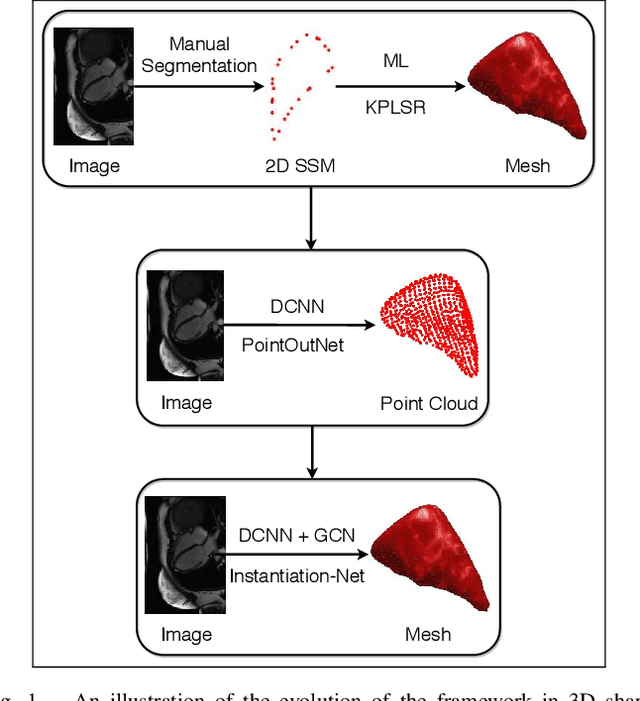

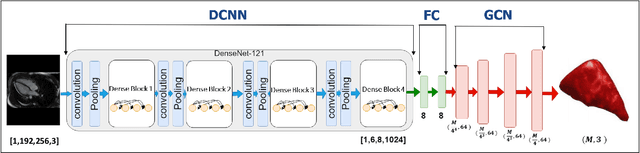

Instantiation-Net: 3D Mesh Reconstruction from Single 2D Image for Right Ventricle

Sep 16, 2019

Abstract: 3D shape instantiation, which reconstructs the 3D shape of a target from limited 2D images or projections, is an emerging technique for surgical intervention. It upgrades the less informative 2D navigation schemes currently used in robot-assisted Minimally Invasive Surgery (MIS) to 3D navigation. Previously, a general and registration-free framework was proposed for 3D shape instantiation based on Kernel Partial Least Square Regression (KPLSR), requiring manually segmented anatomical structures as a prerequisite. Two hyper-parameters, the Gaussian width and the component number, also need to be carefully adjusted. A Deep Convolutional Neural Network (DCNN)-based framework has also been proposed to reconstruct a 3D point cloud from a single 2D image, with end-to-end and fully automatic learning. In this paper, an Instantiation-Net is proposed to reconstruct the 3D mesh of a target from a single 2D image, using a DCNN to extract features from the 2D image, a Graph Convolutional Network (GCN) to reconstruct the 3D mesh, and Fully Connected (FC) layers to connect the DCNN to the GCN. Detailed validation was performed to demonstrate the practical strength of the method and its potential clinical use.

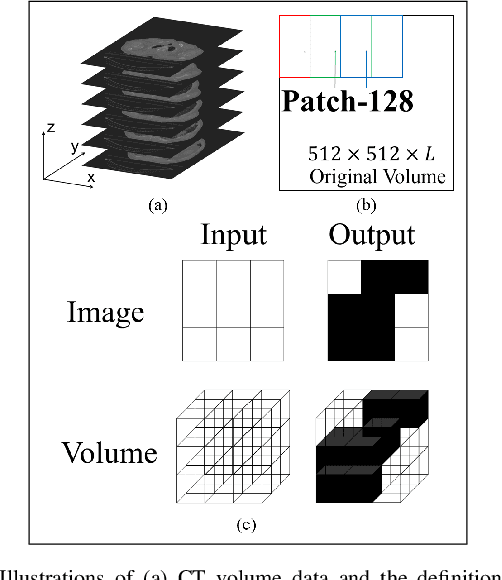

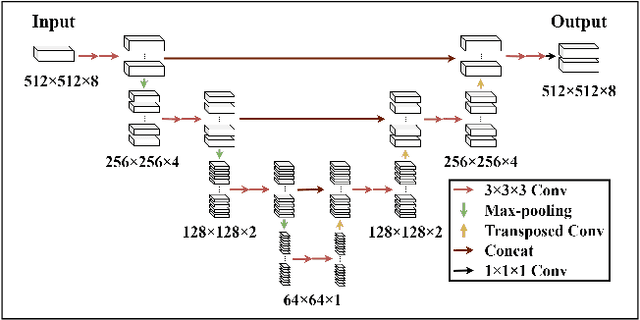

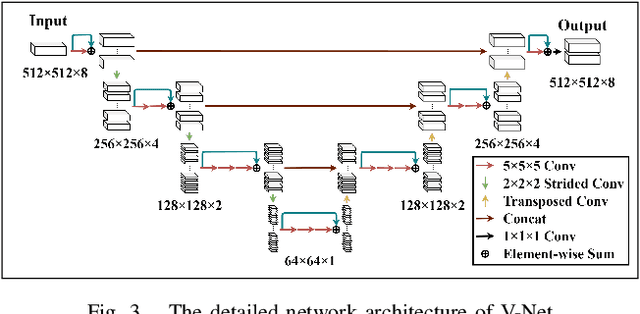

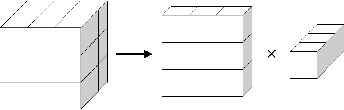

Z-Net: an Asymmetric 3D DCNN for Medical CT Volume Segmentation

Sep 16, 2019

Abstract: Accurate volume segmentation from the Computed Tomography (CT) scan is a common prerequisite for pre-operative planning, intra-operative guidance and quantitative assessment of therapeutic outcomes in robot-assisted Minimally Invasive Surgery (MIS). The use of a 3D Deep Convolutional Neural Network (DCNN) is a viable solution for this task but is memory intensive. Patch division can mitigate this issue in practice, but can cause discontinuities between adjacent patches and severe class imbalances within individual sub-volumes. This paper presents a new patch division approach, Patch-512, which tackles the class-imbalance issue by preserving a full field-of-view of the objects in the XY planes. To achieve better segmentation results based on these asymmetric patches, a 3D DCNN architecture using asymmetrical separable convolutions is proposed. The proposed network, called Z-Net, can be seamlessly integrated into existing 3D DCNNs such as 3D U-Net and V-Net, for improved volume segmentation. Detailed validation of the method is provided for CT aortic, liver and lung segmentation, demonstrating the effectiveness and practical value of the method for intra-operative 3D navigation in robot-assisted MIS.
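The idea of keeping the full XY field-of-view and dividing only along Z can be sketched as an index computation. The helper below is a minimal illustration, not the authors' implementation: the function name, the slab depth, the stride and the rule of shifting the last slab back so that it ends at the volume boundary are all assumptions.

```python
def z_slab_ranges(num_slices, slab_depth, stride=None):
    """Return (start, stop) slice ranges along Z; XY is never divided.

    Hypothetical helper: the last slab is shifted back so that it ends
    exactly at the final slice, which may overlap the previous slab.
    """
    if stride is None:
        stride = slab_depth
    if slab_depth <= 0 or stride <= 0:
        raise ValueError("slab_depth and stride must be positive")
    if num_slices <= slab_depth:
        return [(0, num_slices)]
    starts = list(range(0, num_slices - slab_depth + 1, stride))
    if starts[-1] + slab_depth < num_slices:
        starts.append(num_slices - slab_depth)
    return [(s, s + slab_depth) for s in starts]


if __name__ == "__main__":
    # Each range would index a patch of shape (512, 512, stop - start).
    for start, stop in z_slab_ranges(num_slices=100, slab_depth=32):
        print((512, 512, stop - start), "slices", start, "to", stop - 1)
```

Because every patch spans the whole axial plane, the patches are strongly asymmetric (wide in XY, thin in Z), which is the situation the abstract's asymmetrical separable convolutions are meant to address.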

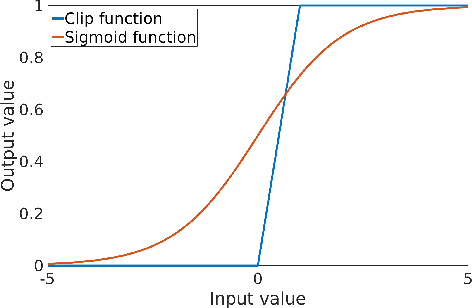

U-Net Training with Instance-Layer Normalization

Aug 25, 2019

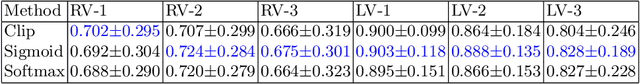

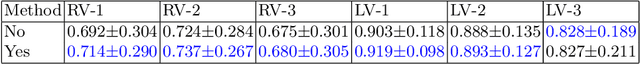

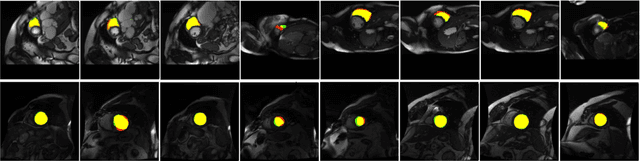

Abstract: Normalization layers are essential in a Deep Convolutional Neural Network (DCNN). Various normalization methods have been proposed. The statistics used to normalize the feature maps can be computed at batch, channel, or instance level. However, in most existing methods, the normalization for each layer is fixed. Batch-Instance Normalization (BIN) is one of the first proposed methods that combines two different normalization methods and achieves diverse normalization for different layers. However, two potential issues exist in BIN: first, the Clip function is not differentiable at input values of 0 and 1; second, the combined feature map does not have a normalized distribution, which is harmful for signal propagation in a DCNN. In this paper, an Instance-Layer Normalization (ILN) layer is proposed by using the Sigmoid function for the feature map combination, and cascading group normalization. The performance of ILN is validated on image segmentation of the Right Ventricle (RV) and Left Ventricle (LV) using U-Net as the network architecture. The results show that the proposed ILN outperforms previous traditional and popular normalization methods with noticeable accuracy improvements for most validations, supporting the effectiveness of the proposed ILN.
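The two ingredients named in the abstract, a Sigmoid gate for combining feature maps and a cascaded normalization, can be sketched for a single sample as follows. This is an illustrative reading rather than the paper's exact formulation: the gate parameter `rho`, the function names and the use of a one-group normalization (equivalent to layer normalization) as a stand-in for the cascaded group normalization are assumptions, and learnable scale and shift are omitted.

```python
import math


def _normalize(values, eps=1e-5):
    """Shift to zero mean and scale to approximately unit variance."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(var + eps) for v in values]


def instance_norm(channels):
    """Normalize each channel of one sample separately."""
    return [_normalize(ch) for ch in channels]


def layer_norm(channels):
    """Normalize all channels of one sample together."""
    flat = _normalize([v for ch in channels for v in ch])
    width = len(channels[0])
    return [flat[i * width:(i + 1) * width] for i in range(len(channels))]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gated_instance_layer_norm(channels, rho):
    """Mix IN and LN outputs with a smooth gate, then renormalize.

    sigmoid(rho) is differentiable everywhere, unlike a clip to [0, 1].
    The final layer_norm call stands in for the cascaded group normalization.
    """
    gate = sigmoid(rho)
    mixed = [
        [gate * a + (1.0 - gate) * b for a, b in zip(ch_in, ch_ln)]
        for ch_in, ch_ln in zip(instance_norm(channels), layer_norm(channels))
    ]
    return layer_norm(mixed)


if __name__ == "__main__":
    sample = [[1.0, 2.0, 3.0, 4.0], [10.0, 20.0, 30.0, 40.0]]
    print(gated_instance_layer_norm(sample, rho=0.0))
```

The final renormalization is what restores a zero-mean, unit-variance distribution after the weighted mix, which relates to the second issue the abstract raises about BIN.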

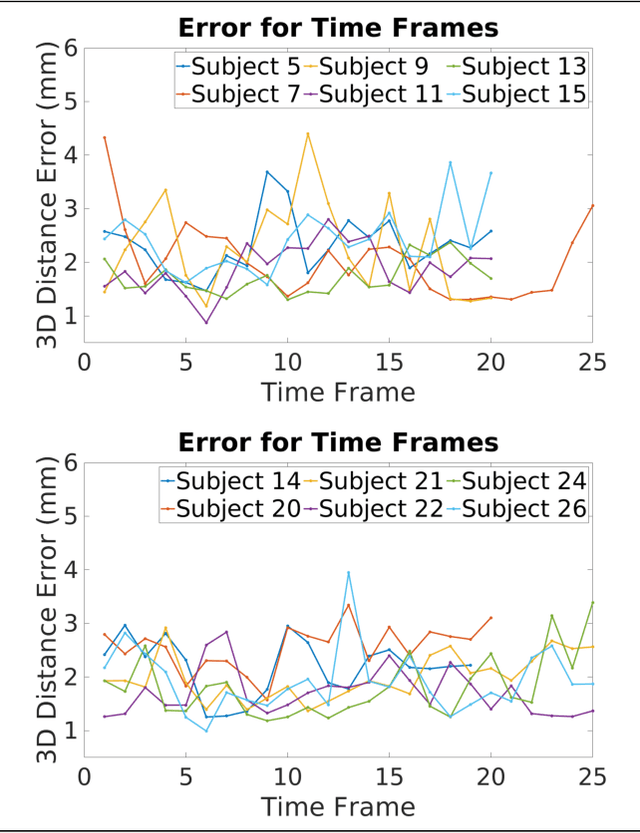

One-stage Shape Instantiation from a Single 2D Image to 3D Point Cloud

Jul 24, 2019

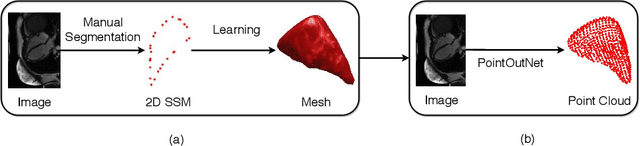

Abstract: Shape instantiation, which predicts the 3D shape of a dynamic target from one or more 2D images, is important for real-time intra-operative navigation. Previously, a general shape instantiation framework was proposed with manual image segmentation to generate a 2D Statistical Shape Model (SSM) and with Kernel Partial Least Square Regression (KPLSR) to learn the relationship between the 2D and 3D SSM for 3D shape prediction. In this paper, the two-stage shape instantiation is improved to be one-stage. PointOutNet with 19 convolutional layers and three fully-connected layers is used as the network structure and Chamfer distance is used as the loss function to predict the 3D target point cloud from a single 2D image. With the proposed one-stage shape instantiation algorithm, a spontaneous image-to-point cloud training and inference can be achieved. A dataset from 27 Right Ventricle (RV) subjects, corresponding to 609 experiments, was used to validate the proposed one-stage shape instantiation algorithm. An average point cloud-to-point cloud (PC-to-PC) error of 1.72 mm has been achieved, which is comparable to the PLSR-based (1.42 mm) and KPLSR-based (1.31 mm) two-stage shape instantiation algorithms.
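For readers unfamiliar with the Chamfer distance, the sketch below computes one common variant: the mean squared distance from each point to its nearest neighbour in the other cloud, summed over both directions. Whether the paper uses squared or unsquared distances, or sums rather than means, is not stated in the abstract, so this particular form is an assumption.

```python
def _squared_distance(p, q):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def chamfer_distance(cloud_a, cloud_b):
    """Symmetric Chamfer distance between two non-empty point clouds.

    Brute-force O(len(a) * len(b)) nearest-neighbour search, for clarity only.
    """
    if not cloud_a or not cloud_b:
        raise ValueError("point clouds must be non-empty")

    def one_way(src, dst):
        return sum(min(_squared_distance(p, q) for q in dst) for p in src) / len(src)

    return one_way(cloud_a, cloud_b) + one_way(cloud_b, cloud_a)


if __name__ == "__main__":
    predicted = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    target = [(0.0, 0.0, 0.0)]
    print(chamfer_distance(predicted, target))
```

The measure needs no point-to-point correspondence between the two clouds, which is why it suits a network that outputs an unordered point set.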

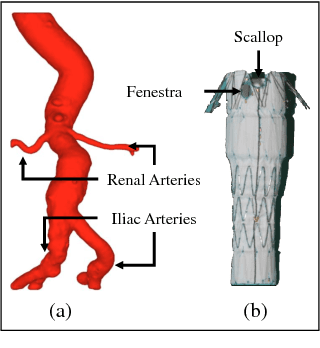

Real-time 3D Shape Instantiation for Partially-deployed Stent Segment from a Single 2D Fluoroscopic Image in Robot-assisted Fenestrated Endovascular Aortic Repair

Feb 28, 2019

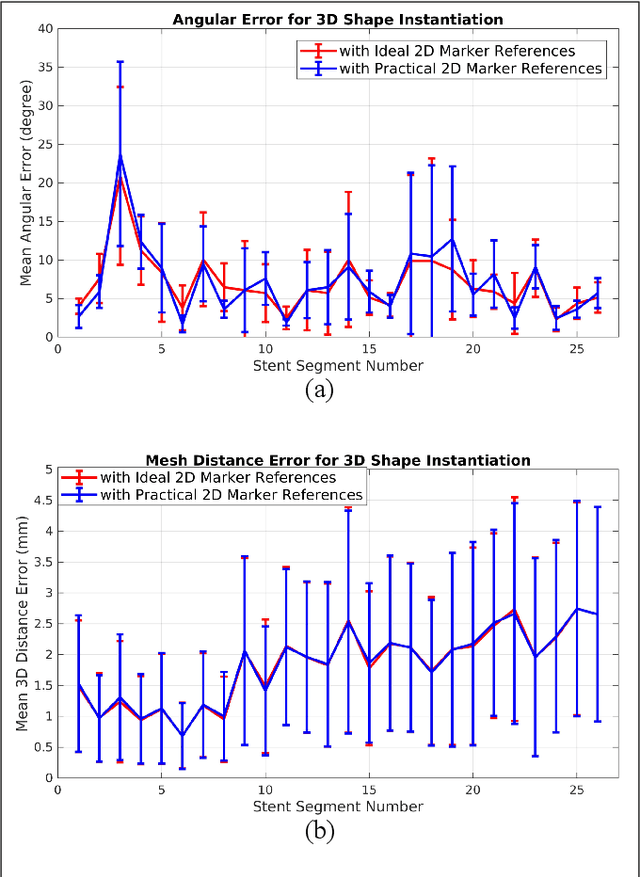

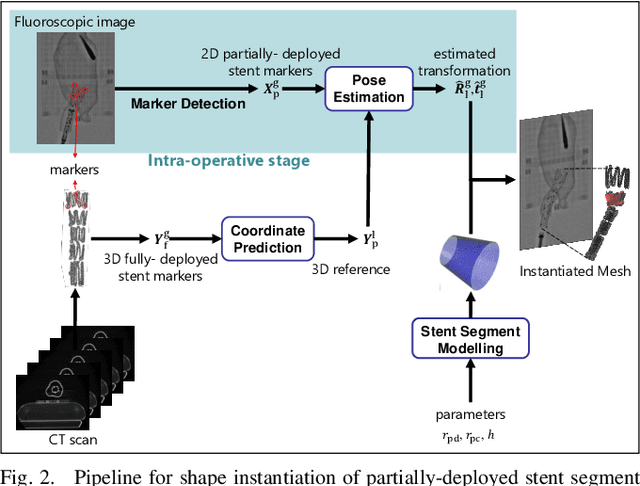

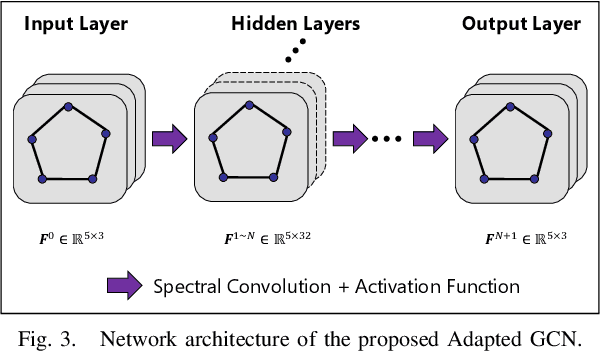

Abstract: In robot-assisted Fenestrated Endovascular Aortic Repair (FEVAR), accurate alignment of stent graft fenestrations or scallops with aortic branches is essential for establishing complete blood flow perfusion. Current navigation is largely based on 2D fluoroscopic images, which lack 3D anatomical information, leading to longer operation times and a high risk of radiation exposure. Previously, 3D shape instantiation frameworks for real-time 3D shape reconstruction of a fully-deployed or fully-compressed stent graft from a single 2D fluoroscopic image have been proposed for 3D navigation in robot-assisted FEVAR. However, these methods could not instantiate partially-deployed stent segments, as the 3D marker references are unknown. In this paper, an adapted Graph Convolutional Network (GCN) is proposed to predict 3D marker references from 3D fully-deployed markers. As the original GCN was designed for classification, the coarsening layers are removed and the softmax function at the network end is replaced with a linear mapping for the regression task. The derived 3D and 2D marker references are used to instantiate the partially-deployed stent segment shape with the existing 3D shape instantiation framework. Validations were performed on three commonly used stent grafts and five patient-specific 3D printed aortic aneurysm phantoms. Comparable performances with average mesh distance errors of 1 to 3 mm and average angular errors of around 7 degrees were achieved.

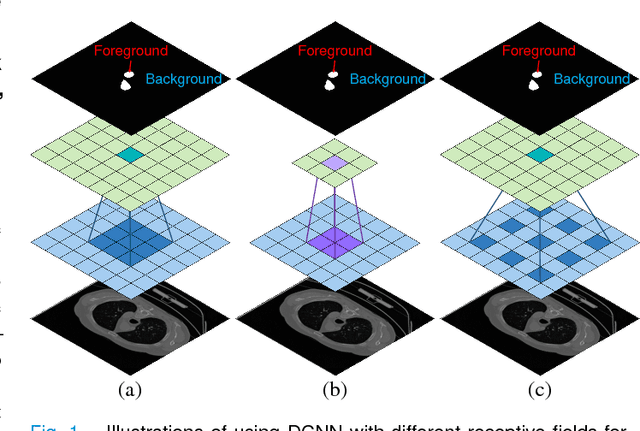

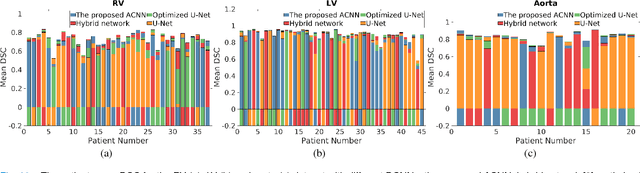

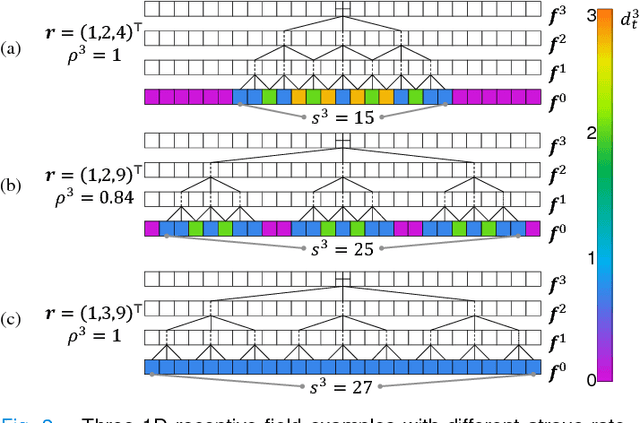

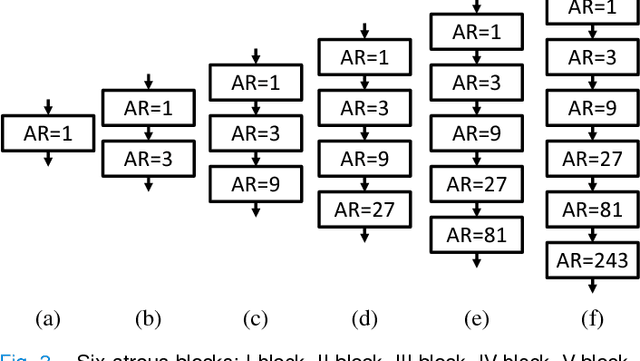

Atrous Convolutional Neural Network (ACNN) for Biomedical Semantic Segmentation with Dimensionally Lossless Feature Maps

Jan 26, 2019

Abstract: Deep Convolutional Neural Networks (DCNNs) are showing impressive performances in biomedical semantic segmentation. However, current DCNNs usually use down-sampling layers to increase the receptive field significantly and to gain abstract semantic information. These down-sampling layers also decrease the spatial dimension of feature maps, which is harmful for semantic segmentation. Atrous convolution is an alternative to the down-sampling layer. It can increase the receptive field significantly while maintaining the spatial dimension of feature maps. In this paper, firstly, an atrous rate setting is proposed to achieve the largest and fully-covered receptive field with a minimum number of layers. Secondly, six atrous blocks, three shortcut connections and four normalization methods are explored to select the optimal atrous block, shortcut connection and normalization method. Finally, a new, dimensionally lossless DCNN, the Atrous Convolutional Neural Network (ACNN), is proposed using cascaded atrous II-blocks, residual learning and Fine Group Normalization (FGN). The Right Ventricle (RV), Left Ventricle (LV) and aorta data are used for the validation. The results show that the proposed ACNN achieves segmentation Dice Similarity Coefficients (DSCs) comparable to those of U-Net, optimized U-Net and the hybrid network, but uses far fewer parameters. This advantage is considered to benefit from the dimensionally lossless feature maps.
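What "largest and fully-covered receptive field" means can be checked numerically in one dimension. The sketch below computes the receptive field of a stack of stride-1 atrous convolutions and tests whether every position inside it actually contributes to the output. The function names and the example rate lists are illustrative choices, not the rate setting proposed in the paper, and odd kernel sizes are assumed.

```python
def receptive_field(rates, kernel_size=3):
    """Receptive field width of stacked stride-1 atrous convolutions (1D)."""
    return 1 + sum((kernel_size - 1) * r for r in rates)


def covered_offsets(rates, kernel_size=3):
    """Input offsets that can reach the centre output; odd kernel sizes only."""
    half = kernel_size // 2
    offsets = {0}
    for rate in rates:
        offsets = {o + k * rate for o in offsets for k in range(-half, half + 1)}
    return offsets


def is_fully_covered(rates, kernel_size=3):
    """True when no position inside the receptive field is skipped."""
    return len(covered_offsets(rates, kernel_size)) == receptive_field(rates, kernel_size)


if __name__ == "__main__":
    for rates in ([1, 1, 1], [2, 2, 2], [1, 3, 9]):
        print(rates, receptive_field(rates), is_fully_covered(rates))
```

In this example, three layers with rates 1, 3, 9 span 27 positions with no gaps, whereas rates 2, 2, 2 span 13 positions but only touch the even offsets, leaving holes in the coverage.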

3D Path Planning from a Single 2D Fluoroscopic Image for Robot Assisted Fenestrated Endovascular Aortic Repair

Sep 16, 2018

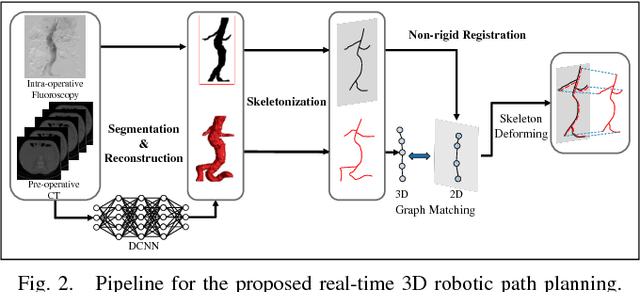

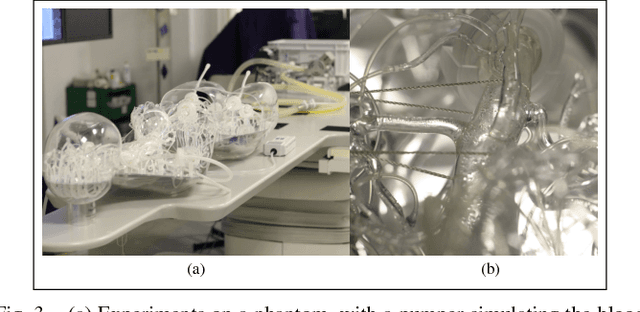

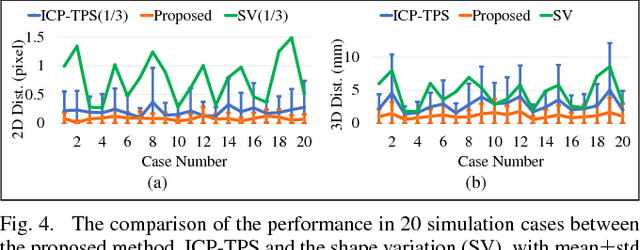

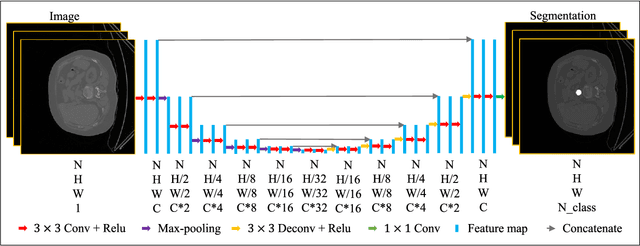

Abstract: The current standard of intra-operative navigation during Fenestrated Endovascular Aortic Repair (FEVAR) calls for 3D alignment between inserted devices and aortic branches. Navigation, commonly performed via 2D fluoroscopic images, lacks anatomical information, resulting in longer operation hours and radiation exposure. In this paper, a framework for real-time 3D robotic path planning from a single 2D fluoroscopic image of an Abdominal Aortic Aneurysm (AAA) is introduced. A graph matching method is proposed to establish the correspondence between the 3D pre-operative and 2D intra-operative AAA skeletons, and then the two skeletons are registered by skeleton deformation and regularization with respect to skeleton length and smoothness. Furthermore, deep learning was used to segment the 3D pre-operative AAA from Computed Tomography (CT) scans to facilitate the framework automation. Simulation, phantom and patient AAA data sets have been used to validate the proposed framework. A 3D distance error of 2 mm was achieved in the phantom setup. Performance advantages were also achieved in terms of accuracy, robustness and time-efficiency. All the code will be open source.

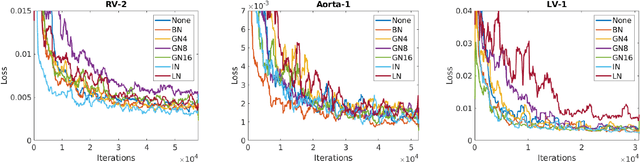

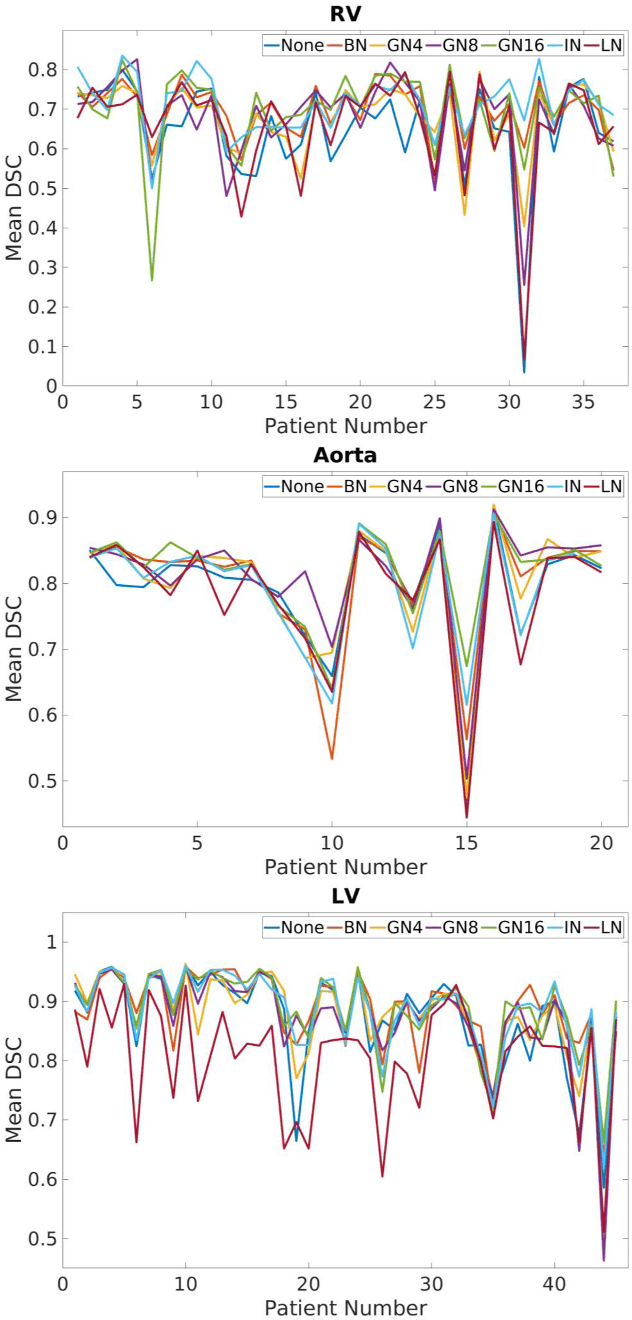

Normalization in Training Deep Convolutional Neural Networks for 2D Bio-medical Semantic Segmentation

Sep 11, 2018

Abstract: 2D bio-medical semantic segmentation is important for surgical robotic vision. Segmentation methods based on Deep Convolutional Neural Networks (DCNNs) outperform conventional methods in terms of both accuracy and automation. One common issue in training a DCNN is the internal covariate shift, where the convolutional kernels are trained to fit the distribution change of the input features, hence both the training speed and performance are decreased. Batch Normalization (BN) is the first proposed method for addressing internal covariate shift and is widely used. Later, Instance Normalization (IN) and Layer Normalization (LN) were proposed, but they are used much less than BN. Group Normalization (GN) was proposed very recently and has not yet been applied to 2D bio-medical semantic segmentation. Most DCNN-based bio-medical semantic segmentation adopts BN as the normalization method by default, without reviewing its performance. In this paper, four normalization methods (BN, IN, LN and GN) are compared and reviewed in detail specifically for 2D bio-medical semantic segmentation. The results show that GN outperformed the other three normalization methods in 2D bio-medical semantic segmentation regarding both accuracy and robustness. U-Net is adopted as the basic DCNN structure. 37 RVs from both asymptomatic and Hypertrophic Cardiomyopathy (HCM) subjects and 20 aortas from asymptomatic subjects were used for the validation. The code and trained models will be available online.
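The normalization methods compared here differ mainly in which values share a mean and variance. The sketch below shows Group Normalization for a single sample, with each channel given as a flat list of values. It is a minimal illustration under stated assumptions: the function name and the `num_groups` parameter are hypothetical, learnable scale and shift are omitted, and BN is not shown because it needs statistics across a batch.

```python
import math


def group_norm(channels, num_groups, eps=1e-5):
    """Normalize one sample by sharing statistics within each channel group.

    num_groups == 1 gives layer-normalization statistics, and
    num_groups == len(channels) gives instance-normalization statistics.
    """
    if num_groups <= 0 or len(channels) % num_groups != 0:
        raise ValueError("channel count must be divisible by num_groups")
    per_group = len(channels) // num_groups
    out = []
    for g in range(num_groups):
        group = channels[g * per_group:(g + 1) * per_group]
        flat = [v for ch in group for v in ch]
        mean = sum(flat) / len(flat)
        var = sum((v - mean) ** 2 for v in flat) / len(flat)
        scale = 1.0 / math.sqrt(var + eps)
        out.extend([[(v - mean) * scale for v in ch] for ch in group])
    return out


if __name__ == "__main__":
    sample = [[1.0, 2.0], [3.0, 4.0], [10.0, 20.0], [30.0, 40.0]]
    print(group_norm(sample, num_groups=2))
```

Because the statistics are computed per sample, the result does not depend on batch size, unlike BN.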
