Wenshuo Ma

Towards Performance-Enhanced Model-Contrastive Federated Learning using Historical Information in Heterogeneous Scenarios

Feb 12, 2026

Abstract: Federated Learning (FL) enables multiple nodes to collaboratively train a model without sharing raw data. However, FL systems are usually deployed in heterogeneous scenarios, where nodes differ in both data distributions and participation frequencies, which undermines FL performance. To address this issue, this paper proposes PMFL, a performance-enhanced model-contrastive federated learning framework that uses historical training information. Specifically, on the node side, we introduce a novel model-contrastive term into the node optimization objective; it incorporates historical local models to capture stable contrastive points, thereby improving the consistency of model updates under heterogeneous data distributions. On the server side, we use the cumulative participation count of each node to adaptively adjust its aggregation weight, thereby correcting the bias in the global objective caused by differing node participation frequencies. Furthermore, the updated global model incorporates historical global models to reduce performance fluctuations between adjacent rounds. Extensive experiments demonstrate that PMFL achieves superior performance compared with existing FL methods in heterogeneous scenarios.

AABO: Adaptive Anchor Box Optimization for Object Detection via Bayesian Sub-sampling

Jul 18, 2020

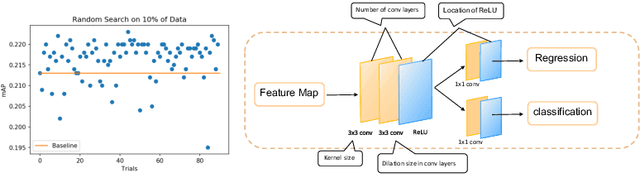

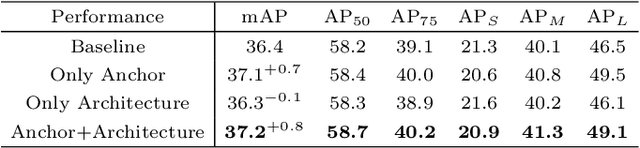

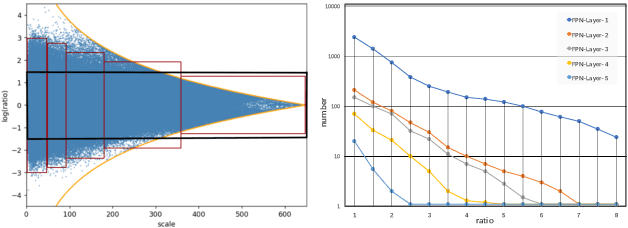

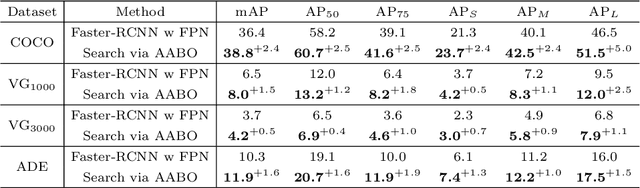

Abstract: Most state-of-the-art object detection systems follow an anchor-based paradigm. Anchor boxes are densely proposed over the images, and the network is trained to predict the box position offsets as well as the classification confidence. Existing systems pre-define anchor box shapes and sizes, and ad-hoc heuristic adjustments are used to define the anchor configurations. However, this might be sub-optimal or even wrong when a new dataset or a new model is adopted. In this paper, we study the problem of automatically optimizing anchor boxes for object detection. We first demonstrate that the number of anchors, anchor scales, and anchor ratios are crucial factors for a reliable object detection system. By carefully analyzing the existing bounding box patterns on the feature hierarchy, we design a flexible and tight hyper-parameter space for anchor configurations. We then propose a novel hyper-parameter optimization method named AABO to determine more appropriate anchor boxes for a given dataset, in which Bayesian Optimization and a sub-sampling method are combined to achieve precise and efficient anchor configuration optimization. Experiments demonstrate the effectiveness of our proposed method on different detectors and datasets, e.g., achieving around 2.4% mAP improvement on COCO, 1.6% on ADE, and 1.5% on VG. The optimal anchors bring 1.4% to 2.4% mAP improvement on SOTA detectors by optimizing anchor configurations alone, e.g., boosting Mask RCNN from 40.3% to 42.3% and the HTC detector from 46.8% to 48.2%.
