Wadhah Zai El Amri

Do Robots Really Need Anthropomorphic Hands?

Aug 07, 2025

Abstract:Human manipulation skills represent a pinnacle of their voluntary motor functions, requiring the coordination of many degrees of freedom and processing of high-dimensional sensor input to achieve such a high level of dexterity. Thus, we set out to answer whether the human hand, with its associated biomechanical properties, sensors, and control mechanisms, is an ideal that we should strive for in robotics: do we really need anthropomorphic robotic hands? This survey can help practitioners to make the trade-off between hand complexity and potential manipulation skills. We provide an overview of the human hand, a comparison of commercially available robotic and prosthetic hands, and a systematic review of hand mechanisms and skills that they are capable of. This leads to follow-up questions. What is the minimum requirement for mechanisms and sensors to implement most skills that a robot needs? What is missing to reach human-level dexterity? Can we improve upon human dexterity? Although complex five-fingered hands are often used as the ultimate goal for robotic manipulators, they are not necessary for all tasks. We found that wrist flexibility and finger abduction/adduction are important for manipulation capabilities. On the contrary, increasing the number of fingers, actuators, or degrees of freedom is often not necessary. Three fingers are a good compromise between simplicity and dexterity. Non-anthropomorphic hand designs with two opposing pairs of fingers or human hands with six fingers can further increase dexterity, suggesting that the human hand may not be the optimum.

ACROSS: A Deformation-Based Cross-Modal Representation for Robotic Tactile Perception

Nov 13, 2024

Abstract:Tactile perception is essential for human interaction with the environment and is becoming increasingly crucial in robotics. Tactile sensors like the BioTac mimic human fingertips and provide detailed interaction data. Despite its utility in applications like slip detection and object identification, this sensor is now deprecated, making many existing valuable datasets obsolete. However, recreating similar datasets with newer sensor technologies is both tedious and time-consuming. Therefore, it is crucial to adapt these existing datasets for use with new setups and modalities. In response, we introduce ACROSS, a novel framework for translating data between tactile sensors by exploiting sensor deformation information. Our framework first converts the input signals into 3D deformation meshes. We then transition from the 3D deformation mesh of one sensor to the mesh of another, and finally convert the generated 3D deformation mesh into the corresponding output space. We demonstrate our approach on the most challenging problem: going from a low-dimensional tactile representation to a high-dimensional one. In particular, we transfer the tactile signals of a BioTac sensor to DIGIT tactile images. Our approach enables the continued use of valuable datasets and the exchange of data between groups with different setups.

Transferring Tactile Data Across Sensors

Oct 18, 2024

Abstract:Tactile perception is essential for human interaction with the environment and is becoming increasingly crucial in robotics. Tactile sensors like the BioTac mimic human fingertips and provide detailed interaction data. Despite its utility in applications like slip detection and object identification, this sensor is now deprecated, making many existing datasets obsolete. This article introduces a novel method for translating data between tactile sensors by exploiting sensor deformation information rather than output signals. We demonstrate the approach by translating BioTac signals into the DIGIT sensor. Our framework consists of three steps: first, converting signal data into corresponding 3D deformation meshes; second, translating these 3D deformation meshes from one sensor to another; and third, generating output images using the converted meshes. Our approach enables the continued use of valuable datasets.
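
The three steps named in the abstract can be read as a composition of three mappings. The sketch below only illustrates that structure; it is not the authors' implementation. The class name `TranslationPipeline`, the type aliases, and the three toy stage functions are all hypothetical stand-ins for whatever learned models a real system would use.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

# Hypothetical, simplified data types for illustration only.
Signal = Sequence[float]      # raw readings of the source sensor
Mesh = List[List[float]]      # list of [x, y, z] node positions
Image = List[List[float]]     # rows of pixel intensities


@dataclass
class TranslationPipeline:
    """Chains the three stages: signal -> mesh -> mesh -> image."""
    signal_to_mesh: Callable[[Signal], Mesh]
    mesh_to_mesh: Callable[[Mesh], Mesh]
    mesh_to_image: Callable[[Mesh], Image]

    def translate(self, signal: Signal) -> Image:
        source_mesh = self.signal_to_mesh(signal)      # step 1
        target_mesh = self.mesh_to_mesh(source_mesh)   # step 2
        return self.mesh_to_image(target_mesh)         # step 3


# Toy stand-ins (assumptions, not real sensor models).
def toy_signal_to_mesh(signal: Signal) -> Mesh:
    # One node per reading, pushed down by the reading's magnitude.
    return [[float(i), 0.0, -value] for i, value in enumerate(signal)]


def toy_mesh_to_mesh(mesh: Mesh) -> Mesh:
    # Pretend the target sensor surface is half the size of the source.
    return [[0.5 * coord for coord in node] for node in mesh]


def toy_mesh_to_image(mesh: Mesh) -> Image:
    # Render a single-row "image" whose pixels are indentation depths.
    return [[-node[2] for node in mesh]]


pipeline = TranslationPipeline(toy_signal_to_mesh, toy_mesh_to_mesh, toy_mesh_to_image)
image = pipeline.translate([1.0, 2.0])
```

Keeping each stage behind its own callable means any one of them could be swapped out, for example to target a different output sensor, without touching the other two.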

Optimizing BioTac Simulation for Realistic Tactile Perception

Apr 16, 2024

Abstract:Tactile sensing presents a promising opportunity for enhancing the interaction capabilities of today's robots. BioTac is a commonly used tactile sensor that enables robots to perceive and respond to physical tactile stimuli. However, the sensor's non-linearity poses challenges in simulating its behavior. In this paper, we first investigate a BioTac simulation that uses temperature, force, and contact point positions to predict the sensor outputs. We show that training with BioTac temperature readings does not yield accurate sensor output predictions during deployment. Consequently, we test three alternative models, i.e., an XGBoost regressor, a neural network, and a transformer encoder. We train these models without temperature readings and provide a detailed investigation of the window size of the input vectors. We demonstrate that we achieve statistically significant improvements over the baseline network. Furthermore, our results reveal that the XGBoost regressor and transformer outperform traditional feed-forward neural networks in this task. We make all our code and results available online at https://github.com/wzaielamri/Optimizing_BioTac_Simulation.
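
The abstract does not say how the windowed input vectors are built. One plausible reading, shown here purely as an assumption, is that each model input stacks several consecutive samples of force and contact position (with temperature left out). The function name `make_windows` and the four-value sample layout are hypothetical.

```python
from typing import List, Sequence


def make_windows(samples: Sequence[Sequence[float]], window_size: int) -> List[List[float]]:
    """Flatten every run of `window_size` consecutive samples into one input vector."""
    if not 1 <= window_size <= len(samples):
        raise ValueError("window_size must be between 1 and the number of samples")
    windows = []
    for end in range(window_size, len(samples) + 1):
        # Concatenate the samples in [end - window_size, end) in time order.
        flat = [value for step in samples[end - window_size:end] for value in step]
        windows.append(flat)
    return windows


# Hypothetical samples: [force, contact_x, contact_y, contact_z], no temperature.
samples = [
    [0.1, 0.0, 0.0, 1.0],
    [0.2, 0.0, 0.1, 1.0],
    [0.3, 0.1, 0.1, 1.0],
    [0.4, 0.1, 0.2, 1.0],
]
windows = make_windows(samples, window_size=3)
```

With four samples and a window of three, this yields two input vectors of twelve values each. A larger window gives the model more temporal context at the cost of fewer training examples and a wider input.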

A Review of the Role of Causality in Developing Trustworthy AI Systems

Feb 14, 2023

Abstract:State-of-the-art AI models largely lack an understanding of the cause-effect relationship that governs human understanding of the real world. Consequently, these models do not generalize to unseen data, often produce unfair results, and are difficult to interpret. This has led to efforts to improve the trustworthiness aspects of AI models. Recently, causal modeling and inference methods have emerged as powerful tools. This review aims to provide the reader with an overview of causal methods that have been developed to improve the trustworthiness of AI models. We hope that our contribution will motivate future research on causality-based solutions for trustworthy AI.

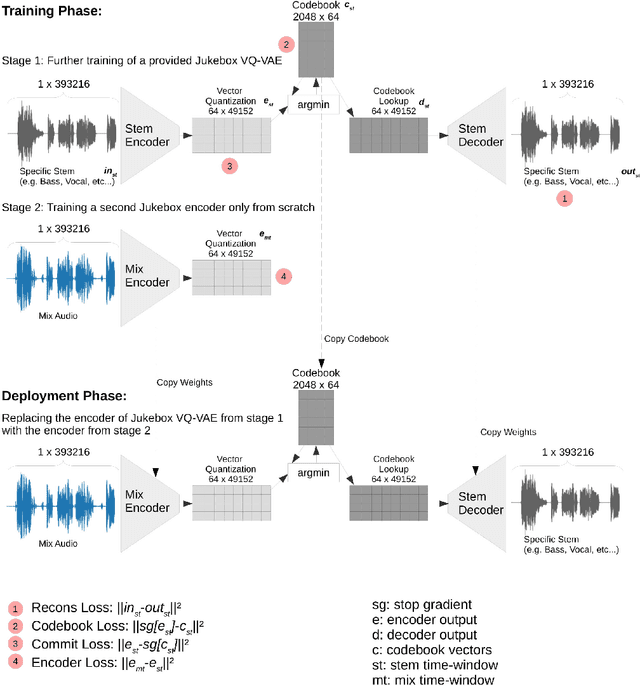

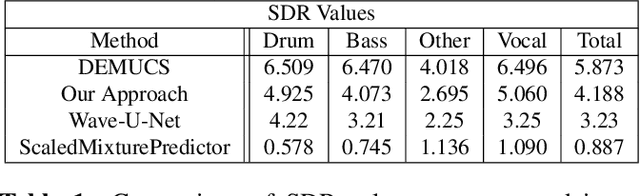

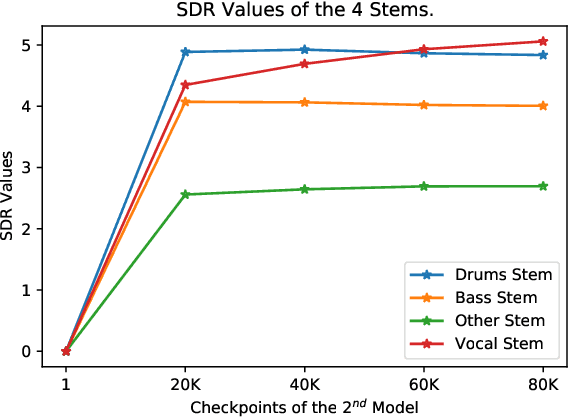

Transfer Learning with Jukebox for Music Source Separation

Nov 28, 2021

Abstract:In this work, we demonstrate how to adapt a publicly available pre-trained Jukebox model for the problem of audio source separation from a single mixed audio channel. Our neural network architecture for transfer learning is fast to train, and the results demonstrate performance comparable to other state-of-the-art approaches. We provide an open-source code implementation of our architecture (https://rebrand.ly/transfer-jukebox-github).
