Venkatesh Thirugnana Sambandham

DrivIng: A Large-Scale Multimodal Driving Dataset with Full Digital Twin Integration

Jan 21, 2026

Abstract: Perception is a cornerstone of autonomous driving, enabling vehicles to understand their surroundings and make safe, reliable decisions. Developing robust perception algorithms requires large-scale, high-quality datasets that cover diverse driving conditions and support thorough evaluation. Existing datasets often lack a high-fidelity digital twin, limiting systematic testing, edge-case simulation, sensor modification, and sim-to-real evaluations. To address this gap, we present DrivIng, a large-scale multimodal dataset with a complete geo-referenced digital twin of a ~18 km route spanning urban, suburban, and highway segments. Our dataset provides continuous recordings from six RGB cameras, one LiDAR, and high-precision ADMA-based localization, captured across day, dusk, and night. All sequences are annotated at 10 Hz with 3D bounding boxes and track IDs across 12 classes, yielding ~1.2 million annotated instances. Alongside the benefits of a digital twin, DrivIng enables a 1-to-1 transfer of real traffic into simulation, preserving agent interactions while enabling realistic and flexible scenario testing. To support reproducible research and robust validation, we benchmark DrivIng with state-of-the-art perception models and publicly release the dataset, digital twin, HD map, and codebase.

Towards Transformer-based Homogenization of Satellite Imagery for Landsat-8 and Sentinel-2

Oct 14, 2022

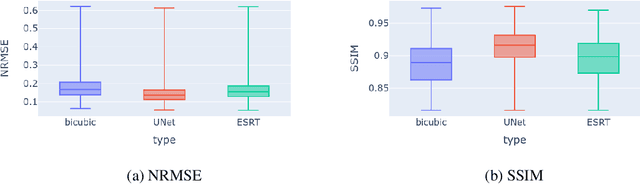

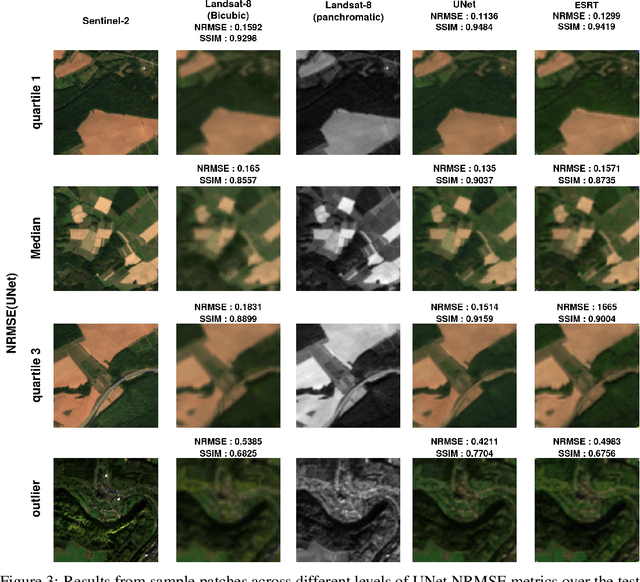

Abstract: Landsat-8 (NASA) and Sentinel-2 (ESA) are two prominent multi-spectral imaging satellite projects that provide publicly available data. The multi-spectral imaging sensors of the satellites capture images of the earth's surface in the visible and infrared region of the electromagnetic spectrum. Since the majority of the earth's surface is constantly covered with clouds, which are not transparent at these wavelengths, many images do not provide much information. To increase the temporal availability of cloud-free images of a certain area, one can combine the observations from multiple sources. However, the sensors of satellites might differ in their properties, making the images incompatible. This work provides a first glance at the possibility of using a transformer-based model to reduce the spectral and spatial differences between observations from both satellite projects. We compare the results to a model based on a fully convolutional UNet architecture. Somewhat surprisingly, we find that, while deep models outperform classical approaches, the UNet significantly outperforms the transformer in our experiments.
