Vandana Mukherjee

DECASTE: Unveiling Caste Stereotypes in Large Language Models through Multi-Dimensional Bias Analysis

May 20, 2025Abstract:Recent advancements in large language models (LLMs) have revolutionized natural language processing (NLP) and expanded their applications across diverse domains. However, despite their impressive capabilities, LLMs have been shown to reflect and perpetuate harmful societal biases, including those based on ethnicity, gender, and religion. A critical and underexplored issue is the reinforcement of caste-based biases, particularly towards India's marginalized caste groups such as Dalits and Shudras. In this paper, we address this gap by proposing DECASTE, a novel, multi-dimensional framework designed to detect and assess both implicit and explicit caste biases in LLMs. Our approach evaluates caste fairness across four dimensions: socio-cultural, economic, educational, and political, using a range of customized prompting strategies. By benchmarking several state-of-the-art LLMs, we reveal that these models systematically reinforce caste biases, with significant disparities observed in the treatment of oppressed versus dominant caste groups. For example, bias scores are notably elevated when comparing Dalits and Shudras with dominant caste groups, reflecting societal prejudices that persist in model outputs. These results expose the subtle yet pervasive caste biases in LLMs and emphasize the need for more comprehensive and inclusive bias evaluation methodologies that assess the potential risks of deploying such models in real-world contexts.

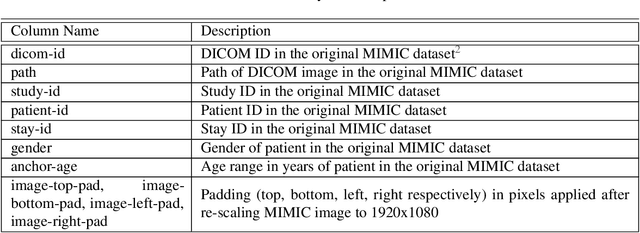

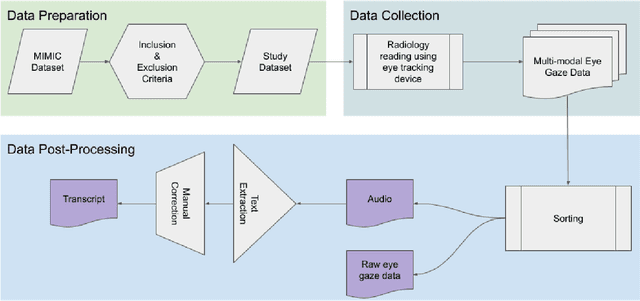

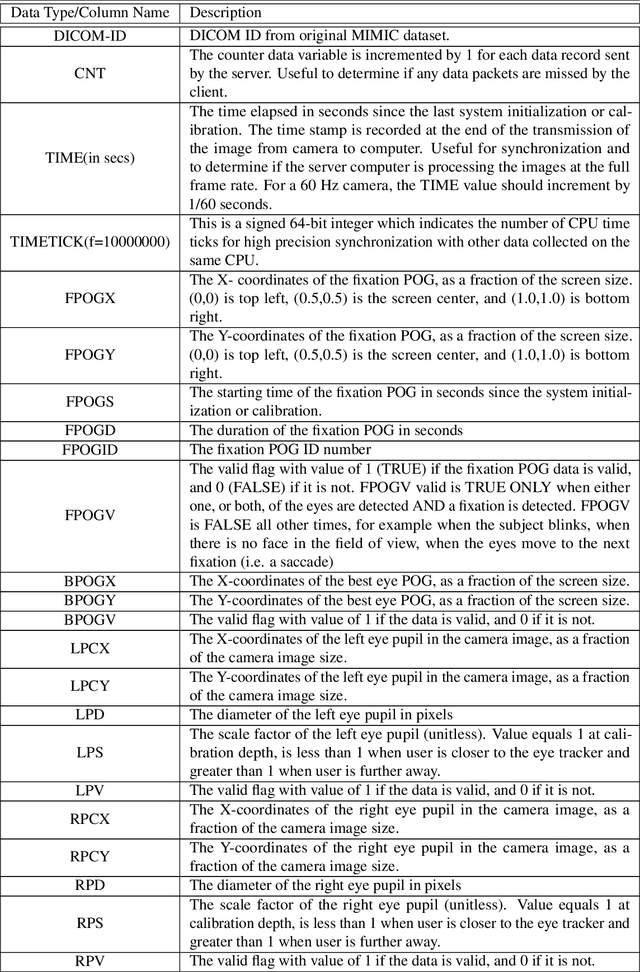

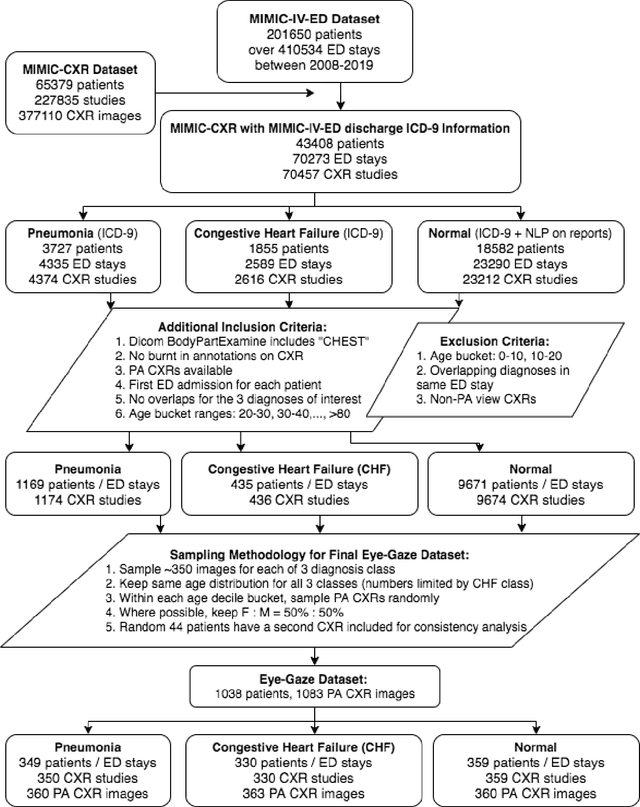

Creation and Validation of a Chest X-Ray Dataset with Eye-tracking and Report Dictation for AI Development

Oct 08, 2020

Abstract:We developed a rich dataset of Chest X-Ray (CXR) images to assist investigators in artificial intelligence. The data were collected using an eye tracking system while a radiologist reviewed and reported on 1,083 CXR images. The dataset contains the following aligned data: CXR image, transcribed radiology report text, radiologist's dictation audio and eye gaze coordinates data. We hope this dataset can contribute to various areas of research particularly towards explainable and multimodal deep learning / machine learning methods. Furthermore, investigators in disease classification and localization, automated radiology report generation, and human-machine interaction can benefit from these data. We report deep learning experiments that utilize the attention maps produced by eye gaze dataset to show the potential utility of this data.

Receptivity of an AI Cognitive Assistant by the Radiology Community: A Report on Data Collected at RSNA

Sep 13, 2020

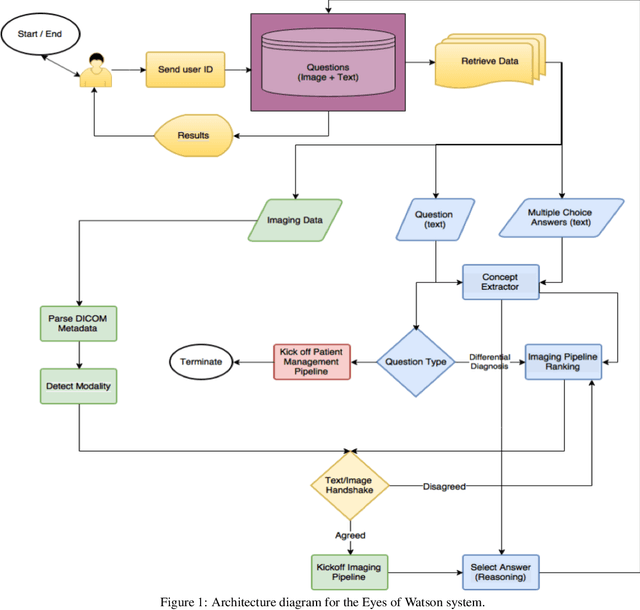

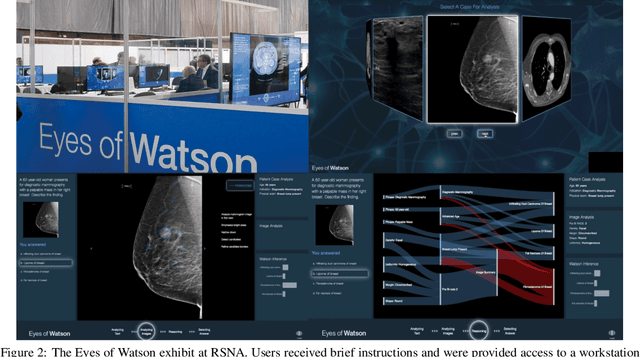

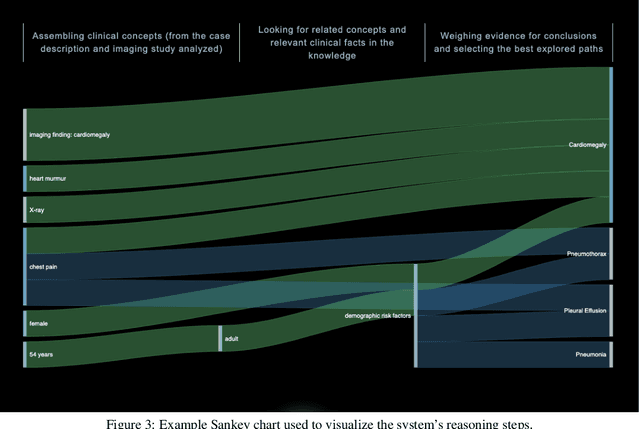

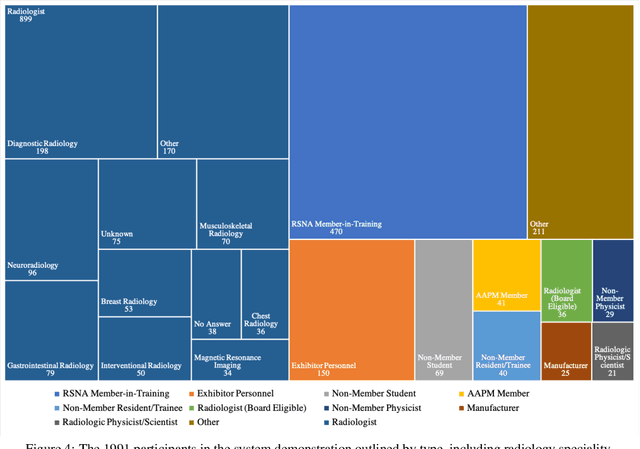

Abstract:Due to advances in machine learning and artificial intelligence (AI), a new role is emerging for machines as intelligent assistants to radiologists in their clinical workflows. But what systematic clinical thought processes are these machines using? Are they similar enough to those of radiologists to be trusted as assistants? A live demonstration of such a technology was conducted at the 2016 Scientific Assembly and Annual Meeting of the Radiological Society of North America (RSNA). The demonstration was presented in the form of a question-answering system that took a radiology multiple choice question and a medical image as inputs. The AI system then demonstrated a cognitive workflow, involving text analysis, image analysis, and reasoning, to process the question and generate the most probable answer. A post demonstration survey was made available to the participants who experienced the demo and tested the question answering system. Of the reported 54,037 meeting registrants, 2,927 visited the demonstration booth, 1,991 experienced the demo, and 1,025 completed a post-demonstration survey. In this paper, the methodology of the survey is shown and a summary of its results are presented. The results of the survey show a very high level of receptiveness to cognitive computing technology and artificial intelligence among radiologists.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge