Urs Bergmann

Disentangling Multiple Conditional Inputs in GANs

Jun 20, 2018

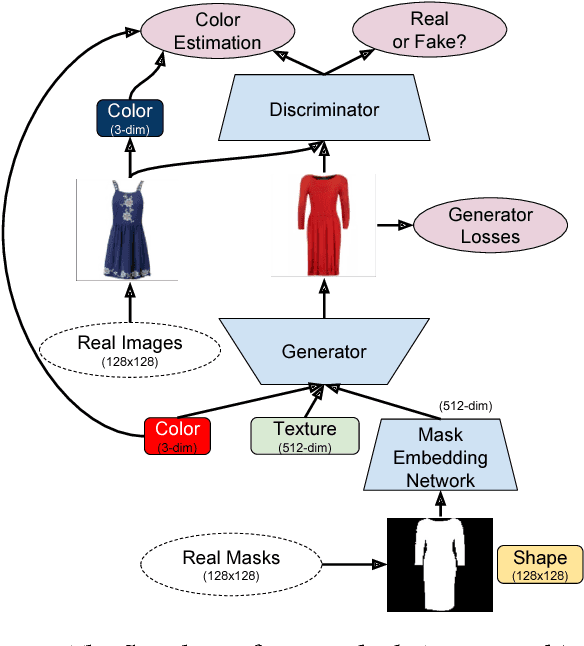

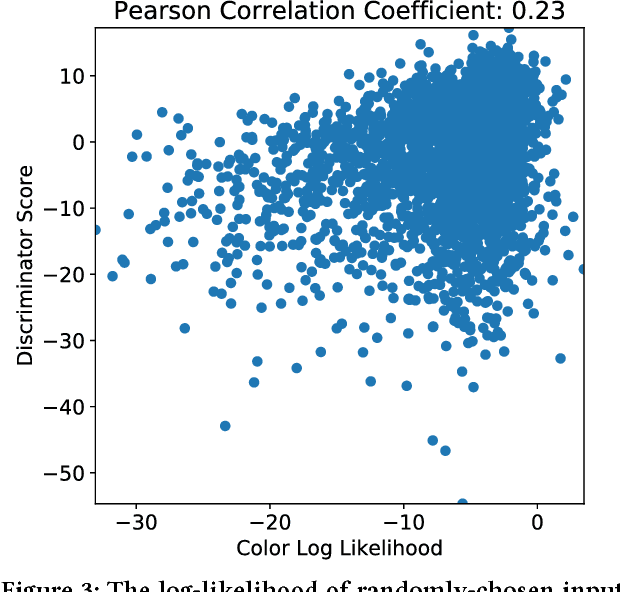

Abstract: In this paper, we propose a method that disentangles the effects of multiple input conditions in Generative Adversarial Networks (GANs). In particular, we demonstrate our method in controlling color, texture, and shape of a generated garment image for computer-aided fashion design. To disentangle the effect of input attributes, we customize conditional GANs with consistency loss functions. In our experiments, we tune one input at a time and show that we can guide our network to generate novel and realistic images of clothing articles. In addition, we present a fashion design process that estimates the input attributes of an existing garment and modifies them using our generator.

First Order Generative Adversarial Networks

Jun 07, 2018

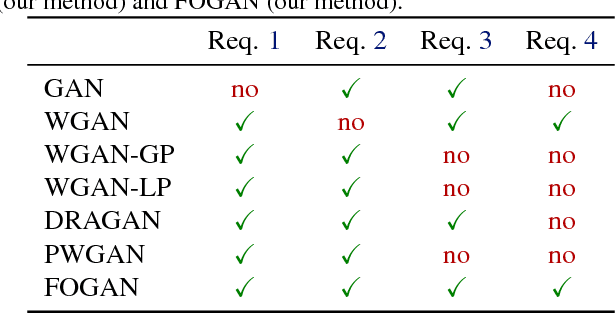

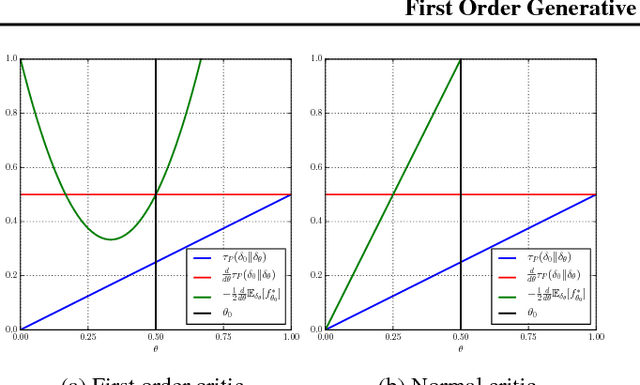

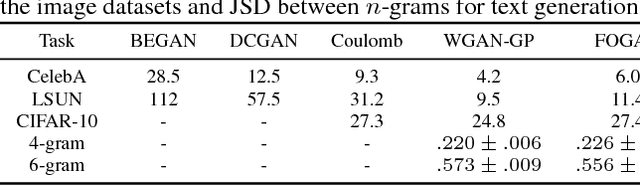

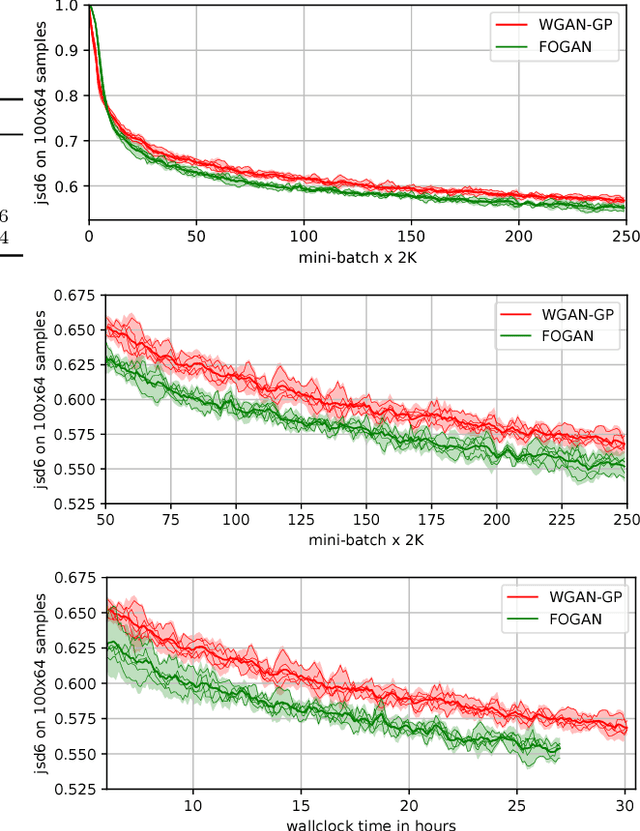

Abstract: GANs excel at learning high-dimensional distributions, but they can update generator parameters in directions that do not correspond to the steepest descent direction of the objective. Prominent examples of problematic update directions include those used in both Goodfellow's original GAN and the WGAN-GP. To formally describe an optimal update direction, we introduce a theoretical framework which allows the derivation of requirements on both the divergence and corresponding method for determining an update direction, with these requirements guaranteeing unbiased mini-batch updates in the direction of steepest descent. We propose a novel divergence which approximates the Wasserstein distance while regularizing the critic's first-order information. Together with an accompanying update direction, this divergence fulfills the requirements for unbiased steepest descent updates. We verify our method, the First Order GAN, with image generation on CelebA, LSUN and CIFAR-10 and set a new state of the art on the One Billion Word language generation task. Code to reproduce experiments is available.

Stochastic Maximum Likelihood Optimization via Hypernetworks

Jan 12, 2018

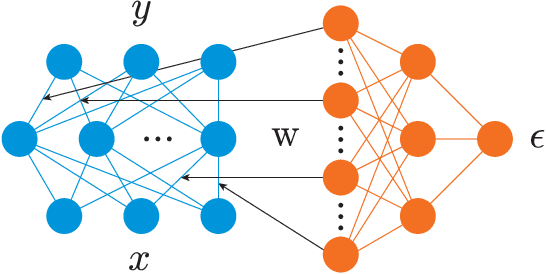

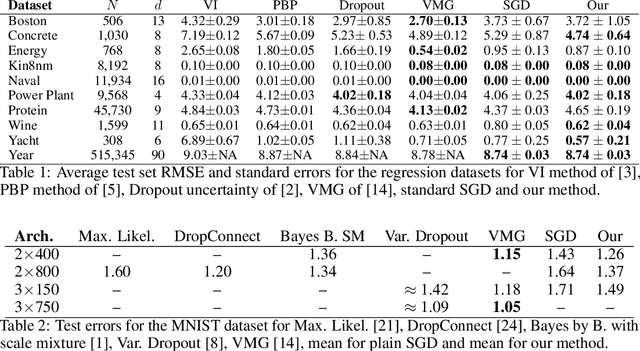

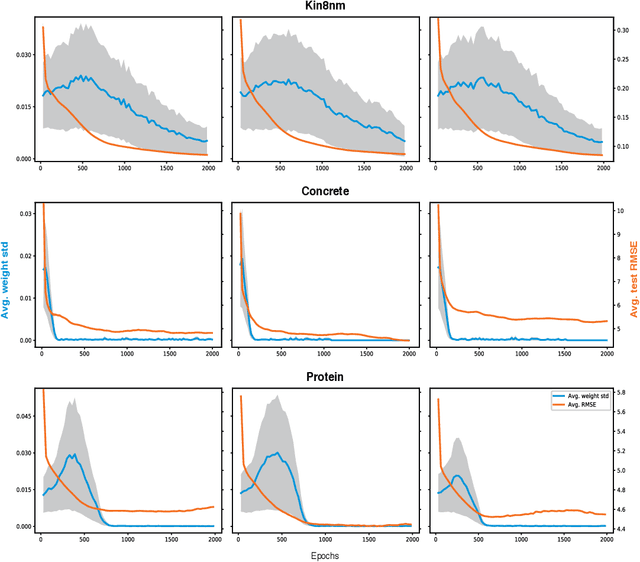

Abstract: This work explores maximum likelihood optimization of neural networks through hypernetworks. A hypernetwork initializes the weights of another network, which in turn can be employed for typical functional tasks such as regression and classification. We optimize hypernetworks to directly maximize the conditional likelihood of target variables given input. Using this approach we obtain competitive empirical results on regression and classification benchmarks.
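The idea in this abstract can be illustrated with a minimal sketch: a hypernetwork maps a noise vector to the weights of a target network, and the quantity to maximize is the conditional likelihood of the targets given the inputs. Everything concrete below is an assumption made for illustration and is not taken from the paper: the one-layer `hypernetwork`, the linear `target_network`, the Gaussian likelihood with fixed `sigma`, and the random toy data. The sketch only evaluates the objective once; the optimization loop is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

def hypernetwork(z, H, b):
    """Hypothetical one-layer hypernetwork: noise z -> flat weight vector."""
    return np.tanh(H @ z + b)

def target_network(x, flat_w, n_in, n_out):
    """Linear target model whose weights and bias come from the hypernetwork."""
    W = flat_w[: n_in * n_out].reshape(n_out, n_in)
    bias = flat_w[n_in * n_out:]
    return x @ W.T + bias

def gaussian_log_likelihood(y, y_pred, sigma=1.0):
    """Sum of log N(y | y_pred, sigma^2) over all targets (assumed likelihood)."""
    resid = (y - y_pred) / sigma
    return float(np.sum(-0.5 * resid**2 - np.log(sigma) - 0.5 * np.log(2 * np.pi)))

n_in, n_out, z_dim = 3, 1, 4
n_weights = n_in * n_out + n_out           # weights plus bias of the target network
H = rng.normal(scale=0.5, size=(n_weights, z_dim))
b = np.zeros(n_weights)

x = rng.normal(size=(8, n_in))             # toy inputs
y = rng.normal(size=(8, n_out))            # toy regression targets

z = rng.normal(size=z_dim)                 # one noise sample
flat_w = hypernetwork(z, H, b)             # one sampled set of target weights
log_lik = gaussian_log_likelihood(y, target_network(x, flat_w, n_in, n_out))
```

In a training setup, `H` and `b` would be updated by gradient ascent on `log_lik`, averaged over fresh samples of `z`; an automatic differentiation library would be the natural choice for that step.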

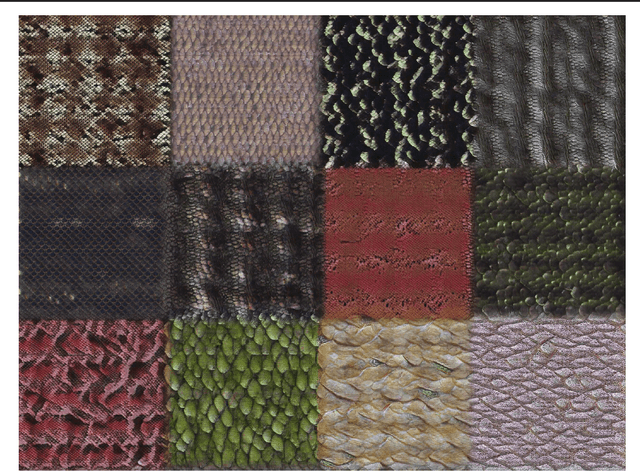

GANosaic: Mosaic Creation with Generative Texture Manifolds

Dec 01, 2017

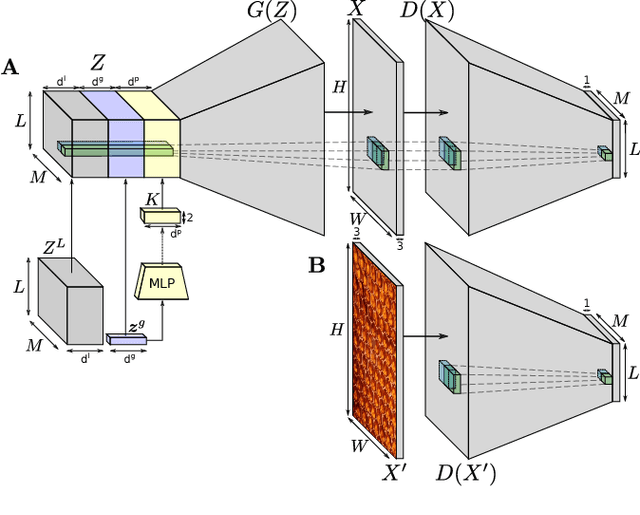

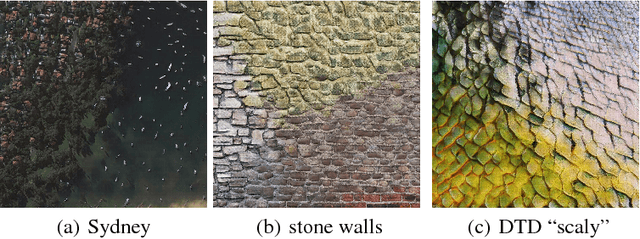

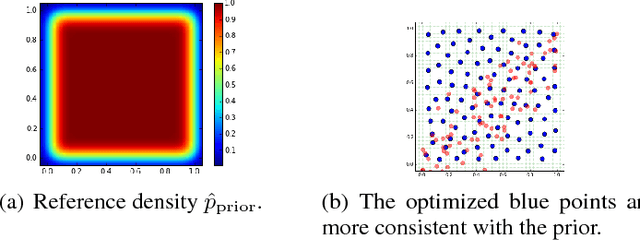

Abstract: This paper presents a novel framework for generating texture mosaics with convolutional neural networks. Our method is called GANosaic and performs optimization in the latent noise space of a generative texture model, which allows the transformation of a content image into a mosaic exhibiting the visual properties of the underlying texture manifold. To represent that manifold, we use a state-of-the-art generative adversarial method for texture synthesis, which can learn expressive texture representations from data and produce mosaic images with very high resolution. This fully convolutional model generates smooth (without any visible borders) mosaic images which morph and blend different textures locally. In addition, we develop a new type of differentiable statistical regularization appropriate for optimization over the prior noise space of the PSGAN model.

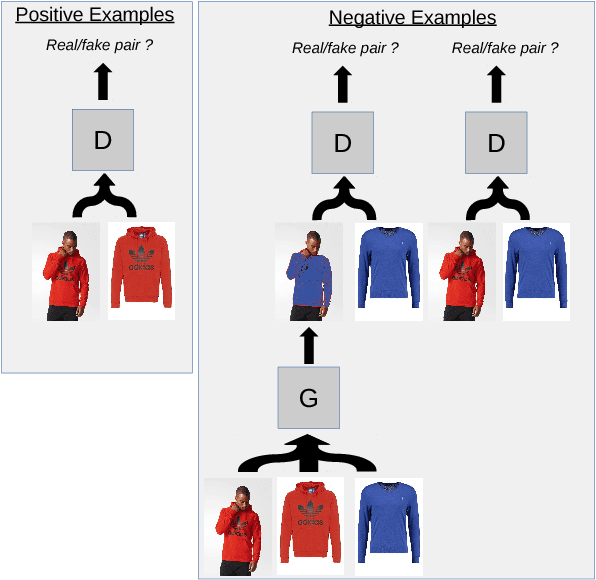

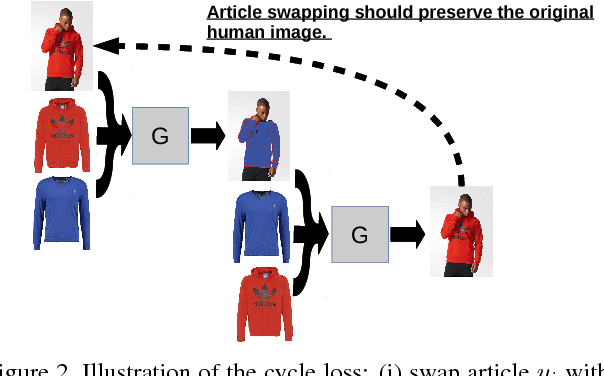

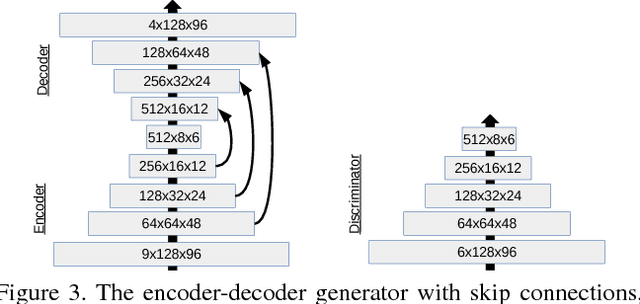

The Conditional Analogy GAN: Swapping Fashion Articles on People Images

Sep 14, 2017

Abstract: We present a novel method to solve image analogy problems: it learns the relation between paired images present in the training data, and then generalizes and generates images that correspond to the relation but were never seen in the training set. We call the method Conditional Analogy Generative Adversarial Network (CAGAN), as it is based on adversarial training and employs deep convolutional neural networks. An especially interesting application of this technique is the automatic swapping of clothing on fashion model photos. Our work makes the following contributions. First, we define the end-to-end trainable CAGAN architecture, which implicitly learns segmentation masks without expensive supervised labeling data. Second, experimental results show plausible segmentation masks and often convincing swapped images, given the target article. Finally, we discuss the next steps for this technique: neural network architecture improvements and more advanced applications.

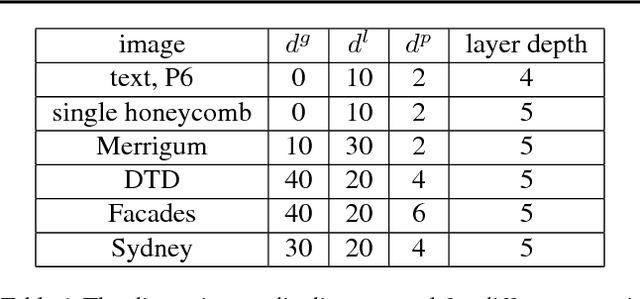

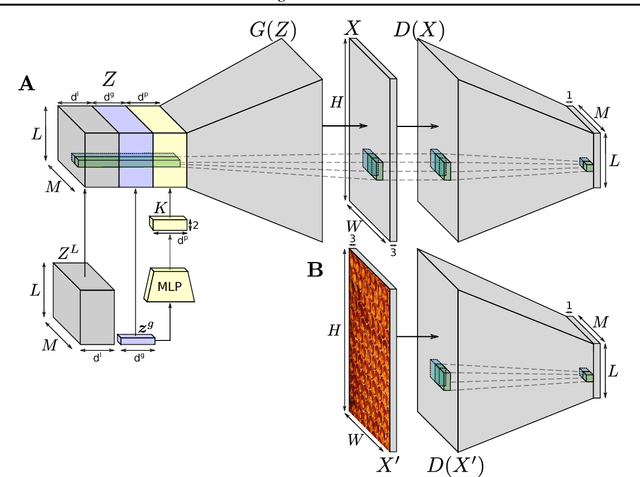

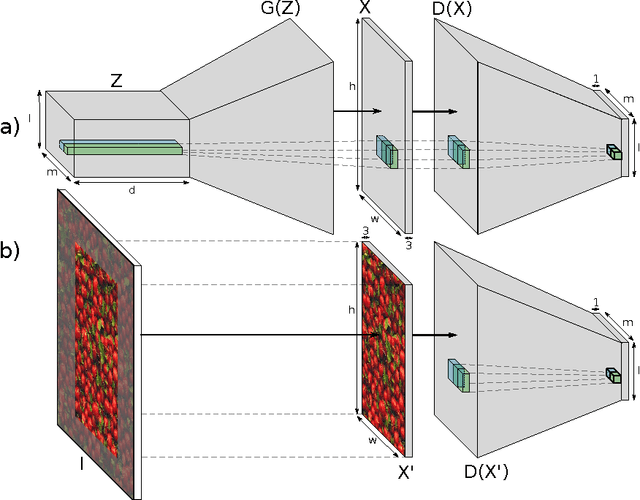

Learning Texture Manifolds with the Periodic Spatial GAN

Sep 08, 2017

Abstract: This paper introduces a novel approach to texture synthesis based on generative adversarial networks (GAN) (Goodfellow et al., 2014). We extend the structure of the input noise distribution by constructing tensors with different types of dimensions. We call this technique Periodic Spatial GAN (PSGAN). The PSGAN has several novel abilities which surpass the current state of the art in texture synthesis. First, we can learn multiple textures from datasets of one or more complex large images. Second, we show that image generation with PSGANs has properties of a texture manifold: we can smoothly interpolate between samples in the structured noise space and generate novel samples, which lie perceptually between the textures of the original dataset. In addition, we can also accurately learn periodic textures. We run multiple experiments which show that PSGANs can flexibly handle diverse texture and image data sources. Our method is highly scalable and can generate output images of arbitrarily large size.
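A structured noise tensor of the kind described above might be built as in the following sketch. The abstract only says the tensor has "different types of dimensions"; the split into local, global, and periodic channels, the function name `psgan_noise`, the sampling ranges, and the fixed random wave vectors are all assumptions made for illustration rather than the paper's exact construction.

```python
import numpy as np

def psgan_noise(L, M, d_local, d_global, d_periodic, rng):
    """Build an (L, M, d_local + d_global + d_periodic) noise tensor."""
    # Local channels: independent noise at every spatial position.
    z_local = rng.uniform(-1, 1, size=(L, M, d_local))

    # Global channels: one vector shared by all spatial positions.
    g = rng.uniform(-1, 1, size=(1, 1, d_global))
    z_global = np.broadcast_to(g, (L, M, d_global))

    # Periodic channels: sinusoids of the spatial coordinates.
    # The wave vectors k and the phases are hypothetical random values here.
    rows, cols = np.meshgrid(np.arange(L), np.arange(M), indexing="ij")
    k = rng.uniform(0, np.pi, size=(d_periodic, 2))
    phase = rng.uniform(0, 2 * np.pi, size=d_periodic)
    z_periodic = np.sin(rows[..., None] * k[:, 0] + cols[..., None] * k[:, 1] + phase)

    return np.concatenate([z_local, z_global, z_periodic], axis=-1)

rng = np.random.default_rng(0)
Z = psgan_noise(L=4, M=5, d_local=2, d_global=3, d_periodic=2, rng=rng)
```

Under these assumptions, interpolating between two samples of the global channels while keeping the rest fixed is one way to move smoothly through the structured noise space.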

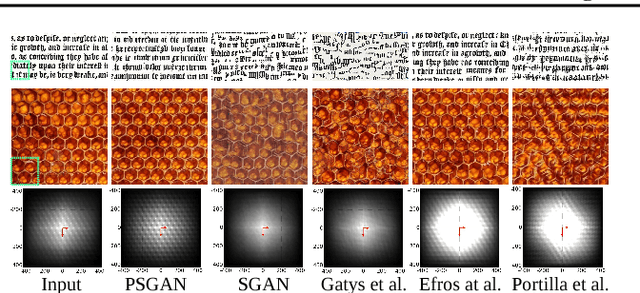

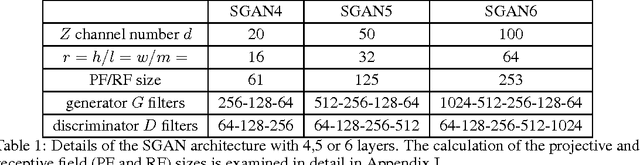

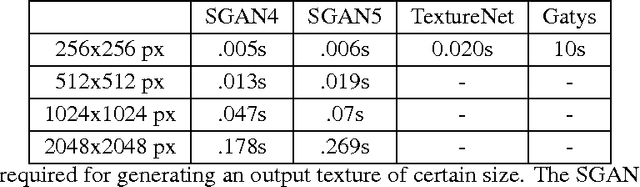

Texture Synthesis with Spatial Generative Adversarial Networks

Sep 08, 2017

Abstract: Generative adversarial networks (GANs) are a recent approach to training generative models of data, which have been shown to work particularly well on image data. In the current paper we introduce a new model for texture synthesis based on GAN learning. By extending the input noise distribution space from a single vector to a whole spatial tensor, we create an architecture with properties well suited to the task of texture synthesis, which we call spatial GAN (SGAN). To our knowledge, this is the first successful completely data-driven texture synthesis method based on GANs. Our method has the following features which make it a state-of-the-art algorithm for texture synthesis: high image quality of the generated textures, very high scalability w.r.t. the output texture size, fast real-time forward generation, and the ability to fuse multiple diverse source images into complex textures. To illustrate these capabilities we present multiple experiments with different classes of texture images and use cases. We also discuss some limitations of our method with respect to the types of texture images it can synthesize, and compare it to other neural techniques for texture generation.
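The scalability claim follows from feeding a spatial noise tensor to a fully convolutional generator: the same weights work for any input size, and the output grows with the input. The toy below is a sketch of that property only, not the SGAN architecture. The single-channel noise, the nearest-neighbour upsampling, the 3x3 kernels, and the names `toy_layer` and `toy_generator` are all assumptions chosen to keep the example short.

```python
import numpy as np

def toy_layer(x, kernel):
    """Upsample by 2 (nearest neighbour), then apply a 3x3 'same' convolution."""
    up = x.repeat(2, axis=0).repeat(2, axis=1)
    padded = np.pad(up, 1, mode="edge")
    h, w = up.shape
    out = np.zeros_like(up)
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * padded[i:i + h, j:j + w]
    return np.tanh(out)

def toy_generator(z, kernels):
    """Apply the same stack of layers to a noise array of any spatial size."""
    x = z
    for kernel in kernels:
        x = toy_layer(x, kernel)
    return x

rng = np.random.default_rng(0)
kernels = [rng.normal(scale=0.3, size=(3, 3)) for _ in range(3)]  # untrained weights

small = toy_generator(rng.uniform(-1, 1, size=(4, 4)), kernels)
large = toy_generator(rng.uniform(-1, 1, size=(16, 24)), kernels)
```

With three stride-2 layers, each spatial side of the noise is multiplied by 8, so the two calls produce outputs of 32x32 and 128x192 from the same `kernels`.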
