Tobias Gruber

Uncertainty depth estimation with gated images for 3D reconstruction

Mar 11, 2020

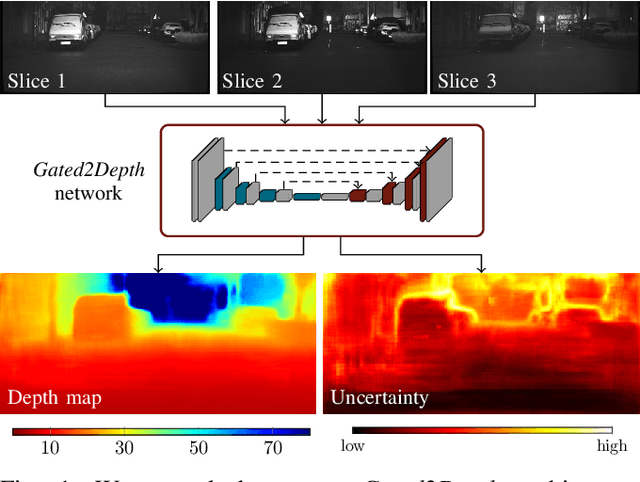

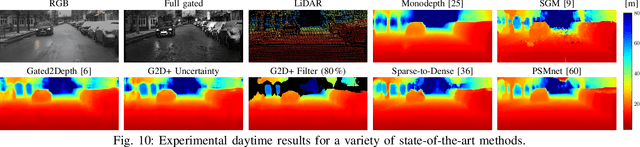

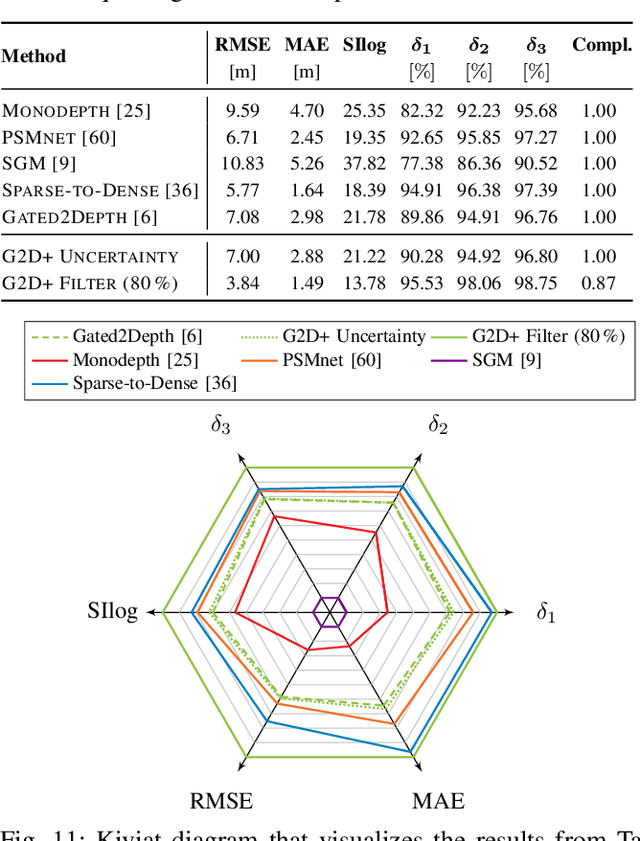

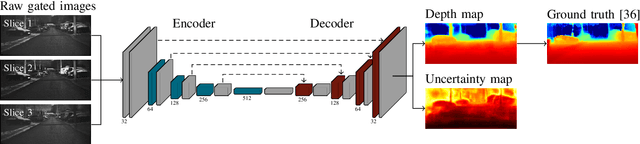

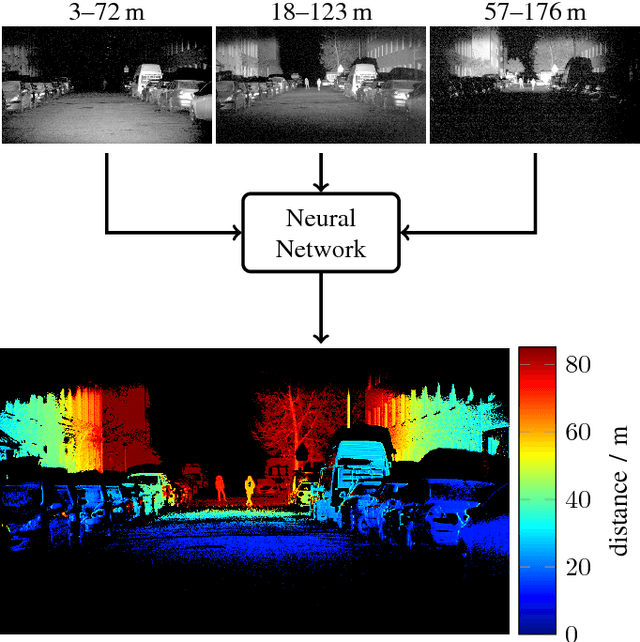

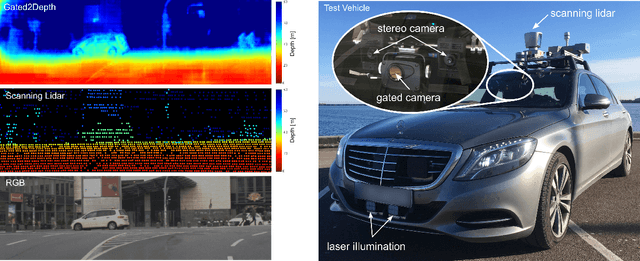

Abstract: Gated imaging is an emerging sensor technology for self-driving cars that provides high-contrast images even in adverse weather. It has been shown that this technology can even generate high-fidelity dense depth maps with accuracy comparable to scanning LiDAR systems. In this work, we extend the recent Gated2Depth framework with aleatoric uncertainty, providing an additional confidence measure for the depth estimates. This confidence can help to filter out uncertain estimates in regions without any illumination. Moreover, we show that training on dense depth maps generated by LiDAR depth completion algorithms can further improve the performance.
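As a rough illustration of the aleatoric-uncertainty idea described in this abstract, the sketch below uses a common heteroscedastic Gaussian formulation: the network is assumed to output a depth and a log-variance per pixel, the loss down-weights residuals where the predicted variance is high, and at inference the predicted standard deviation is thresholded to discard unreliable pixels. The function names, the Gaussian likelihood, and the threshold are assumptions for illustration and are not taken from the paper.

```python
import math

def gaussian_nll(pred_depth, log_var, target_depth):
    """Per-pixel heteroscedastic Gaussian negative log-likelihood.

    The additive constant 0.5*log(2*pi) is dropped. `log_var` is the
    (assumed) network output log(sigma^2) for this pixel.
    """
    residual_sq = (pred_depth - target_depth) ** 2
    return 0.5 * math.exp(-log_var) * residual_sq + 0.5 * log_var

def filter_by_uncertainty(depths, log_vars, max_std):
    """Replace depth estimates whose predicted std exceeds max_std with None."""
    filtered = []
    for depth, log_var in zip(depths, log_vars):
        std = math.exp(0.5 * log_var)  # sigma = sqrt(exp(log sigma^2))
        filtered.append(depth if std <= max_std else None)
    return filtered

# Hypothetical values: the second pixel has a predicted std of about 5 m.
depths = [10.0, 50.0]
log_vars = [0.0, math.log(25.0)]
confident = filter_by_uncertainty(depths, log_vars, max_std=2.0)
```

With these hypothetical inputs, only the first pixel survives the filter. In practice the threshold would have to be tuned on validation data.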

A Benchmark for Lidar Sensors in Fog: Is Detection Breaking Down?

Dec 06, 2019

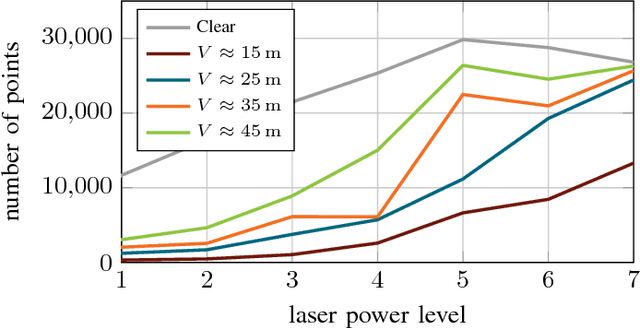

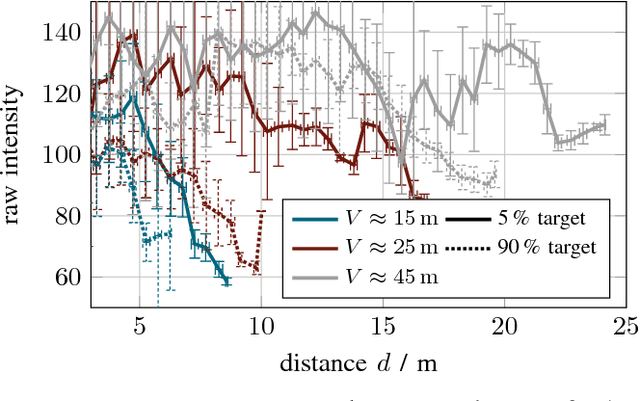

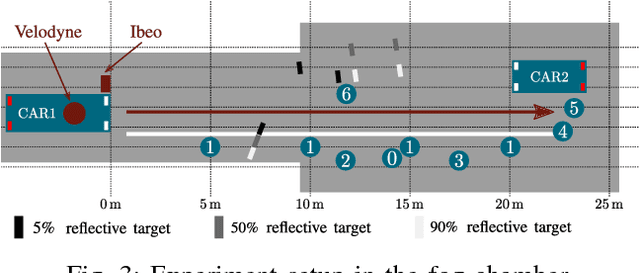

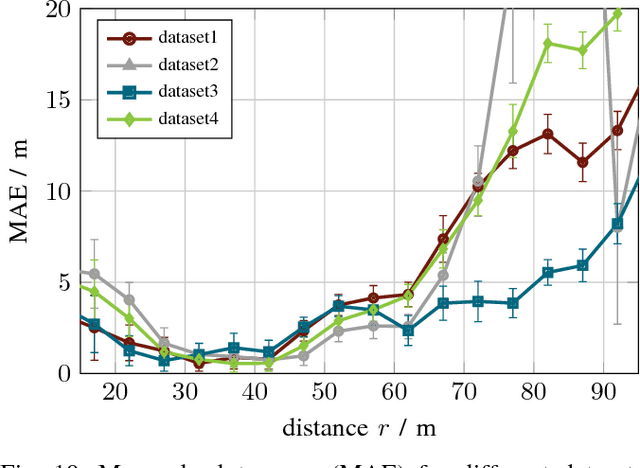

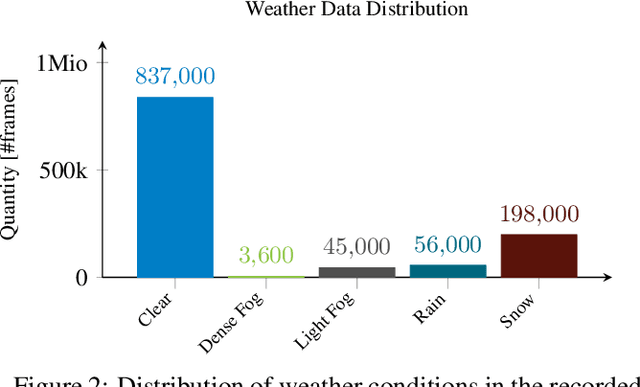

Abstract: Autonomous driving at level five does not only mean self-driving in the sunshine. Adverse weather is especially critical because fog, rain, and snow degrade the perception of the environment. In this work, current state-of-the-art light detection and ranging (lidar) sensors are tested in controlled conditions in a fog chamber. We present current problems and disturbance patterns for four different state-of-the-art lidar systems. Moreover, we investigate how tuning internal parameters can improve their performance in bad weather situations. This is of great importance because most state-of-the-art detection algorithms are based on undisturbed lidar data.

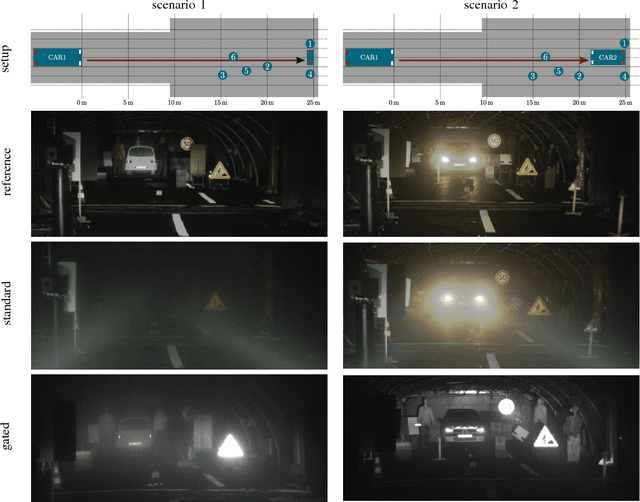

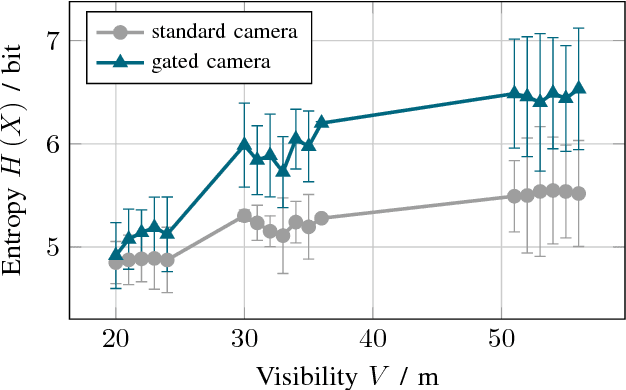

Benchmarking Image Sensors Under Adverse Weather Conditions for Autonomous Driving

Dec 06, 2019

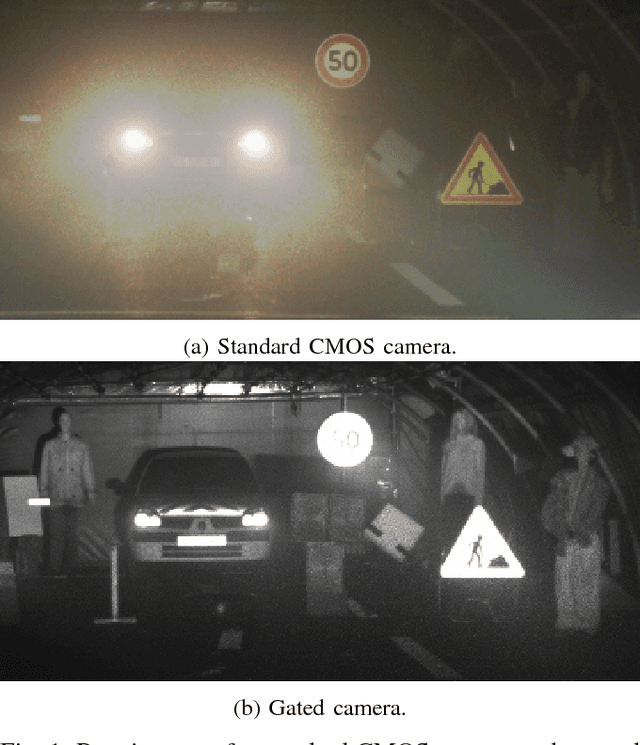

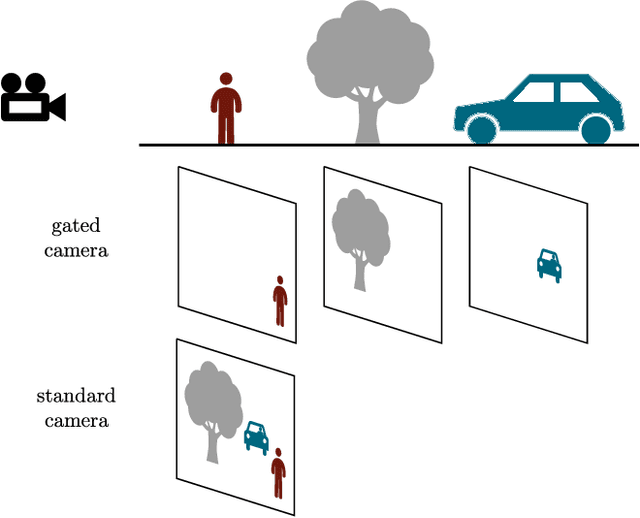

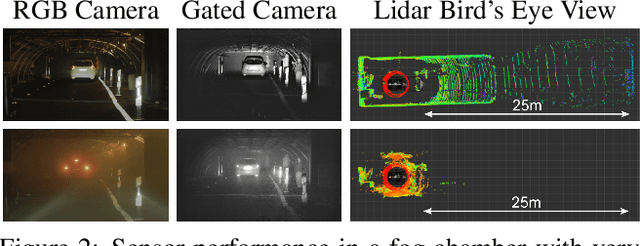

Abstract: Adverse weather conditions are very challenging for autonomous driving because most state-of-the-art sensors stop working reliably under these conditions. In order to develop robust sensors and algorithms, tests with current sensors in defined weather conditions are crucial for determining the impact of bad weather on each sensor. This work describes a testing and evaluation methodology that helps to benchmark novel sensor technologies and compare them to state-of-the-art sensors. As an example, gated imaging is compared to standard imaging under foggy conditions. It is shown that gated imaging outperforms state-of-the-art standard passive imaging due to time-synchronized active illumination.

Learning Super-resolved Depth from Active Gated Imaging

Dec 05, 2019

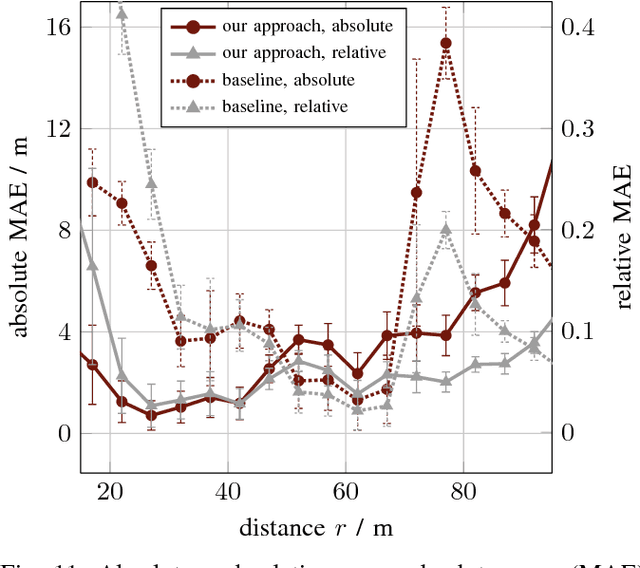

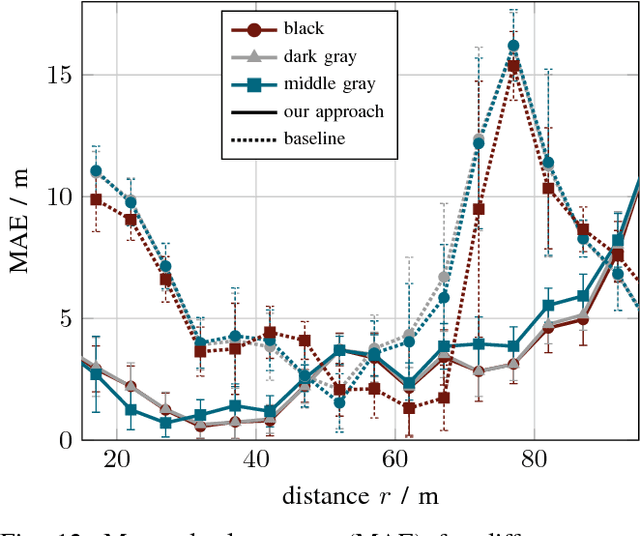

Abstract: Environment perception for autonomous driving is doomed by the trade-off between range accuracy and resolution: current sensors that deliver very precise depth information are usually restricted to low resolution because of technology or cost limitations. In this work, we exploit depth information from an active gated imaging system based on cost-sensitive diode and CMOS technology. Learning a mapping between pixel intensities of three gated slices and depth produces a super-resolved depth map image with a respectable relative accuracy of 5% between 25 and 80 m. By design, depth information is perfectly aligned with pixel intensity values.
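To make the "relative accuracy of 5% between 25 and 80 m" figure concrete, the sketch below computes a mean relative depth error restricted to a ground-truth range. This is one plausible reading of the metric; the abstract does not define it, so the function name, the inclusive range bounds, and the averaging are assumptions.

```python
def mean_relative_error(pred_depths, true_depths, min_range=25.0, max_range=80.0):
    """Mean of |pred - true| / true over pixels whose true depth lies in
    [min_range, max_range] (inclusive). Returns None if no pixel qualifies."""
    errors = [
        abs(pred - true) / true
        for pred, true in zip(pred_depths, true_depths)
        if min_range <= true <= max_range
    ]
    if not errors:
        return None
    return sum(errors) / len(errors)

# Hypothetical values: the third pixel (5 m) is outside the evaluated range.
preds = [26.0, 84.0, 10.0]
truths = [25.0, 80.0, 5.0]
error = mean_relative_error(preds, truths)  # average of 4% and 5%
```

Restricting the evaluation to a range keeps very near pixels, where small absolute errors produce large relative errors, from dominating the average.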

Pixel-Accurate Depth Evaluation in Realistic Driving Scenarios

Jun 21, 2019

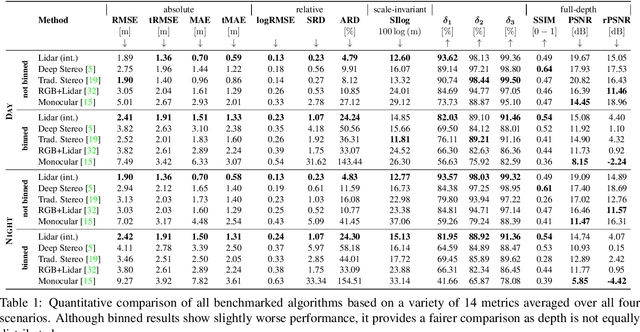

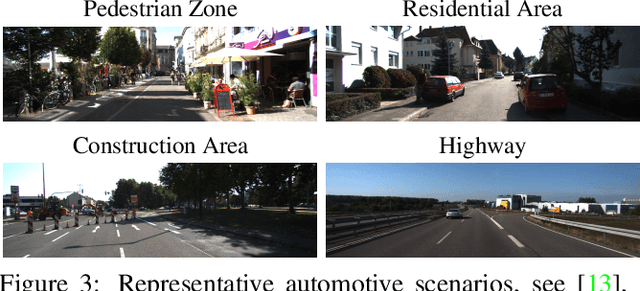

Abstract: This work presents an evaluation benchmark for depth estimation and completion using high-resolution depth measurements with an angular resolution of up to 25" (arcseconds), akin to a 50-megapixel camera with per-pixel depth available. Existing datasets, such as the KITTI benchmark, provide only sparse reference measurements with an order of magnitude lower angular resolution; these sparse measurements are treated as ground truth by existing depth estimation methods. We propose an evaluation in four characteristic automotive scenarios recorded in varying weather conditions (day, night, fog, rain). As a result, our benchmark allows evaluating the robustness of depth sensing methods to adverse weather and different driving conditions. Using the proposed evaluation data, we show that current stereo approaches provide significantly more stable depth estimates than monocular methods and lidar completion in adverse weather.

Seeing Through Fog Without Seeing Fog: Deep Sensor Fusion in the Absence of Labeled Training Data

Feb 24, 2019

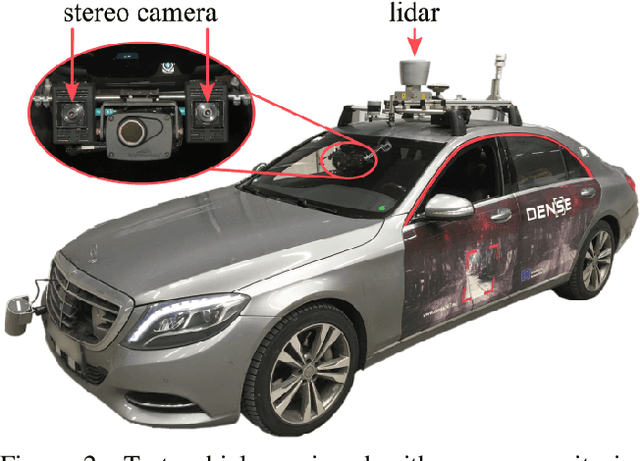

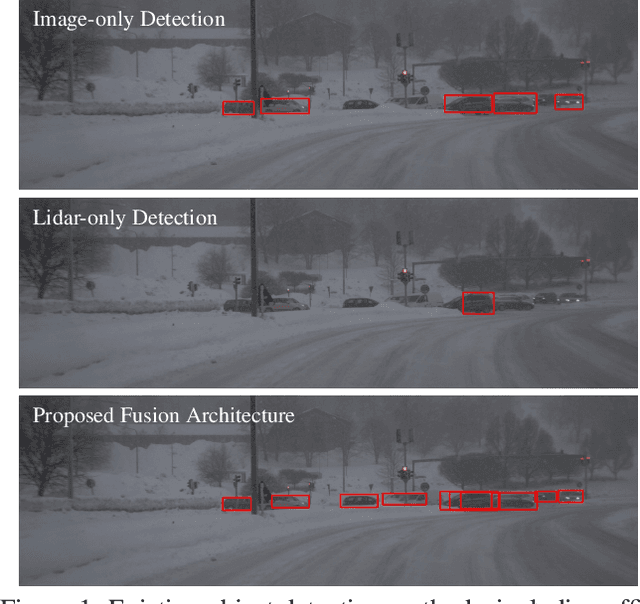

Abstract: The fusion of color and lidar data plays a critical role in object detection for autonomous vehicles, which base their decision making on these inputs. While existing methods exploit redundant and complementary information under good imaging conditions, they fail to do this in adverse weather and imaging conditions where the sensory streams can be asymmetrically distorted. These rare "edge-case" scenarios are not represented in available data sets, and existing fusion architectures are not designed to handle severe asymmetric distortions. We present a deep fusion architecture that allows for robust fusion in fog and snow without having large labeled training data available for these scenarios. Departing from proposal-level fusion, we propose a real-time single-shot model that adaptively fuses features driven by temporal coherence of the distortions. We validate the proposed method, trained on clean data, in simulation and on unseen conditions of in-the-wild driving scenarios.

Gated2Depth: Real-time Dense Lidar from Gated Images

Feb 13, 2019

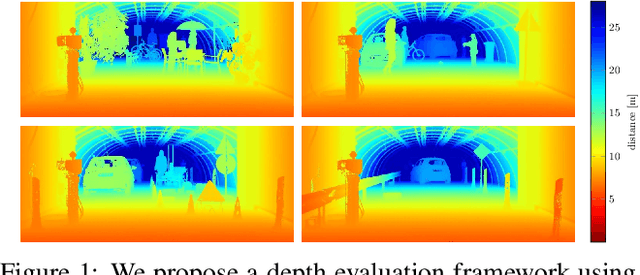

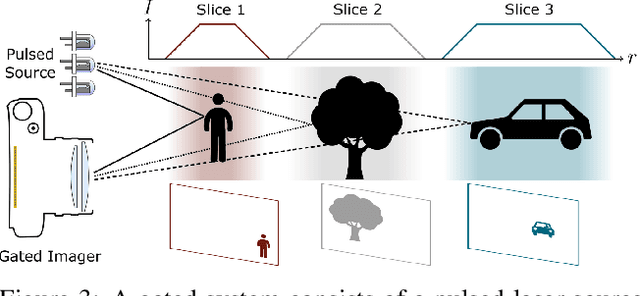

Abstract: We present an imaging framework which converts three images from a gated camera into high-resolution depth maps with depth resolution comparable to pulsed lidar measurements. Existing scanning lidar systems achieve low spatial resolution at large ranges due to mechanically limited angular sampling rates, restricting scene understanding tasks to close-range clusters with dense sampling. In addition, today's lidar detector technologies, short-pulsed laser sources, and scanning mechanics result in high cost, high power consumption, and large form factors. We depart from point scanning and propose a learned architecture that recovers high-fidelity dense depth from three temporally gated images, acquired with a flash source and a high-resolution CMOS sensor. The proposed architecture exploits semantic context across gated slices, and is trained on a synthetic discriminator loss without the need for dense depth labels. The method is real-time and essentially turns a gated camera into a low-cost dense flash lidar, which we validate on a wide range of outdoor driving captures and in simulations.
