Tenghui Xie

Wireless Communication as an Information Sensor for Multi-agent Cooperative Perception: A Survey

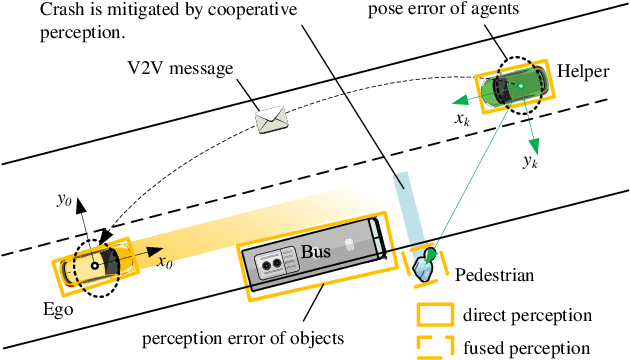

Apr 30, 2025

Abstract:Cooperative perception extends the perception capabilities of autonomous vehicles by enabling multi-agent information sharing via Vehicle-to-Everything (V2X) communication. Unlike traditional onboard sensors, V2X acts as a dynamic "information sensor" characterized by limited communication, heterogeneity, mobility, and scalability. This survey provides a comprehensive review of recent advancements from the perspective of information-centric cooperative perception, focusing on three key dimensions: information representation, information fusion, and large-scale deployment. We categorize information representation into data-level, feature-level, and object-level schemes, and highlight emerging methods for reducing data volume and compressing messages under communication constraints. In information fusion, we explore techniques under both ideal and non-ideal conditions, including those addressing heterogeneity, localization errors, latency, and packet loss. Finally, we summarize system-level approaches to support scalability in dense traffic scenarios. Compared with existing surveys, this paper introduces a new perspective by treating V2X communication as an information sensor and emphasizing the challenges of deploying cooperative perception in real-world intelligent transportation systems.

A Spatial Calibration Method for Robust Cooperative Perception

Apr 25, 2023

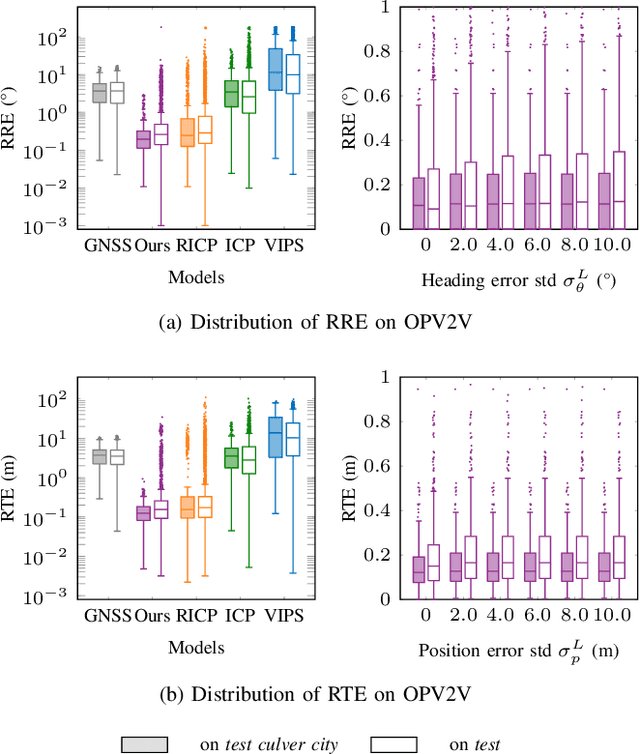

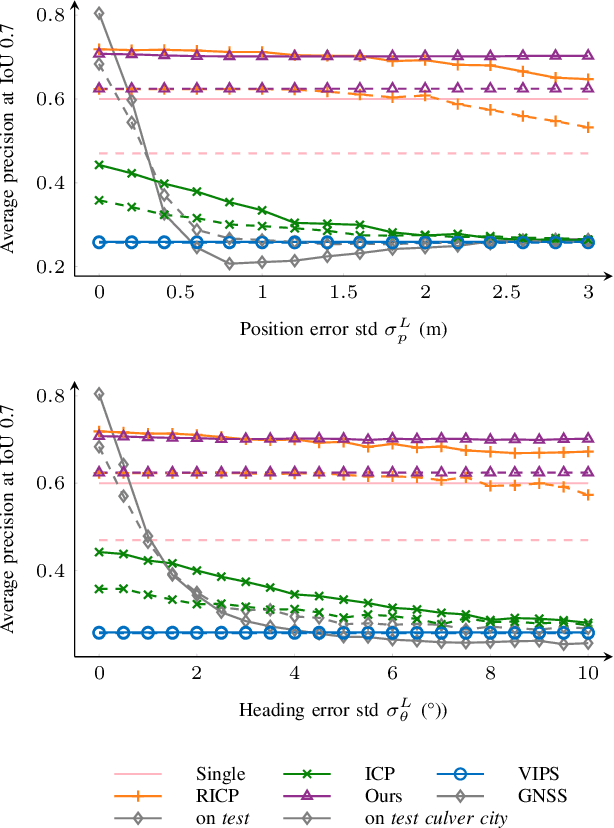

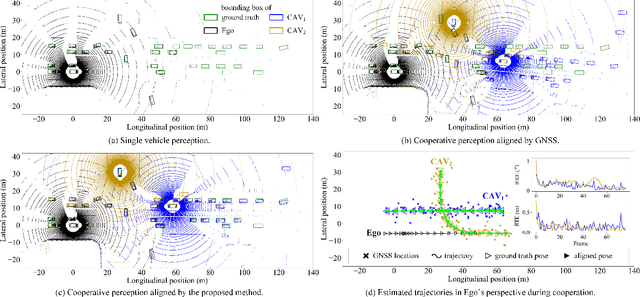

Abstract:Cooperative perception is a promising technique for enhancing the perception capabilities of automated vehicles through vehicle-to-everything (V2X) cooperation, provided that accurate relative pose transforms are available. Nevertheless, obtaining precise positioning information often entails high costs associated with navigation systems. Moreover, signal drift resulting from factors such as occlusion and multipath effects can compromise the stability of the positioning information. Hence, a low-cost and robust method is required to calibrate relative pose information for multi-agent cooperative perception. In this paper, we propose a simple but effective inter-agent object association approach (CBM), which constructs contexts using the detected bounding boxes, followed by local context matching and global consensus maximization. Based on the matched correspondences, the optimal relative pose transform is estimated, followed by cooperative perception fusion. Extensive experimental studies are conducted on both simulated and real-world datasets. High object association precision and decimeter-level relative pose calibration accuracy are achieved among the cooperating agents, even with large inter-agent localization errors. Furthermore, the proposed approach outperforms the state-of-the-art methods in terms of object association and relative pose estimation accuracy, as well as the robustness of cooperative perception against the pose errors of the connected agents. The code will be available at https://github.com/zhyingS/CBM.
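
The abstract states that a relative pose transform is estimated from matched correspondences, but it does not say which estimator is used. As a rough illustration only, the sketch below uses a generic closed-form least-squares rigid alignment in 2D. It is not taken from the CBM code. It assumes each matched pair is reduced to the (x, y) centers of the two bounding boxes, and the function name `estimate_rigid_2d` is hypothetical.

```python
import math


def estimate_rigid_2d(src, dst):
    """Least-squares rotation and translation mapping src points onto dst.

    src, dst: equal-length lists of (x, y) tuples, where src[i] and dst[i]
    are assumed to be the same object seen by two different agents.
    Returns (theta, tx, ty) such that dst ~= R(theta) @ src + (tx, ty).
    This is a generic textbook estimator, not the method from the paper.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matched point pairs")

    n = len(src)
    # Centroids of both point sets.
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n

    # Accumulate dot and cross products of the centered pairs.
    dot = 0.0
    cross = 0.0
    for (x, y), (u, v) in zip(src, dst):
        ax, ay = x - sx, y - sy
        bx, by = u - dx, v - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx

    # The rotation angle that minimizes the squared alignment error.
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)

    # Translation that maps the rotated source centroid onto the target centroid.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty
```

With noise-free correspondences this recovers the transform exactly (up to floating-point error). With noisy or partly wrong matches it gives only the least-squares fit, which is why an outlier-robust association step such as the one the abstract describes matters before this stage.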
