Sudipta Banerjee

FPBench: A Comprehensive Benchmark of Multimodal Large Language Models for Fingerprint Analysis

Dec 19, 2025

Abstract:Multimodal LLMs (MLLMs) have gained significant traction in complex data analysis, visual question answering, generation, and reasoning. Recently, they have been used for analyzing the biometric utility of iris and face images. However, their capabilities in fingerprint understanding remain unexplored. In this work, we design a comprehensive benchmark, FPBench, that evaluates the performance of 20 MLLMs (open-source and proprietary) across 7 real and synthetic datasets on 8 biometric and forensic tasks using zero-shot and chain-of-thought prompting strategies. We discuss our findings in terms of performance and explainability, and share our insights into the challenges and limitations. We establish FPBench as the first comprehensive benchmark for fingerprint domain understanding with MLLMs, paving the path for foundation models for fingerprints.

DiffClean: Diffusion-based Makeup Removal for Accurate Age Estimation

Jul 17, 2025

Abstract:Accurate age verification can protect underage users from unauthorized access to online platforms and e-commerce sites that provide age-restricted services. However, age estimation can be confounded by several factors, including facial makeup, which can alter perceived identity and age enough to fool both humans and machines. In this work, we propose DiffClean, which erases makeup traces using a text-guided diffusion model to defend against makeup attacks. DiffClean improves age estimation (minor vs. adult accuracy by 4.8%) and face verification (TMR by 8.9% at FMR=0.01%) over competing baselines on digitally simulated and real makeup images.
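The face verification figure above is reported as a true match rate (TMR) at a fixed false match rate (FMR). The sketch below shows one common way to compute that kind of operating point from similarity scores. It is an illustration only, not the authors' evaluation code: the function name `tmr_at_fmr`, the score lists, and the convention that a pair is accepted when its score is at or above the threshold are all assumptions.

```python
def tmr_at_fmr(genuine, impostor, target_fmr):
    """Return (threshold, tmr) at the lowest threshold whose FMR <= target_fmr.

    Assumes similarity scores: a pair is accepted when score >= threshold.
    genuine  -- scores of same-identity pairs (hypothetical data)
    impostor -- scores of different-identity pairs (hypothetical data)
    """
    candidates = sorted(set(genuine) | set(impostor))
    # A threshold above every score guarantees that FMR = 0 is reachable.
    candidates.append(max(candidates) + 1.0)
    for t in candidates:
        # FMR: fraction of impostor pairs wrongly accepted at threshold t.
        fmr = sum(s >= t for s in impostor) / len(impostor)
        if fmr <= target_fmr:
            # TMR: fraction of genuine pairs correctly accepted at threshold t.
            tmr = sum(s >= t for s in genuine) / len(genuine)
            return t, tmr
    raise ValueError("target_fmr must be non-negative")


if __name__ == "__main__":
    genuine = [0.9, 0.8, 0.75, 0.4]
    impostor = [0.1, 0.2, 0.3, 0.7]
    print(tmr_at_fmr(genuine, impostor, target_fmr=0.25))
    print(tmr_at_fmr(genuine, impostor, target_fmr=0.0))
```

Because FMR can only fall as the threshold rises, scanning thresholds in ascending order and stopping at the first one that meets the target yields the highest TMR available at that FMR.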

FaceCloak: Learning to Protect Face Templates

Apr 08, 2025

Abstract:Generative models can reconstruct face images from encoded representations (templates) that bear a remarkable likeness to the original face, raising security and privacy concerns. We present FaceCloak, a neural network framework that protects face templates by generating smart, renewable binary cloaks. Our method proactively thwarts inversion attacks by cloaking face templates with unique disruptors synthesized from a single face template on the fly while provably retaining biometric utility and unlinkability. Our cloaked templates can suppress sensitive attributes while generalizing to novel feature extraction schemes, and they outperform leading baselines in terms of biometric matching and resiliency to reconstruction attacks. FaceCloak-based matching is extremely fast (inference time cost=0.28ms) and light-weight (0.57MB).

Detecting Near-Duplicate Face Images

Aug 14, 2024

Abstract:Near-duplicate images are often generated when applying repeated photometric and geometric transformations that produce imperceptible variants of the original image. Consequently, a deluge of near-duplicates can be circulated online, posing copyright infringement concerns. The concerns are more severe when biometric data is altered through such nuanced transformations. In this work, we address the challenge of near-duplicate detection in face images by, firstly, identifying the original image from a set of near-duplicates and, secondly, deducing the relationship between the original image and the near-duplicates. We construct a tree-like structure, called an Image Phylogeny Tree (IPT), using a graph-theoretic approach to estimate the relationship, i.e., determine the sequence in which they have been generated. We further extend our method to create an ensemble of IPTs known as Image Phylogeny Forests (IPFs). We rigorously evaluate our method to demonstrate robustness across other modalities, unseen transformations by the latest generative models, and IPT configurations, thereby advancing the state-of-the-art performance by 42% on IPF reconstruction accuracy.

Mitigating the Impact of Attribute Editing on Face Recognition

Mar 12, 2024

Abstract:Facial attribute editing using generative models can impair automated face recognition. This degradation persists even with recent identity-preserving models such as InstantID. To mitigate this issue, we propose two techniques that perform local and global attribute editing. Local editing operates on the finer details via a regularization-free method based on ControlNet conditioned on depth maps and auxiliary semantic segmentation masks. Global editing operates on coarser details via a regularization-based method guided by a custom loss and a regularization set. In this work, we empirically ablate twenty-six facial semantic, demographic and expression-based attributes altered using state-of-the-art generative models and evaluate them using ArcFace and AdaFace matchers on CelebA, CelebAMaskHQ and LFW datasets. Finally, we use LLaVA, a vision-language framework, for attribute prediction to validate our editing techniques. Our methods outperform SoTA (BLIP, InstantID) at facial editing while retaining identity.

Identity-Preserving Aging of Face Images via Latent Diffusion Models

Jul 17, 2023

Abstract:The performance of automated face recognition systems is inevitably impacted by the facial aging process. However, high-quality datasets of individuals collected over several years are typically small in scale. In this work, we propose, train, and validate the use of latent text-to-image diffusion models for synthetically aging and de-aging face images. Our models succeed with few-shot training, and have the added benefit of being controllable via intuitive textual prompting. We observe high degrees of visual realism in the generated images while maintaining biometric fidelity measured by commonly used metrics. We evaluate our method on two benchmark datasets (CelebA and AgeDB) and observe a significant reduction (~44%) in the False Non-Match Rate compared to existing state-of-the-art baselines.

Generating Adversarial Attacks in the Latent Space

Apr 10, 2023

Abstract:Adversarial attacks in the input (pixel) space typically incorporate noise margins such as $L_1$ or $L_{\infty}$-norm to produce imperceptibly perturbed data that confound deep learning networks. Such noise margins confine the magnitude of permissible noise. In this work, we propose injecting adversarial perturbations in the latent (feature) space using a generative adversarial network, removing the need for margin-based priors. Experiments on MNIST, CIFAR10, Fashion-MNIST, CIFAR100 and Stanford Dogs datasets support the effectiveness of the proposed method in generating adversarial attacks in the latent space while ensuring a high degree of visual realism with respect to pixel-based adversarial attack methods.
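For readers unfamiliar with the noise margins mentioned above, the sketch below illustrates the pixel-space constraint that the proposed latent-space approach avoids: a perturbation is clipped so that its $L_{\infty}$-norm stays within a budget before it is added to the input. This is a generic illustration, not the paper's method. The helper names (`linf_norm`, `l1_norm`, `project_linf`, `perturb`), the budget `eps`, and the assumption that pixel values lie in [0, 1] are all hypothetical.

```python
def linf_norm(delta):
    """Largest absolute change applied to any single element."""
    return max(abs(d) for d in delta)


def l1_norm(delta):
    """Sum of absolute changes over all elements."""
    return sum(abs(d) for d in delta)


def project_linf(delta, eps):
    """Clip each component to [-eps, eps], enforcing an L_inf budget of eps."""
    return [max(-eps, min(eps, d)) for d in delta]


def perturb(x, delta, eps):
    """Add an L_inf-bounded perturbation and keep values in [0, 1] (assumed range)."""
    clipped = project_linf(delta, eps)
    return [min(1.0, max(0.0, xi + di)) for xi, di in zip(x, clipped)]


if __name__ == "__main__":
    x = [0.0, 0.5, 1.0]          # toy "image" with three pixels
    delta = [0.5, -0.3, 0.05]    # unconstrained perturbation
    print(project_linf(delta, eps=0.1))
    print(perturb(x, delta, eps=0.1))
```

The clipping step is what bounds the magnitude of permissible noise in pixel space; a latent-space attack has no such explicit per-pixel budget.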

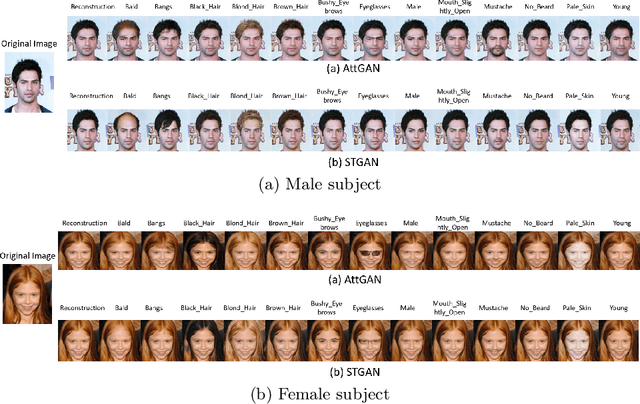

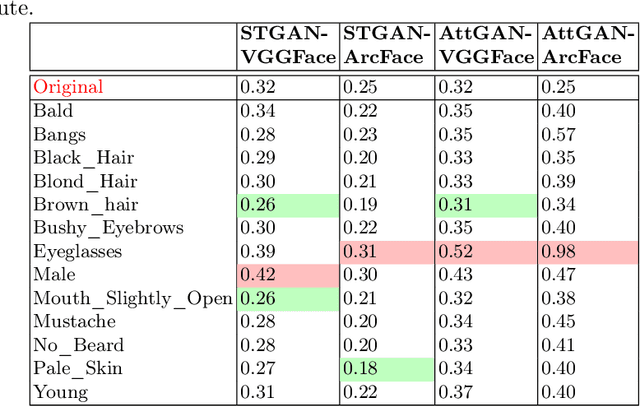

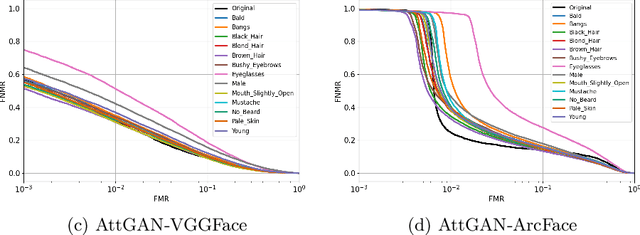

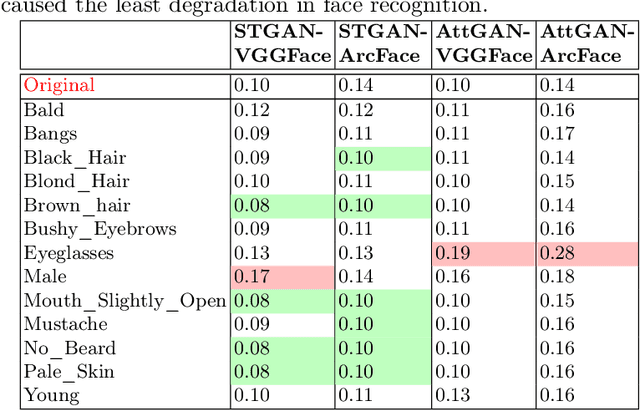

Can GAN-induced Attribute Manipulations Impact Face Recognition?

Sep 07, 2022

Abstract:The impact of demographic factors such as age, sex, and race has been studied extensively in automated face recognition systems. However, the impact of digitally modified demographic and facial attributes on face recognition is relatively under-explored. In this work, we study the effect of attribute manipulations induced via generative adversarial networks (GANs) on face recognition performance. We conduct experiments on the CelebA dataset by intentionally modifying thirteen attributes using AttGAN and STGAN and evaluating their impact on two deep learning-based face verification methods, ArcFace and VGGFace. Our findings indicate that some attribute manipulations involving eyeglasses and digital alteration of sex cues can significantly impair face recognition by up to 73% and need further analysis.

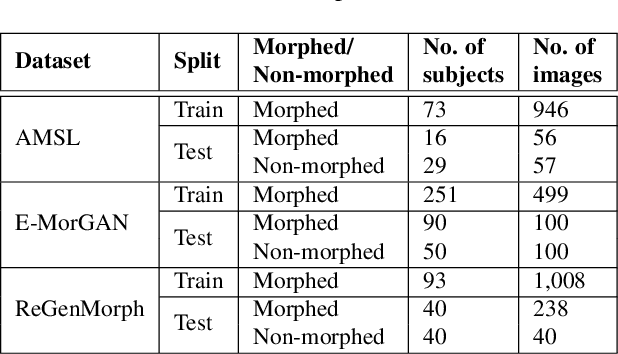

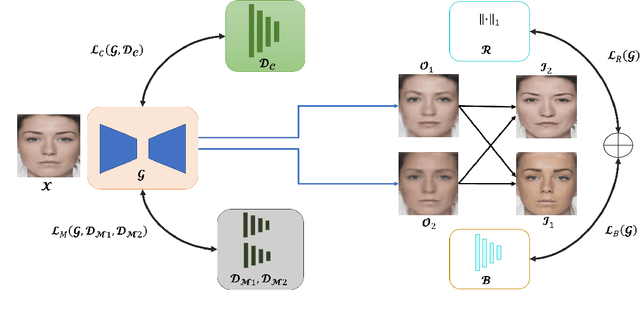

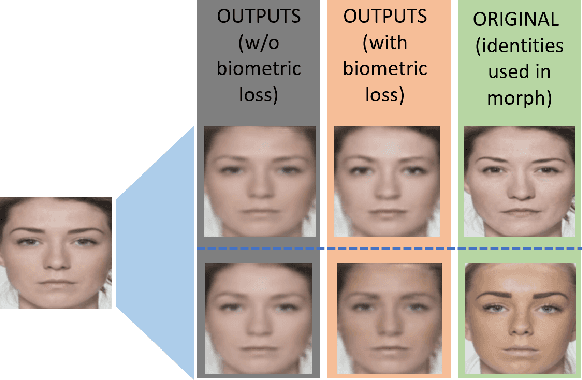

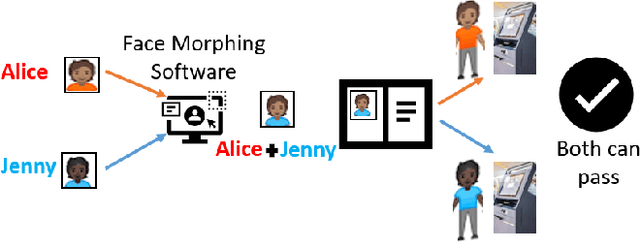

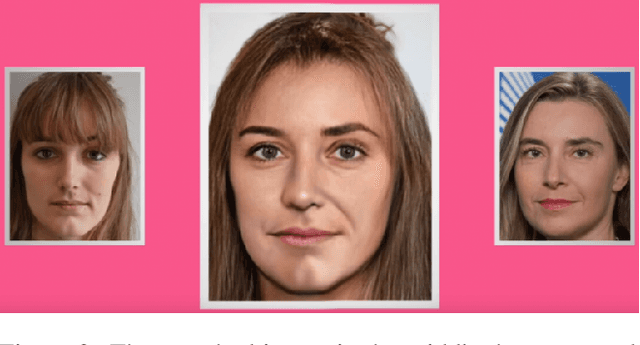

Facial De-morphing: Extracting Component Faces from a Single Morph

Sep 07, 2022

Abstract:A face morph is created by strategically combining two or more face images corresponding to multiple identities. The intention is for the morphed image to match with multiple identities. Current morph attack detection strategies can detect morphs but cannot recover the images or identities used in creating them. The task of deducing the individual face images from a morphed face image is known as de-morphing. Existing work in de-morphing assumes the availability of a reference image pertaining to one identity in order to recover the image of the accomplice, i.e., the other identity. In this work, we propose a novel de-morphing method that can recover images of both identities simultaneously from a single morphed face image without needing a reference image or prior information about the morphing process. We propose a generative adversarial network that achieves single image-based de-morphing with a surprisingly high degree of visual realism and biometric similarity with the original face images. We demonstrate the performance of our method on landmark-based morphs and generative model-based morphs with promising results.

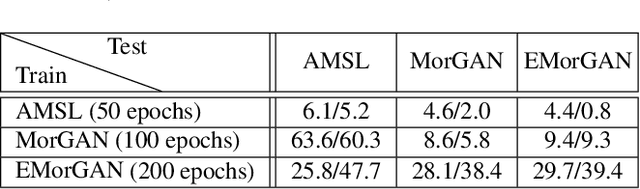

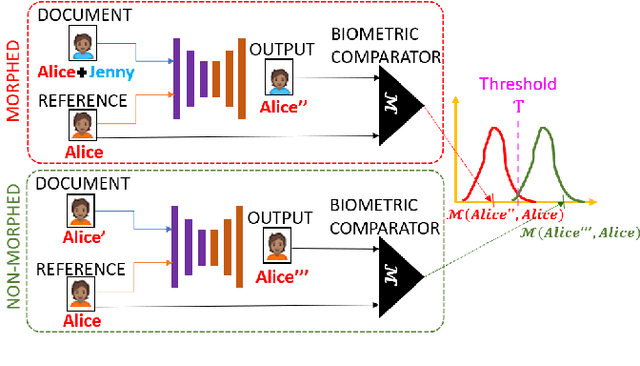

Conditional Identity Disentanglement for Differential Face Morph Detection

Jul 05, 2021

Abstract:We present the task of differential face morph attack detection using a conditional generative network (cGAN). To determine whether a face image in an identification document, such as a passport, is morphed or not, we propose an algorithm that learns to implicitly disentangle identities from the morphed image conditioned on the trusted reference image using the cGAN. Furthermore, the proposed method can also recover some underlying information about the second subject used in generating the morph. We performed experiments on AMSL face morph, MorGAN, and EMorGAN datasets to demonstrate the effectiveness of the proposed method. We also conducted cross-dataset and cross-attack detection experiments. We obtained promising results of 3% BPCER @ 10% APCER on intra-dataset evaluation, which is comparable to existing methods; and 4.6% BPCER @ 10% APCER on cross-dataset evaluation, which outperforms state-of-the-art methods by at least 13.9%.
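The results above are stated as BPCER at a fixed APCER. As a rough guide to how such an operating point can be read off detector scores, the sketch below computes the bona fide presentation classification error rate (BPCER, bona fide images flagged as morphs) at the threshold where the attack presentation classification error rate (APCER, morphs accepted as bona fide) does not exceed a target. It is not the authors' evaluation code: the function name `bpcer_at_apcer`, the toy scores, and the convention that a higher score means "more likely a morph" are assumptions.

```python
def bpcer_at_apcer(bona_fide, attacks, target_apcer):
    """Return (threshold, bpcer) at the highest threshold with APCER <= target_apcer.

    Assumes a sample is flagged as an attack when score >= threshold.
    bona_fide -- detector scores for genuine images (hypothetical data)
    attacks   -- detector scores for morphed images (hypothetical data)
    """
    scores = set(bona_fide) | set(attacks)
    # Start above every score (nothing flagged), then lower the threshold.
    candidates = [max(scores) + 1.0] + sorted(scores, reverse=True)
    for t in candidates:
        # APCER: fraction of attacks that slip through (score below threshold).
        apcer = sum(s < t for s in attacks) / len(attacks)
        if apcer <= target_apcer:
            # BPCER: fraction of bona fide samples wrongly flagged as attacks.
            bpcer = sum(s >= t for s in bona_fide) / len(bona_fide)
            return t, bpcer
    raise ValueError("target_apcer must be non-negative")


if __name__ == "__main__":
    bona_fide = [0.1, 0.2, 0.3, 0.6]
    attacks = [0.5, 0.7, 0.8, 0.9]
    print(bpcer_at_apcer(bona_fide, attacks, target_apcer=0.25))
    print(bpcer_at_apcer(bona_fide, attacks, target_apcer=0.0))
```

Lowering the threshold reduces APCER and raises BPCER, so the first threshold that satisfies the APCER target when scanning downward gives the smallest BPCER at that target.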
