Subramanyam Sahoo

The Deepfake Detective: Interpreting Neural Forensics Through Sparse Features and Manifolds

Dec 25, 2025

Abstract: Deepfake detection models have achieved high accuracy in identifying synthetic media, but their decision processes remain largely opaque. In this paper we present a mechanistic interpretability framework for deepfake detection applied to a vision-language model. Our approach combines a sparse autoencoder (SAE) analysis of internal network representations with a novel forensic manifold analysis that probes how the model's features respond to controlled forensic artifact manipulations. We demonstrate that only a small fraction of latent features are actively used in each layer, and that the geometric properties of the model's feature manifold, including intrinsic dimensionality, curvature, and feature selectivity, vary systematically with different types of deepfake artifacts. These insights provide a first step toward opening the "black box" of deepfake detectors, allowing us to identify which learned features correspond to specific forensic artifacts and to guide the development of more interpretable and robust models.
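The claim that only a small fraction of latent features are actively used in each layer can be illustrated with a minimal sketch. The abstract does not say how "active" is defined, so the function name `active_fraction`, the threshold `eps`, and the rule "a feature is active if it exceeds the threshold on at least one input" are all assumptions made here for illustration.

```python
from typing import List


def active_fraction(activations: List[List[float]], eps: float = 1e-6) -> float:
    """Fraction of SAE latent features that fire on at least one input.

    `activations` holds one row per input and one column per latent feature.
    The threshold `eps` and this definition of "active" are assumptions,
    not the paper's stated method.
    """
    if not activations or not activations[0]:
        raise ValueError("activations must be a non-empty 2D list")
    n_features = len(activations[0])
    active = [False] * n_features
    for row in activations:
        if len(row) != n_features:
            raise ValueError("all rows must have the same number of features")
        for j, value in enumerate(row):
            if abs(value) > eps:
                active[j] = True
    return sum(active) / n_features


# Toy example: 2 inputs, 4 latent features; features 1 and 3 ever fire.
toy = [
    [0.0, 0.5, 0.0, 0.0],
    [0.0, 0.2, 0.0, 0.1],
]
fraction = active_fraction(toy)
```

In practice this would be computed separately for each layer's SAE so that the fractions can be compared across layers.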

The Double Life of Code World Models: Provably Unmasking Malicious Behavior Through Execution Traces

Dec 15, 2025

Abstract: Large language models (LLMs) increasingly generate code with minimal human oversight, raising critical concerns about backdoor injection and malicious behavior. We present Cross-Trace Verification Protocol (CTVP), a novel AI control framework that verifies untrusted code-generating models through semantic orbit analysis. Rather than directly executing potentially malicious code, CTVP leverages the model's own predictions of execution traces across semantically equivalent program transformations. By analyzing consistency patterns in these predicted traces, we detect behavioral anomalies indicative of backdoors. Our approach introduces the Adversarial Robustness Quotient (ARQ), which quantifies the computational cost of verification relative to baseline generation, demonstrating exponential growth with orbit size. Theoretical analysis establishes information-theoretic bounds showing non-gamifiability -- adversaries cannot improve through training due to fundamental space complexity constraints. This work demonstrates that semantic orbit analysis provides a scalable, theoretically grounded approach to AI control for code generation tasks.
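The idea of checking consistency across predicted traces can be sketched as follows. This is not the CTVP algorithm itself, whose details the abstract does not give. It assumes each predicted trace has already been normalized into a comparable tuple of strings, and the names `consistency_score`, `is_suspicious`, and the `threshold` value are hypothetical.

```python
from itertools import combinations
from typing import Sequence, Tuple

Trace = Tuple[str, ...]


def consistency_score(traces: Sequence[Trace]) -> float:
    """Fraction of trace pairs that agree exactly.

    Each trace is the model's predicted execution trace for one semantically
    equivalent variant of the same program (assumed already normalized).
    """
    pairs = list(combinations(traces, 2))
    if not pairs:
        return 1.0  # zero or one trace: nothing to disagree with
    agreeing = sum(1 for a, b in pairs if a == b)
    return agreeing / len(pairs)


def is_suspicious(traces: Sequence[Trace], threshold: float = 0.8) -> bool:
    """Flag an orbit whose predicted traces disagree too often (hypothetical rule)."""
    return consistency_score(traces) < threshold


# Toy orbit of three variants; the third predicted trace diverges.
orbit = [
    ("x=1", "return 2"),
    ("x=1", "return 2"),
    ("x=1", "return 99"),
]
score = consistency_score(orbit)
flagged = is_suspicious(orbit)
```

Exact-match agreement is the simplest possible consistency measure; a real verifier would likely need a softer comparison that tolerates benign differences between variants.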

The Good, The Bad, and The Hybrid: A Reward Structure Showdown in Reasoning Models Training

Nov 17, 2025

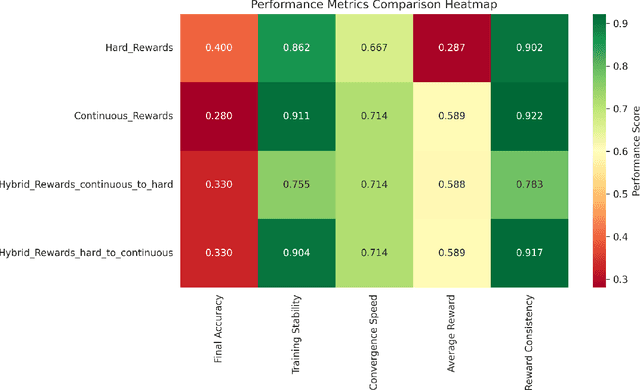

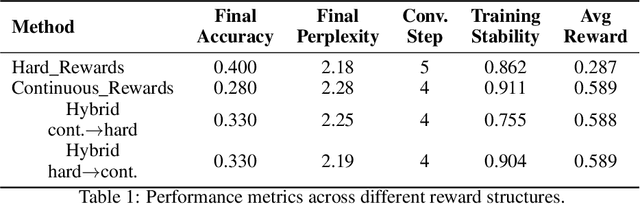

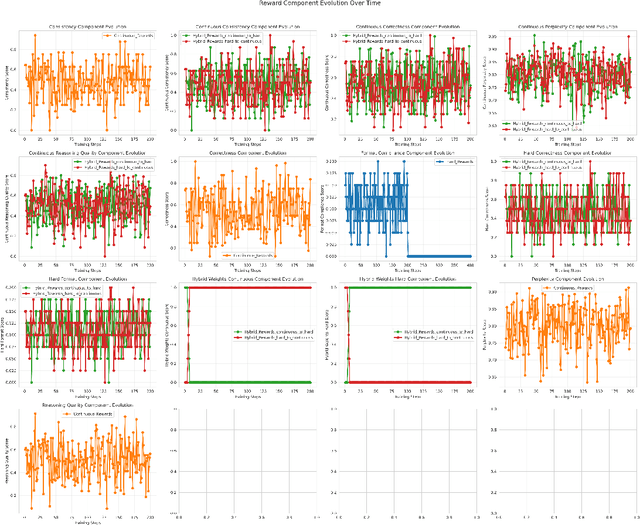

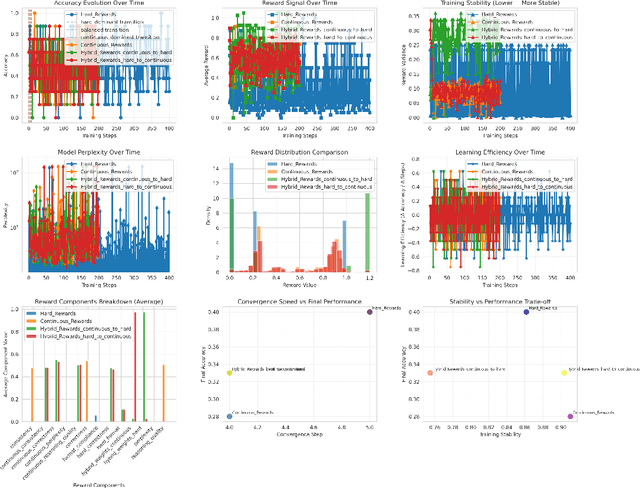

Abstract: Reward design is central to reinforcement learning from human feedback (RLHF) and alignment research. In this work, we propose a unified framework to study hard, continuous, and hybrid reward structures for fine-tuning large language models (LLMs) on mathematical reasoning tasks. Using Qwen3-4B with LoRA fine-tuning on the GSM8K dataset, we formalize and empirically evaluate reward formulations that incorporate correctness, perplexity, reasoning quality, and consistency. We introduce an adaptive hybrid reward scheduler that transitions between discrete and continuous signals, balancing exploration and stability. Our results show that hybrid reward structures improve convergence speed and training stability over purely hard or continuous approaches, offering insights for alignment via adaptive reward modeling.
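A hybrid reward that moves between a discrete and a continuous signal can be sketched as a weighted blend. The abstract does not specify the scheduler, so everything below is an assumption: the names `hybrid_weight` and `hybrid_reward`, the fixed linear schedule (the paper's scheduler is described as adaptive), and the direction of the transition from continuous to hard.

```python
def hybrid_weight(step: int, total_steps: int) -> float:
    """Hypothetical linear schedule: 0.0 at the start, 1.0 at the end.

    The weight is the share given to the hard (discrete) reward.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    return min(max(step / total_steps, 0.0), 1.0)


def hybrid_reward(hard: float, continuous: float, step: int, total_steps: int) -> float:
    """Blend a hard reward (e.g. 0/1 correctness) with a continuous score.

    Early in training the continuous signal dominates; later the hard
    signal does. This direction is an assumption, not taken from the paper.
    """
    w = hybrid_weight(step, total_steps)
    return w * hard + (1.0 - w) * continuous


# A wrong answer (hard = 0.0) with a fairly good continuous score of 0.8.
early = hybrid_reward(0.0, 0.8, step=0, total_steps=100)
middle = hybrid_reward(0.0, 0.8, step=50, total_steps=100)
late = hybrid_reward(0.0, 0.8, step=100, total_steps=100)
```

An adaptive variant would replace `hybrid_weight` with a function of training statistics, such as reward variance, rather than of the step count alone.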

Who Evaluates AI's Social Impacts? Mapping Coverage and Gaps in First and Third Party Evaluations

Nov 06, 2025

Abstract: Foundation models are increasingly central to high-stakes AI systems, and governance frameworks now depend on evaluations to assess their risks and capabilities. Although general capability evaluations are widespread, social impact assessments covering bias, fairness, privacy, environmental costs, and labor practices remain uneven across the AI ecosystem. To characterize this landscape, we conduct the first comprehensive analysis of both first-party and third-party social impact evaluation reporting across a wide range of model developers. Our study examines 186 first-party release reports and 183 post-release evaluation sources, and complements this quantitative analysis with interviews of model developers. We find a clear division of evaluation labor: first-party reporting is sparse, often superficial, and has declined over time in key areas such as environmental impact and bias, while third-party evaluators including academic researchers, nonprofits, and independent organizations provide broader and more rigorous coverage of bias, harmful content, and performance disparities. However, this complementarity has limits. Only model developers can authoritatively report on data provenance, content moderation labor, financial costs, and training infrastructure, yet interviews reveal that these disclosures are often deprioritized unless tied to product adoption or regulatory compliance. Our findings indicate that current evaluation practices leave major gaps in assessing AI's societal impacts, highlighting the urgent need for policies that promote developer transparency, strengthen independent evaluation ecosystems, and create shared infrastructure to aggregate and compare third-party evaluations in a consistent and accessible way.

Boardwalk Empire: How Generative AI is Revolutionizing Economic Paradigms

Oct 22, 2024

Abstract: The relentless pursuit of technological advancements has ushered in a new era where artificial intelligence (AI) is not only a powerful tool but also a critical economic driver. At the forefront of this transformation is Generative AI, which is catalyzing a paradigm shift across industries. Deep generative models, an integration of generative and deep learning techniques, excel in creating new data beyond analyzing existing ones, revolutionizing sectors from production and manufacturing to finance. By automating design, optimization, and innovation cycles, Generative AI is reshaping core industrial processes. In the financial sector, it is transforming risk assessment, trading strategies, and forecasting, demonstrating its profound impact. This paper explores the sweeping changes driven by deep learning models like Large Language Models (LLMs), highlighting their potential to foster innovative business models, disruptive technologies, and novel economic landscapes. As we stand at the threshold of an AI-driven economic era, Generative AI is emerging as a pivotal force, driving innovation, disruption, and economic evolution on a global scale.

The Shifting Paradigm in AI : Why Generative Artificial Intelligence is the new Economic Variable

Oct 19, 2024

Abstract: The relentless pursuit of technological advancements has ushered in a new era where artificial intelligence (AI) is not only a powerful tool but also a critical economic driver. At the forefront of this transformation is Generative AI, which is catalyzing a paradigm shift across industries. Deep generative models, an integration of generative and deep learning techniques, excel in creating new data beyond analyzing existing ones, revolutionizing sectors from production and manufacturing to finance. By automating design, optimization, and innovation cycles, Generative AI is reshaping core industrial processes. In the financial sector, it is transforming risk assessment, trading strategies, and forecasting, demonstrating its profound impact. This paper explores the sweeping changes driven by deep learning models like Large Language Models (LLMs), highlighting their potential to foster innovative business models, disruptive technologies, and novel economic landscapes. As we stand at the threshold of an AI-driven economic era, Generative AI is emerging as a pivotal force, driving innovation, disruption, and economic evolution on a global scale.