Siyuan Sun

Creating a biologically more accurate spider robot to study active vibration sensing

Jan 23, 2026

Abstract: Orb-weaving spiders detect prey on a web using vibration sensors at leg joints. They often dynamically crouch their legs during prey sensing, likely an active sensing strategy. However, how leg crouching enhances sensing is poorly understood, because measuring system vibrations in behaving animals is difficult. We use robophysical modeling to study this problem. Our previous spider robot had only four legs, simplified leg morphology, and a shallow crouching range of motion. Here, we developed a new spider robot, with eight legs, each with four joints that better approximated spider leg morphology. Leg exoskeletons were 3-D printed and joint stiffness was tuned using integrated silicone molding with variable materials and geometry. Tendon-driven actuation allowed a motor in the body to crouch all eight legs deeply as spiders do, while accelerometers at leg joints record leg vibrations. Experiments showed that our new spider robot reproduced key vibration features observed in the previous robot while improving biological accuracy. Our new robot provides a biologically more accurate robophysical model for studying how leg behaviors modulate vibration sensing on a web.

Global Convergence in Neural ODEs: Impact of Activation Functions

Sep 26, 2025

Abstract: Neural Ordinary Differential Equations (ODEs) have been successful in various applications due to their continuous nature and parameter-sharing efficiency. However, these unique characteristics also introduce challenges in training, particularly with respect to gradient computation accuracy and convergence analysis. In this paper, we address these challenges by investigating the impact of activation functions. We demonstrate that the properties of activation functions, specifically smoothness and nonlinearity, are critical to the training dynamics. Smooth activation functions guarantee globally unique solutions for both forward and backward ODEs, while sufficient nonlinearity is essential for maintaining the spectral properties of the Neural Tangent Kernel (NTK) during training. Together, these properties enable us to establish the global convergence of Neural ODEs under gradient descent in overparameterized regimes. Our theoretical findings are validated by numerical experiments, which not only support our analysis but also provide practical guidelines for scaling Neural ODEs, potentially leading to faster training and improved performance in real-world applications.

Adversarial Multimodal Network for Movie Question Answering

Jun 24, 2019

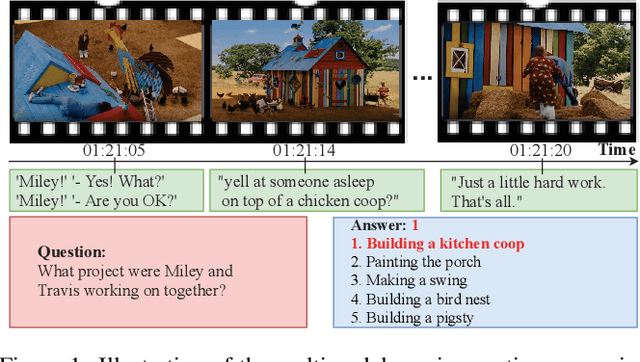

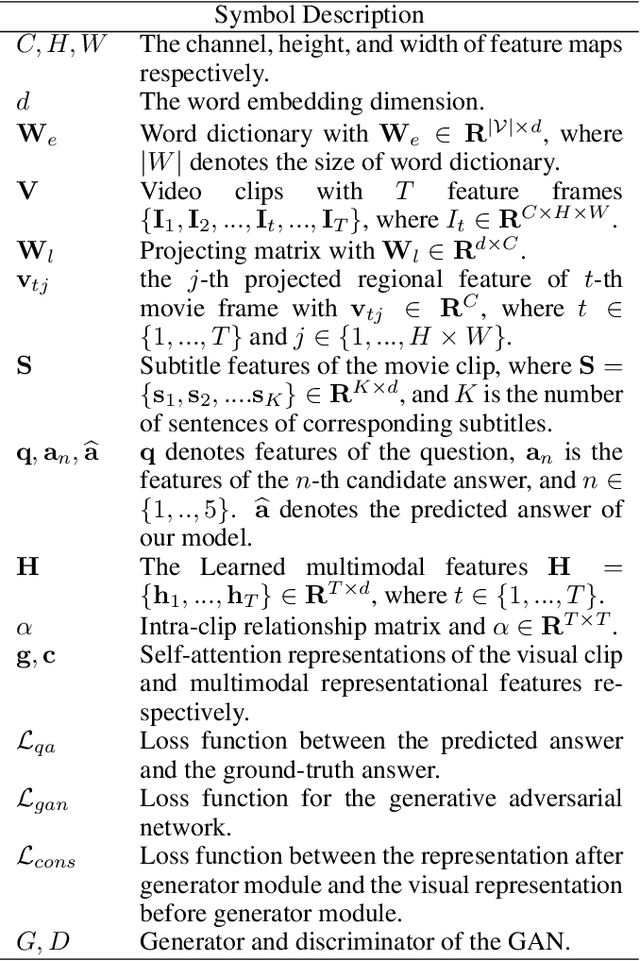

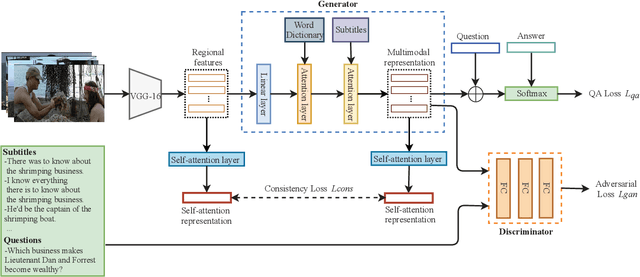

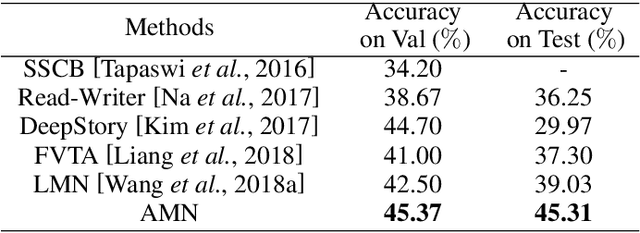

Abstract: Visual question answering by using information from multiple modalities has attracted more and more attention in recent years. However, it is a very challenging task, as the visual content and natural language have quite different statistical properties. In this work, we present a method called Adversarial Multimodal Network (AMN) to better understand video stories for question answering. In AMN, as inspired by generative adversarial networks, we propose to learn multimodal feature representations by finding a more coherent subspace for video clips and the corresponding texts (e.g., subtitles and questions). Moreover, we introduce a self-attention mechanism to enforce the so-called consistency constraints in order to preserve the self-correlation of visual cues of the original video clips in the learned multimodal representations. Extensive experiments on the MovieQA dataset show the effectiveness of our proposed AMN over other published state-of-the-art methods.
