Simon L. Kappel

A Direct Comparison of Simultaneously Recorded Scalp, Around-Ear, and In-Ear EEG for Neural Selective Auditory Attention Decoding to Speech

May 20, 2025

Abstract: Current assistive hearing devices, such as hearing aids and cochlear implants, lack the ability to adapt to the listener's focus of auditory attention, limiting their effectiveness in complex acoustic environments like cocktail party scenarios where multiple conversations occur simultaneously. Neuro-steered hearing devices aim to overcome this limitation by decoding the listener's auditory attention from neural signals, such as electroencephalography (EEG). While many auditory attention decoding (AAD) studies have used high-density scalp EEG, such systems are impractical for daily use as they are bulky and uncomfortable. Therefore, AAD with wearable and unobtrusive EEG systems that are comfortable to wear and can be used for long-term recording is required. Around-ear EEG systems like cEEGrids have shown promise in AAD, but in-ear EEG, recorded via custom earpieces offering superior comfort, remains underexplored. We present a new AAD dataset with simultaneously recorded scalp, around-ear, and in-ear EEG, enabling a direct comparison. Using a classic linear stimulus reconstruction algorithm, we find a significant performance gap between all three systems, with AAD accuracies of 83.4% (scalp), 67.2% (around-ear), and 61.1% (in-ear) on 60 s decision windows. These results highlight the trade-off between decoding performance and practical usability. Yet, while the ear-based systems using basic algorithms might not currently be accurate enough for a decision-speed-sensitive application in hearing aids, their significantly above-chance performance suggests potential for attention monitoring on longer timescales. Furthermore, adding an external reference or a few scalp electrodes via greedy forward selection substantially and quickly boosts accuracy by over 10 percentage points, especially for in-ear EEG. These findings position in-ear EEG as a promising component in EEG sensor networks for AAD.
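The abstract names a classic linear stimulus reconstruction algorithm without spelling it out. As a rough illustration only, and not the authors' implementation, the general idea can be sketched as follows: a ridge-regularized linear decoder maps time-lagged EEG to the attended speech envelope, and the attended speaker is taken to be the one whose envelope correlates best with the reconstruction. The function names, the number of lags, the ridge value, and the synthetic data are all assumptions made for this sketch.

```python
import numpy as np

def lag_matrix(eeg, n_lags):
    """Stack time-lagged copies of the EEG: (samples, channels * n_lags)."""
    n_samples, n_channels = eeg.shape
    lagged = np.zeros((n_samples, n_channels * n_lags))
    for lag in range(n_lags):
        # EEG at time t + lag is used to reconstruct the envelope at time t.
        cols = slice(lag * n_channels, (lag + 1) * n_channels)
        lagged[: n_samples - lag, cols] = eeg[lag:]
    return lagged

def train_decoder(eeg, attended_env, n_lags=8, ridge=1.0):
    """Fit a ridge-regression decoder from lagged EEG to the attended envelope."""
    X = lag_matrix(eeg, n_lags)
    XtX = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(XtX, X.T @ attended_env)

def decode_attention(eeg, env_a, env_b, decoder, n_lags=8):
    """Return 0 if the reconstruction matches speaker A best, else 1."""
    recon = lag_matrix(eeg, n_lags) @ decoder
    r_a = np.corrcoef(recon, env_a)[0, 1]
    r_b = np.corrcoef(recon, env_b)[0, 1]
    return 0 if r_a > r_b else 1

# Synthetic stand-in data (assumption): random "envelopes", and a 4-channel
# "EEG" that follows speaker A with a 2-sample delay plus noise.
rng = np.random.default_rng(0)
n = 4000
env_a = rng.standard_normal(n)
env_b = rng.standard_normal(n)
weights = rng.standard_normal(4)
eeg = np.outer(np.roll(env_a, 2), weights) + 0.5 * rng.standard_normal((n, 4))

half = n // 2
decoder = train_decoder(eeg[:half], env_a[:half])            # train on first half
choice = decode_attention(eeg[half:], env_a[half:], env_b[half:], decoder)
```

In a setup like this, the length of the test segment plays the role of the decision window: shorter windows give noisier correlations and therefore less reliable decisions.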

A Knowledge Distillation Framework For Enhancing Ear-EEG Based Sleep Staging With Scalp-EEG Data

Oct 27, 2022

Abstract: Sleep plays a crucial role in the well-being of human lives. Traditional sleep studies using polysomnography are associated with discomfort and often lower sleep quality caused by the acquisition setup. Previous works have focused on developing less obtrusive methods to conduct high-quality sleep studies, and ear-EEG is among the popular alternatives. However, the performance of sleep staging based on ear-EEG is still inferior to scalp-EEG based sleep staging. In order to address the performance gap between scalp-EEG and ear-EEG based sleep staging, we propose a cross-modal knowledge distillation strategy, which is a domain adaptation approach. Our experiments and analysis validate the effectiveness of the proposed approach with existing architectures, where it enhances the accuracy of the ear-EEG based sleep staging by 3.46% and Cohen's kappa coefficient by a margin of 0.038.
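The abstract reports its gain in Cohen's kappa, which measures agreement between two label sequences after correcting for agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the chance agreement. The sketch below shows that computation on a made-up example; the function name and the label sequences are illustrative assumptions and are not taken from the paper.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa between two equally long label sequences."""
    n = len(labels_a)
    # Observed agreement: fraction of epochs where both sequences match.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of the marginal label frequencies, summed.
    count_a = Counter(labels_a)
    count_b = Counter(labels_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / n**2
    return (observed - expected) / (1 - expected)

# Hypothetical sleep-stage labels for eight epochs (not real data).
scored = ["W", "N1", "N2", "N2", "N3", "REM", "N2", "W"]
predicted = ["W", "N2", "N2", "N2", "N3", "REM", "N1", "W"]
kappa = cohens_kappa(scored, predicted)
```

Here six of eight epochs agree (p_o = 0.75) and the chance agreement is p_e = 0.25, so kappa = 0.5 / 0.75, about 0.67.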

Towards Interpretable Sleep Stage Classification Using Cross-Modal Transformers

Aug 15, 2022

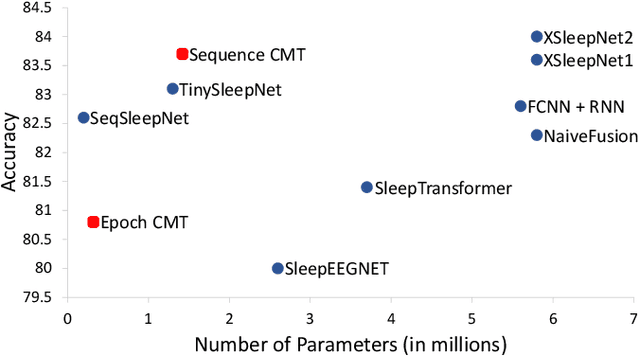

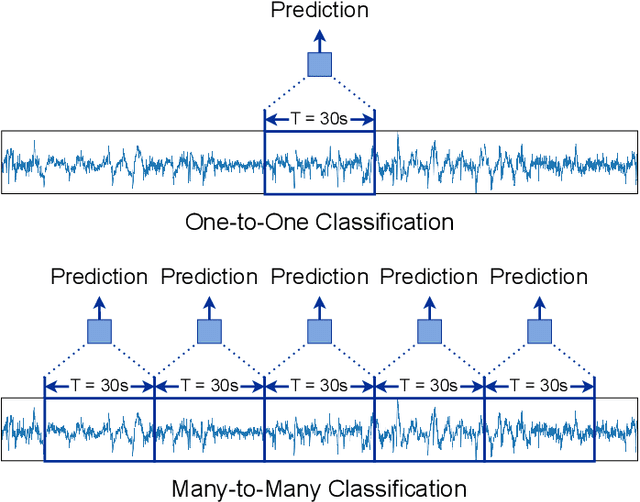

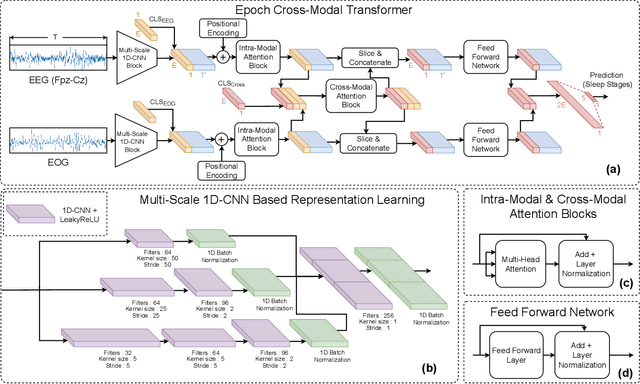

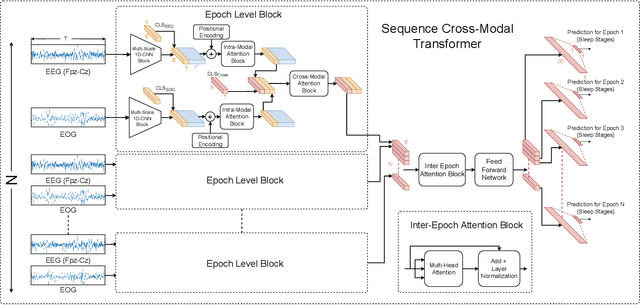

Abstract: Accurate sleep stage classification is significant for sleep health assessment. In recent years, several deep learning and machine learning based sleep staging algorithms have been developed, and they have achieved performance on par with human annotation. Despite improved performance, a limitation of most deep-learning based algorithms is their black-box behavior, which has limited their use in clinical settings. Here, we propose Cross-Modal Transformers, a transformer-based method for sleep stage classification. Our models both achieve performance competitive with state-of-the-art approaches and eliminate the black-box behavior of deep-learning models by utilizing the interpretability aspect of the attention modules. The proposed cross-modal transformers consist of a novel cross-modal transformer encoder architecture along with a multi-scale 1-dimensional convolutional neural network for automatic representation learning. Our sleep stage classifier based on this design was able to achieve sleep stage classification performance on par with or better than the state-of-the-art approaches, along with interpretability, a fourfold reduction in the number of parameters, and a reduced training time compared to the current state-of-the-art. Our code is available at https://github.com/Jathurshan0330/Cross-Modal-Transformer.
