Sehun Yu

Weakly Supervised Temporal Anomaly Segmentation with Dynamic Time Warping

Aug 15, 2021

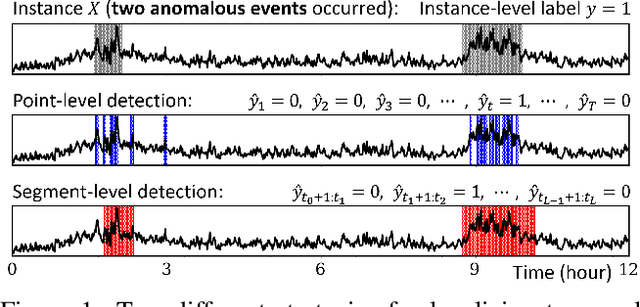

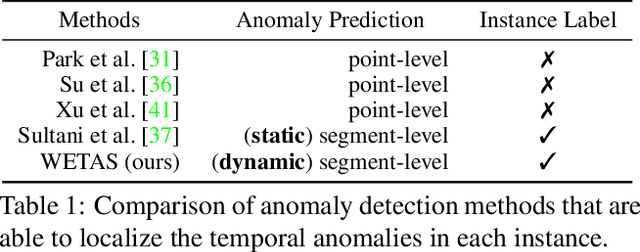

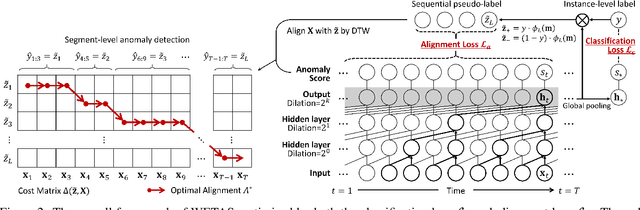

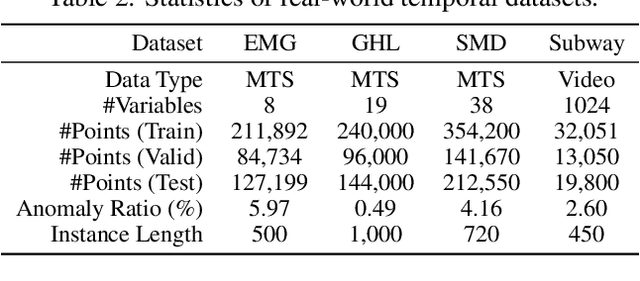

Abstract: Most recent studies on detecting and localizing temporal anomalies have employed deep neural networks to learn the normal patterns of temporal data in an unsupervised manner. In contrast, the goal of our work is to fully utilize instance-level (or weak) anomaly labels, which only indicate whether any anomalous event occurred in each instance of temporal data. In this paper, we present WETAS, a novel framework that effectively identifies anomalous temporal segments (i.e., consecutive time points) in an input instance. WETAS learns discriminative features from the instance-level labels so that it can infer the sequential order of normal and anomalous segments within each instance, which can be used as a rough segmentation mask. Based on the dynamic time warping (DTW) alignment between the input instance and its segmentation mask, WETAS obtains the temporal segmentation result and simultaneously enhances itself by using the mask as additional supervision. Our experiments show that WETAS considerably outperforms other baselines in localizing temporal anomalies, and that it provides more informative results than point-level detection methods.
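To make the alignment idea concrete, here is a minimal sketch of a restricted DTW that assigns every time point of a 1-D sequence to one entry of a much shorter label sequence, so the short sequence acts as a rough segmentation mask. It is not the WETAS implementation: the function name `dtw_align`, the absolute-difference cost, and the use of raw anomaly scores in place of learned features are all assumptions made for illustration.

```python
import numpy as np

def dtw_align(x, mask):
    """Assign each time point of x to one entry of the shorter sequence mask.

    Hypothetical helper: a monotonic DTW variant in which every time point
    either stays in the current mask entry or advances to the next one.
    """
    x = np.asarray(x, dtype=float)
    mask = np.asarray(mask, dtype=float)
    n, m = len(x), len(mask)
    # Pointwise cost between each time point and each mask entry (assumed cost).
    cost = np.abs(x[:, None] - mask[None, :])
    acc = np.full((n, m), np.inf)
    acc[0, 0] = cost[0, 0]
    for i in range(1, n):
        for j in range(m):
            best_prev = acc[i - 1, j]
            if j > 0:
                best_prev = min(best_prev, acc[i - 1, j - 1])
            acc[i, j] = cost[i, j] + best_prev
    # Backtrack from the last time point and last mask entry.
    path = np.empty(n, dtype=int)
    j = m - 1
    path[n - 1] = j
    for i in range(n - 1, 0, -1):
        if j > 0 and acc[i - 1, j - 1] < acc[i - 1, j]:
            j -= 1
        path[i - 1] = j
    # Per-time-point labels: the mask value each time point was aligned to.
    return mask[path]

scores = [0.1, 0.0, 0.2, 0.9, 1.0, 0.8, 0.1, 0.0]  # made-up anomaly scores
labels = dtw_align(scores, [0, 1, 0])               # normal, anomalous, normal
```

In this toy input, the mask `[0, 1, 0]` only states the order of segments; the alignment decides where each segment starts and ends.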

Multi-Class Data Description for Out-of-distribution Detection

Apr 02, 2021

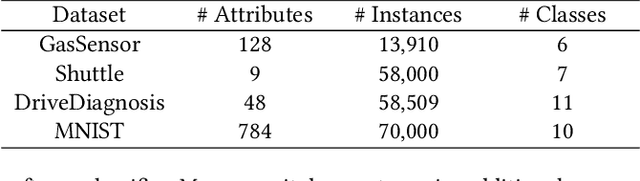

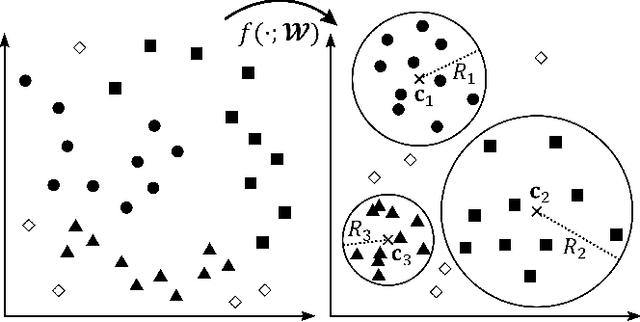

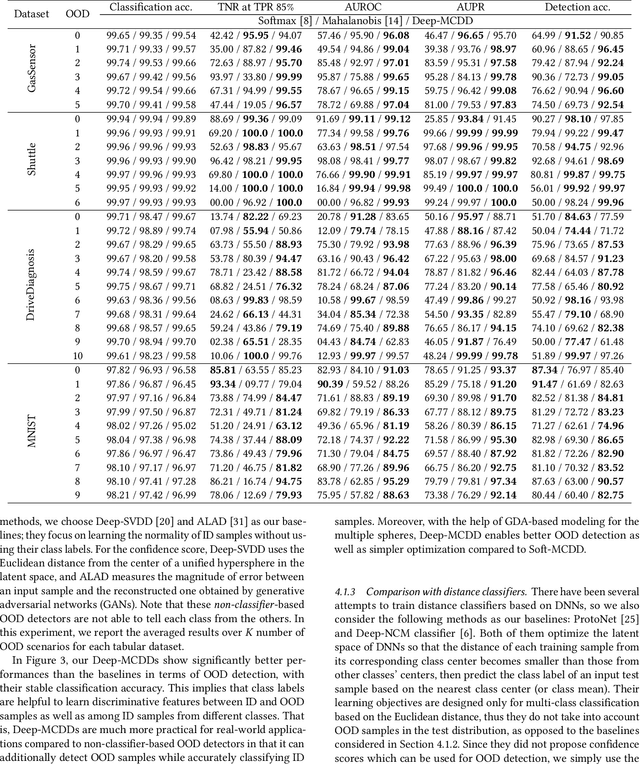

Abstract: The capability of reliably detecting out-of-distribution samples is one of the key factors in deploying a good classifier, as the test distribution often does not match the training distribution in real-world applications. In this work, we present a deep multi-class data description, termed Deep-MCDD, which is effective at detecting out-of-distribution (OOD) samples as well as classifying in-distribution (ID) samples. Unlike the softmax classifier, which only focuses on linear decision boundaries that partition its latent space into multiple regions, Deep-MCDD aims to find a spherical decision boundary for each class that determines whether a test sample belongs to the class or not. By integrating the concept of Gaussian discriminant analysis into deep neural networks, we propose a deep learning objective to learn class-conditional distributions that are explicitly modeled as separable Gaussian distributions. We can thereby define the confidence score by the distance of a test sample from each class-conditional distribution and use it to identify OOD samples. Our empirical evaluation on multi-class tabular and image datasets demonstrates that Deep-MCDD achieves the best performance in distinguishing OOD samples while attaining classification accuracy as high as that of competing methods.

* 9 pages, 7 figures, published in SIGKDD 20
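The distance-based confidence score described in the Deep-MCDD abstract can be illustrated with a small sketch. It assumes each class is an isotropic Gaussian in the latent space and uses the negative log-density (up to a constant) as the distance; the names `class_distances` and `predict`, the threshold, and the toy class parameters are hypothetical, and the learned encoder is omitted, so this is not the authors' implementation.

```python
import numpy as np

def class_distances(z, means, sigmas):
    """Distance of latent vector z from each class-conditional Gaussian.

    Assumes isotropic Gaussians N(mu_k, sigma_k^2 I); the distance is the
    negative log-density up to an additive constant.
    """
    z = np.asarray(z, dtype=float)
    means = np.asarray(means, dtype=float)
    sigmas = np.asarray(sigmas, dtype=float)
    d = means.shape[1]
    sq_dist = ((z[None, :] - means) ** 2).sum(axis=1)
    return sq_dist / (2.0 * sigmas ** 2) + d * np.log(sigmas)

def predict(z, means, sigmas, threshold):
    """Return (class index or None for OOD, confidence score)."""
    dist = class_distances(z, means, sigmas)
    k = int(np.argmin(dist))
    confidence = -float(dist[k])  # closer to a class center means more confident
    return (k if confidence >= threshold else None), confidence

# Toy class parameters (made up for illustration).
means = [[0.0, 0.0], [5.0, 5.0]]
sigmas = [1.0, 1.0]
near = predict([0.5, 0.0], means, sigmas, threshold=-4.5)  # close to class 0
far = predict([2.5, 2.5], means, sigmas, threshold=-4.5)   # between both classes
```

A sample that lies far from every class center receives a low confidence under all classes and is flagged as OOD, which a softmax over linear boundaries would not do on its own.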
