Schyler C. Sun

Revealing the Excitation Causality between Climate and Political Violence via a Neural Forward-Intensity Poisson Process

Mar 09, 2022

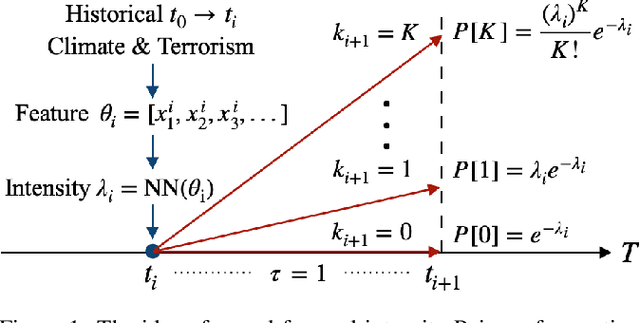

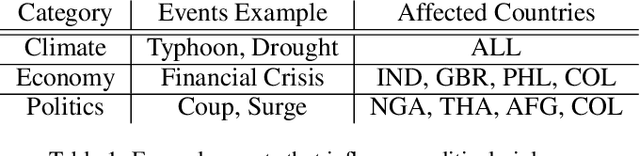

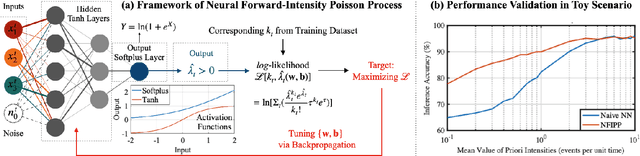

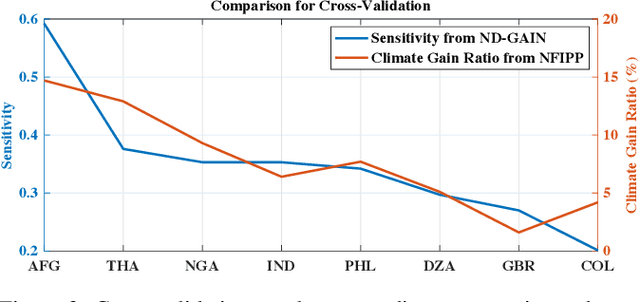

Abstract: The causal link between climate and political violence involves complex mechanisms. Current quantitative causal models rely on one or more assumptions: (1) the climate drivers persistently generate conflict, (2) the causal mechanisms have a linear relationship with the conflict generation parameter, and/or (3) there is sufficient data to inform the prior distribution. Yet we know that conflict drivers often excite a social transformation process which leads to violence (e.g., drought forces agricultural producers to join urban militia), while further climate effects do not necessarily contribute to further violence. Therefore, not only is this bifurcation relationship highly non-linear, but there is also often a lack of data to support prior assumptions for high-resolution modeling. Here, we aim to overcome these causal modeling challenges by proposing a neural forward-intensity Poisson process (NFIPP) model. The NFIPP is designed to capture the potential non-linear causal mechanism in climate-induced political violence, whilst being robust to sparse and timing-uncertain data. Our results span 20 recent years and reveal an excitation-based causal link between extreme climate events and political violence across diverse countries. Our climate-induced conflict model results are cross-validated against qualitative climate vulnerability indices. Furthermore, we label historical events that either improve or reduce our predictability gain, demonstrating the importance of domain expertise in informing interpretation.
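The contrast the abstract draws between a persistent driver and an excitation can be illustrated with a toy Poisson count model. The sketch below is not the NFIPP: the exponentially decaying jump, the parameter values, the monthly counts, and the function names `excitation_intensity` and `poisson_log_likelihood` are all assumptions made for illustration, and the intensity is simply evaluated once per time bin.

```python
import math

def excitation_intensity(t, base_rate, trigger_times, jump, decay):
    """Assumed toy intensity: a constant base rate plus a jump at each
    trigger time that decays exponentially afterwards."""
    excited = sum(
        jump * math.exp(-decay * (t - s)) for s in trigger_times if s <= t
    )
    return base_rate + excited

def poisson_log_likelihood(counts, rates):
    """Log-likelihood of independent Poisson counts, one rate per bin."""
    return sum(
        k * math.log(r) - r - math.lgamma(k + 1) for k, r in zip(counts, rates)
    )

# Hypothetical monthly conflict counts; a climate shock is assumed at month 3.
counts = [1, 0, 1, 5, 3, 2, 1, 1]
trigger_times = [3]

# Model A: constant rate set to the sample mean (its maximum-likelihood value).
constant_rate = sum(counts) / len(counts)
ll_constant = poisson_log_likelihood(counts, [constant_rate] * len(counts))

# Model B: the shock excites the rate, which then relaxes back to the base rate.
excited_rates = [
    excitation_intensity(t, base_rate=1.0, trigger_times=trigger_times,
                         jump=3.0, decay=0.7)
    for t in range(len(counts))
]
ll_excited = poisson_log_likelihood(counts, excited_rates)

print(f"constant-rate log-likelihood: {ll_constant:.3f}")
print(f"excitation log-likelihood:    {ll_excited:.3f}")
```

On this made-up series the excitation model scores a higher log-likelihood than the constant-rate model, which is the kind of comparison a predictability gain would summarize. Real data would need the parameters to be fitted rather than fixed by hand.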

Scarce Data Driven Deep Learning of Drones via Generalized Data Distribution Space

Aug 18, 2021

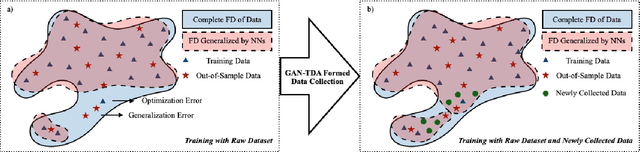

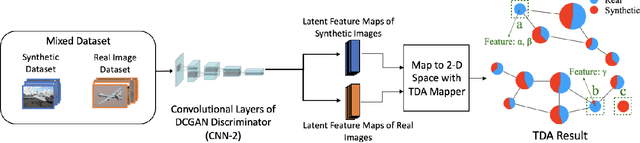

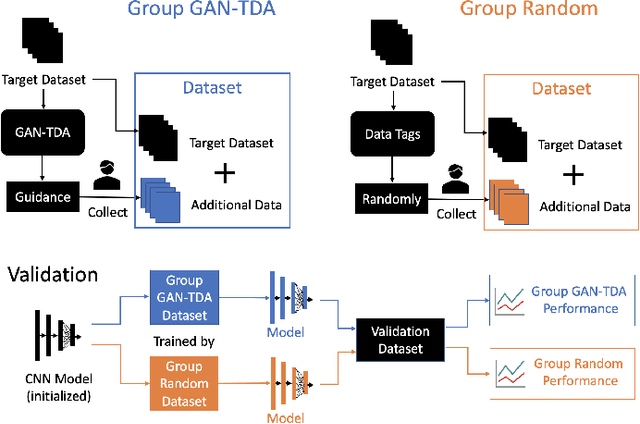

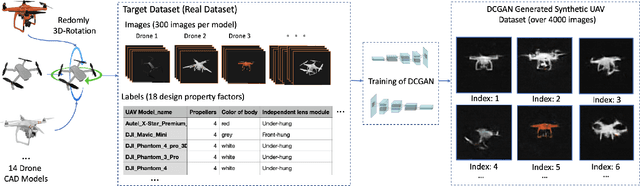

Abstract: Increased drone proliferation in civilian and professional settings has created new threat vectors for airports and national infrastructures. The economic damage for a single major airport from drone incursions is estimated to be millions per day. Due to the lack of diverse drone training data, accurate training of deep learning detection algorithms under scarce data is an open challenge. Existing methods largely rely on collecting diverse and comprehensive experimental drone footage data, artificially induced data augmentation, transfer and meta-learning, as well as physics-informed learning. However, these methods cannot guarantee capturing diverse drone designs and fully understanding the deep feature space of drones. Here, we show how understanding the general distribution of the drone data via a Generative Adversarial Network (GAN), and explaining the missing features using Topological Data Analysis (TDA), can allow us to acquire missing data to achieve rapid and more accurate learning. We demonstrate our results on a drone image dataset, which contains both real drone images and simulated images from computer-aided design. When compared to random data collection (the usual practice, with a discriminator accuracy of 94.67% after 200 epochs), our proposed GAN-TDA informed data collection method offers a significant 4% improvement (99.42% after 200 epochs). We believe that this approach of exploiting general data distribution knowledge from neural networks can be applied to a wide range of scarce-data open challenges.

Scalable Partial Explainability in Neural Networks via Flexible Activation Functions

Jun 10, 2020

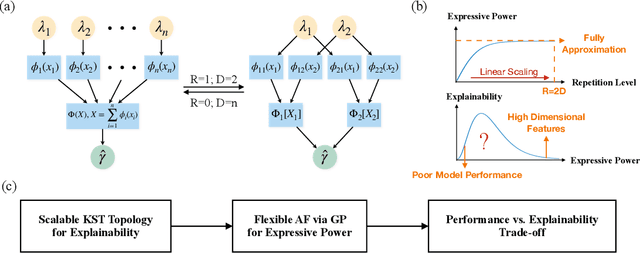

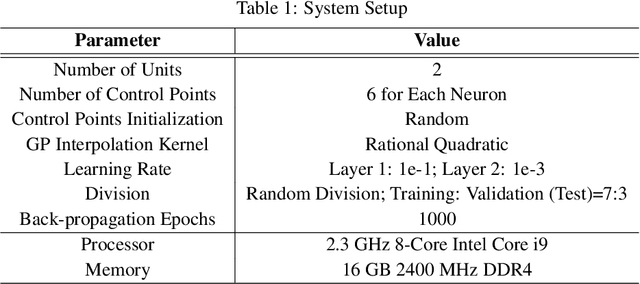

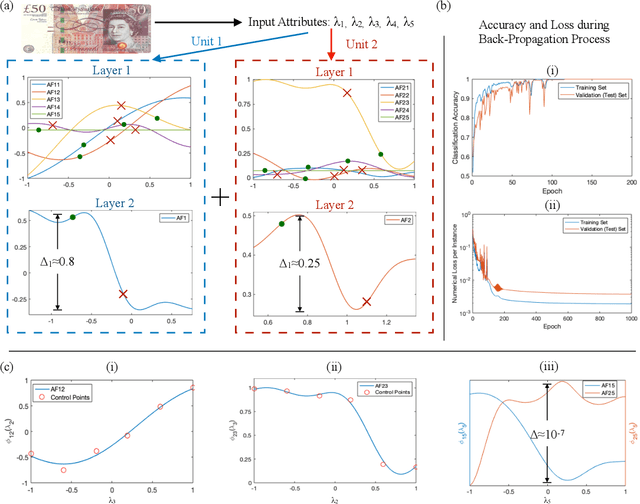

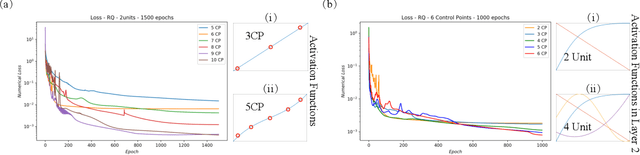

Abstract: Achieving transparency in black-box deep learning algorithms is still an open challenge. High-dimensional features and decisions given by deep neural networks (NN) require new algorithms and methods to expose their mechanisms. Current state-of-the-art NN interpretation methods (e.g. saliency maps, DeepLIFT, LIME, etc.) focus more on the direct relationship between NN outputs and inputs than on the NN structure and operations themselves. In current deep NN operations, there is uncertainty over the exact role played by neurons with fixed activation functions. In this paper, we achieve a partially explainable learning model by symbolically explaining the role of activation functions (AF) under a scalable topology. This is carried out by modeling the AFs as adaptive Gaussian Processes (GP), which sit within a novel scalable NN topology based on the Kolmogorov-Arnold Superposition Theorem (KST). In this scalable NN architecture, the AFs are generated by GP interpolation between control points and can thus be tuned during the back-propagation procedure via gradient descent. The control points act as the core enabler of both local and global adjustability of the AFs, where the GP interpolation constrains the intrinsic autocorrelation to avoid over-fitting. We show that there exists a trade-off between the NN's expressive power and interpretation complexity under linear KST topology scaling. To demonstrate this, we perform a case study on a binary classification dataset of banknote authentication. By quantitatively and qualitatively investigating the mapping relationship between inputs and output, our explainable model can provide interpretation over each of the one-dimensional attributes. These early results suggest that our model has the potential to act as the final interpretation layer for deep neural networks.
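The idea of an activation function generated by GP interpolation between trainable control points can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: the RBF kernel, the length scale, the jitter term, the class name `GPActivation`, and the single-sample squared-error update are all choices made here for the example.

```python
import math

def rbf(a, b, length_scale=1.0):
    """Squared-exponential kernel (an assumed choice of GP covariance)."""
    return math.exp(-0.5 * ((a - b) / length_scale) ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / M[i][i]
    return x

class GPActivation:
    """Activation f(x) = k(x)^T K^{-1} y, the GP posterior mean through
    control points (xs, ys). The control values ys are the trainable part."""

    def __init__(self, xs, ys, length_scale=1.0, jitter=1e-9):
        self.xs, self.ys = list(xs), list(ys)
        self.length_scale = length_scale
        n = len(self.xs)
        # Kernel matrix between control locations, with jitter for stability.
        self.K = [[rbf(self.xs[i], self.xs[j], length_scale)
                   + (jitter if i == j else 0.0) for j in range(n)]
                  for i in range(n)]

    def _k(self, x):
        return [rbf(x, xi, self.length_scale) for xi in self.xs]

    def __call__(self, x):
        alpha = solve(self.K, self.ys)
        return sum(a * k for a, k in zip(alpha, self._k(x)))

    def sgd_step(self, x, target, lr=0.1):
        """One gradient-descent step on (f(x) - target)^2 w.r.t. ys.
        Since K is symmetric, df/dy = K^{-1} k(x)."""
        err = self(x) - target
        grad_f = solve(self.K, self._k(x))
        self.ys = [y - lr * 2.0 * err * g for y, g in zip(self.ys, grad_f)]

# Start from control values sampled from tanh, then nudge the curve at x = 0.5.
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
act = GPActivation(xs, [math.tanh(x) for x in xs])
before = act(0.5)
act.sgd_step(0.5, target=1.0)
after = act(0.5)
print(before, after)
```

Because the curve is fully determined by a handful of control values, each learned activation can be plotted and inspected as a one-dimensional function, which is where the interpretability argument comes from. Nearby control values move the most in an update, so the adjustment stays largely local.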
