Sarah Ostadabbas

A Semi-Supervised Data Augmentation Approach using 3D Graphical Engines

Sep 28, 2018

Abstract: Deep learning approaches have been rapidly adopted across a wide range of fields because of their accuracy and flexibility, but they require large labeled training datasets. This presents a fundamental problem for applications with limited, expensive, or private data (i.e., small data), such as human pose and behavior estimation/tracking, which can be highly personalized. In this paper, we present a semi-supervised data augmentation approach that can synthesize large-scale labeled training datasets using 3D graphical engines based on a physically valid low-dimensional pose descriptor. To evaluate the performance of our synthesized datasets in training deep learning-based models, we generated a large synthetic human pose dataset, called ScanAva, from 3D scans of only 7 individuals using our proposed augmentation approach. A state-of-the-art human pose estimation deep learning model was then trained from scratch on our ScanAva dataset and achieved a pose estimation accuracy of 91.2% under the PCK0.5 criterion after applying an efficient domain adaptation to the synthetic images. This accuracy was comparable to that of the same model trained on large-scale pose data from real humans, such as the MPII dataset, and much higher than that of the model trained on other synthetic human datasets such as SURREAL.
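The PCK (percentage of correct keypoints) criterion quoted above counts a predicted keypoint as correct when it lies within a fraction of a reference length from the ground truth. The following is a minimal sketch of that metric, not the authors' evaluation code. The function name `pck`, the array shapes, and the choice of normalizing length (`ref_len`, for example a torso or bounding-box size) are assumptions, since the abstract does not specify them.

```python
import numpy as np


def pck(pred, gt, ref_len, alpha=0.5):
    """Fraction of keypoints within alpha * ref_len of the ground truth.

    pred, gt: arrays of shape (N, K, 2) with K 2D keypoints for N images.
    ref_len:  array of shape (N,), a per-image normalizing length
              (assumed here; the abstract does not say which one is used).
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    ref_len = np.asarray(ref_len, dtype=float)
    # Euclidean distance between each predicted and true keypoint: (N, K)
    dist = np.linalg.norm(pred - gt, axis=-1)
    # A keypoint is correct if its error is within the scaled threshold
    correct = dist <= alpha * ref_len[:, None]
    return float(correct.mean())


# Toy example: one image, four keypoints with errors of 0, 1, 2 and 3 pixels
gt = np.zeros((1, 4, 2))
pred = np.array([[[0, 0], [1, 0], [2, 0], [3, 0]]])
score = pck(pred, gt, ref_len=[4.0], alpha=0.5)
print(score)
```

With a reference length of 4 and `alpha=0.5`, the threshold is 2 pixels, so three of the four toy keypoints count as correct. A smaller `alpha`, such as the 0.1 used in PCK0.1, makes the criterion stricter.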

Inner Space Preserving Generative Pose Machine

Aug 06, 2018

Abstract: Image-based generative methods, such as generative adversarial networks (GANs), are already able to generate realistic images with considerable control over context, especially when they are conditioned. However, most successful frameworks share a common procedure that performs an image-to-image translation while leaving the pose of the figures in the image untouched. When the objective is reposing a figure in an image while preserving the rest of the image, the state of the art mainly assumes a single rigid body with a simple background and limited pose shift, which can hardly be extended to images under normal settings. In this paper, we introduce an image "inner space" preserving model that assigns an interpretable low-dimensional pose descriptor (LDPD) to an articulated figure in the image. Figure reposing is then generated by passing the LDPD and the original image through multi-stage augmented hourglass networks in a conditional GAN structure, called the inner space preserving generative pose machine (ISP-GPM). We evaluated ISP-GPM on reposing human figures, which are highly articulated and vary widely. Testing a state-of-the-art pose estimator on our reposed dataset gave an accuracy of over 80% on the PCK0.5 metric. The results also showed that ISP-GPM is able to preserve the background with high accuracy while reasonably recovering the area blocked by the figure to be reposed.

* http://www.northeastern.edu/ostadabbas/2018/07/23/inner-space-preserving-generative-pose-machine/

In-Bed Pose Estimation: Deep Learning with Shallow Dataset

Jul 07, 2018

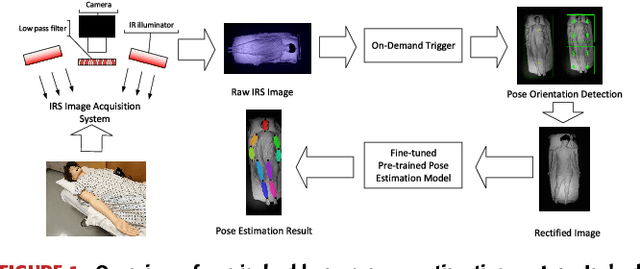

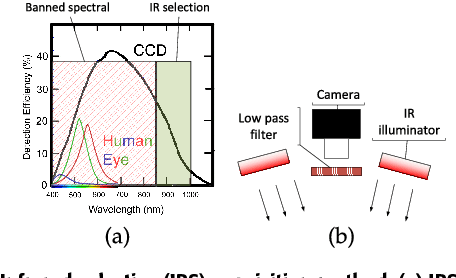

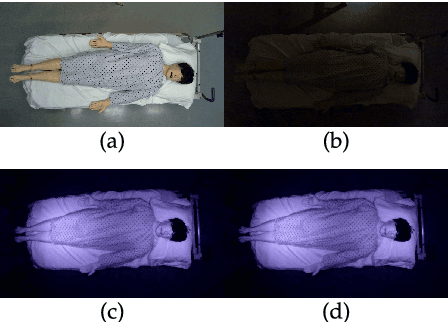

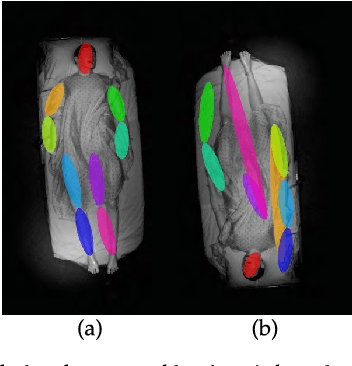

Abstract: Although human pose estimation for various computer vision (CV) applications has been studied extensively in the last few decades, in-bed pose estimation using camera-based vision methods has been ignored by the CV community because it is assumed to be identical to general-purpose pose estimation. However, in-bed pose estimation has its own specialized aspects and comes with specific challenges, including notable differences in lighting conditions throughout the day and a pose distribution that differs from the common human surveillance viewpoint. In this paper, we demonstrate that these challenges significantly lessen the effectiveness of existing general-purpose pose estimation models. To address the lighting variation challenge, an infrared selective (IRS) image acquisition technique is proposed to provide uniform-quality data under various lighting conditions. In addition, to deal with the unconventional pose perspective, a 2-end histogram of oriented gradient (HOG) rectification method is presented. In this work, we explored the idea of employing a pre-trained convolutional neural network (CNN) model trained on large public datasets of general human poses and fine-tuning the model using our own shallow in-bed IRS dataset. We developed an IRS imaging system and collected IRS image data from several realistic life-size mannequins in a simulated hospital room environment. A pre-trained CNN called the convolutional pose machine (CPM) was repurposed for in-bed pose estimation by fine-tuning its specific intermediate layers. Using the HOG rectification method, the pose estimation performance of CPM improved significantly, by 26.4% under the PCK0.1 criterion, compared to the model without such rectification.

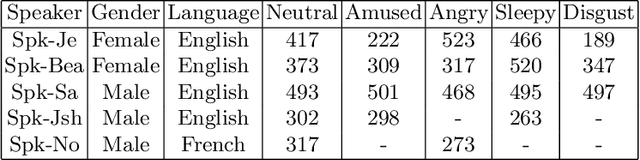

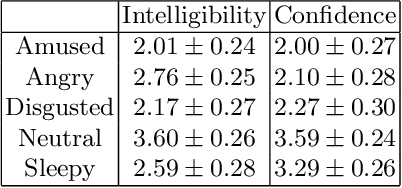

The Emotional Voices Database: Towards Controlling the Emotion Dimension in Voice Generation Systems

Jun 25, 2018

Abstract: In this paper, we present a database of emotional speech intended to be open-sourced and used for synthesis and generation purposes. It contains data for male and female actors in English and a male actor in French. The database covers 5 emotion classes, so it could be suitable for building synthesis and voice transformation systems with the potential to control the emotional dimension in a continuous way. We show the data's efficiency by building a simple MLP system that converts neutral speech to an angry speech style, and we evaluate it via a CMOS perception test. Even though the system is a very simple one, the test shows the efficiency of the data, which is promising for future work.

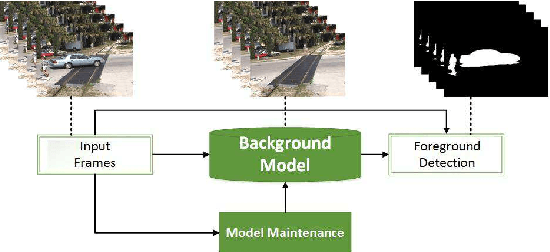

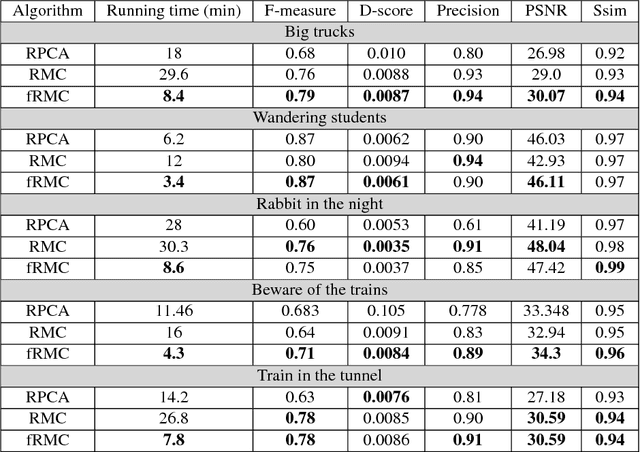

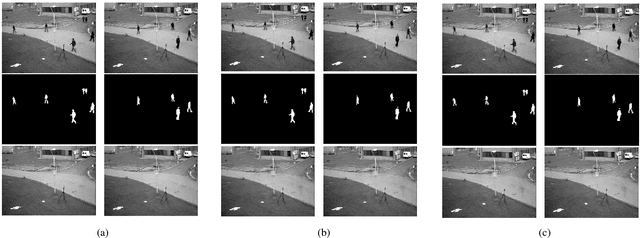

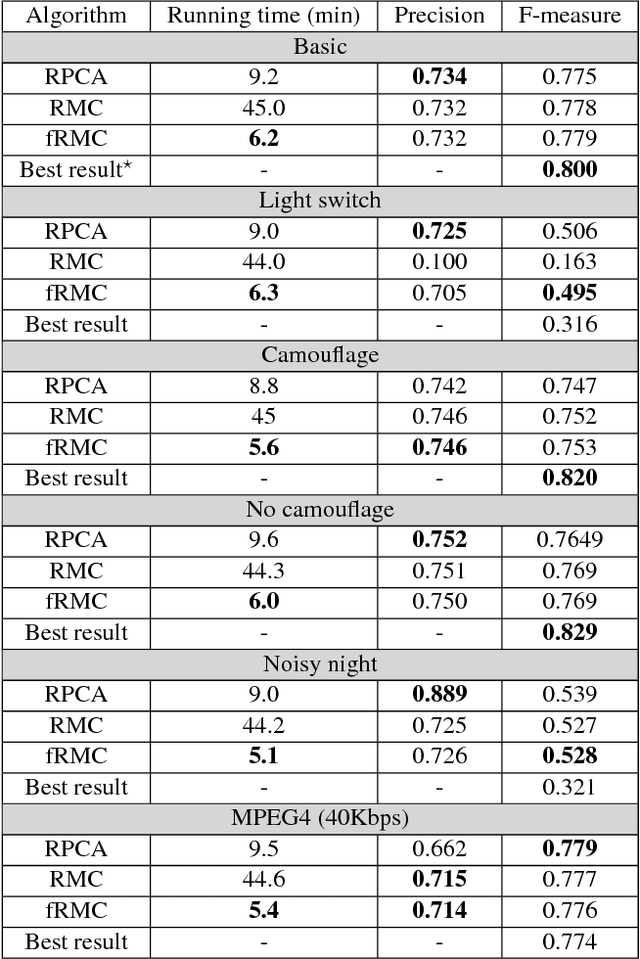

Background Subtraction via Fast Robust Matrix Completion

Nov 03, 2017

Abstract: Background subtraction is the primary task of the majority of video inspection systems. The most important part of background subtraction, common to different algorithms, is background modeling. In this regard, our paper addresses the problem of background modeling in a computationally efficient way, which is important for the current explosion of "big data" processing coming from high-resolution multi-channel videos. Our model is based on the assumption that the background in natural images lies on a low-dimensional subspace. We formulated and solved this problem in a low-rank matrix completion framework. In modeling the background, we used the in-face extended Frank-Wolfe algorithm to solve a defined convex optimization problem. We evaluated our fast robust matrix completion (fRMC) method on both the background models challenge (BMC) and Stuttgart artificial background subtraction (SABS) datasets. The results were compared with the robust principal component analysis (RPCA) and low-rank robust matrix completion (RMC) methods, both solved by the inexact augmented Lagrangian multiplier (IALM). The results showed computation at least twice as fast as with the IALM solver, with comparable accuracy, and even better accuracy in some challenges, in subtracting the backgrounds in order to detect moving objects in the scene.
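The low-dimensional subspace assumption behind this abstract can be illustrated with a small sketch. It uses a plain truncated SVD rather than the fRMC method or the in-face extended Frank-Wolfe solver described above, so it only shows why a low-rank model separates a static background from moving objects. The function name `low_rank_background` and the synthetic "video" are assumptions made for illustration.

```python
import numpy as np


def low_rank_background(frames, rank=1):
    """Split a video matrix into a low-rank background and a residual.

    frames: array of shape (num_pixels, num_frames); each column is one
            vectorized frame.
    """
    u, s, vt = np.linalg.svd(frames, full_matrices=False)
    # Keep only the leading singular components as the background model
    background = (u[:, :rank] * s[:rank]) @ vt[:rank, :]
    foreground = frames - background
    return background, foreground


# Synthetic video: a static 50-pixel background repeated over 20 frames
num_pixels, num_frames = 50, 20
static = np.linspace(0.2, 0.8, num_pixels)
frames = np.tile(static[:, None], (1, num_frames))
# A bright "object" that sits at pixel t in frame t
for t in range(num_frames):
    frames[t, t] += 5.0

background, foreground = low_rank_background(frames, rank=1)
# Per frame, the pixel with the largest residual marks the moving object
detected = np.abs(foreground).argmax(axis=0)
print(detected)
```

In this toy setting the static scene dominates the leading singular component, so the residual is largest where the object sits in each frame. Robust formulations such as RPCA and matrix completion target the same structure while being less sensitive to large outliers than a plain SVD.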

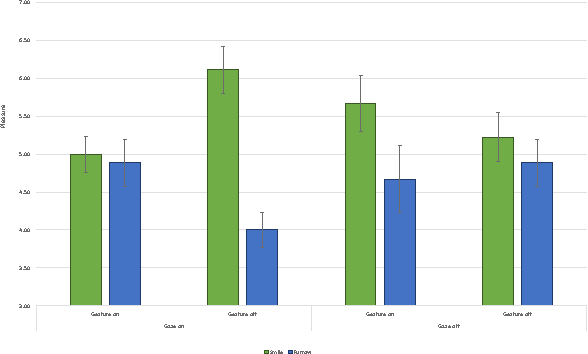

Using Virtual Humans to Understand Real Ones

Jun 13, 2016

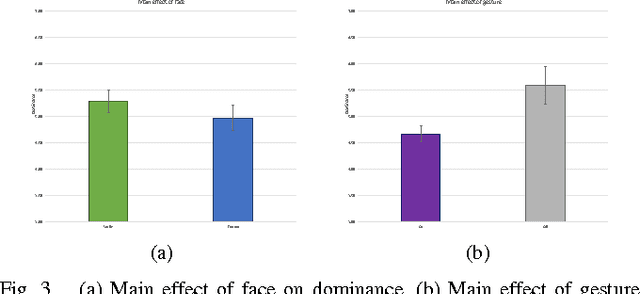

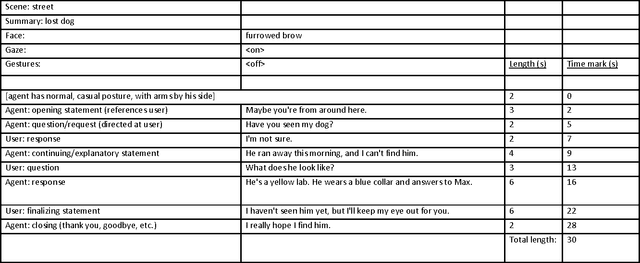

Abstract: Human interactions are characterized by explicit as well as implicit channels of communication. While the explicit channel transmits overt messages, the implicit ones transmit hidden messages about the communicator (e.g., his/her intentions and attitudes). There is a growing consensus that providing a computer with the ability to manipulate implicit affective cues should allow for a more meaningful and natural way of studying particular non-verbal signals of human-human communication through human-computer interactions. In this pilot study, we created a non-dynamic human-computer interaction while manipulating three specific non-verbal channels of communication: gaze pattern, facial expression, and gesture. Participants rated the virtual agent on affective dimensional scales (pleasure, arousal, and dominance) while their physiological signal (electrodermal activity, EDA) was captured during the interaction. Assessment of the behavioral data revealed a significant and complex three-way interaction between gaze, gesture, and facial configuration on the dimension of pleasure, as well as a main effect of gesture on the dimension of dominance. These results suggest a complex relationship between different non-verbal cues and the social context in which they are interpreted. Qualifying considerations as well as possible next steps are further discussed in light of these exploratory findings.

Decoding Emotional Experience through Physiological Signal Processing

Jun 01, 2016

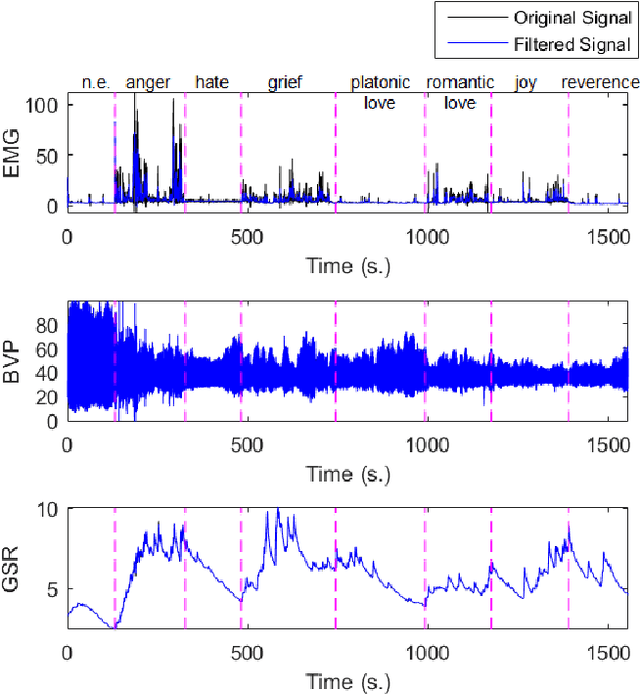

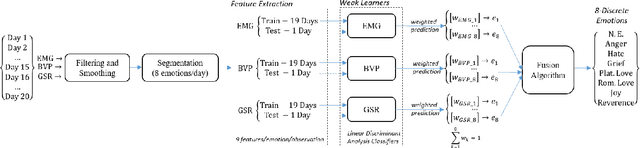

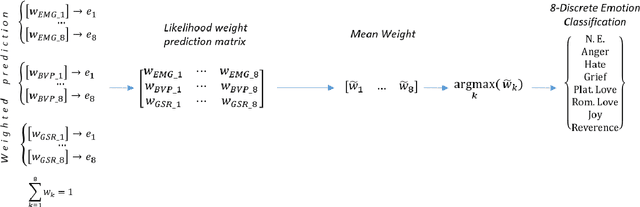

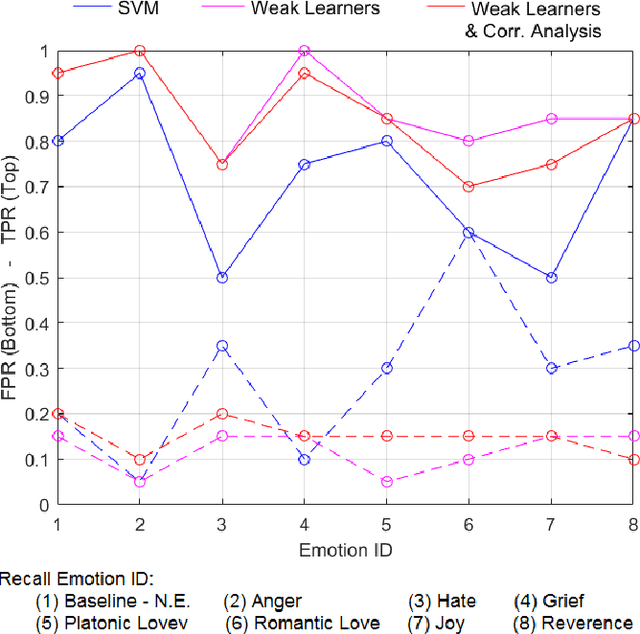

Abstract: There is an increasing consensus among researchers that making a computer emotionally intelligent, with the ability to decode human affective states, would allow a more meaningful and natural way of human-computer interaction (HCI). One unobtrusive and non-invasive way of recognizing human affective states entails exploring how physiological signals vary under different emotional experiences. In particular, this paper explores the correlation between autonomically mediated changes in multimodal body signals and discrete emotional states. In order to fully exploit the information in each modality, we have provided an innovative classification approach for three specific physiological signals: Electromyogram (EMG), Blood Volume Pressure (BVP), and Galvanic Skin Response (GSR). These signals are analyzed as inputs to an emotion recognition paradigm based on the fusion of a series of weak learners. Our proposed classification approach showed 88.1% recognition accuracy, outperforming the conventional Support Vector Machine (SVM) classifier with a 17% accuracy improvement. Furthermore, in order to avoid information redundancy and the resultant over-fitting, a feature reduction method based on correlation analysis is proposed to optimize the number of features required for training and validating each weak learner. Results showed that despite the reduction of the feature space dimensionality from 27 to 18 features, our methodology preserved a recognition accuracy of about 85.0%. This reduction in complexity brings us one step closer to embedding this human emotion encoder in wireless and wearable HCI platforms.
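The abstract does not describe how its correlation analysis selects features, so the following is only a generic sketch of one common form of correlation-based feature reduction: greedily keep a feature unless it is strongly correlated with one already kept. The function name `drop_correlated`, the threshold value, and the toy data are all assumptions.

```python
import numpy as np


def drop_correlated(features, threshold=0.9):
    """Return indices of features kept after removing redundant ones.

    features: array of shape (num_samples, num_features).
    A feature is dropped when its absolute Pearson correlation with any
    previously kept feature reaches the (assumed) threshold.
    """
    corr = np.abs(np.corrcoef(features, rowvar=False))
    kept = []
    for j in range(features.shape[1]):
        if all(corr[j, k] < threshold for k in kept):
            kept.append(j)
    return kept


# Toy data: column 1 is a linear copy of column 0, column 2 is unrelated
a = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
b = np.array([1.0, -1.0, 1.0, -1.0, 1.0, -1.0])
features = np.column_stack([a, 2 * a + 1, b])
kept = drop_correlated(features, threshold=0.9)
print(kept)
```

Here the second column carries no information beyond the first, so it is removed while the weakly correlated third column is kept. In practice the threshold would be tuned against validation accuracy.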
