Sagar Dasgupta

Quantum-Classical Hybrid Framework for Zero-Day Time-Push GNSS Spoofing Detection

Aug 25, 2025

Abstract:Global Navigation Satellite Systems (GNSS) are critical for Positioning, Navigation, and Timing (PNT) applications. However, GNSS are highly vulnerable to spoofing attacks, where adversaries transmit counterfeit signals to mislead receivers. Such attacks can lead to severe consequences, including misdirected navigation, compromised data integrity, and operational disruptions. Most existing spoofing detection methods depend on supervised learning techniques and struggle to detect novel, evolved, and unseen attacks. To overcome this limitation, we develop a zero-day spoofing detection method using a Hybrid Quantum-Classical Autoencoder (HQC-AE), trained solely on authentic GNSS signals without exposure to spoofed data. By leveraging features extracted during the tracking stage, our method enables proactive detection before PNT solutions are computed. We focus on spoofing detection in static GNSS receivers, which are particularly susceptible to time-push spoofing attacks, where attackers manipulate timing information to induce incorrect time computations at the receiver. We evaluate our model against different unseen time-push spoofing attack scenarios: simplistic, intermediate, and sophisticated. Our analysis demonstrates that the HQC-AE consistently outperforms its classical counterpart, traditional supervised learning-based models, and existing unsupervised learning-based methods in detecting zero-day, unseen GNSS time-push spoofing attacks, achieving an average detection accuracy of 97.71% with an average false negative rate of 0.62% (when an attack occurs but is not detected). For sophisticated spoofing attacks, the HQC-AE attains an accuracy of 98.23% with a false negative rate of 1.85%. These findings highlight the effectiveness of our method in proactively detecting zero-day GNSS time-push spoofing attacks across various stationary GNSS receiver platforms.
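The abstract above describes an autoencoder trained only on authentic signals. One common way to turn such a model into a detector is to threshold its reconstruction error, sketched below. This is an illustration under stated assumptions, not the paper's method: the abstract does not say how the decision threshold is chosen, so the mean-plus-`k`-standard-deviations rule and the names `reconstruction_error`, `fit_threshold`, and `is_spoofed` are all hypothetical. The autoencoder itself (quantum or classical) is left out; only its input and output vectors are used.

```python
import statistics


def reconstruction_error(features, reconstruction):
    """Mean squared error between a feature vector and its reconstruction."""
    return sum((x - y) ** 2 for x, y in zip(features, reconstruction)) / len(features)


def fit_threshold(authentic_errors, k=3.0):
    """Assumed rule: threshold = mean + k * std of errors on authentic data."""
    mean = statistics.fmean(authentic_errors)
    std = statistics.pstdev(authentic_errors)
    return mean + k * std


def is_spoofed(error, threshold):
    """Flag a sample whose reconstruction error exceeds the threshold."""
    return error > threshold
```

Because the threshold is fitted on authentic data alone, no spoofed samples are needed at training time, which matches the zero-day setting the abstract describes.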

Vision-Based Localization and LLM-based Navigation for Indoor Environments

Aug 11, 2025

Abstract:Indoor navigation remains a complex challenge due to the absence of reliable GPS signals and the architectural intricacies of large enclosed environments. This study presents an indoor localization and navigation approach that integrates vision-based localization with large language model (LLM)-based navigation. The localization system utilizes a ResNet-50 convolutional neural network fine-tuned through a two-stage process to identify the user's position using smartphone camera input. To complement localization, the navigation module employs an LLM, guided by a carefully crafted system prompt, to interpret preprocessed floor plan images and generate step-by-step directions. Experimental evaluation was conducted in a realistic office corridor with repetitive features and limited visibility to test localization robustness. The model achieved high confidence and an accuracy of 96% across all tested waypoints, even under constrained viewing conditions and short-duration queries. Navigation tests using ChatGPT on real building floor maps yielded an average instruction accuracy of 75%, with observed limitations in zero-shot reasoning and inference time. This research demonstrates the potential for scalable, infrastructure-free indoor navigation using off-the-shelf cameras and publicly available floor plans, particularly in resource-constrained settings like hospitals, airports, and educational institutions.

Grid2Guide: A* Enabled Small Language Model for Indoor Navigation

Aug 11, 2025

Abstract:Reliable indoor navigation remains a significant challenge in complex environments, particularly where external positioning signals and dedicated infrastructures are unavailable. This research presents Grid2Guide, a hybrid navigation framework that combines the A* search algorithm with a Small Language Model (SLM) to generate clear, human-readable route instructions. The framework first constructs a binary occupancy matrix from a given indoor map. Using this matrix, the A* algorithm computes the optimal path between origin and destination, producing concise textual navigation steps. These steps are then transformed into natural language instructions by the SLM, enhancing interpretability for end users. Experimental evaluations across various indoor scenarios demonstrate the method's effectiveness in producing accurate and timely navigation guidance. The results validate the proposed approach as a lightweight, infrastructure-free solution for real-time indoor navigation support.
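The path-planning stage in the Grid2Guide abstract (A* over a binary occupancy matrix, followed by concise textual steps) can be sketched as follows. This is a minimal illustration, not the authors' implementation. The 4-connected movement, the unit step cost, the Manhattan heuristic, the compass wording, and the function names `astar` and `path_to_steps` are all assumptions. The SLM rewriting stage is omitted.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a binary occupancy grid (1 = blocked)."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None  # goal unreachable


def path_to_steps(path):
    """Collapse a cell path into (direction, cell_count) steps."""
    names = {(1, 0): "south", (-1, 0): "north", (0, 1): "east", (0, -1): "west"}
    steps = []
    for (r0, c0), (r1, c1) in zip(path, path[1:]):
        direction = names[(r1 - r0, c1 - c0)]
        if steps and steps[-1][0] == direction:
            steps[-1] = (direction, steps[-1][1] + 1)
        else:
            steps.append((direction, 1))
    return steps
```

The `(direction, cell_count)` pairs stand in for the "concise textual navigation steps" that a language model would then rephrase for the user.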

Experimental Validation of Sensor Fusion-based GNSS Spoofing Attack Detection Framework for Autonomous Vehicles

Jan 02, 2024

Abstract:In this paper, we validate the performance of a sensor fusion-based Global Navigation Satellite System (GNSS) spoofing attack detection framework for Autonomous Vehicles (AVs). To collect data, a vehicle equipped with a GNSS receiver and an Inertial Measurement Unit (IMU) is used. The detection framework incorporates two strategies. The first strategy involves comparing the predicted location shift, which is the distance traveled between two consecutive timestamps, with the inertial sensor-based location shift. For this purpose, data from low-cost in-vehicle inertial sensors such as the accelerometer and gyroscope sensor are fused and fed into a long short-term memory (LSTM) neural network. The second strategy employs a Random-Forest supervised machine learning model to detect and classify turns, distinguishing between left and right turns using the output from the steering angle sensor. In experiments, two types of spoofing attack models, turn-by-turn and wrong turn, are simulated. These spoofing attacks are modeled as SQL injection attacks, where, upon successful implementation, the navigation system perceives injected spoofed location information as legitimate while being unable to detect legitimate GNSS signals. Importantly, the IMU data remains uncompromised throughout the spoofing attack. To test the effectiveness of the detection framework, experiments are conducted in Tuscaloosa, AL, mimicking urban road structures. The results demonstrate the framework's ability to detect various sophisticated GNSS spoofing attacks, even including slow position drifting attacks. Overall, the experimental results showcase the robustness and efficacy of the sensor fusion-based spoofing attack detection approach in safeguarding AVs against GNSS spoofing threats.

Reinforcement Learning based Cyberattack Model for Adaptive Traffic Signal Controller in Connected Transportation Systems

Oct 31, 2022

Abstract:In a connected transportation system, adaptive traffic signal controllers (ATSC) utilize real-time vehicle trajectory data received from vehicles through wireless connectivity (i.e., connected vehicles) to regulate green time. However, a wirelessly connected ATSC has a larger cyber-attack surface and is more vulnerable to various cyber-attack modes, which can be leveraged to induce significant congestion in a roadway network. An attacker may receive financial benefits for creating such congestion on a specific roadway. One such mode is a 'sybil' attack in which an attacker creates fake vehicles in the network by generating fake Basic Safety Messages (BSMs) imitating actual connected vehicles following roadway traffic rules. The ultimate goal of an attacker will be to block a route(s) by generating fake or 'sybil' vehicles at a rate such that the signal timing and phasing changes occur without flagging any abrupt change in the number of vehicles. Because of the highly non-linear and unpredictable nature of vehicle arrival rates and the ATSC algorithm, it is difficult to find an optimal rate of sybil vehicles to inject from the different approaches of an intersection. Thus, it is necessary to develop an intelligent cyber-attack model to prove the existence of such attacks. In this study, a reinforcement learning based cyber-attack model is developed for a waiting time-based ATSC. Specifically, an RL agent is trained to learn an optimal rate of sybil vehicle injection to create congestion for an approach(s). Our analyses revealed that the RL agent can learn an optimal policy for creating an intelligent attack.

Improving the Environmental Perception of Autonomous Vehicles using Deep Learning-based Audio Classification

Sep 09, 2022

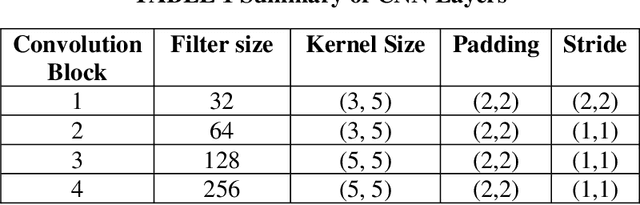

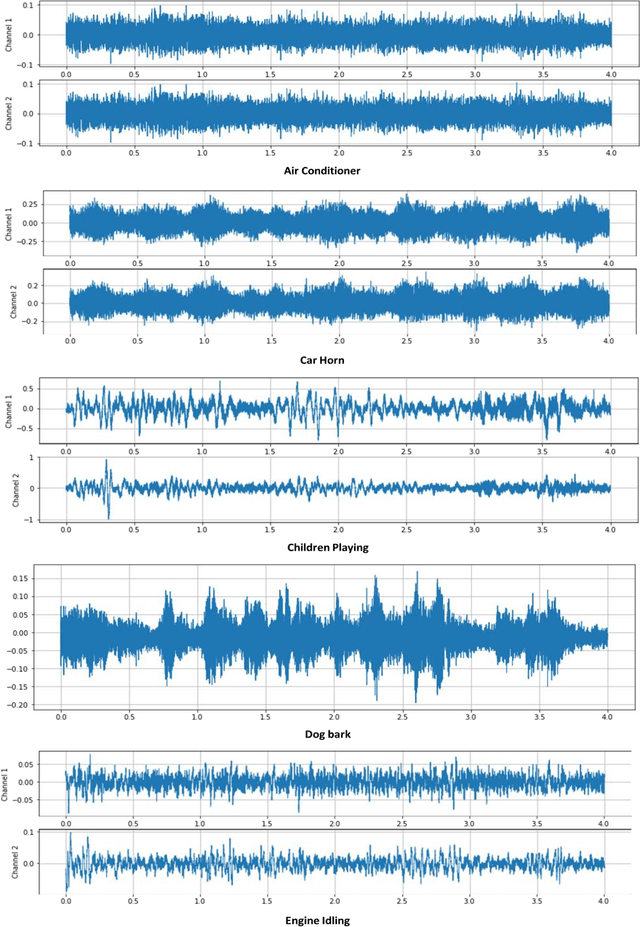

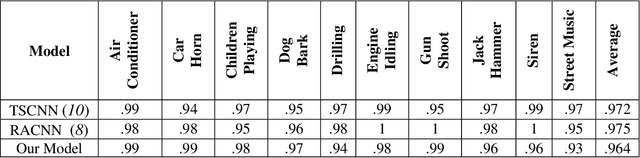

Abstract:A sense of hearing is crucial for autonomous vehicles (AVs) to better perceive their surrounding environment. Although the visual sensors of an AV, such as camera, lidar, and radar, help it see its surrounding environment, an AV cannot see beyond those sensors' line of sight. On the other hand, an AV's sense of hearing cannot be obstructed by line of sight. For example, an AV can identify an emergency vehicle's siren through audio classification even though the emergency vehicle is not within the line of sight of the AV. Thus, auditory perception is complementary to the camera, lidar, and radar-based perception systems. This paper presents a deep learning-based robust audio classification framework aiming to achieve improved environmental perception for AVs. The presented framework leverages a deep Convolution Neural Network (CNN) to classify different audio classes. UrbanSound8k, an urban environment dataset, is used to train and test the developed framework. Seven audio classes, i.e., air conditioner, car horn, children playing, dog bark, engine idling, gunshot, and siren, are identified from the UrbanSound8k dataset because of their relevance to AVs. Our framework can classify different audio classes with 97.82% accuracy. Moreover, the audio classification accuracies with all ten classes are presented, which proves that our framework performed better in the case of AV-related sounds compared to the existing audio classification frameworks.

Audio Analytics-based Human Trafficking Detection Framework for Autonomous Vehicles

Sep 09, 2022

Abstract:Human trafficking is a universal problem, persistent despite numerous efforts to combat it globally. Individuals of any age, race, ethnicity, sex, gender identity, sexual orientation, nationality, immigration status, cultural background, religion, socioeconomic class, and education can be a victim of human trafficking. With the advancements in technology and the introduction of autonomous vehicles (AVs), human traffickers will adopt new ways to transport victims, which could accelerate the growth of organized human trafficking networks and make the detection of trafficking in persons more challenging for law enforcement agencies. The objective of this study is to develop an innovative audio analytics-based human trafficking detection framework for autonomous vehicles. The primary contributions of this study are to: (i) define four non-trivial, feasible, and realistic human trafficking scenarios for AVs; (ii) create a new and comprehensive audio dataset related to human trafficking with five classes, i.e., crying, screaming, car door banging, car noise, and conversation; and (iii) develop a deep 1-D Convolution Neural Network (CNN) architecture for audio data classification related to human trafficking. We have also conducted a case study using the new audio dataset and evaluated the audio classification performance of the deep 1-D CNN. Our analyses reveal that the deep 1-D CNN can distinguish sound coming from a human trafficking victim from a non-human trafficking sound with an accuracy of 95%, which proves the efficacy of our framework.

A Sensor Fusion-based GNSS Spoofing Attack Detection Framework for Autonomous Vehicles

Aug 19, 2021

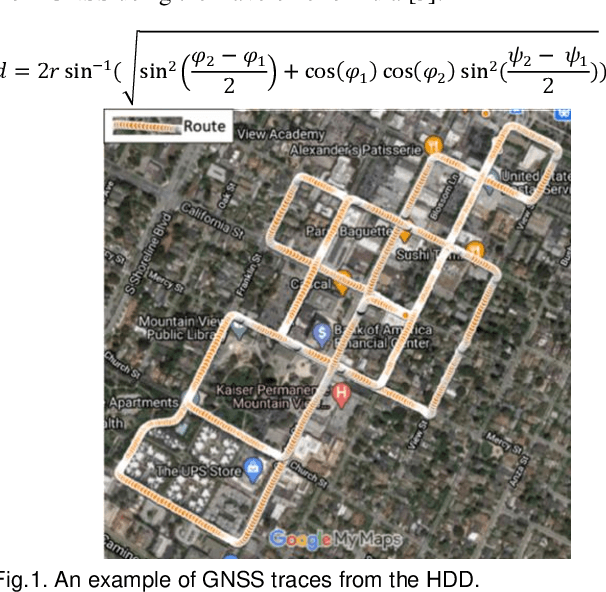

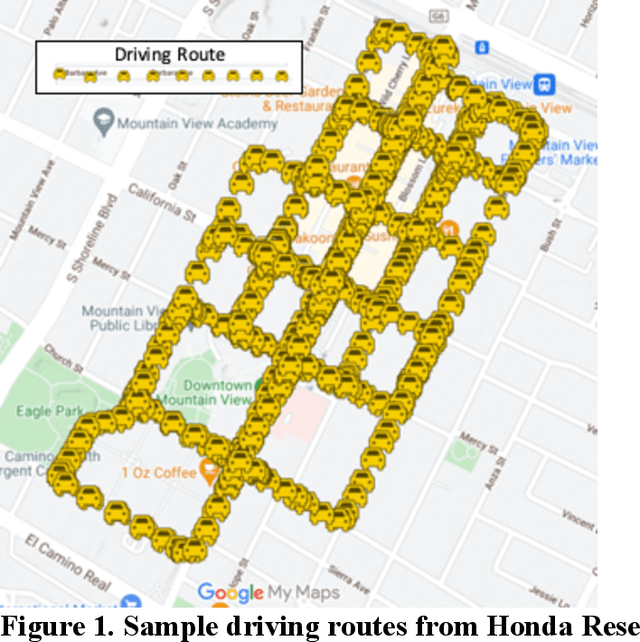

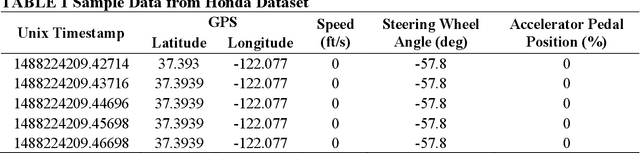

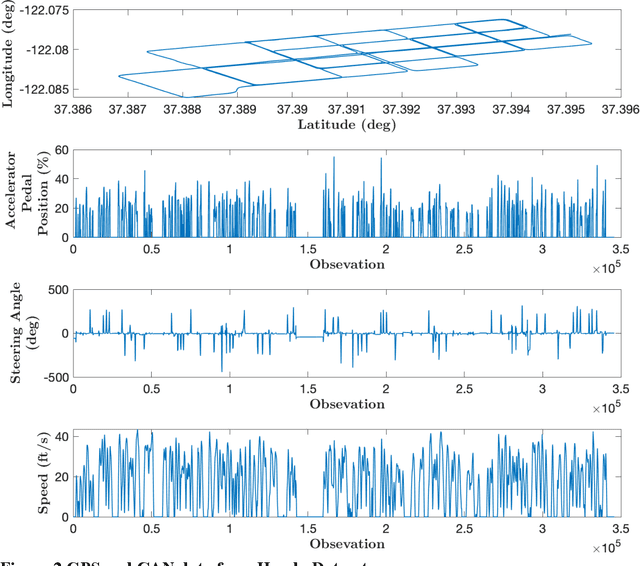

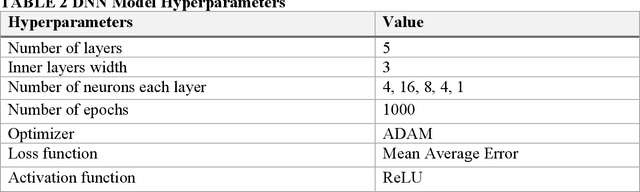

Abstract:This paper presents a sensor fusion based Global Navigation Satellite System (GNSS) spoofing attack detection framework for autonomous vehicles (AV) that consists of two concurrent strategies: (i) detection of vehicle state using predicted location shift -- i.e., distance traveled between two consecutive timestamps -- and monitoring of vehicle motion state -- i.e., standstill/in motion; and (ii) detection and classification of turns (i.e., left or right). Data from multiple low-cost in-vehicle sensors (i.e., accelerometer, steering angle sensor, speed sensor, and GNSS) are fused and fed into a recurrent neural network model, which is a long short-term memory (LSTM) network for predicting the location shift, i.e., the distance that an AV travels between two consecutive timestamps. This location shift is then compared with the GNSS-based location shift to detect an attack. We have then combined k-Nearest Neighbors (k-NN) and Dynamic Time Warping (DTW) algorithms to detect and classify left and right turns using data from the steering angle sensor. To prove the efficacy of the sensor fusion-based attack detection framework, attack datasets are created for four unique and sophisticated spoofing attacks (turn-by-turn, overshoot, wrong turn, and stop) using the publicly available real-world Honda Research Institute Driving Dataset (HDD). Our analysis reveals that the sensor fusion-based detection framework successfully detects all four types of spoofing attacks within the required computational latency threshold.
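The location-shift comparison described in this abstract can be illustrated with a short sketch. It assumes the predicted shifts are already available as plain numbers (the LSTM that produces them is not shown), computes the GNSS-based shift as the haversine distance between consecutive fixes, and flags an interval when the two disagree by more than a threshold. The haversine choice, the spherical Earth radius, the `threshold_m` parameter, and the names `haversine_m` and `flag_spoofing` are assumptions for illustration; the abstract does not specify them.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical approximation


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def flag_spoofing(gnss_fixes, predicted_shifts_m, threshold_m):
    """Compare GNSS-based and predicted shifts for each consecutive pair of fixes.

    gnss_fixes: list of (lat, lon) tuples, one per timestamp.
    predicted_shifts_m: one predicted distance per consecutive pair
        (hypothetical stand-in for the LSTM output).
    Returns one boolean per interval; True means the shifts disagree.
    """
    flags = []
    for (a, b), predicted in zip(zip(gnss_fixes, gnss_fixes[1:]), predicted_shifts_m):
        gnss_shift = haversine_m(*a, *b)
        flags.append(abs(gnss_shift - predicted) > threshold_m)
    return flags
```

For example, if the sensors predict roughly 11 m of travel per interval but two consecutive GNSS fixes are about a kilometer apart, that interval is flagged.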

A Reinforcement Learning Approach for GNSS Spoofing Attack Detection of Autonomous Vehicles

Aug 19, 2021

Abstract:A resilient and robust positioning, navigation, and timing (PNT) system is a necessity for the navigation of autonomous vehicles (AVs). Global Navigation Satellite System (GNSS) provides satellite-based PNT services. However, a spoofer can tamper with an authentic GNSS signal and transmit wrong position information to an AV. Therefore, a GNSS must have the capability of real-time detection and feedback-correction of spoofing attacks related to PNT receivers, so that the end user (an autonomous vehicle in this case) can navigate safely if it is compromised. This paper aims to develop a deep reinforcement learning (RL)-based turn-by-turn spoofing attack detection using low-cost in-vehicle sensor data. We have utilized the Honda Driving Dataset to create attack and non-attack datasets, develop a deep RL model, and evaluate the performance of the RL-based attack detection model. We find that the accuracy of the RL model ranges from 99.99% to 100%, and the recall value is 100%. However, the precision ranges from 93.44% to 100%, and the F1 score ranges from 96.61% to 100%. Overall, the analyses reveal that the RL model is effective in turn-by-turn spoofing attack detection.

An Innovative Attack Modelling and Attack Detection Approach for a Waiting Time-based Adaptive Traffic Signal Controller

Aug 19, 2021

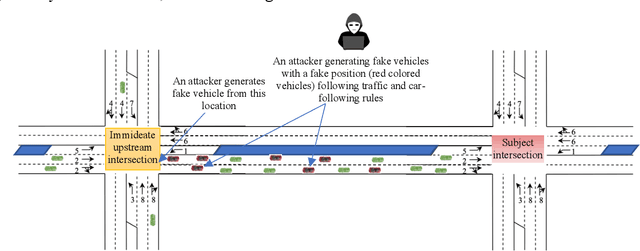

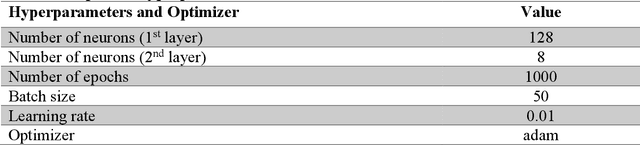

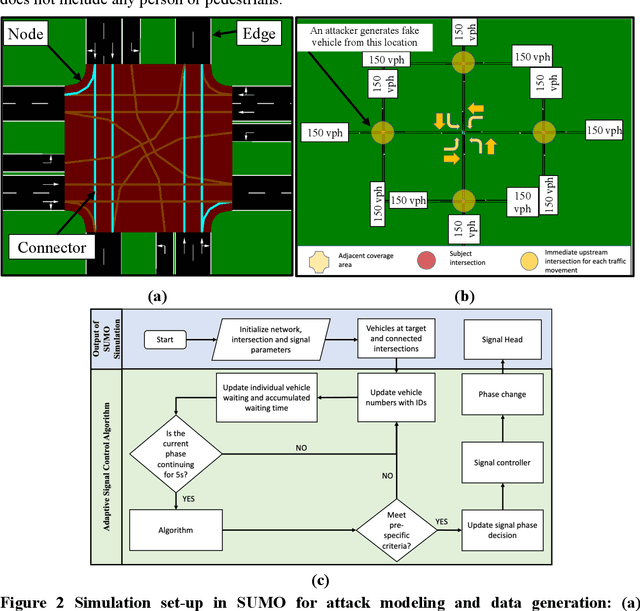

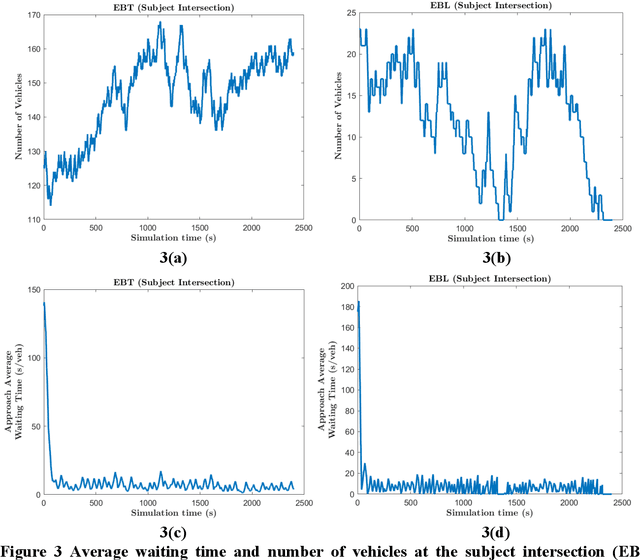

Abstract:An adaptive traffic signal controller (ATSC) combined with a connected vehicle (CV) concept uses real-time vehicle trajectory data to regulate green time and has the ability to reduce intersection waiting time significantly and thereby improve travel time in a signalized corridor. However, the CV-based ATSC increases the size of the surface vulnerable to potential cyber-attack, allowing an attacker to generate disastrous traffic congestion in a roadway network. An attacker can congest a route by generating fake vehicles at a slow rate, while maintaining traffic and car-following rules, so that the signal timing and phase change without any abrupt change in the number of vehicles. Because of the adaptive nature of ATSC, it is a challenge to model this kind of attack and also to develop a strategy for detection. This paper introduces an innovative "slow poisoning" cyberattack for a waiting time based ATSC algorithm and a corresponding detection strategy. Thus, the objectives of this paper are to: (i) develop a "slow poisoning" attack generation strategy for an ATSC, and (ii) develop a prediction-based "slow poisoning" attack detection strategy using a recurrent neural network -- i.e., long short-term memory model. We have generated a "slow poisoning" attack modeling strategy using a microscopic traffic simulator -- Simulation of Urban Mobility (SUMO) -- and used generated data from the simulation to develop both the attack model and detection model. Our analyses revealed that the attack strategy is effective in creating congestion on an approach and that the detection strategy is able to flag the attack.
