Rolf Drechsler

Late Breaking Results: Conversion of Neural Networks into Logic Flows for Edge Computing

Jan 29, 2026

Abstract: Neural networks have been successfully applied in various resource-constrained edge devices, where usually central processing units (CPUs) instead of graphics processing units exist due to limited power availability. State-of-the-art research still focuses on efficiently executing enormous numbers of multiply-accumulate (MAC) operations. However, CPUs themselves are not good at executing such mathematical operations on a large scale, since they are more suited to execute control flow logic, i.e., computer algorithms. To enhance the computation efficiency of neural networks on CPUs, in this paper, we propose to convert them into logic flows for execution. Specifically, neural networks are first converted into equivalent decision trees, from which decision paths with constant leaves are then selected and compressed into logic flows. Such logic flows consist of if and else structures and a reduced number of MAC operations. Experimental results demonstrate that the latency can be reduced by up to 14.9 % on a simulated RISC-V CPU without any accuracy degradation. The code is open source at https://github.com/TUDa-HWAI/NN2Logic
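To make the idea of replacing MAC operations with control flow more concrete, here is a minimal Python sketch. It is not taken from the NN2Logic repository and does not reproduce the paper's conversion procedure; the tiny network, its weights, and the function names `forward_mac` and `forward_logic` are all hypothetical. It only illustrates the general observation that a ReLU network is piecewise affine, so branching on the hidden-unit activation pattern yields an equivalent if/else structure in which some paths end in a constant leaf and need no output-layer MACs.

```python
# Hypothetical toy network: 2 inputs -> 2 ReLU hidden units -> 1 output.
# All weights are made up for illustration only.
W1 = [[1.0, -1.0], [-2.0, 1.0]]  # hidden-layer weights
b1 = [0.0, 1.0]                  # hidden-layer biases
w2 = [1.0, -1.0]                 # output-layer weights
b2 = -0.5                        # output-layer bias


def forward_mac(x):
    """Reference forward pass: every layer is computed with MACs."""
    h = [
        max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(W1, b1)
    ]
    return sum(w * hi for w, hi in zip(w2, h)) + b2


def forward_logic(x):
    """Same function written as branches on the ReLU activation pattern."""
    z0 = W1[0][0] * x[0] + W1[0][1] * x[1] + b1[0]
    z1 = W1[1][0] * x[0] + W1[1][1] * x[1] + b1[1]
    if z0 <= 0:
        if z1 <= 0:
            # Constant leaf: both hidden units are off, so the output
            # is just the bias and no output-layer MAC is executed.
            return b2
        return w2[1] * z1 + b2
    if z1 <= 0:
        return w2[0] * z0 + b2
    return w2[0] * z0 + w2[1] * z1 + b2


if __name__ == "__main__":
    for x in ([2.0, 2.0], [1.0, 0.0], [-1.0, 1.0]):
        print(x, forward_mac(x), forward_logic(x))
```

In this sketch the hidden-layer pre-activations are still computed with MACs; only the output layer is skipped or shortened depending on the branch taken. How the paper selects and compresses paths with constant leaves is described in the paper itself and in the linked repository.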

LLM-based Behaviour Driven Development for Hardware Design

Dec 23, 2025

Abstract: Test and verification are essential activities in hardware and system design, but their complexity grows significantly with increasing system sizes. While Behavior Driven Development (BDD) has proven effective in software engineering, it is not yet well established in hardware design, and its practical use remains limited. One contributing factor is the manual effort required to derive precise behavioral scenarios from textual specifications. Recent advances in Large Language Models (LLMs) offer new opportunities to automate this step. In this paper, we investigate the use of LLM-based techniques to support BDD in the context of hardware design.

Revolution or Hype? Seeking the Limits of Large Models in Hardware Design

Sep 05, 2025

Abstract: Recent breakthroughs in Large Language Models (LLMs) and Large Circuit Models (LCMs) have sparked excitement across the electronic design automation (EDA) community, promising a revolution in circuit design and optimization. Yet, this excitement is met with significant skepticism: Are these AI models a genuine revolution in circuit design, or a temporary wave of inflated expectations? This paper serves as a foundational text for the corresponding ICCAD 2025 panel, bringing together perspectives from leading experts in academia and industry. It critically examines the practical capabilities, fundamental limitations, and future prospects of large AI models in hardware design. The paper synthesizes the core arguments surrounding reliability, scalability, and interpretability, framing the debate on whether these models can meaningfully outperform or complement traditional EDA methods. The result is an authoritative overview offering fresh insights into one of today's most contentious and impactful technology trends.

ALF -- A Fitness-Based Artificial Life Form for Evolving Large-Scale Neural Networks

Apr 16, 2021

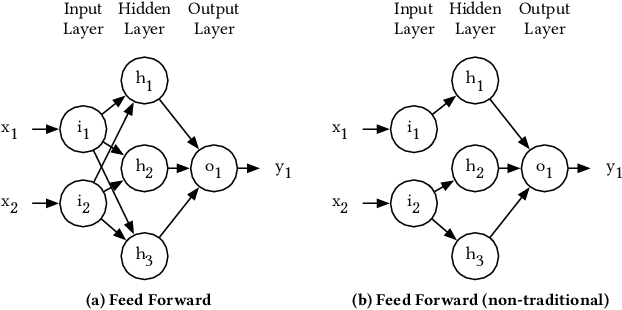

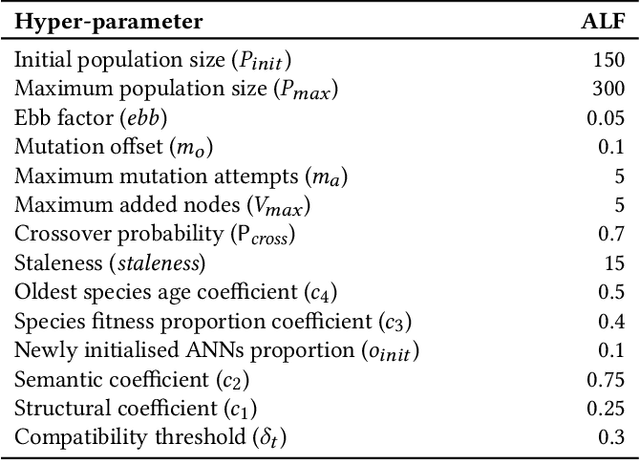

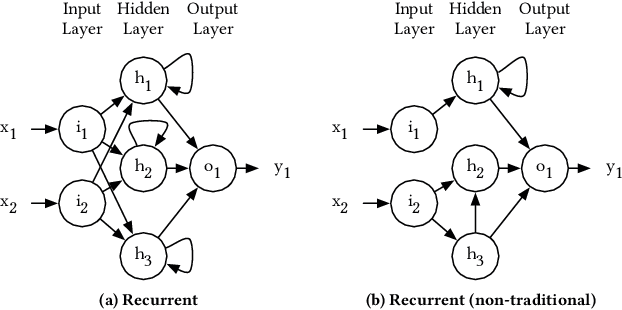

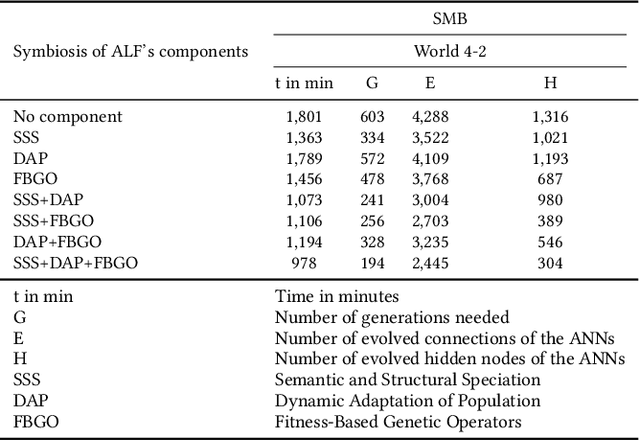

Abstract: Machine Learning (ML) is becoming increasingly important in daily life. In this context, Artificial Neural Networks (ANNs) are a popular approach within ML methods to realize an artificial intelligence. Usually, the topology of ANNs is predetermined. However, there are problems where it is difficult to find a suitable topology. Therefore, Topology and Weight Evolving Artificial Neural Network (TWEANN) algorithms have been developed that can find ANN topologies and weights using genetic algorithms. A well-known downside for large-scale problems is that TWEANN algorithms often evolve inefficient ANNs and require long runtimes. To address this issue, we propose a new TWEANN algorithm called Artificial Life Form (ALF) with the following technical advancements: (1) speciation via structural and semantic similarity to form better candidate solutions, (2) dynamic adaptation of the observed candidate solutions for better convergence properties, and (3) integration of solution quality into genetic reproduction to increase the probability of optimization success. Experiments on large-scale ML problems confirm that these approaches allow the fast solving of these problems and lead to efficient evolved ANNs.

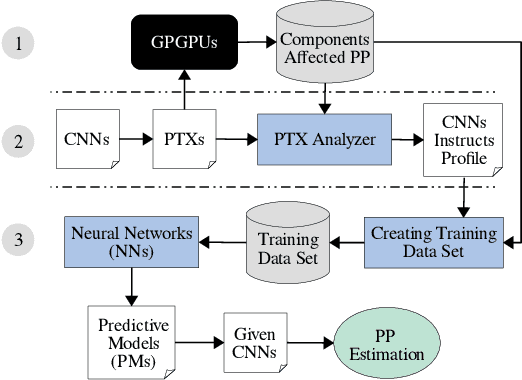

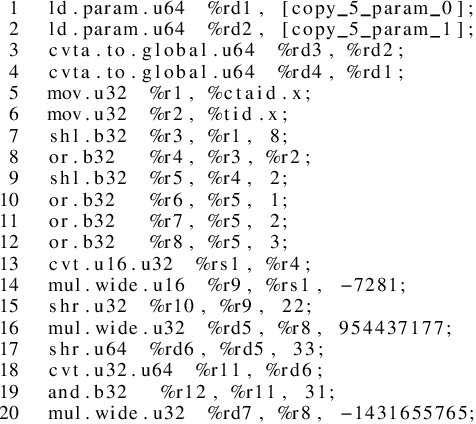

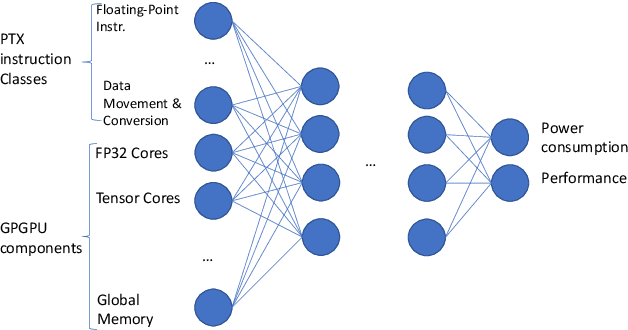

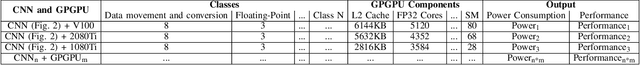

Pick the Right Edge Device: Towards Power and Performance Estimation of CUDA-based CNNs on GPGPUs

Feb 02, 2021

Abstract: The emergence of Machine Learning (ML) as a powerful technique has been helping nearly all fields of business to increase operational efficiency or to develop new value propositions. Besides the challenges of deploying and maintaining ML models, picking the right edge device (e.g., GPGPUs) to run these models (e.g., CNN with the massive computational process) is one of the most pressing challenges faced by organizations today. As the cost of renting (on Cloud) or purchasing an edge device is directly connected to the cost of final products or services, choosing the most efficient device is essential. However, this decision making requires deep knowledge about performance and power consumption of the ML models running on edge devices that must be identified at the early stage of ML workflow. In this paper, we present a novel ML-based approach that provides ML engineers with the early estimation of both power consumption and performance of CUDA-based CNNs on GPGPUs. The proposed approach empowers ML engineers to pick the most efficient GPGPU for a given CNN model at the early stage of development.
