Riccardo M. G. Ferrari

Personalized and Resilient Distributed Learning Through Opinion Dynamics

May 20, 2025

Abstract: In this paper, we address two practical challenges of distributed learning in multi-agent network systems, namely personalization and resilience. Personalization is the need of heterogeneous agents to learn local models tailored to their own data and tasks, while still generalizing well; on the other hand, the learning process must be resilient to cyberattacks or anomalous training data to avoid disruption. Motivated by a conceptual affinity between these two requirements, we devise a distributed learning algorithm that combines distributed gradient descent and the Friedkin-Johnsen model of opinion dynamics to fulfill both of them. We quantify its convergence speed and the neighborhood that contains the final learned models, which can be easily controlled by tuning the algorithm parameters to enforce a more personalized/resilient behavior. We numerically showcase the effectiveness of our algorithm on synthetic and real-world distributed learning tasks, where it achieves high global accuracy both for personalized models and with malicious agents compared to standard strategies.

Beta Residuals: Improving Fault-Tolerant Control for Sensory Faults via Bayesian Inference and Precision Learning

Apr 17, 2022

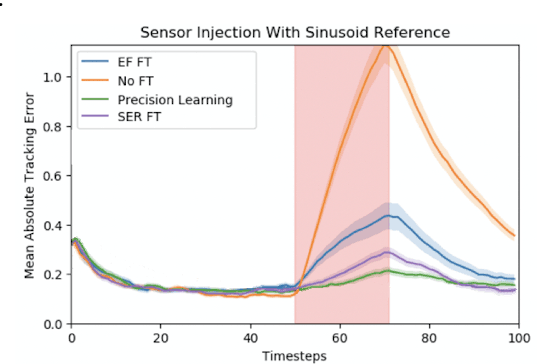

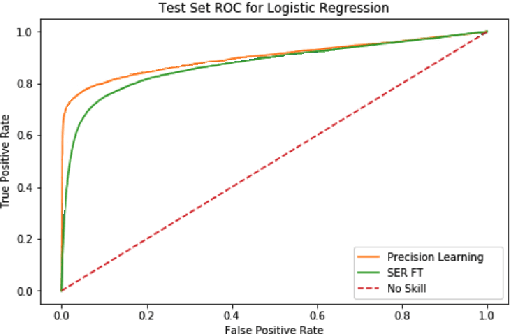

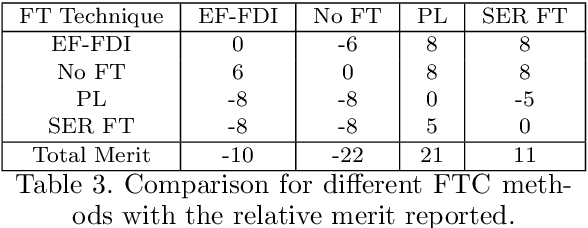

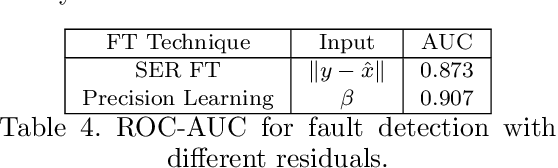

Abstract: Model-based fault-tolerant control (FTC) often consists of two distinct steps: fault detection & isolation (FDI), and fault accommodation. In this work we investigate posing fault-tolerant control as a single Bayesian inference problem. Previous work showed that precision learning allows for stochastic FTC without an explicit fault detection step. While this leads to implicit fault recovery, information on sensor faults is not provided, which may be essential for triggering other impact-mitigation actions. In this paper, we introduce a precision-learning-based Bayesian FTC approach and a novel beta residual for fault detection. Simulation results are presented, supporting the use of the beta residual over competing approaches.
