Radhika Mamidi

Confabulations from ACL Publications (CAP): A Dataset for Scientific Hallucination Detection

Oct 25, 2025

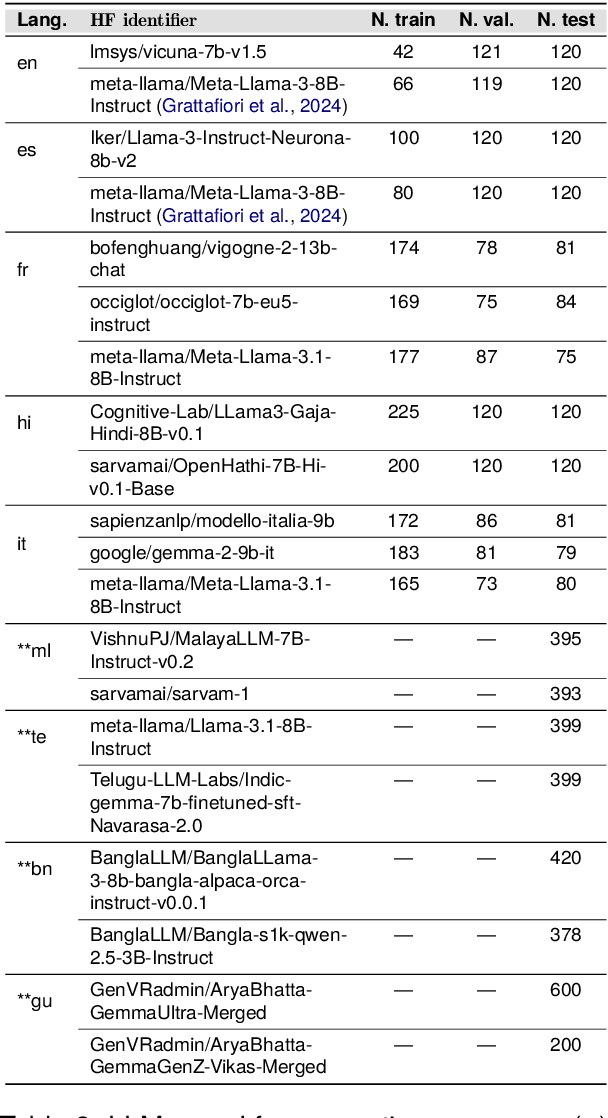

Abstract: We introduce the CAP (Confabulations from ACL Publications) dataset, a multilingual resource for studying hallucinations in large language models (LLMs) within scientific text generation. CAP focuses on the scientific domain, where hallucinations can distort factual knowledge, as they frequently do. In this domain, however, the presence of specialized terminology, statistical reasoning, and context-dependent interpretations further exacerbates these distortions, particularly given LLMs' lack of true comprehension, limited contextual understanding, and bias toward surface-level generalization. CAP operates in a cross-lingual setting covering five high-resource languages (English, French, Hindi, Italian, and Spanish) and four low-resource languages (Bengali, Gujarati, Malayalam, and Telugu). The dataset comprises 900 curated scientific questions and over 7000 LLM-generated answers from 16 publicly available models, provided as question-answer pairs along with token sequences and corresponding logits. Each instance is annotated with a binary label indicating the presence of a scientific hallucination, denoted as a factuality error, and a fluency label, capturing issues in the linguistic quality or naturalness of the text. CAP is publicly released to facilitate advanced research on hallucination detection, multilingual evaluation of LLMs, and the development of more reliable scientific NLP systems.

Analyzing Biases in Political Dialogue: Tagging U.S. Presidential Debates with an Extended DAMSL Framework

May 27, 2025

Abstract: We present a critical discourse analysis of the 2024 U.S. presidential debates, examining Donald Trump's rhetorical strategies in his interactions with Joe Biden and Kamala Harris. We introduce a novel annotation framework, BEADS (Bias Enriched Annotation for Dialogue Structure), which systematically extends the DAMSL framework to capture bias-driven and adversarial discourse features in political communication. BEADS includes a domain- and language-agnostic set of tags that model ideological framing, emotional appeals, and confrontational tactics. Our methodology compares detailed human annotation with zero-shot, ChatGPT-assisted tagging on verified transcripts from the Trump and Biden (19,219 words) and Trump and Harris (18,123 words) debates. Our analysis shows that Trump consistently dominated in key categories: Challenge and Adversarial Exchanges, Selective Emphasis, Appeal to Fear, Political Bias, and Perceived Dismissiveness. These findings underscore his use of emotionally charged and adversarial rhetoric to control the narrative and influence audience perception. In this work, we establish BEADS as a scalable and reproducible framework for critical discourse analysis across languages, domains, and political contexts.

Bias in Political Dialogue: Tagging U.S. Presidential Debates with an Extended DAMSL Framework

May 26, 2025

Abstract: We present a critical discourse analysis of the 2024 U.S. presidential debates, examining Donald Trump's rhetorical strategies in his interactions with Joe Biden and Kamala Harris. We introduce a novel annotation framework, BEADS (Bias Enriched Annotation for Dialogue Structure), which systematically extends the DAMSL framework to capture bias-driven and adversarial discourse features in political communication. BEADS includes a domain- and language-agnostic set of tags that model ideological framing, emotional appeals, and confrontational tactics. Our methodology compares detailed human annotation with zero-shot, ChatGPT-assisted tagging on verified transcripts from the Trump and Biden (19,219 words) and Trump and Harris (18,123 words) debates. Our analysis shows that Trump consistently dominated in key categories: Challenge and Adversarial Exchanges, Selective Emphasis, Appeal to Fear, Political Bias, and Perceived Dismissiveness. These findings underscore his use of emotionally charged and adversarial rhetoric to control the narrative and influence audience perception. In this work, we establish BEADS as a scalable and reproducible framework for critical discourse analysis across languages, domains, and political contexts.

IndicSentEval: How Effectively do Multilingual Transformer Models encode Linguistic Properties for Indic Languages?

Oct 03, 2024

Abstract: Transformer-based models have revolutionized the field of natural language processing. To understand why they perform so well and to assess their reliability, several studies have focused on questions such as: Which linguistic properties are encoded by these models, and to what extent? How robust are these models in encoding linguistic properties when faced with perturbations in the input text? However, these studies have mainly focused on BERT and the English language. In this paper, we investigate similar questions regarding encoding capability and robustness for 8 linguistic properties across 13 different perturbations in 6 Indic languages, using 9 multilingual Transformer models (7 universal and 2 Indic-specific). To conduct this study, we introduce a novel multilingual benchmark dataset, IndicSentEval, containing approximately 47K sentences. Surprisingly, our probing analysis of surface, syntactic, and semantic properties reveals that while almost all multilingual models demonstrate consistent encoding performance for English, they show mixed results for Indic languages. As expected, Indic-specific multilingual models capture linguistic properties in Indic languages better than universal models. Intriguingly, universal models broadly exhibit better robustness compared to Indic-specific models, particularly under perturbations such as dropping both nouns and verbs, dropping only verbs, or keeping only nouns. Overall, this study provides valuable insights into probing and perturbation-specific strengths and weaknesses of popular multilingual Transformer-based models for different Indic languages. We make our code and dataset publicly available (https://tinyurl.com/IndicSentEval).

Mast Kalandar at SemEval-2024 Task 8: On the Trail of Textual Origins: RoBERTa-BiLSTM Approach to Detect AI-Generated Text

Jul 03, 2024

Abstract: Large Language Models (LLMs) have showcased impressive abilities in generating fluent responses to diverse user queries. However, concerns regarding the potential misuse of such texts in journalism, educational, and academic contexts have surfaced. SemEval 2024 introduces the task of Multigenerator, Multidomain, and Multilingual Black-Box Machine-Generated Text Detection, aiming to develop automated systems for identifying machine-generated text and detecting potential misuse. In this paper, we i) propose a RoBERTa-BiLSTM based classifier designed to classify text into two categories, AI-generated or human, and ii) conduct a comparative study of our model with baseline approaches to evaluate its effectiveness. This paper contributes to the advancement of automatic text detection systems in addressing the challenges posed by machine-generated text misuse. Our architecture ranked 46th among 125 on the official leaderboard with an accuracy of 80.83.

Significance of Chain of Thought in Gender Bias Mitigation for English-Dravidian Machine Translation

Jun 03, 2024

Abstract: Gender bias in machine translation (MT) systems poses a significant challenge to achieving accurate and inclusive translations. This paper examines gender bias in machine translation systems for languages such as Telugu and Kannada from the Dravidian family, analyzing how gender inflections affect translation accuracy and neutrality using Google Translate and ChatGPT. It finds that while plural forms can reduce bias, individual-centric sentences often maintain the bias due to historical stereotypes. The study evaluates the Chain of Thought processing, noting significant bias mitigation from 80% to 4% in Telugu and from 40% to 0% in Kannada. It also compares Telugu and Kannada translations, emphasizing the need for language-specific strategies to address these challenges and suggesting directions for future research to enhance fairness in both data preparation and prompts during inference.

Zero-Shot Multi-task Hallucination Detection

Mar 18, 2024

Abstract: In recent studies, the extensive utilization of large language models has underscored the importance of robust evaluation methodologies for assessing text generation quality and relevance to specific tasks. This has revealed a prevalent issue known as hallucination, an emergent condition in the model where generated text lacks faithfulness to the source and deviates from the evaluation criteria. In this study, we formally define hallucination and propose a framework for its quantitative detection in a zero-shot setting, leveraging our definition and the assumption that model outputs entail task and sample specific inputs. In detecting hallucinations, our solution achieves an accuracy of 0.78 in a model-aware setting and 0.61 in a model-agnostic setting. Notably, our solution maintains computational efficiency, requiring far fewer computational resources than other SOTA approaches, aligning with the trend towards lightweight and compressed models.

SemEval-2024 Task 8: Weighted Layer Averaging RoBERTa for Black-Box Machine-Generated Text Detection

Feb 24, 2024

Abstract: This document contains the details of the authors' submission to the proceedings of SemEval 2024's Task 8: Multigenerator, Multidomain, and Multilingual Black-Box Machine-Generated Text Detection Subtask A (monolingual) and B. Detection of machine-generated text is becoming an increasingly important task, with the advent of large language models (LLMs). In this document, we lay out the techniques utilized for performing the same, along with the results obtained.

GAE-ISumm: Unsupervised Graph-Based Summarization of Indian Languages

Dec 25, 2022

Abstract: Document summarization aims to create a precise and coherent summary of a text document. Many deep learning summarization models are developed mainly for English, often requiring a large training corpus and efficient pre-trained language models and tools. However, English summarization models for low-resource Indian languages are often limited by rich morphological variation, syntax, and semantic differences. In this paper, we propose GAE-ISumm, an unsupervised Indic summarization model that extracts summaries from text documents. In particular, our proposed model, GAE-ISumm, uses a Graph Autoencoder (GAE) to learn text representations and a document summary jointly. We also provide a manually annotated Telugu summarization dataset, TELSUM, to experiment with our model GAE-ISumm. Further, we experiment with most of the publicly available Indian language summarization datasets to investigate the effectiveness of GAE-ISumm on other Indian languages. Our experiments with GAE-ISumm in seven languages yield the following observations: (i) it is competitive with or better than state-of-the-art results on all datasets, (ii) it reports benchmark results on TELSUM, and (iii) the inclusion of positional and cluster information in the proposed model improved the performance of summaries.

Using Selective Masking as a Bridge between Pre-training and Fine-tuning

Nov 24, 2022

Abstract: Pre-training a language model and then fine-tuning it for downstream tasks has demonstrated state-of-the-art results for various NLP tasks. Pre-training is usually independent of the downstream task, and previous works have shown that this pre-training alone might not be sufficient to capture the task-specific nuances. We propose a way to tailor a pre-trained BERT model for the downstream task via task-specific masking before the standard supervised fine-tuning. For this, a word list specific to the task is first collected. For example, if the task is sentiment classification, we collect a small sample of words representing both positive and negative sentiments. Next, a word's importance for the task, called the word's task score, is measured using the word list. Each word is then assigned a probability of masking based on its task score. We experiment with different masking functions that assign the probability of masking based on the word's task score. The BERT model is further trained on the MLM objective, where masking is done using the above strategy. Following this, standard supervised fine-tuning is done for different downstream tasks. Results on these tasks show that the selective masking strategy outperforms random masking, indicating its effectiveness.
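
The selective masking idea in the abstract above can be illustrated with a minimal sketch. The abstract does not specify how the task score or the masking functions are defined, so everything here is an assumption made for illustration: `task_score` is a hypothetical word-list membership test, `mask_probability` is a hypothetical linear masking function with made-up `base` and `boost` values, and `selective_mask` works on plain word tokens rather than BERT subword tokens.

```python
import random


def task_score(word, task_words):
    # Hypothetical task score: 1.0 if the word is in the task word list, else 0.0.
    # The paper's actual scoring method is not given in the abstract.
    return 1.0 if word.lower() in task_words else 0.0


def mask_probability(score, base=0.15, boost=0.5):
    # Hypothetical linear masking function: a base masking rate plus a boost
    # proportional to the task score, capped at 1.0.
    return min(1.0, base + boost * score)


def selective_mask(tokens, task_words, rng, mask_token="[MASK]"):
    # Mask each token independently with a probability derived from its task score.
    # Returns the masked sequence and the MLM targets (None where nothing was masked).
    masked, labels = [], []
    for tok in tokens:
        p = mask_probability(task_score(tok, task_words))
        if rng.random() < p:
            masked.append(mask_token)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels


if __name__ == "__main__":
    rng = random.Random(0)
    sentiment_words = {"great", "terrible"}  # illustrative word list
    tokens = "the film was great but the ending was terrible".split()
    print(selective_mask(tokens, sentiment_words, rng))
```

Under these assumptions, words from the task list are masked far more often than other words, so the continued MLM training concentrates on predicting task-relevant vocabulary; setting `boost=0.0` reduces the sketch to uniform random masking for comparison.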
