Qinglin Yang

Towards Depth Foundation Model: Recent Trends in Vision-Based Depth Estimation

Jul 15, 2025

Abstract: Depth estimation is a fundamental task in 3D computer vision, crucial for applications such as 3D reconstruction, free-viewpoint rendering, robotics, autonomous driving, and AR/VR technologies. Traditional methods relying on hardware sensors like LiDAR are often limited by high cost, low resolution, and environmental sensitivity, which restricts their applicability in real-world scenarios. Recent advances in vision-based methods offer a promising alternative, yet they face challenges in generalization and stability due to either low-capacity model architectures or reliance on small-scale, domain-specific datasets. The emergence of scaling laws and foundation models in other domains has inspired the development of "depth foundation models": deep neural networks trained on large datasets with strong zero-shot generalization capabilities. This paper surveys the evolution of deep learning architectures and paradigms for depth estimation across the monocular, stereo, multi-view, and monocular video settings. We explore the potential of these models to address existing challenges and provide a comprehensive overview of large-scale datasets that can facilitate their development. By identifying key architectures and training strategies, we aim to highlight the path towards robust depth foundation models, offering insights into their future research and applications.

Efficient Model Compression for Hierarchical Federated Learning

May 27, 2024

Abstract: Federated learning (FL), an emerging collaborative learning paradigm, has garnered significant attention for its capacity to preserve privacy within distributed learning systems. In these systems, clients collaboratively train a unified neural network model using their local datasets and share model parameters rather than raw data, enhancing privacy. FL systems are predominantly designed for mobile and edge computing environments, where training typically occurs over wireless networks. Consequently, as model sizes increase, conventional FL frameworks consume increasingly substantial communication resources. To address this challenge and improve communication efficiency, this paper introduces a novel hierarchical FL framework that integrates the benefits of clustered FL and model compression. We present an adaptive clustering algorithm that identifies a core client and dynamically organizes clients into clusters. Furthermore, to enhance transmission efficiency, each core client implements a local aggregation with compression (LC aggregation) algorithm after collecting compressed models from other clients within the same cluster. Simulation results affirm that our proposed algorithms not only maintain comparable predictive accuracy but also significantly reduce energy consumption relative to existing FL mechanisms.
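The abstract does not specify the compression scheme or the aggregation rule, so the following is only a minimal sketch of the general idea of compressing at the clients, aggregating at a core client, and compressing again before upload. It assumes top-k magnitude sparsification and plain averaging, neither of which is confirmed by the paper; the function names `top_k_sparsify`, `aggregate_cluster`, and `lc_aggregate` are hypothetical.

```python
from typing import Dict, List


def top_k_sparsify(weights: List[float], k: int) -> Dict[int, float]:
    """Keep the k largest-magnitude entries as an index -> value map.

    Assumption: top-k sparsification stands in for the paper's
    (unspecified) compression method.
    """
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    return {i: weights[i] for i in order[:k]}


def aggregate_cluster(compressed: List[Dict[int, float]], dim: int) -> List[float]:
    """Average sparse client models; entries a client dropped count as zero."""
    total = [0.0] * dim
    for model in compressed:
        for i, value in model.items():
            total[i] += value
    return [t / len(compressed) for t in total]


def lc_aggregate(client_weights: List[List[float]], k: int) -> Dict[int, float]:
    """Hypothetical core-client step: aggregate compressed models, then re-compress."""
    dim = len(client_weights[0])
    compressed = [top_k_sparsify(w, k) for w in client_weights]  # done on each client
    local_model = aggregate_cluster(compressed, dim)             # done on the core client
    return top_k_sparsify(local_model, k)                        # sent up the hierarchy


if __name__ == "__main__":
    clients = [[1.0, -3.0, 0.5, 2.0], [0.0, 4.0, -2.0, 0.1]]
    print(lc_aggregate(clients, k=2))
```

In this sketch only `k` index-value pairs travel over each link instead of the full weight vector, which is where a communication saving would come from under these assumptions.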

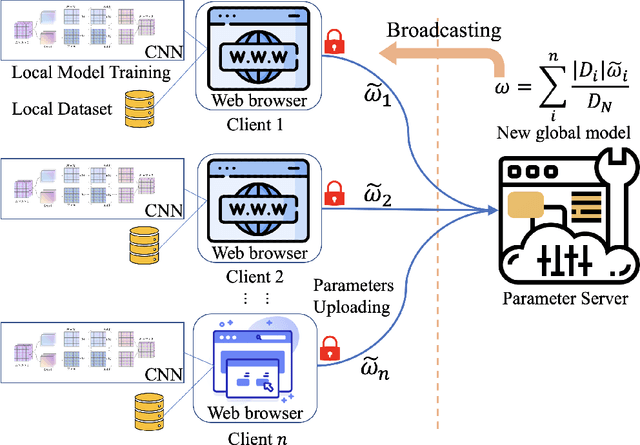

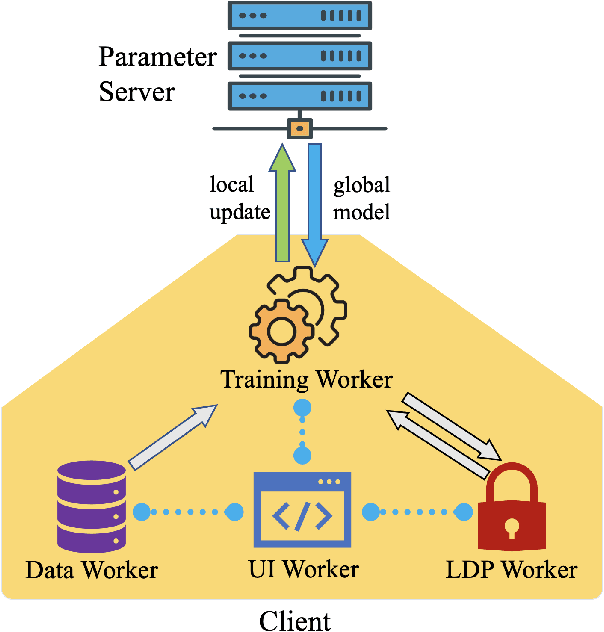

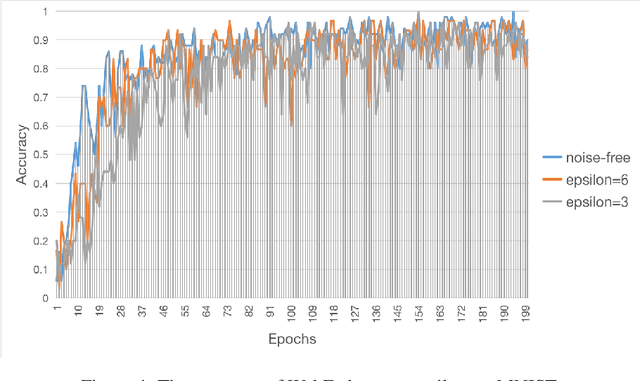

WebFed: Cross-platform Federated Learning Framework Based on Web Browser with Local Differential Privacy

Oct 22, 2021

Abstract: Federated learning has attracted considerable interest as a response to data silos and privacy concerns, since it allows clients to collaboratively train a global model on their local data without sharing that data with a third party. However, existing federated learning frameworks typically require complex environment setup (e.g., driver configuration for discrete graphics cards such as NVIDIA's, and a compilation environment), which hinders large-scale development and deployment. To ease the deployment of federated learning and the implementation of related applications, we propose WebFed, a browser-based federated learning framework that takes advantage of the browser's features (e.g., cross-platform support and JavaScript programming features) and strengthens privacy protection through a local differential privacy mechanism. Finally, we conduct experiments on heterogeneous devices to evaluate the performance of the proposed WebFed framework.
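The abstract does not say which local differential privacy mechanism WebFed uses, and the framework itself runs in JavaScript. Purely as an illustration of the concept, the sketch below perturbs a client update on the device before it is shared, assuming per-coordinate clipping followed by the Laplace mechanism. The function names and the `clip` and `epsilon` parameters are hypothetical.

```python
import random
from typing import List, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def privatize_update(
    update: List[float],
    clip: float,
    epsilon: float,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Clip each coordinate to [-clip, clip], then add Laplace noise locally.

    Assumption: any two clipped updates differ by at most 2 * clip per
    coordinate, so the L1 sensitivity is 2 * clip * len(update), and a
    noise scale of sensitivity / epsilon gives epsilon-LDP for the vector.
    """
    if rng is None:
        rng = random.Random()
    clipped = [max(-clip, min(clip, u)) for u in update]
    scale = 2.0 * clip * len(clipped) / epsilon
    return [c + laplace_noise(scale, rng) for c in clipped]


if __name__ == "__main__":
    noisy = privatize_update([0.5, -2.0, 0.1], clip=1.0, epsilon=1.0)
    print(noisy)  # values vary from run to run
```

Because the noise is added on the client, the server only ever sees the perturbed vector. A smaller `epsilon` means stronger privacy but noisier updates.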
