Pu Wang

DCHT: Deep Complex Hybrid Transformer for Speech Enhancement

Oct 30, 2023

Abstract:Most current deep learning-based approaches for speech enhancement operate only in either the spectrogram or the waveform domain. Although a cross-domain transformer combining waveform- and spectrogram-domain inputs has been proposed, its performance can be further improved. In this paper, we present a novel deep complex hybrid transformer that integrates spectrogram- and waveform-domain approaches to improve the performance of speech enhancement. The proposed model consists of two parts: a complex Swin-Unet in the spectrogram domain and a dual-path transformer network (DPTnet) in the waveform domain. We first construct a complex Swin-Unet network in the spectrogram domain and perform speech enhancement in the complex audio spectrum. We then introduce an improved DPT by adding memory-compressed attention. Our model is capable of learning multi-domain features to reduce noise in different domains in a complementary way. The experimental results on the BirdSoundsDenoising dataset and the VCTK+DEMAND dataset indicate that our method achieves better performance than state-of-the-art methods.

DPATD: Dual-Phase Audio Transformer for Denoising

Oct 30, 2023

Abstract:Recent high-performance transformer-based speech enhancement models demonstrate that time-domain methods can achieve performance similar to that of time-frequency-domain methods. However, time-domain speech enhancement systems typically receive input audio sequences consisting of a large number of time steps, making it challenging to model extremely long sequences and to train models that perform adequately. In this paper, we address these challenges by using smaller audio chunks as input, which makes more efficient use of the audio information. We propose a dual-phase audio transformer for denoising (DPATD), a novel model that organizes transformer layers in a deep structure to learn clean audio sequences for denoising. DPATD splits the audio input into smaller chunks, where the input length can be proportional to the square root of the original sequence length. Our memory-compressed explainable attention is efficient and converges faster than the frequently used self-attention module. Extensive experiments demonstrate that our model outperforms state-of-the-art methods.
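
The chunking idea in this abstract can be illustrated with a minimal sketch. It is not the DPATD implementation: the function name `split_into_chunks`, the 50% overlap, the zero-padding of the last chunk, and the `sqrt(2 * n)` chunk-length heuristic are all assumptions made for illustration. The only point taken from the abstract is that the chunk length can scale with the square root of the original sequence length.

```python
import math


def split_into_chunks(samples, chunk_len=None, hop=None):
    """Split a 1-D sequence into overlapping, fixed-length chunks.

    Hypothetical helper for illustration; the last chunk is zero-padded.
    """
    n = len(samples)
    if chunk_len is None:
        # Assumed heuristic: a chunk length near sqrt(2 * n), so that the
        # chunk length and the number of chunks both grow like sqrt(n).
        chunk_len = max(1, math.isqrt(2 * n))
    if hop is None:
        # Assumed 50% overlap between neighbouring chunks.
        hop = max(1, chunk_len // 2)

    chunks = []
    start = 0
    while True:
        chunk = list(samples[start:start + chunk_len])
        chunk += [0.0] * (chunk_len - len(chunk))  # pad the final chunk
        chunks.append(chunk)
        if start + chunk_len >= n:
            break
        start += hop
    return chunks


# One second of audio at 16 kHz (dummy values) becomes short chunks.
audio = [float(i) for i in range(16000)]
chunks = split_into_chunks(audio)
print(len(chunks), len(chunks[0]))
```

With this layout, a model can attend within each chunk and then across chunks, so no single attention step sees the full 16000-sample sequence.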

GaitSADA: Self-Aligned Domain Adaptation for mmWave Gait Recognition

Feb 01, 2023

Abstract:mmWave radar-based gait recognition is a novel user identification method that captures human gait biometrics from mmWave radar return signals. This technology offers privacy protection and is resilient to weather and lighting conditions. However, its generalization performance is still unknown, which limits its practical deployment. To address this problem, in this paper, a non-synthetic dataset is collected and analyzed to reveal the presence of spatial and temporal domain shifts in mmWave gait biometric data, which significantly impact identification accuracy. To address this issue, a novel self-aligned domain adaptation method called GaitSADA is proposed. GaitSADA improves system generalization performance by using a two-stage semi-supervised model training approach. The first stage uses semi-supervised contrastive learning and the second stage uses semi-supervised consistency training with centroid alignment. Extensive experiments show that GaitSADA outperforms representative domain adaptation methods by an average of 15.41% in low data regimes.
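
The abstract names centroid alignment but does not give its formulation. As a rough sketch of the general idea only, the snippet below computes per-class mean features in a source and a target domain and penalizes the squared distance between matching centroids. The function names, the use of pseudo-labels for the target domain, and the squared Euclidean distance are assumptions for illustration, not details confirmed by the abstract.

```python
def class_centroids(features, labels):
    """Return the mean feature vector for each class label."""
    sums, counts = {}, {}
    for vec, lab in zip(features, labels):
        if lab not in sums:
            sums[lab] = [0.0] * len(vec)
            counts[lab] = 0
        sums[lab] = [s + v for s, v in zip(sums[lab], vec)]
        counts[lab] += 1
    return {lab: [s / counts[lab] for s in total] for lab, total in sums.items()}


def centroid_alignment_loss(src_feats, src_labels, tgt_feats, tgt_pseudo_labels):
    """Mean squared Euclidean distance between matching class centroids.

    Target labels are assumed to be pseudo-labels predicted by the model.
    Classes that appear in only one domain are ignored.
    """
    src = class_centroids(src_feats, src_labels)
    tgt = class_centroids(tgt_feats, tgt_pseudo_labels)
    shared = sorted(set(src) & set(tgt))
    if not shared:
        return 0.0
    total = 0.0
    for lab in shared:
        total += sum((a - b) ** 2 for a, b in zip(src[lab], tgt[lab]))
    return total / len(shared)


# Toy 2-D features: class 0 is shifted between domains, class 1 is not.
source = [[0.0, 0.0], [2.0, 0.0], [4.0, 4.0]]
target = [[1.0, 1.0], [4.0, 4.0]]
loss = centroid_alignment_loss(source, [0, 0, 1], target, [0, 1])
print(loss)
```

Minimizing a term of this kind pulls same-identity features from the two domains toward each other, which is one common way to reduce domain shift.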

A Modular Multi-stage Lightweight Graph Transformer Network for Human Pose and Shape Estimation from 2D Human Pose

Jan 31, 2023

Abstract:In this research, we address the challenge faced by existing deep learning-based human mesh reconstruction methods in balancing accuracy and computational efficiency. These methods typically prioritize accuracy, resulting in large network sizes and excessive computational complexity, which may hinder their practical application in real-world scenarios, such as virtual reality systems. To address this issue, we introduce a modular multi-stage lightweight graph-based transformer network for human pose and shape estimation from 2D human pose, a pose-based human mesh reconstruction approach that prioritizes computational efficiency without sacrificing reconstruction accuracy. Our method consists of a 2D-to-3D lifter module that utilizes graph transformers to analyze structured and implicit joint correlations in 2D human poses, and a mesh regression module that combines the extracted pose features with a mesh template to produce the final human mesh parameters.

Skeleton-based Human Action Recognition via Convolutional Neural Networks (CNN)

Jan 31, 2023

Abstract:Recently, interest in skeleton-based action recognition has increased remarkably within the research community, owing to its advantages, including computational efficiency, representative features, and illumination invariance. Despite this, researchers continue to investigate the best way to represent human actions through skeleton representations and the extracted features. As a result, the growth and availability of human action recognition datasets have risen substantially. In addition, deep learning-based algorithms have gained widespread popularity due to remarkable advancements in various computer vision tasks. Most state-of-the-art contributions in skeleton-based action recognition incorporate a Graph Convolutional Network (GCN) architecture for representing the human body and extracting features. Our research demonstrates that Convolutional Neural Networks (CNNs) can attain results comparable to GCNs, provided that the proper training techniques, augmentations, and optimizers are applied. Our approach has been rigorously validated, and we have achieved a score of 95% on the NTU-60 dataset.

GaitMixer: Skeleton-based Gait Representation Learning via Wide-spectrum Multi-axial Mixer

Oct 29, 2022

Abstract:Most existing gait recognition methods are appearance-based and rely on silhouettes extracted from video data of human walking activities. The less-investigated skeleton-based gait recognition methods directly learn the gait dynamics from 2D/3D human skeleton sequences and are theoretically more robust in the presence of appearance changes caused by clothes, hairstyles, and carried objects. However, the performance of skeleton-based solutions still lags far behind that of the appearance-based ones. This paper aims to close this performance gap by proposing a novel network model, GaitMixer, to learn a more discriminative gait representation from skeleton sequence data. In particular, GaitMixer follows a heterogeneous multi-axial mixer architecture, which exploits a spatial self-attention mixer followed by a temporal large-kernel convolution mixer to learn rich multi-frequency signals in the gait feature maps. Experiments on the widely used gait database, CASIA-B, demonstrate that GaitMixer outperforms the previous SOTA skeleton-based methods by a large margin while achieving competitive performance compared with the representative appearance-based solutions. Code will be available at https://github.com/exitudio/gaitmixer

Quantum Feature Extraction for THz Multi-Layer Imaging

Jul 18, 2022

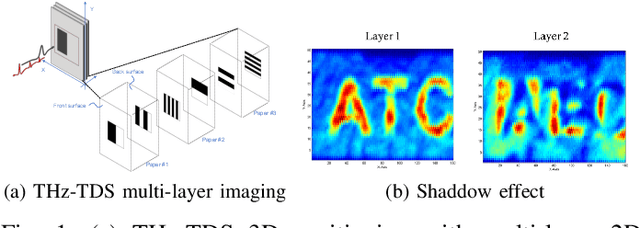

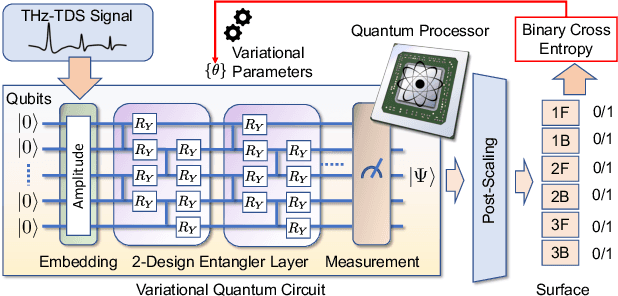

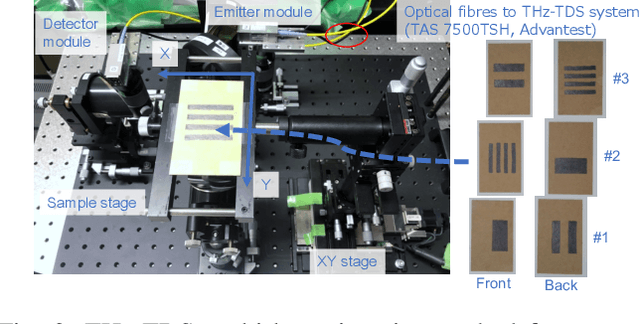

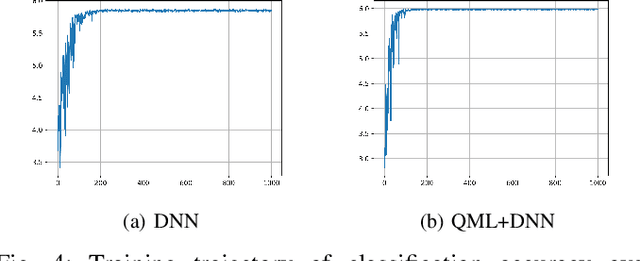

Abstract:Learning-based THz multi-layer imaging has recently been used for contactless three-dimensional (3D) positioning and encoding. Through experimental validation, we show a proof-of-concept demonstration of an emerging quantum machine learning (QML) framework that deals with depth variation, the shadow effect, and double-sided content recognition.

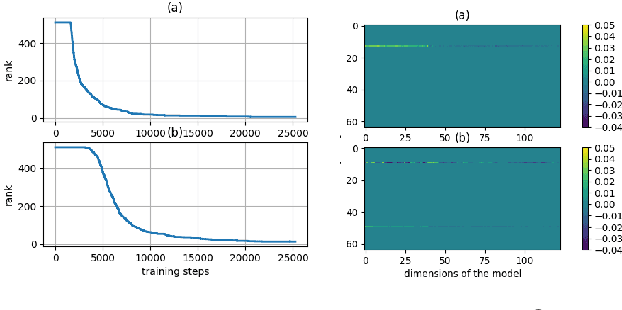

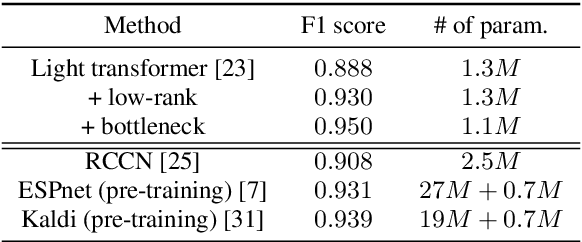

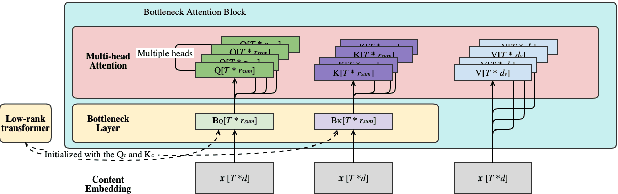

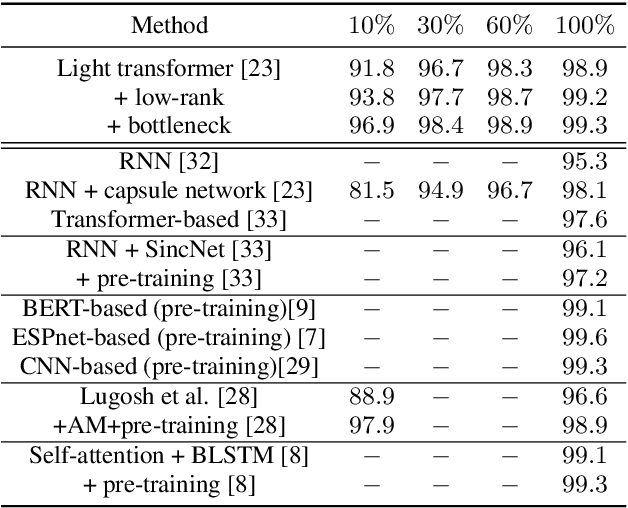

Bottleneck Low-rank Transformers for Low-resource Spoken Language Understanding

Jun 28, 2022

Abstract:End-to-end spoken language understanding (SLU) systems benefit from pretraining on large corpora, followed by fine-tuning on application-specific data. The resulting models are too large for on-edge applications. For instance, BERT-based systems contain over 110M parameters. Observing that the model is overparameterized, we propose a lean transformer structure where the dimension of the attention mechanism is automatically reduced using group sparsity. We also propose a variant where the learned attention subspace is transferred to an attention bottleneck layer. In a low-resource setting and without pre-training, the resulting compact SLU model achieves accuracies competitive with pre-trained large models.
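
To make the group-sparsity idea concrete, here is a minimal sketch of a group-lasso penalty followed by pruning. It assumes that each row of a weight matrix corresponds to one attention dimension and that rows with a near-zero norm can be dropped. The function names, the row-wise grouping, and the threshold are hypothetical; the abstract does not specify how groups are defined or pruned.

```python
import math


def row_norm(row):
    """L2 norm of one weight group (here: one row)."""
    return math.sqrt(sum(w * w for w in row))


def group_lasso_penalty(weight_rows):
    """Sum of per-row L2 norms.

    Added to a training loss, this term tends to push entire rows to
    zero rather than individual weights.
    """
    return sum(row_norm(row) for row in weight_rows)


def prune_small_groups(weight_rows, threshold=1e-3):
    """Keep rows whose norm exceeds `threshold` (assumed value).

    Returns the kept rows and their original indices.
    """
    kept_idx = [i for i, row in enumerate(weight_rows) if row_norm(row) > threshold]
    return [weight_rows[i] for i in kept_idx], kept_idx


# Toy projection with three attention dimensions; two are nearly zero.
weights = [[3.0, 4.0], [0.0, 0.0], [0.0, 1e-5]]
penalty = group_lasso_penalty(weights)
kept, indices = prune_small_groups(weights)
print(penalty, indices)
```

After pruning, the surviving rows define a smaller attention dimension, which is the sense in which the dimension is reduced automatically during training.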

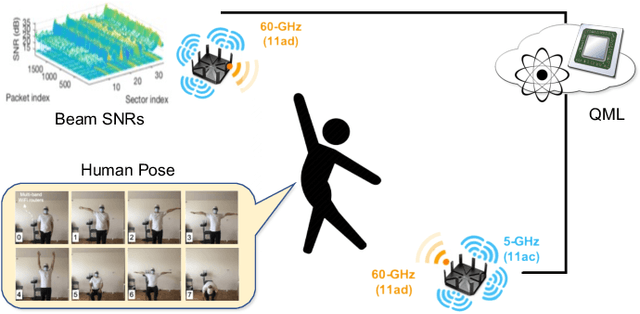

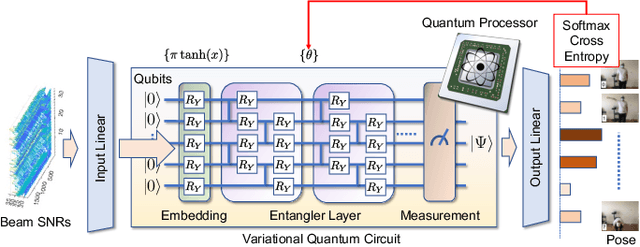

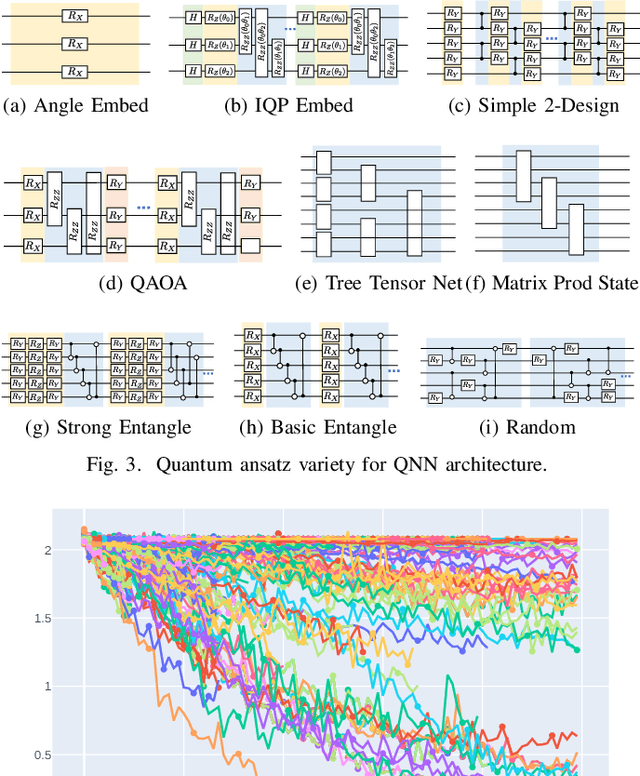

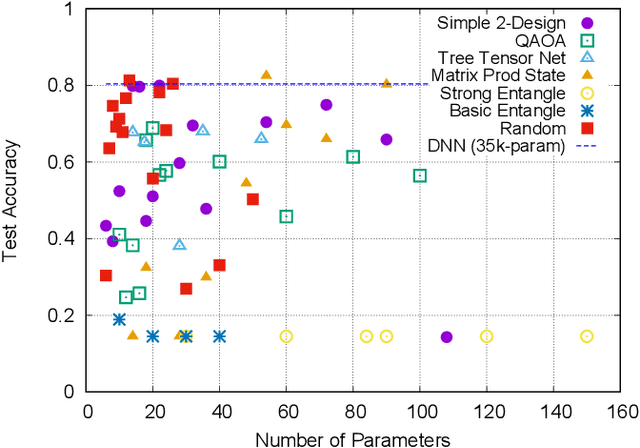

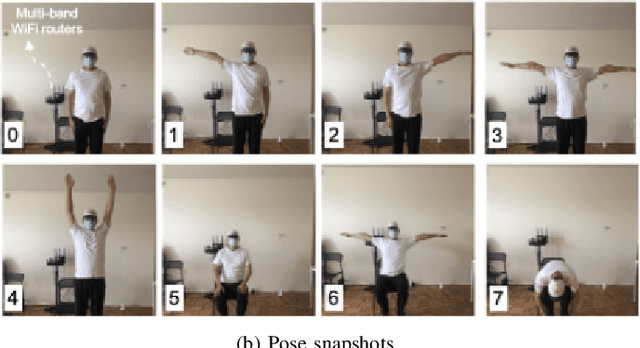

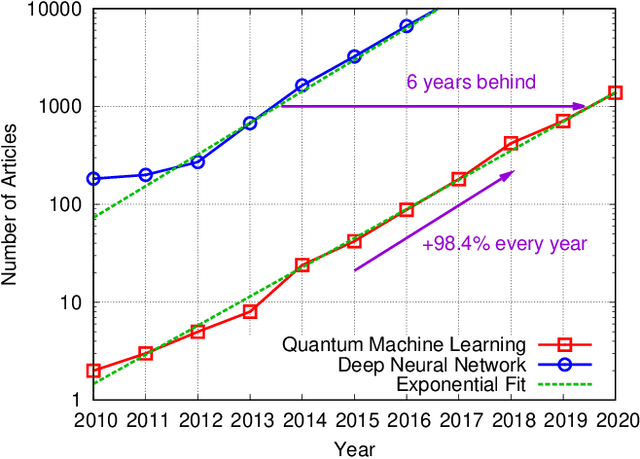

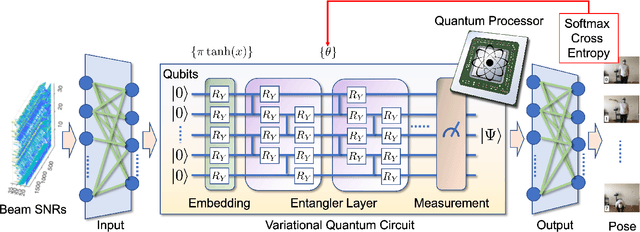

AutoQML: Automated Quantum Machine Learning for Wi-Fi Integrated Sensing and Communications

May 17, 2022

Abstract:Commercial Wi-Fi devices can be used for integrated sensing and communications (ISAC) to jointly exchange data and monitor the indoor environment. In this paper, we investigate a proof-of-concept approach that uses an automated quantum machine learning (AutoQML) framework called AutoAnsatz to recognize human gestures. We address how to efficiently design quantum circuits to configure quantum neural networks (QNN). The effectiveness of AutoQML is validated by an in-house experiment for human pose recognition, achieving state-of-the-art performance of greater than 80% accuracy with a limited data size and a significantly smaller number of trainable parameters.

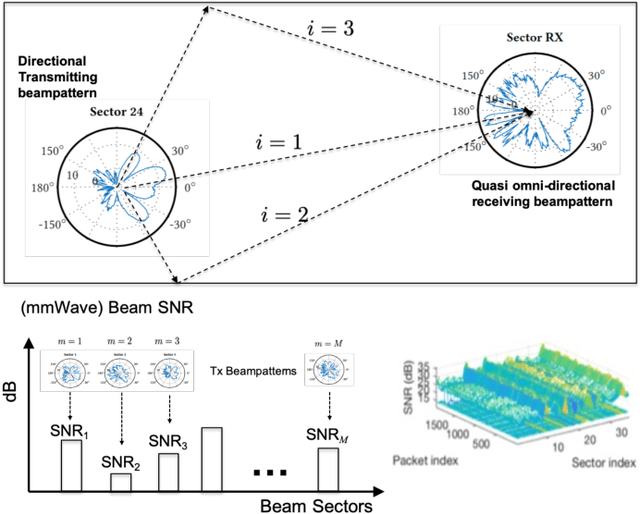

Quantum Transfer Learning for Wi-Fi Sensing

May 17, 2022

Abstract:Beyond data communications, commercial-off-the-shelf Wi-Fi devices can be used to monitor human activities, track device locomotion, and sense the ambient environment. In particular, spatial beam attributes that are inherently available in the 60-GHz IEEE 802.11ad/ay standards have been shown to be effective in terms of overhead and channel measurement granularity for these indoor sensing tasks. In this paper, we investigate transfer learning to mitigate domain shift in human monitoring tasks when Wi-Fi settings and environments change over time. As a proof-of-concept study, we consider quantum neural networks (QNN) as well as classical deep neural networks (DNN) for the future quantum-ready society. The effectiveness of both DNN and QNN is validated by an in-house experiment for human pose recognition, achieving greater than 90% accuracy with a limited data size.
