Pingqiang Zhou

Efficient RF Passive Components Modeling with Bayesian Online Learning and Uncertainty Aware Sampling

Nov 19, 2025

Abstract:Conventional machine-learning-based modeling of radio frequency (RF) passive components requires extensive electromagnetic (EM) simulations to cover the geometric and frequency design spaces, creating a computational bottleneck. In this paper, we introduce an uncertainty-aware Bayesian online learning framework for efficient parametric modeling of RF passive components. It comprises: 1) a Bayesian neural network with reconfigurable heads that models the joint geometric-frequency domain while quantifying uncertainty; and 2) an adaptive sampling strategy that uses uncertainty guidance to optimize training data sampling across the geometric parameters and the frequency domain simultaneously. Validated on three RF passive components, the framework achieves accurate modeling while using only 2.86% of the EM simulation time of a traditional ML-based flow, a 35 times speedup.
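
The abstract does not state the acquisition rule, so the following is only a minimal sketch of one common form of uncertainty-guided sampling: draw several stochastic predictions per candidate (geometry, frequency) point, rank the candidates by predictive standard deviation, and send the top few to the EM simulator. The function name `select_by_uncertainty`, the candidate grid, and the synthetic predictions are all assumptions made for illustration and are not taken from the paper.

```python
import numpy as np

def select_by_uncertainty(pred_samples, k):
    """Pick the k candidates with the largest predictive spread.

    pred_samples: array of shape (S, N), S stochastic predictions
                  (e.g. posterior draws) for each of N candidates.
    Returns (indices of the k most uncertain candidates, per-candidate std).
    """
    std = np.asarray(pred_samples, dtype=float).std(axis=0)
    order = np.argsort(-std)  # descending by uncertainty
    return order[:k], std

# Hypothetical candidate pool: every (width, frequency) pair on a small grid.
widths = np.linspace(1.0, 5.0, 5)       # assumed geometric parameter
freqs = np.linspace(1.0, 40.0, 8)       # assumed frequency axis, GHz
candidates = np.array([(w, f) for w in widths for f in freqs])

# Stand-in for Bayesian model output: noise grows with frequency, so the
# high-frequency candidates look more uncertain. Purely synthetic.
rng = np.random.default_rng(0)
pred_samples = rng.normal(scale=0.01 * candidates[:, 1], size=(30, len(candidates)))

picked, std = select_by_uncertainty(pred_samples, k=4)
print(candidates[picked])  # points that would be simulated next
```

In an online loop, the selected points would be simulated, appended to the training set, and the model updated before the next selection round.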

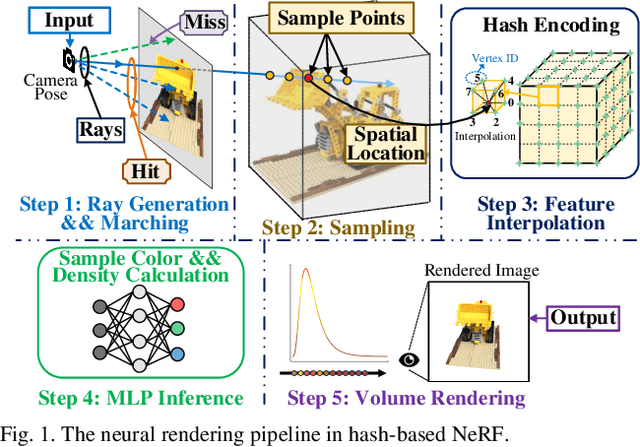

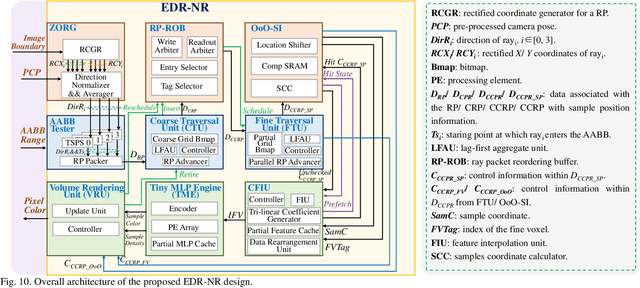

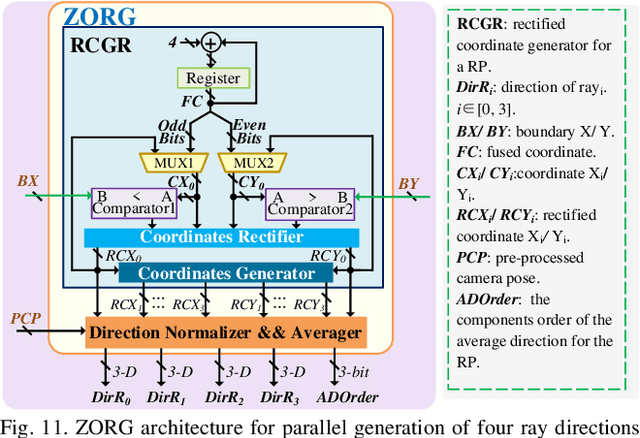

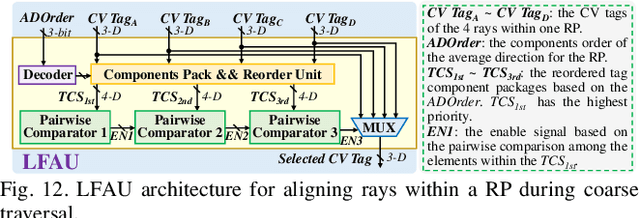

An Energy-Efficient Edge Coprocessor for Neural Rendering with Explicit Data Reuse Strategies

Oct 09, 2025

Abstract:Neural radiance fields (NeRF) have transformed 3D reconstruction and rendering, facilitating photorealistic image synthesis from sparse viewpoints. This work introduces an explicit data reuse neural rendering (EDR-NR) architecture, which reduces frequent external memory accesses (EMAs) and cache misses by exploiting spatial locality at three levels: rays, ray packets (RPs), and samples. The EDR-NR architecture features a four-stage scheduler that clusters rays on the basis of Z-order, prioritizes lagging rays when ray divergence happens, reorders RPs based on spatial proximity, and issues samples out of order (OoO) according to the availability of on-chip feature data. In addition, a four-tier hierarchical RP marching (HRM) technique is integrated with an axis-aligned bounding box (AABB) to facilitate spatial skipping (SS), reducing redundant computations and improving throughput. Moreover, a balanced allocation strategy for feature storage is proposed to mitigate SRAM bank conflicts. Fabricated using a 40 nm process with a die area of 10.5 mm², the EDR-NR chip demonstrates a 2.41× enhancement in normalized energy efficiency, a 1.21× improvement in normalized area efficiency, a 1.20× increase in normalized throughput, and a 53.42% reduction in on-chip SRAM consumption compared to state-of-the-art accelerators.
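
To illustrate the Z-order clustering mentioned in the abstract, here is a small software sketch that sorts rays by the Morton code of their pixel coordinates and cuts the sorted list into fixed-size packets. The chip's actual scheduler is not described at this level of detail, so the helper names (`part1by1`, `morton2d`, `cluster_rays`), the use of pixel coordinates as the key, and the packet size are assumptions.

```python
def part1by1(v):
    """Spread the low 16 bits of v so that a zero bit follows each data bit."""
    v &= 0x0000FFFF
    v = (v | (v << 8)) & 0x00FF00FF
    v = (v | (v << 4)) & 0x0F0F0F0F
    v = (v | (v << 2)) & 0x33333333
    v = (v | (v << 1)) & 0x55555555
    return v

def morton2d(x, y):
    """Interleave the bits of x and y (x in even bit positions, y in odd)."""
    return part1by1(x) | (part1by1(y) << 1)

def cluster_rays(pixels, packet_size):
    """Sort (x, y) pixel coordinates along the Z-order curve and split
    the result into consecutive packets of packet_size rays."""
    ordered = sorted(pixels, key=lambda p: morton2d(p[0], p[1]))
    return [ordered[i:i + packet_size] for i in range(0, len(ordered), packet_size)]

# Rays for a 4x4 image tile, grouped four at a time.
tile = [(x, y) for y in range(4) for x in range(4)]
for packet in cluster_rays(tile, packet_size=4):
    print(packet)
```

With a power-of-two tile and a packet size of four, each packet covers one 2x2 pixel block, so rays in the same packet tend to traverse nearby regions of the scene and touch overlapping feature data.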

Tensor Train Random Projection

Oct 21, 2020

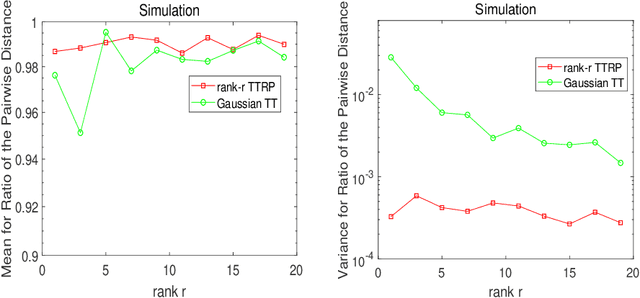

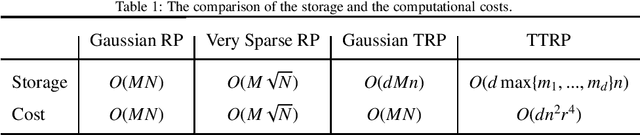

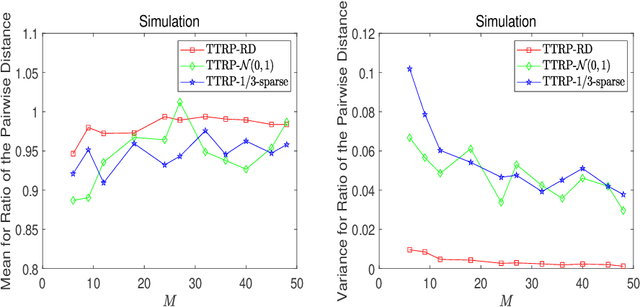

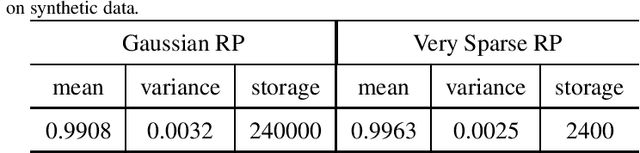

Abstract:This work proposes a novel tensor train random projection (TTRP) method for dimension reduction, where the pairwise distances can be approximately preserved. Based on the tensor train format, this new random projection method can speed up the computation for high dimensional problems and requires less storage with little loss in accuracy, compared with existing methods (e.g., very sparse random projection). Our TTRP is systematically constructed through a rank-one TT-format with Rademacher random variables, which results in efficient projection with small variances. The isometry property of TTRP is proven in this work, and detailed numerical experiments with data sets (synthetic, MNIST and CIFAR-10) are conducted to demonstrate the efficiency of TTRP.
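
A minimal NumPy sketch of the idea, under the assumption that a TT-format matrix with all ranks equal to one is a Kronecker product of small factor matrices: each factor has independent Rademacher (+1/-1) entries, and the projection is applied mode by mode without forming the dense matrix. The function names `make_cores` and `ttrp_apply`, the mode sizes, and the 1/sqrt(M) scaling convention are illustrative choices, not code from the paper.

```python
import numpy as np

def make_cores(in_dims, out_dims, rng):
    """One Rademacher matrix of shape (m_k, n_k) per tensor mode."""
    return [rng.choice([-1.0, 1.0], size=(m, n)) for n, m in zip(in_dims, out_dims)]

def ttrp_apply(cores, x):
    """Apply (R_1 kron R_2 kron ... kron R_d) / sqrt(M) to x, where M is the
    output dimension, by contracting one mode of the reshaped input at a time."""
    in_dims = [c.shape[1] for c in cores]
    out_size = int(np.prod([c.shape[0] for c in cores]))
    t = np.asarray(x, dtype=float).reshape(in_dims)
    for k, core in enumerate(cores):
        # Contract mode k with the k-th factor, then restore the mode order.
        t = np.moveaxis(np.tensordot(core, t, axes=([1], [k])), 0, k)
    return t.reshape(-1) / np.sqrt(out_size)

rng = np.random.default_rng(0)
cores = make_cores(in_dims=(4, 5, 6), out_dims=(3, 3, 3), rng=rng)  # 120 -> 27
a, b = rng.normal(size=120), rng.normal(size=120)

# Distance before and after projection; the same cores must be used for both points.
print(np.linalg.norm(a - b))
print(np.linalg.norm(ttrp_apply(cores, a) - ttrp_apply(cores, b)))
```

In this example the factors hold 3·4 + 3·5 + 3·6 = 45 numbers, whereas a dense 27 × 120 projection matrix would hold 3240, which is the storage saving the abstract refers to.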
