Pengcheng Fan

Baichuan-M1: Pushing the Medical Capability of Large Language Models

Feb 18, 2025

Abstract: The current generation of large language models (LLMs) is typically designed for broad, general-purpose applications, while domain-specific LLMs, especially in vertical fields like medicine, remain relatively scarce. In particular, the development of highly efficient and practical LLMs for the medical domain is challenging due to the complexity of medical knowledge and the limited availability of high-quality data. To bridge this gap, we introduce Baichuan-M1, a series of large language models specifically optimized for medical applications. Unlike traditional approaches that simply continue pretraining on existing models or apply post-training to a general base model, Baichuan-M1 is trained from scratch with a dedicated focus on enhancing medical capabilities. Our model is trained on 20 trillion tokens and incorporates a range of effective training methods that strike a balance between general capabilities and medical expertise. As a result, Baichuan-M1 not only performs strongly across general domains such as mathematics and coding but also excels in specialized medical fields. We have open-sourced Baichuan-M1-14B, a mini version of our model, which can be accessed through the following links.

Boosting Mask R-CNN Performance for Long, Thin Forensic Traces with Pre-Segmentation and IoU Region Merging

Mar 08, 2022

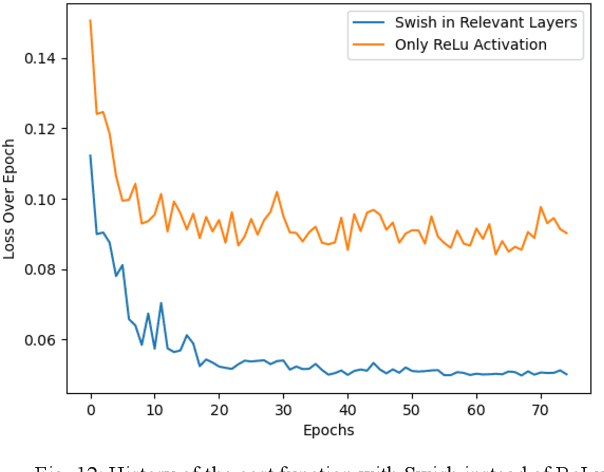

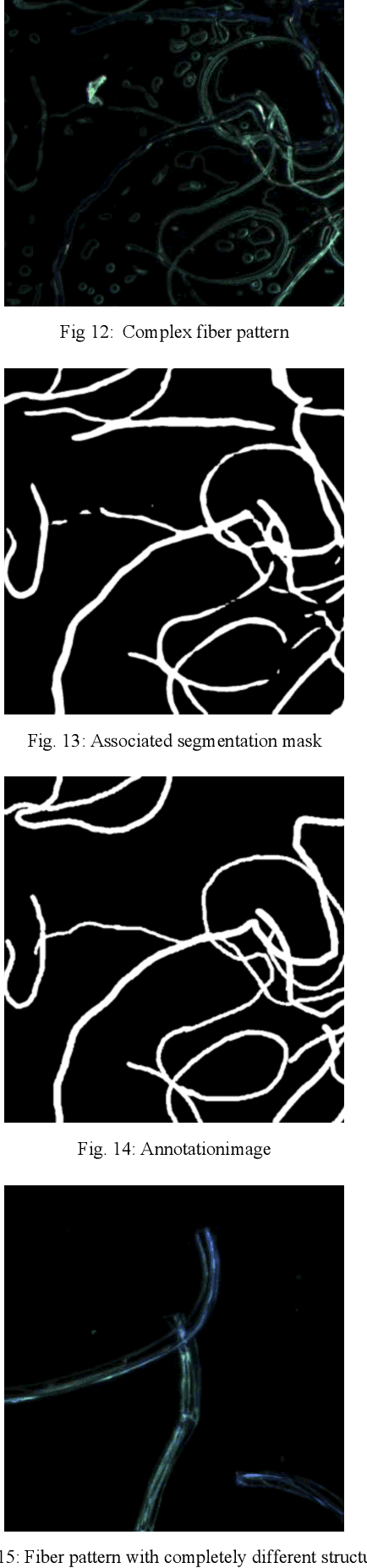

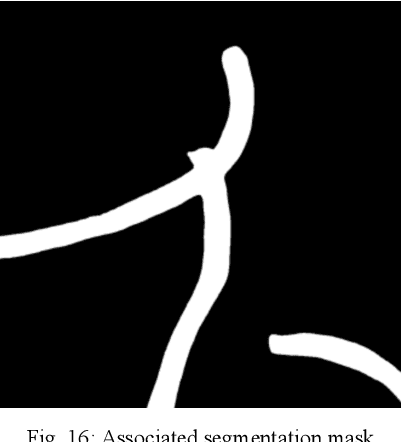

Abstract: Mask R-CNN has recently achieved great success in the field of instance segmentation. However, weaknesses of the algorithm have been repeatedly pointed out as well, especially in the segmentation of long, sparse objects whose orientation is not exclusively horizontal or vertical. We present here an approach that significantly improves the performance of the algorithm by first pre-segmenting the images with a PSPNet algorithm. To further improve its prediction, we have developed our own cost functions and heuristics in the form of training strategies, which can prevent so-called (early) overfitting and achieve a more targeted convergence. Furthermore, due to the high variance of the images, especially for PSPNet, we aimed to develop strategies for a high robustness and generalization, which are also presented here.
