Patrick Hall

Branching Out: Broadening AI Measurement and Evaluation with Measurement Trees

Sep 30, 2025

Abstract: This paper introduces measurement trees, a novel class of metrics designed to combine various constructs into an interpretable multi-level representation of a measurand. Unlike conventional metrics that yield single values, vectors, surfaces, or categories, measurement trees produce a hierarchical directed graph in which each node summarizes its children through user-defined aggregation methods. In response to recent calls to expand the scope of AI system evaluation, measurement trees enhance metric transparency and facilitate the integration of heterogeneous evidence, including, e.g., agentic, business, energy-efficiency, sociotechnical, or security signals. We present definitions and examples, demonstrate practical utility through a large-scale measurement exercise, and provide accompanying open-source Python code. By operationalizing a transparent approach to measurement of complex constructs, this work offers a principled foundation for broader and more interpretable AI evaluation.
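
The structure described in this abstract, a hierarchy in which each node summarizes its children through a user-defined aggregation method, can be illustrated with a minimal Python sketch. This is not the paper's accompanying code: the `Node` class, its fields, the `score` and `levels` methods, and the example signal names and values are all hypothetical, chosen only to show the idea.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, Iterator, List, Optional, Sequence, Tuple


@dataclass
class Node:
    """Hypothetical measurement-tree node (illustration only)."""
    name: str
    value: Optional[float] = None  # raw measurement, used on leaves only
    aggregate: Callable[[Sequence[float]], float] = mean  # user-defined summary
    children: List["Node"] = field(default_factory=list)

    def score(self) -> float:
        """Return the leaf value, or the aggregate of the children's scores."""
        if not self.children:
            if self.value is None:
                raise ValueError(f"leaf {self.name!r} has no value")
            return self.value
        return self.aggregate([child.score() for child in self.children])

    def levels(self, depth: int = 0) -> Iterator[Tuple[int, str, float]]:
        """Yield (depth, name, score) for every node, parents before children."""
        yield depth, self.name, self.score()
        for child in self.children:
            yield from child.levels(depth + 1)


# Made-up example: two security signals averaged, then combined with an
# energy-efficiency signal by taking the worst (minimum) score.
tree = Node(
    "overall",
    aggregate=min,
    children=[
        Node(
            "security",
            aggregate=mean,
            children=[Node("prompt_injection", 0.5), Node("data_leakage", 1.0)],
        ),
        Node("energy_efficiency", 0.9),
    ],
)

for depth, name, score in tree.levels():
    print("  " * depth + f"{name}: {score:.2f}")
```

Because every intermediate node keeps its own score, a reader can trace the top-level number back to the signals that produced it, which is the transparency property the abstract emphasizes. Swapping `min` for a weighted mean or another callable changes how a level is summarized without touching the rest of the tree.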

Reality Check: A New Evaluation Ecosystem Is Necessary to Understand AI's Real World Effects

May 24, 2025

Abstract: Conventional AI evaluation approaches concentrated within the AI stack exhibit systemic limitations for exploring, navigating, and resolving the human and societal factors that play out in real-world deployment, such as in the education, finance, healthcare, and employment sectors. AI capability evaluations can capture detail about first-order effects, such as whether immediate system outputs are accurate or contain toxic, biased, or stereotypical content. AI's second-order effects, i.e., any long-term outcomes and consequences that may result from AI use in the real world, have become a significant area of interest as the technology becomes embedded in our daily lives. These secondary effects can include shifts in user behavior; societal, cultural, and economic ramifications; workforce transformations; and long-term downstream impacts that may result from a broad and growing set of risks. This position paper argues that measuring the indirect and secondary effects of AI will require expansion beyond static, single-turn approaches conducted in silico to include testing paradigms that can capture what actually materializes when people use AI technology in context. Specifically, we describe the need for data and methods that can facilitate contextual awareness and enable downstream interpretation and decision making about AI's secondary effects, and recommend requirements for a new ecosystem.

Guidelines for Responsible and Human-Centered Use of Explainable Machine Learning

Jun 08, 2019

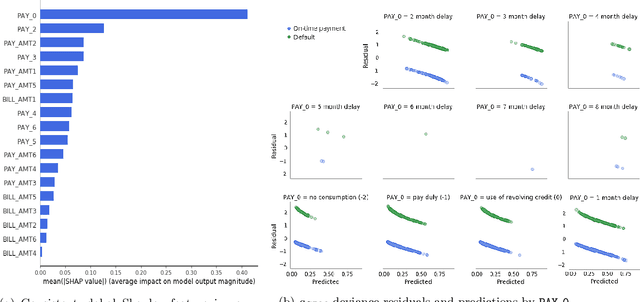

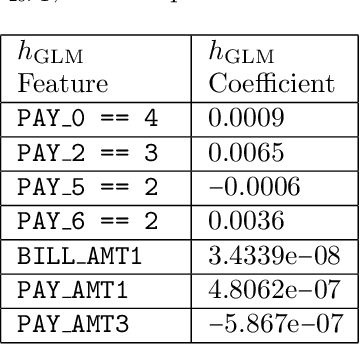

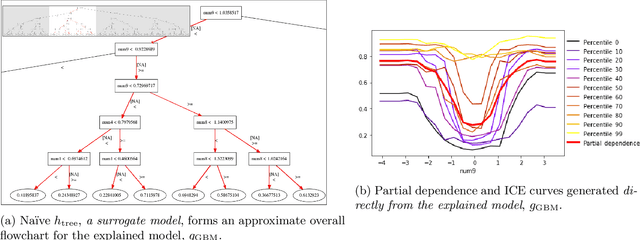

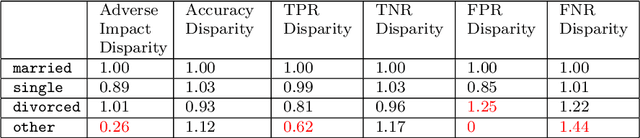

Abstract: Explainable machine learning (ML) has been implemented in numerous open source and proprietary software packages, and explainable ML is an important aspect of commercial predictive modeling. However, explainable ML can be misused, particularly as a faulty safeguard for harmful black-boxes, e.g., fairwashing, and for other malevolent purposes like model stealing. This text discusses definitions, examples, and guidelines that promote a holistic and human-centered approach to ML which includes interpretable (i.e., white-box) models and explanatory, debugging, and disparate impact analysis techniques.
