Ohad Shamir

Initialization-Dependent Sample Complexity of Linear Predictors and Neural Networks

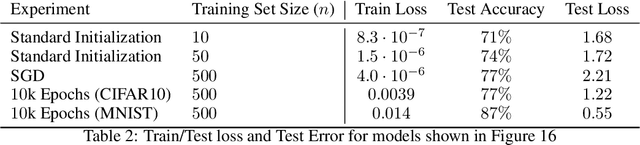

May 25, 2023

Abstract: We provide several new results on the sample complexity of vector-valued linear predictors (parameterized by a matrix), and more generally neural networks. Focusing on size-independent bounds, where only the Frobenius norm distance of the parameters from some fixed reference matrix $W_0$ is controlled, we show that the sample complexity behavior can be surprisingly different than what we may expect considering the well-studied setting of scalar-valued linear predictors. This also leads to new sample complexity bounds for feed-forward neural networks, tackling some open questions in the literature, and establishing a new convex linear prediction problem that is provably learnable without uniform convergence.

From Tempered to Benign Overfitting in ReLU Neural Networks

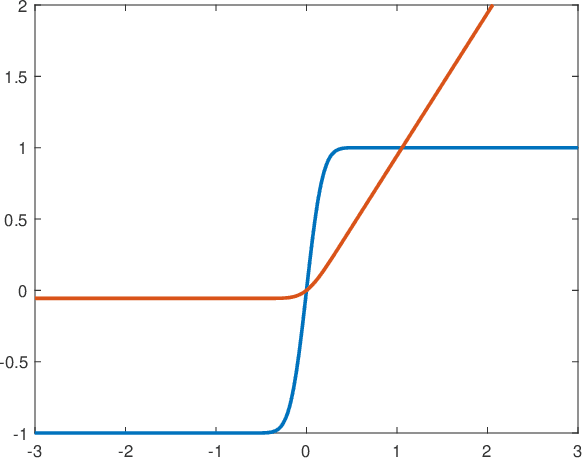

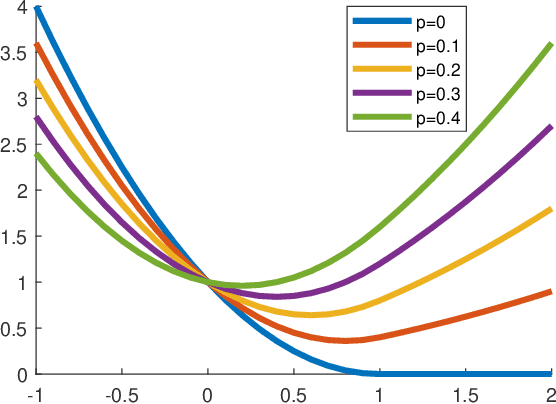

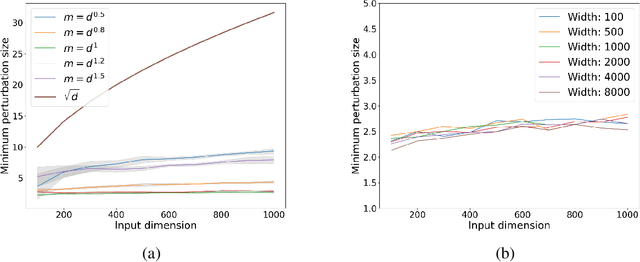

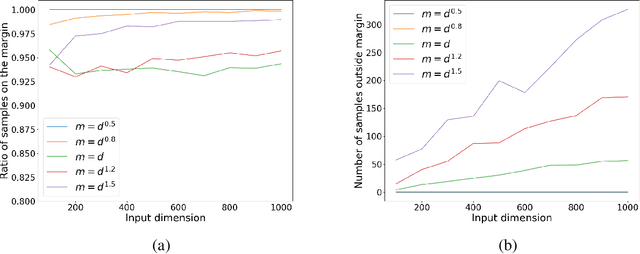

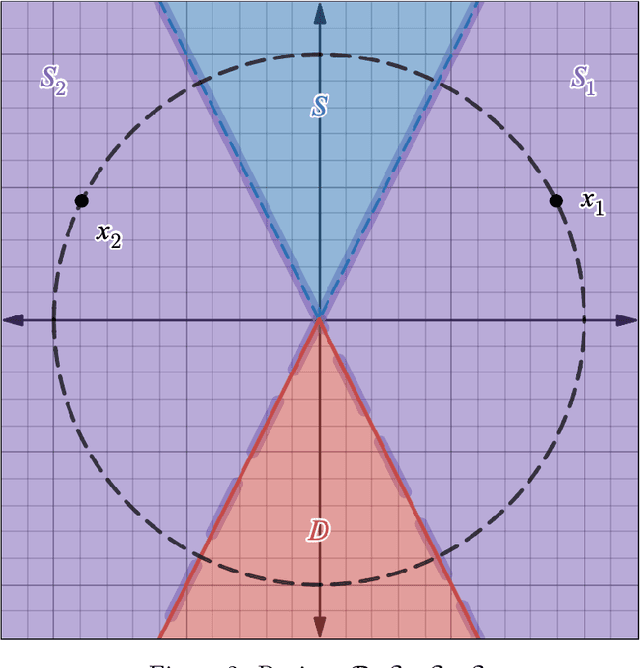

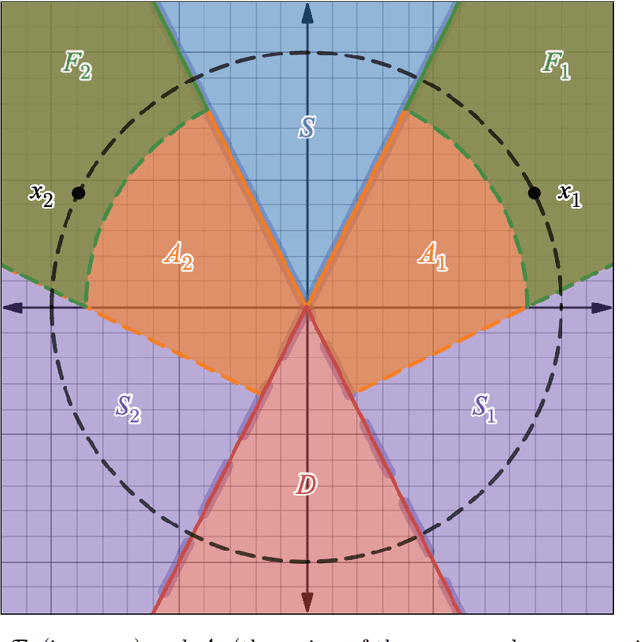

May 24, 2023

Abstract: Overparameterized neural networks (NNs) are observed to generalize well even when trained to perfectly fit noisy data. This phenomenon motivated a large body of work on "benign overfitting", where interpolating predictors achieve near-optimal performance. Recently, it was conjectured and empirically observed that the behavior of NNs is often better described as "tempered overfitting", where the performance is non-optimal yet also non-trivial, and degrades as a function of the noise level. However, a theoretical justification of this claim for non-linear NNs has been lacking so far. In this work, we provide several results that aim at bridging these complementary views. We study a simple classification setting with 2-layer ReLU NNs, and prove that under various assumptions, the type of overfitting transitions from tempered in the extreme case of one-dimensional data, to benign in high dimensions. Thus, we show that the input dimension plays a crucial role in the type of overfitting in this setting, which we also validate empirically for intermediate dimensions. Overall, our results shed light on the intricate connections between the dimension, sample size, architecture and training algorithm on the one hand, and the type of resulting overfitting on the other hand.

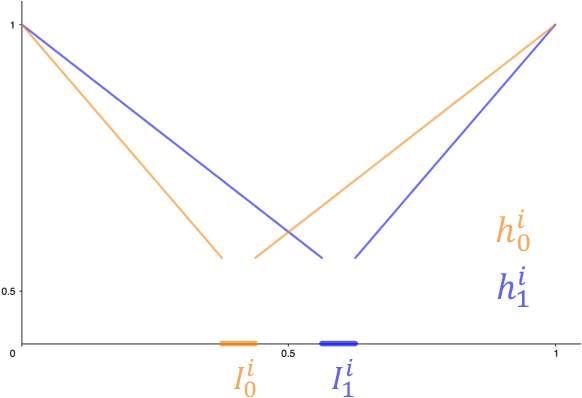

Deterministic Nonsmooth Nonconvex Optimization

Feb 16, 2023

Abstract: We study the complexity of optimizing nonsmooth nonconvex Lipschitz functions by producing $(\delta,\epsilon)$-stationary points. Several recent works have presented randomized algorithms that produce such points using $\tilde O(\delta^{-1}\epsilon^{-3})$ first-order oracle calls, independent of the dimension $d$. It has been an open problem as to whether a similar result can be obtained via a deterministic algorithm. We resolve this open problem, showing that randomization is necessary to obtain a dimension-free rate. In particular, we prove a lower bound of $\Omega(d)$ for any deterministic algorithm. Moreover, we show that unlike smooth or convex optimization, access to function values is required for any deterministic algorithm to halt within any finite time. On the other hand, we prove that if the function is even slightly smooth, then the dimension-free rate of $\tilde O(\delta^{-1}\epsilon^{-3})$ can be obtained by a deterministic algorithm with merely a logarithmic dependence on the smoothness parameter. Motivated by these findings, we turn to study the complexity of deterministically smoothing Lipschitz functions. Though there are efficient black-box randomized smoothings, we start by showing that no such deterministic procedure can smooth functions in a meaningful manner, resolving an open question. We then bypass this impossibility result for the structured case of ReLU neural networks. To that end, in a practical white-box setting in which the optimizer is granted access to the network's architecture, we propose a simple, dimension-free, deterministic smoothing that provably preserves $(\delta,\epsilon)$-stationary points. Our method applies to a variety of architectures of arbitrary depth, including ResNets and ConvNets. Combined with our algorithm, this yields the first deterministic dimension-free algorithm for optimizing ReLU networks, circumventing our lower bound.

On the Complexity of Finding Small Subgradients in Nonsmooth Optimization

Sep 21, 2022

Abstract: We study the oracle complexity of producing $(\delta,\epsilon)$-stationary points of Lipschitz functions, in the sense proposed by Zhang et al. [2020]. While there exist dimension-free randomized algorithms for producing such points within $\widetilde{O}(\delta^{-1}\epsilon^{-3})$ first-order oracle calls, we show that no dimension-free rate can be achieved by a deterministic algorithm. On the other hand, we point out that this rate can be derandomized for smooth functions with merely a logarithmic dependence on the smoothness parameter. Moreover, we establish several lower bounds for this task which hold for any randomized algorithm, with or without convexity. Finally, we show how the convergence rate of finding $(\delta,\epsilon)$-stationary points can be improved when the function is convex, a setting which we motivate by proving that in general no finite time algorithm can produce points with small subgradients even for convex functions.

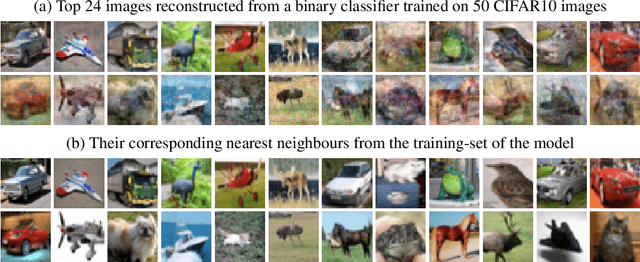

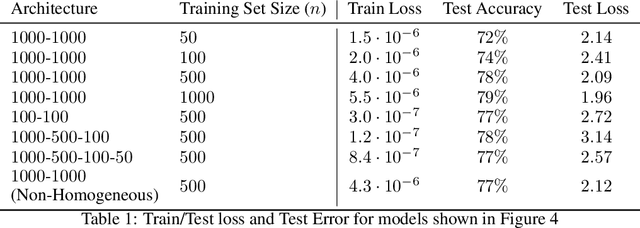

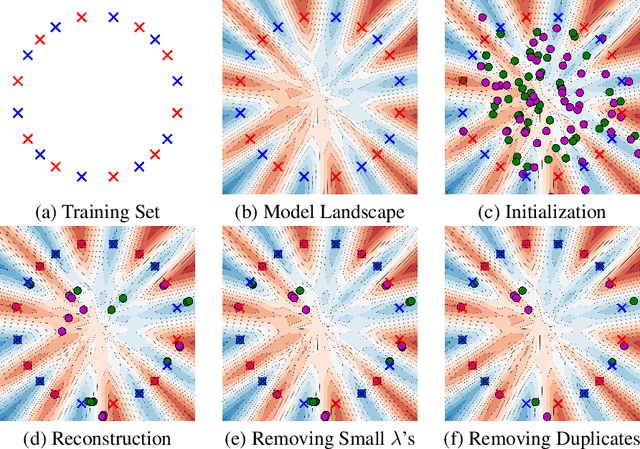

Reconstructing Training Data from Trained Neural Networks

Jun 15, 2022

Abstract: Understanding to what extent neural networks memorize training data is an intriguing question with practical and theoretical implications. In this paper we show that in some cases a significant fraction of the training data can in fact be reconstructed from the parameters of a trained neural network classifier. We propose a novel reconstruction scheme that stems from recent theoretical results about the implicit bias in training neural networks with gradient-based methods. To the best of our knowledge, our results are the first to show that reconstructing a large portion of the actual training samples from a trained neural network classifier is generally possible. This has negative implications for privacy, as it can be used as an attack for revealing sensitive training data. We demonstrate our method for binary MLP classifiers on a few standard computer vision datasets.

The Sample Complexity of One-Hidden-Layer Neural Networks

Feb 13, 2022

Abstract: We study norm-based uniform convergence bounds for neural networks, aiming at a tight understanding of how these are affected by the architecture and type of norm constraint, for the simple class of scalar-valued one-hidden-layer networks, and inputs bounded in Euclidean norm. We begin by proving that in general, controlling the spectral norm of the hidden layer weight matrix is insufficient to get uniform convergence guarantees (independent of the network width), while a stronger Frobenius norm control is sufficient, extending and improving on previous work. Motivated by the proof constructions, we identify and analyze two important settings where a mere spectral norm control turns out to be sufficient: First, when the network's activation functions are sufficiently smooth (with the result extending to deeper networks); and second, for certain types of convolutional networks. In the latter setting, we study how the sample complexity is additionally affected by parameters such as the amount of overlap between patches and the overall number of patches.

The Implicit Bias of Benign Overfitting

Feb 13, 2022

Abstract: The phenomenon of benign overfitting, where a predictor perfectly fits noisy training data while attaining low expected loss, has received much attention in recent years, but is still not fully understood beyond simple linear regression setups. In this paper, we show that for regression, benign overfitting is "biased" towards certain types of problems, in the sense that its existence on one learning problem precludes its existence on other learning problems. On the negative side, we use this to argue that one should not expect benign overfitting to occur in general, for several natural extensions of the plain linear regression problems studied so far. We then turn to classification problems, and show that the situation there is much more favorable. Specifically, we consider a model where an arbitrary input distribution of some fixed dimension $k$ is concatenated with a high-dimensional distribution, and prove that the max-margin predictor (to which gradient-based methods are known to converge in direction) is asymptotically biased towards minimizing the expected *squared hinge loss* w.r.t. the $k$-dimensional distribution. This allows us to reduce the question of benign overfitting in classification to the simpler question of whether this loss is a good surrogate for the misclassification error, and use it to show benign overfitting in some new settings.

Gradient Methods Provably Converge to Non-Robust Networks

Feb 09, 2022

Abstract: Despite a great deal of research, it is still unclear why neural networks are so susceptible to adversarial examples. In this work, we identify natural settings where depth-$2$ ReLU networks trained with gradient flow are provably non-robust (susceptible to small adversarial $\ell_2$-perturbations), even when robust networks that classify the training dataset correctly exist. Perhaps surprisingly, we show that the well-known implicit bias towards margin maximization induces bias towards non-robust networks, by proving that every network which satisfies the KKT conditions of the max-margin problem is non-robust.

Width is Less Important than Depth in ReLU Neural Networks

Feb 08, 2022

Abstract: We solve an open question from Lu et al. (2017), by showing that any target network with inputs in $\mathbb{R}^d$ can be approximated by a width $O(d)$ network (independent of the target network's architecture), whose number of parameters is essentially larger only by a linear factor. In light of previous depth separation theorems, which imply that a similar result cannot hold when the roles of width and depth are interchanged, it follows that depth plays a more significant role than width in the expressive power of neural networks. We extend our results to constructing networks with bounded weights, and to constructing networks with width at most $d+2$, which is close to the minimal possible width due to previous lower bounds. Both of these constructions cause an extra polynomial factor in the number of parameters over the target network. We also show an exact representation of wide and shallow networks using deep and narrow networks which, in certain cases, does not increase the number of parameters over the target network.

Implicit Regularization Towards Rank Minimization in ReLU Networks

Jan 30, 2022

Abstract: We study the conjectured relationship between the implicit regularization in neural networks, trained with gradient-based methods, and rank minimization of their weight matrices. Previously, it was proved that for linear networks (of depth 2 and vector-valued outputs), gradient flow (GF) w.r.t. the square loss acts as a rank minimization heuristic. However, understanding to what extent this generalizes to nonlinear networks is an open problem. In this paper, we focus on nonlinear ReLU networks, providing several new positive and negative results. On the negative side, we prove (and demonstrate empirically) that, unlike the linear case, GF on ReLU networks may no longer tend to minimize ranks, in a rather strong sense (even approximately, for "most" datasets of size 2). On the positive side, we reveal that ReLU networks of sufficient depth are provably biased towards low-rank solutions in several reasonable settings.
