Nicolas Langer

Uncertainty Modeling in Multimodal Speech Analysis Across the Psychosis Spectrum

Feb 25, 2025

Abstract:Capturing subtle speech disruptions across the psychosis spectrum is challenging because of the inherent variability in speech patterns. This variability reflects individual differences and the fluctuating nature of symptoms in both clinical and non-clinical populations. Accounting for uncertainty in speech data is essential for predicting symptom severity and improving diagnostic precision. Speech disruptions characteristic of psychosis appear across the spectrum, including in non-clinical individuals. We develop an uncertainty-aware model integrating acoustic and linguistic features to predict symptom severity and psychosis-related traits. Quantifying uncertainty in specific modalities allows the model to address speech variability, improving prediction accuracy. We analyzed speech data from 114 participants, including 32 individuals with early psychosis and 82 with low or high schizotypy, collected through structured interviews, semi-structured autobiographical tasks, and narrative-driven interactions in German. The model improved prediction accuracy, reducing RMSE and achieving an F1-score of 83% with ECE = 4.5e-2, showing robust performance across different interaction contexts. Uncertainty estimation improved model interpretability by identifying reliability differences in speech markers such as pitch variability, fluency disruptions, and spectral instability. The model dynamically adjusted to task structures, weighting acoustic features more in structured settings and linguistic features in unstructured contexts. This approach strengthens early detection, personalized assessment, and clinical decision-making in psychosis-spectrum research.
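
The abstract reports calibration as ECE (expected calibration error) but does not say how it was computed. As a rough illustration only, the sketch below uses the common binned definition: predictions are grouped into equal-width confidence bins, and the gap between mean confidence and accuracy in each bin is averaged, weighted by bin size. The function name, the choice of 10 bins, and the toy inputs are assumptions for this example, not details from the paper.

```python
from typing import Sequence


def expected_calibration_error(
    confidences: Sequence[float],
    correct: Sequence[bool],
    n_bins: int = 10,
) -> float:
    """Binned ECE: weighted mean of |accuracy - mean confidence| over bins.

    Hypothetical helper for illustration. Bins are equal-width on [0, 1];
    a confidence of exactly 1.0 is placed in the last bin.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        raise ValueError("at least one prediction is required")

    conf_sum = [0.0] * n_bins   # summed confidence per bin
    hit_sum = [0.0] * n_bins    # number of correct predictions per bin
    count = [0] * n_bins        # number of predictions per bin

    for conf, hit in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)
        conf_sum[idx] += conf
        hit_sum[idx] += 1.0 if hit else 0.0
        count[idx] += 1

    ece = 0.0
    for c_sum, h_sum, cnt in zip(conf_sum, hit_sum, count):
        if cnt == 0:
            continue  # empty bins contribute nothing
        accuracy = h_sum / cnt
        mean_conf = c_sum / cnt
        ece += (cnt / n) * abs(accuracy - mean_conf)
    return ece


# Toy data (made up): four predictions at 0.95 confidence, two at 0.65.
toy_conf = [0.95, 0.95, 0.95, 0.95, 0.65, 0.65]
toy_hit = [True, True, True, False, True, False]
toy_ece = expected_calibration_error(toy_conf, toy_hit)
```

Under this definition, a value such as 4.5e-2 would mean that stated confidence and observed accuracy differ by about 4.5 percentage points on average.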

Linguistic Features Extracted by GPT-4 Improve Alzheimer's Disease Detection based on Spontaneous Speech

Dec 20, 2024

Abstract:Alzheimer's Disease (AD) is a significant and growing public health concern. Investigating alterations in speech and language patterns offers a promising path towards cost-effective and non-invasive early detection of AD on a large scale. Large language models (LLMs), such as GPT, have enabled powerful new possibilities for semantic text analysis. In this study, we leverage GPT-4 to extract five semantic features from transcripts of spontaneous patient speech. The features capture known symptoms of AD, but they are difficult to quantify effectively using traditional methods of computational linguistics. We demonstrate the clinical significance of these features and further validate one of them ("Word-Finding Difficulties") against a proxy measure and human raters. When combined with established linguistic features and a Random Forest classifier, the GPT-derived features significantly improve the detection of AD. Our approach proves effective for both manually transcribed and automatically generated transcripts, representing a novel and impactful use of recent advancements in LLMs for AD speech analysis.

SPEED: Scalable Preprocessing of EEG Data for Self-Supervised Learning

Aug 15, 2024

Abstract:Electroencephalography (EEG) research typically focuses on tasks with narrowly defined objectives, but recent studies are expanding into the use of unlabeled data within larger models, aiming for a broader range of applications. This addresses a critical challenge in EEG research. For example, Kostas et al. (2021) show that self-supervised learning (SSL) outperforms traditional supervised methods. Given the high noise levels in EEG data, we argue that further improvements are possible with additional preprocessing. Current preprocessing methods often fail to efficiently manage the large data volumes required for SSL, due to their lack of optimization, reliance on subjective manual corrections, and validation processes or inflexible protocols that limit SSL. We propose a Python-based EEG preprocessing pipeline optimized for self-supervised learning, designed to efficiently process large-scale data. This optimization not only stabilizes self-supervised training but also enhances performance on downstream tasks compared to training with raw data.

Parallel Log Spectra index: a quality metric in large scale resting EEG preprocessing

Oct 18, 2023

Abstract:Toward large-scale electrophysiology data analysis, many preprocessing pipelines have been developed to reject artifacts as a prerequisite step before downstream analysis. A mainstay of these pipelines is the data-driven approach of Independent Component Analysis (ICA). Nevertheless, little effort has been devoted to preprocessing quality control. This paper addresses the issue, motivated by our observation that after running an ICA-based preprocessing pipeline, some subjects showed approximately Parallel multichannel Log power Spectra (PaLOS); that is, the multichannel power spectra are proportional to each other. First, we describe the presence of PaLOS and its implications for connectivity analysis using a real instance and simulation; second, we build its mathematical model and propose the PaLOS index (PaLOSi), based on common principal component analysis, to detect its presence; third, we test the performance of PaLOSi on 30094 cases of EEG from 5 databases. The results show that 1) PaLOS implies a sole source, which is physiologically implausible; 2) PaLOSi can detect the excessive elimination of brain components and is robust to channel number, electrode layout, reference, and other factors; 3) PaLOSi can output channel-wise and frequency-wise indices to support in-depth checks. This paper presents the PaLOS issue in the quality control step after running a preprocessing pipeline, and the proposed PaLOSi may serve as a novel data quality metric in large-scale automatic preprocessing.
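
To make the idea of "parallel" log spectra concrete: if every channel's power spectrum is a scaled copy of one shared spectrum, the channels-by-frequencies power matrix has rank one, and the log spectra differ only by a constant offset per channel. The sketch below measures how close a power matrix is to rank one using a plain singular value decomposition. This is a simplified stand-in for illustration and is not the PaLOSi defined in the paper, which is based on common principal component analysis. The function name and the synthetic spectra are assumptions.

```python
import numpy as np


def rank1_energy_fraction(power_spectra: np.ndarray) -> float:
    """Fraction of squared Frobenius norm captured by the best rank-1 fit.

    Hypothetical helper, NOT the paper's PaLOSi. `power_spectra` has shape
    (n_channels, n_freqs). A value near 1.0 means the channel spectra are
    nearly proportional to each other, i.e. nearly parallel in log scale.
    """
    p = np.asarray(power_spectra, dtype=float)
    if p.ndim != 2:
        raise ValueError("expected a 2-D array of shape (n_channels, n_freqs)")
    singular_values = np.linalg.svd(p, compute_uv=False)
    total = float(np.sum(singular_values ** 2))
    if total == 0.0:
        raise ValueError("power spectra are all zero")
    return float(singular_values[0] ** 2 / total)


# Synthetic example (made-up numbers): a shared 1/f-like spectrum.
freqs = np.arange(1.0, 41.0)                 # 1..40 Hz
shared = 1.0 / freqs                         # one underlying spectrum
gains = np.array([0.5, 1.0, 2.0, 3.5])       # per-channel scaling

parallel = np.outer(gains, shared)           # proportional spectra
rng = np.random.default_rng(0)
varied = rng.random((4, freqs.size)) + 0.1   # unrelated spectra per channel

parallel_score = rank1_energy_fraction(parallel)
varied_score = rank1_energy_fraction(varied)
```

In this toy setting the proportional spectra score essentially 1.0, while the unrelated spectra score noticeably lower, which mirrors the abstract's point that PaLOS is consistent with a single source.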

An Interpretable and Attention-based Method for Gaze Estimation Using Electroencephalography

Aug 09, 2023

Abstract:Eye movements can reveal valuable insights into various aspects of human mental processes, physical well-being, and actions. Recently, several datasets have been made available that simultaneously record EEG activity and eye movements. This has triggered the development of various methods to predict gaze direction based on brain activity. However, most of these methods lack interpretability, which limits their technology acceptance. In this paper, we leverage a large dataset of simultaneously measured Electroencephalography (EEG) and eye-tracking data, proposing an interpretable model for gaze estimation from EEG data. More specifically, we present a novel attention-based deep learning framework for EEG signal analysis, which allows the network to focus on the most relevant information in the signal and discard problematic channels. Additionally, we provide a comprehensive evaluation of the presented framework, demonstrating its superiority over current methods in terms of accuracy and robustness. Finally, the study presents visualizations that explain the results of the analysis and highlights the potential of attention mechanisms for improving the efficiency and effectiveness of EEG data analysis in a variety of applications.

Electrode Clustering and Bandpass Analysis of EEG Data for Gaze Estimation

Feb 19, 2023

Abstract:In this study, we validate the findings of previously published papers, showing the feasibility of Electroencephalography (EEG)-based gaze estimation. Moreover, we extend previous research by demonstrating that with only a slight drop in model performance, we can significantly reduce the number of electrodes, indicating that a high-density, expensive EEG cap is not necessary for the purposes of EEG-based eye tracking. Using data-driven approaches, we establish which electrode clusters impact gaze estimation and how the different types of EEG data preprocessing affect the models' performance. Finally, we also inspect which recorded frequencies are most important for the defined tasks.

Detection of ADHD based on Eye Movements during Natural Viewing

Jul 14, 2022

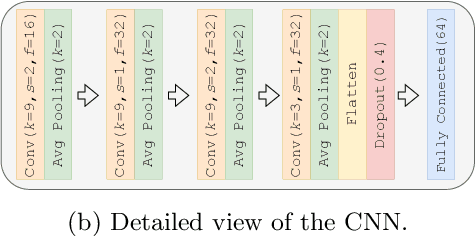

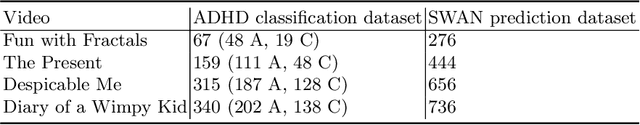

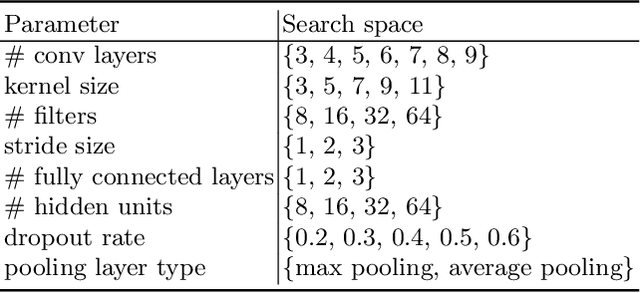

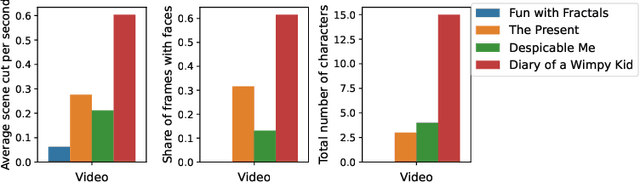

Abstract:Attention-deficit/hyperactivity disorder (ADHD) is a neurodevelopmental disorder that is highly prevalent and requires clinical specialists to diagnose. It is known that an individual's viewing behavior, reflected in their eye movements, is directly related to attentional mechanisms and higher-order cognitive processes. We therefore explore whether ADHD can be detected based on recorded eye movements together with information about the video stimulus in a free-viewing task. To this end, we develop an end-to-end deep learning-based sequence model which we pre-train on a related task for which more data are available. We find that the method is in fact able to detect ADHD and outperforms relevant baselines. We investigate the relevance of the input features in an ablation study. Interestingly, we find that the model's performance is closely related to the content of the video, which provides insights for future experimental designs.

A Deep Learning Approach for the Segmentation of Electroencephalography Data in Eye Tracking Applications

Jun 17, 2022

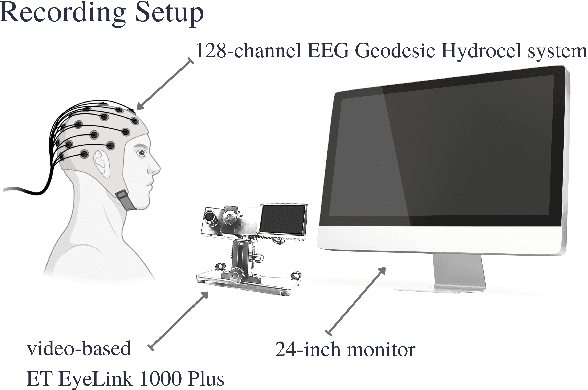

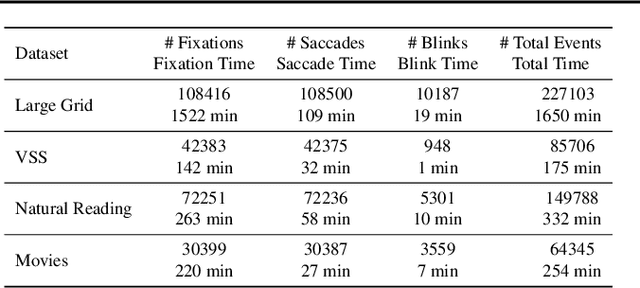

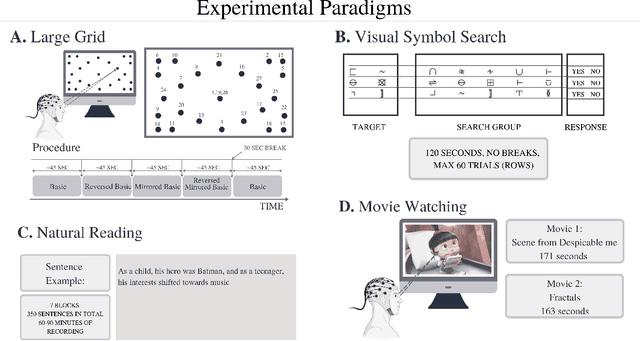

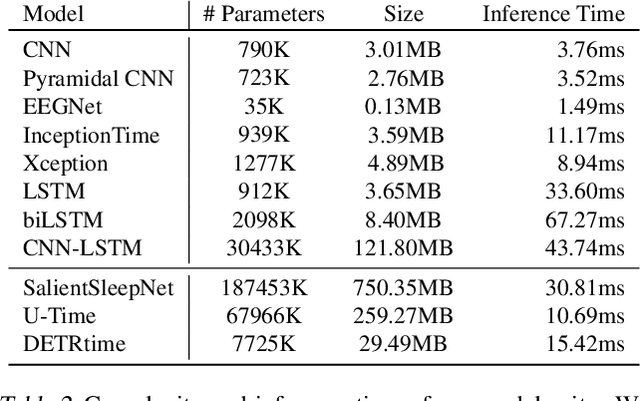

Abstract:The collection of eye gaze information provides a window into many critical aspects of human cognition, health and behaviour. Additionally, many neuroscientific studies complement the behavioural information gained from eye tracking with the high temporal resolution and neurophysiological markers provided by electroencephalography (EEG). One of the essential eye-tracking software processing steps is the segmentation of the continuous data stream into events relevant to eye-tracking applications, such as saccades, fixations, and blinks. Here, we introduce DETRtime, a novel framework for time-series segmentation that creates ocular event detectors that do not require an additionally recorded eye-tracking modality and rely solely on EEG data. Our end-to-end deep learning-based framework brings recent advances in Computer Vision to the forefront of the time-series segmentation of EEG data. DETRtime achieves state-of-the-art performance in ocular event detection across diverse eye-tracking experiment paradigms. In addition, we provide evidence that our model generalizes well in the task of EEG sleep stage segmentation.

Reading Task Classification Using EEG and Eye-Tracking Data

Dec 12, 2021

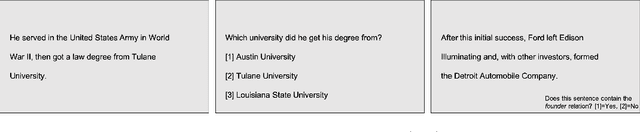

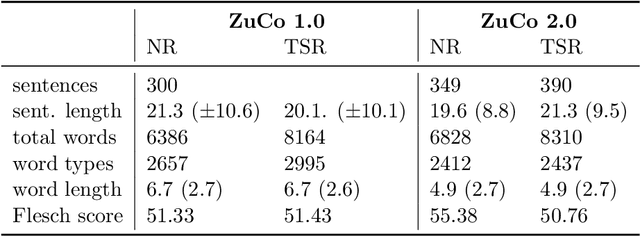

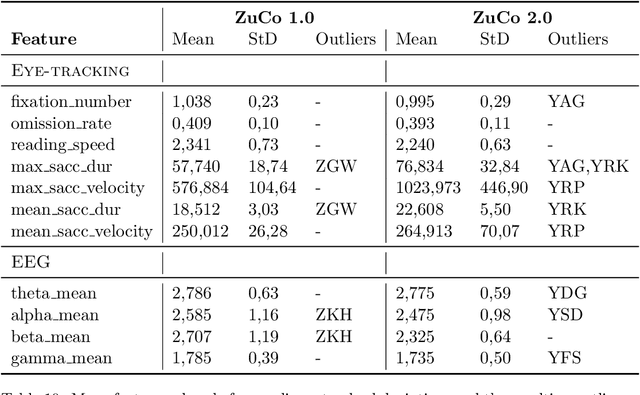

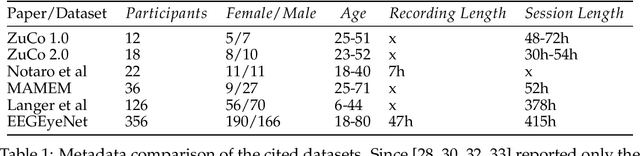

Abstract:The Zurich Cognitive Language Processing Corpus (ZuCo) provides eye-tracking and EEG signals from two reading paradigms, normal reading and task-specific reading. We analyze whether machine learning methods are able to classify these two tasks using eye-tracking and EEG features. We implement models with aggregated sentence-level features as well as fine-grained word-level features. We test the models in within-subject and cross-subject evaluation scenarios. All models are tested on the ZuCo 1.0 and ZuCo 2.0 data subsets, which are characterized by differing recording procedures and thus allow for different levels of generalizability. Finally, we provide a series of control experiments to analyze the results in more detail.

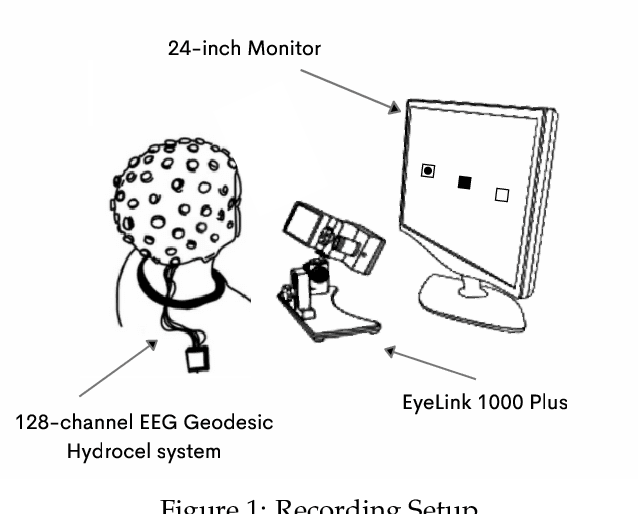

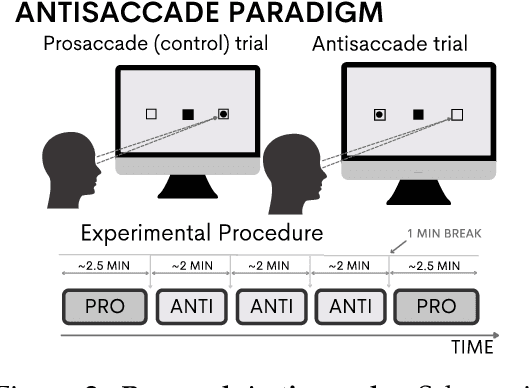

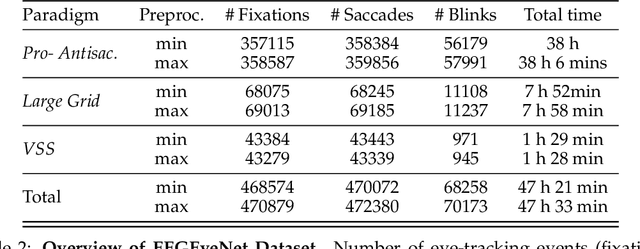

EEGEyeNet: a Simultaneous Electroencephalography and Eye-tracking Dataset and Benchmark for Eye Movement Prediction

Nov 10, 2021

Abstract:We present a new dataset and benchmark with the goal of advancing research in the intersection of brain activities and eye movements. Our dataset, EEGEyeNet, consists of simultaneous Electroencephalography (EEG) and Eye-tracking (ET) recordings from 356 different subjects collected from three different experimental paradigms. Using this dataset, we also propose a benchmark to evaluate gaze prediction from EEG measurements. The benchmark consists of three tasks with an increasing level of difficulty: left-right, angle-amplitude and absolute position. We run extensive experiments on this benchmark in order to provide solid baselines, both based on classical machine learning models and on large neural networks. We release our complete code and data and provide a simple and easy-to-use interface to evaluate new methods.
