Evading Deepfake-Image Detectors with White- and Black-Box Attacks

Apr 01, 2020 · Nicholas Carlini, Hany Farid

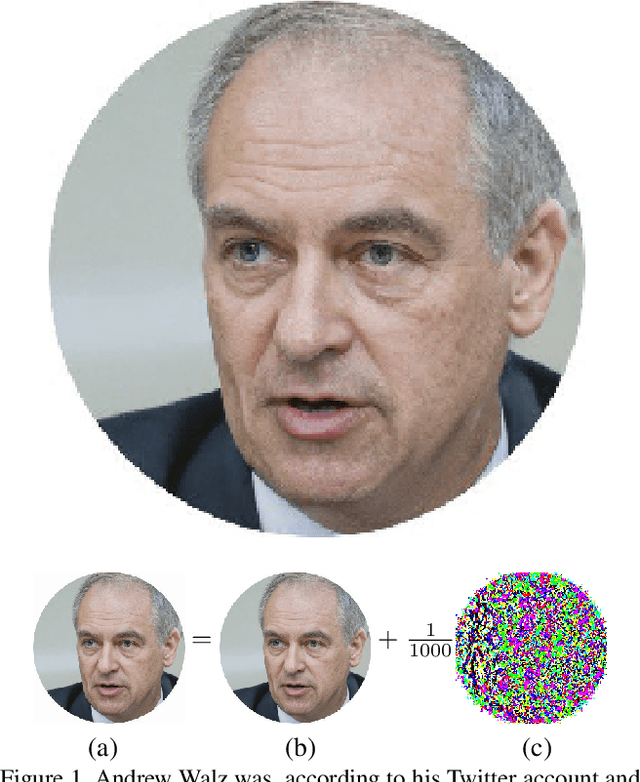

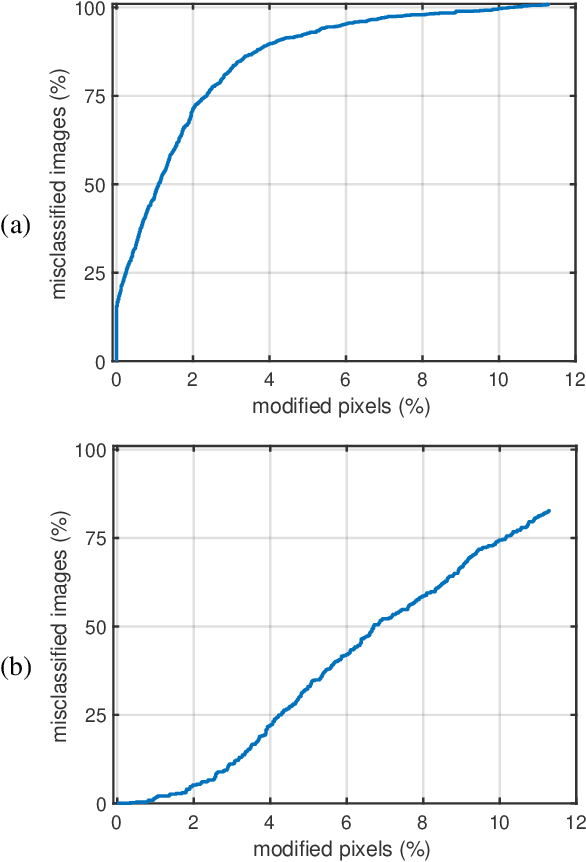

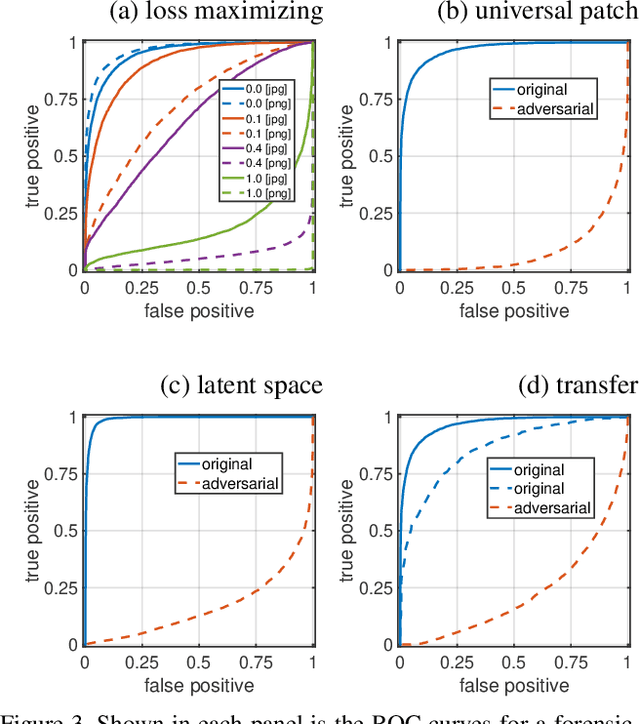

It is now possible to synthesize highly realistic images of people who don't exist. Such content has, for example, been implicated in the creation of fraudulent social-media profiles responsible for disinformation campaigns. Significant efforts are, therefore, being deployed to detect synthetically generated content. One popular forensic approach trains a neural network to distinguish real from synthetic content. We show that such forensic classifiers are vulnerable to a range of attacks that reduce the classifier to near-0% accuracy. We develop five attack case studies on a state-of-the-art classifier that achieves an area under the ROC curve (AUC) of 0.95 on almost all existing image generators, when only trained on one generator. With full access to the classifier, we can flip the lowest bit of each pixel in an image to reduce the classifier's AUC to 0.0005; perturb 1% of the image area to reduce the classifier's AUC to 0.08; or add a single noise pattern in the synthesizer's latent space to reduce the classifier's AUC to 0.17. We also develop a black-box attack that, with no access to the target classifier, reduces the AUC to 0.22. These attacks reveal significant vulnerabilities of certain image-forensic classifiers.
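To make the AUC figures in the abstract above easier to read, here is a minimal sketch of AUC computed as a pairwise ranking statistic. The function name `auc` and the toy scores are illustrative assumptions and are not taken from the paper.

```python
def auc(scores_pos, scores_neg):
    """Fraction of (positive, negative) pairs where the positive scores higher.

    Ties count as half a win. This equals the area under the ROC curve.
    """
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Made-up detector scores; higher means "more likely synthetic".
synthetic_scores = [0.9, 0.8, 0.7]
real_scores = [0.1, 0.2, 0.75]

print(auc(synthetic_scores, real_scores))
```

An AUC of 0.5 corresponds to random ranking, so values far below 0.5 mean the detector systematically ranks synthetic images as more likely real than genuine ones.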

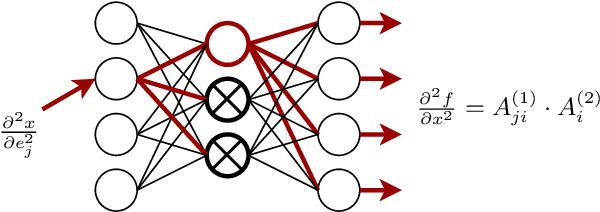

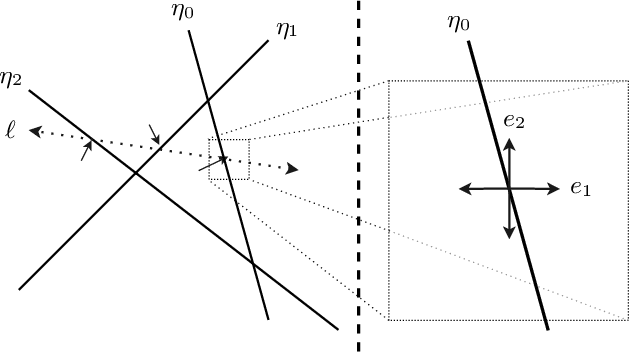

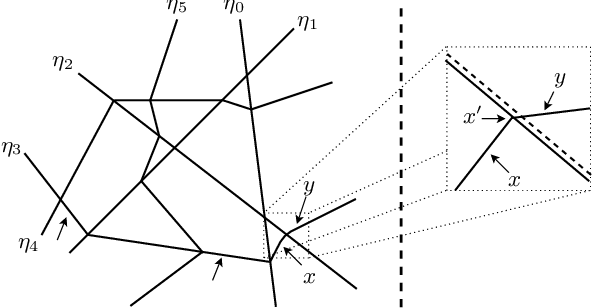

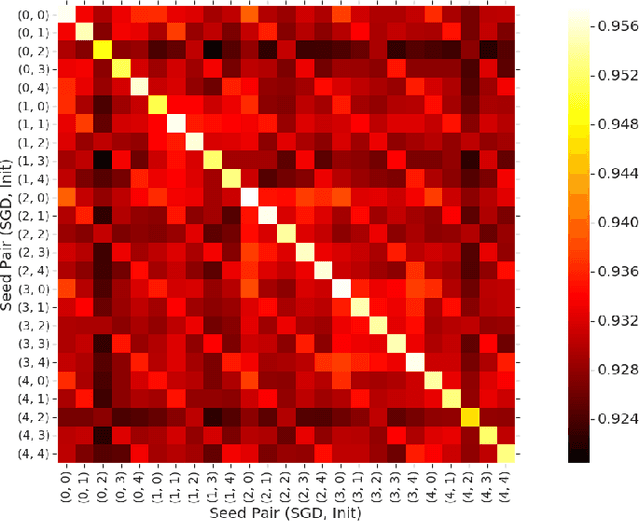

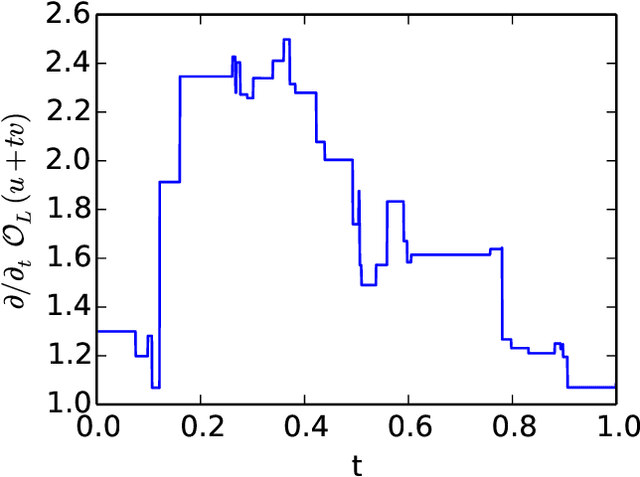

Cryptanalytic Extraction of Neural Network Models

Mar 10, 2020 · Nicholas Carlini, Matthew Jagielski, Ilya Mironov

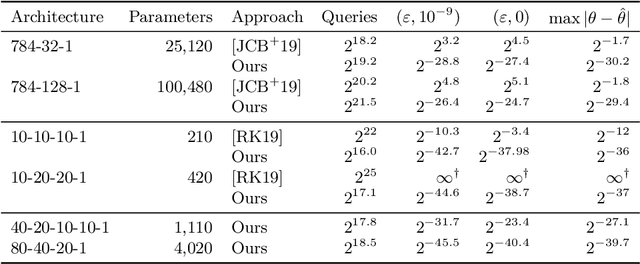

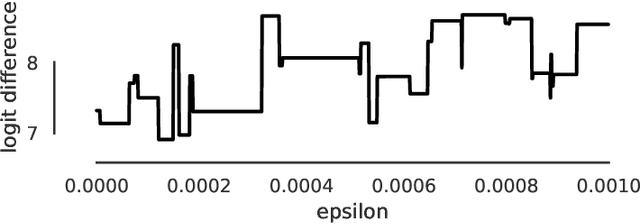

We argue that the machine learning problem of model extraction is actually a cryptanalytic problem in disguise, and should be studied as such. Given oracle access to a neural network, we introduce a differential attack that can efficiently steal the parameters of the remote model up to floating point precision. Our attack relies on the fact that ReLU neural networks are piecewise linear functions, and that queries at the critical points reveal information about the model parameters. We evaluate our attack on multiple neural network models and extract models that are 2^20 times more precise and require 100x fewer queries than prior work. For example, we extract a 100,000 parameter neural network trained on the MNIST digit recognition task with 2^21.5 queries in under an hour, such that the extracted model agrees with the oracle on all inputs up to a worst-case error of 2^-25, or a model with 4,000 parameters in 2^18.5 queries with worst-case error of 2^-40.4.
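The piecewise-linearity observation in the abstract above can be illustrated with a toy example. The sketch below is a simplified illustration and not the paper's algorithm: it treats a tiny hand-written ReLU network (`oracle`, a hypothetical stand-in for a remote model) as a black box, locates a critical point by bisection, and reads off the change in slope across it. All names, weights, and tolerances are assumptions chosen for the demo.

```python
def relu(z):
    return max(0.0, z)


def oracle(x):
    # Hypothetical "remote" model: scalar input, two hidden ReLU units.
    # The attacker only calls this function and never reads its weights.
    return 2.0 * relu(1.0 * x - 0.3) - 1.0 * relu(-2.0 * x - 1.0) + 0.5


def is_linear(f, a, b, tol=1e-12):
    # A linear function's midpoint value equals the average of its endpoints.
    mid = 0.5 * (a + b)
    return abs(f(mid) - 0.5 * (f(a) + f(b))) < tol


def find_critical_point(f, a, b, eps=1e-6):
    """Bisect [a, b] down to a point where f changes slope (None if f looks linear)."""
    if is_linear(f, a, b):
        return None
    while b - a > eps:
        mid = 0.5 * (a + b)
        if not is_linear(f, a, mid):
            b = mid
        elif not is_linear(f, mid, b):
            a = mid
        else:
            return mid  # both halves are linear, so the kink sits at mid
    return 0.5 * (a + b)


t = find_critical_point(oracle, 0.0, 1.0)

# Finite-difference slopes on either side of the critical point.
h = 1e-3
slope_left = (oracle(t - h) - oracle(t - 2 * h)) / h
slope_right = (oracle(t + 2 * h) - oracle(t + h)) / h
slope_jump = slope_right - slope_left

print(t, slope_jump)
```

In this toy network the slope jump equals the product of the incoming and outgoing weights of the hidden unit that switches on at the critical point, which is the sense in which queries near critical points leak parameter information.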

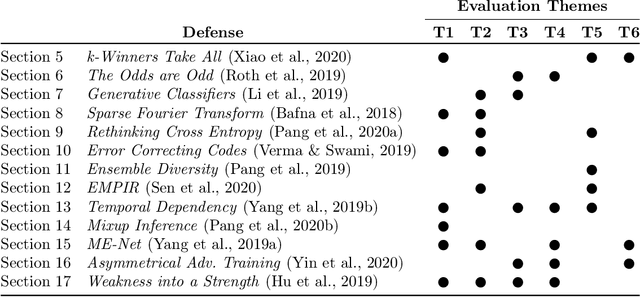

On Adaptive Attacks to Adversarial Example Defenses

Feb 19, 2020 · Florian Tramèr, Nicholas Carlini, Wieland Brendel, Aleksander Madry

Adaptive attacks have (rightfully) become the de facto standard for evaluating defenses to adversarial examples. We find, however, that typical adaptive evaluations are incomplete. We demonstrate that thirteen defenses recently published at ICLR, ICML and NeurIPS (and chosen for illustrative and pedagogical purposes) can be circumvented despite attempting to perform evaluations using adaptive attacks. While prior evaluation papers focused mainly on the end result, showing that a defense was ineffective, this paper focuses on laying out the methodology and the approach necessary to perform an adaptive attack. We hope that these analyses will serve as guidance on how to properly perform adaptive attacks against defenses to adversarial examples, and thus will allow the community to make further progress in building more robust models.

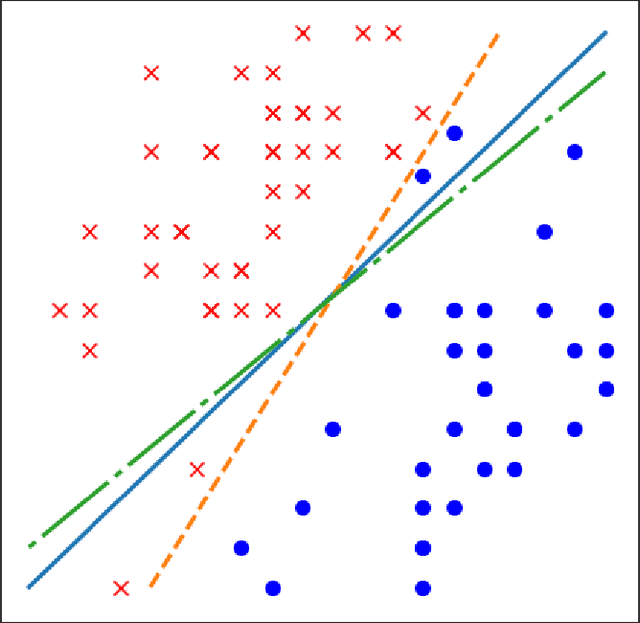

Fundamental Tradeoffs between Invariance and Sensitivity to Adversarial Perturbations

Feb 11, 2020 · Florian Tramèr, Jens Behrmann, Nicholas Carlini, Nicolas Papernot, Jörn-Henrik Jacobsen

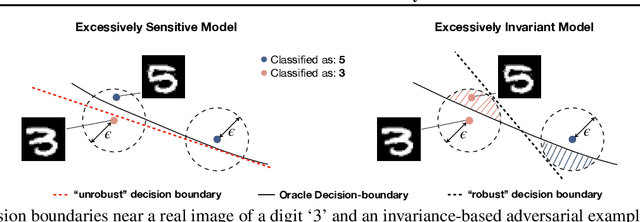

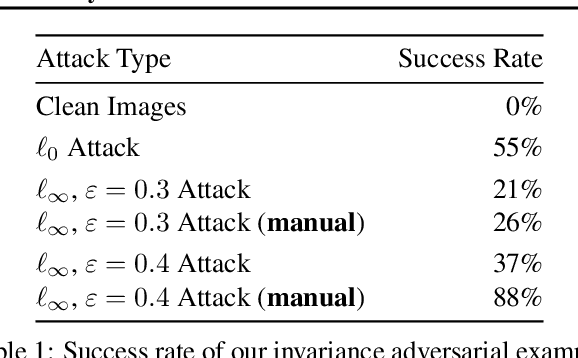

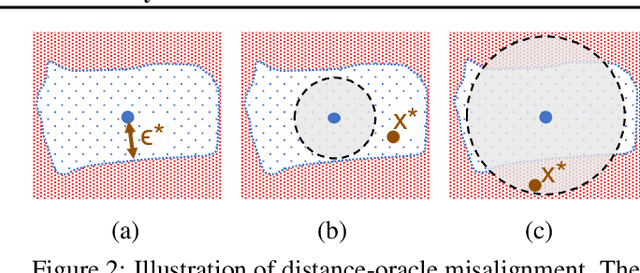

Adversarial examples are malicious inputs crafted to induce misclassification. Commonly studied sensitivity-based adversarial examples introduce semantically-small changes to an input that result in a different model prediction. This paper studies a complementary failure mode, invariance-based adversarial examples, that introduce minimal semantic changes that modify an input's true label yet preserve the model's prediction. We demonstrate fundamental tradeoffs between these two types of adversarial examples. We show that defenses against sensitivity-based attacks actively harm a model's accuracy on invariance-based attacks, and that new approaches are needed to resist both attack types. In particular, we break state-of-the-art adversarially-trained and certifiably-robust models by generating small perturbations that the models are (provably) robust to, yet that change an input's class according to human labelers. Finally, we formally show that the existence of excessively invariant classifiers arises from the presence of overly-robust predictive features in standard datasets.

FixMatch: Simplifying Semi-Supervised Learning with Consistency and Confidence

Jan 21, 2020 · Kihyuk Sohn, David Berthelot, Chun-Liang Li, Zizhao Zhang, Nicholas Carlini, Ekin D. Cubuk, Alex Kurakin, Han Zhang, Colin Raffel

Semi-supervised learning (SSL) provides an effective means of leveraging unlabeled data to improve a model's performance. In this paper, we demonstrate the power of a simple combination of two common SSL methods: consistency regularization and pseudo-labeling. Our algorithm, FixMatch, first generates pseudo-labels using the model's predictions on weakly-augmented unlabeled images. For a given image, the pseudo-label is only retained if the model produces a high-confidence prediction. The model is then trained to predict the pseudo-label when fed a strongly-augmented version of the same image. Despite its simplicity, we show that FixMatch achieves state-of-the-art performance across a variety of standard semi-supervised learning benchmarks, including 94.93% accuracy on CIFAR-10 with 250 labels and 88.61% accuracy with 40 labels (just 4 labels per class). Since FixMatch bears many similarities to existing SSL methods that achieve worse performance, we carry out an extensive ablation study to tease apart the experimental factors that are most important to FixMatch's success. We make our code available at https://github.com/google-research/fixmatch.
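The pseudo-labeling step described in the abstract above can be sketched in a few lines of NumPy. This is a minimal illustration and not the released implementation: the function names, the 0.95 confidence threshold, and the choice to average over the whole unlabeled batch are assumptions made for the example, and the logits are made up.

```python
import numpy as np


def log_softmax(logits):
    # Numerically stable log-softmax over the class axis.
    z = logits - logits.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))


def unlabeled_loss(logits_weak, logits_strong, threshold=0.95):
    """Cross-entropy on strong views against confident pseudo-labels from weak views."""
    probs_weak = np.exp(log_softmax(logits_weak))
    pseudo_labels = probs_weak.argmax(axis=1)
    # Keep a pseudo-label only when the weak-view prediction is confident enough.
    mask = probs_weak.max(axis=1) >= threshold
    rows = np.arange(len(pseudo_labels))
    ce = -log_softmax(logits_strong)[rows, pseudo_labels]
    # Assumption: masked-out examples contribute zero and the mean is over the batch.
    return float((ce * mask).mean()), mask


# Two unlabeled images, three classes (toy logits).
logits_weak = np.array([[10.0, 0.0, 0.0],    # confident: pseudo-label 0 is kept
                        [0.1, 0.0, 0.0]])    # not confident: dropped
logits_strong = np.array([[0.0, 0.0, 0.0],
                          [5.0, 0.0, 0.0]])

loss, mask = unlabeled_loss(logits_weak, logits_strong)
print(loss, mask)
```

In a real training loop the weak-view predictions would be treated as constants (no gradient flows through the pseudo-label), and this term would be added to the ordinary supervised loss on labeled data.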

ReMixMatch: Semi-Supervised Learning with Distribution Alignment and Augmentation Anchoring

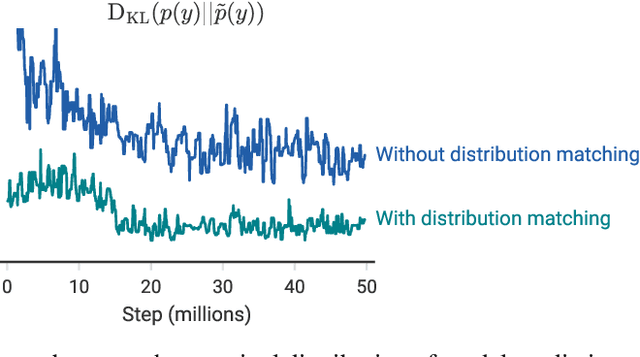

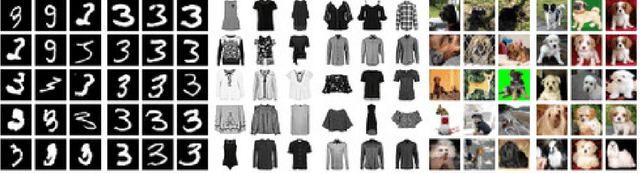

Nov 21, 2019 · David Berthelot, Nicholas Carlini, Ekin D. Cubuk, Alex Kurakin, Kihyuk Sohn, Han Zhang, Colin Raffel

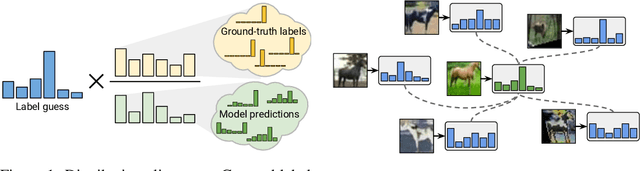

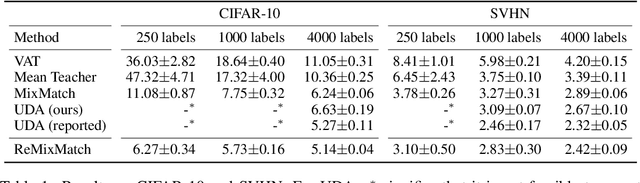

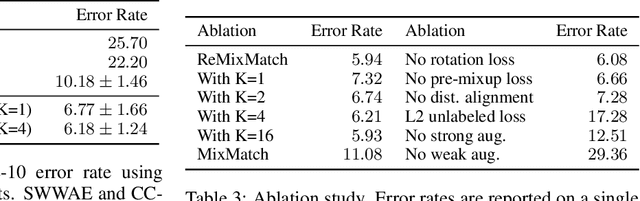

We improve the recently-proposed "MixMatch" semi-supervised learning algorithm by introducing two new techniques: distribution alignment and augmentation anchoring. Distribution alignment encourages the marginal distribution of predictions on unlabeled data to be close to the marginal distribution of ground-truth labels. Augmentation anchoring feeds multiple strongly augmented versions of an input into the model and encourages each output to be close to the prediction for a weakly-augmented version of the same input. To produce strong augmentations, we propose a variant of AutoAugment which learns the augmentation policy while the model is being trained. Our new algorithm, dubbed ReMixMatch, is significantly more data-efficient than prior work, requiring between $5\times$ and $16\times$ less data to reach the same accuracy. For example, on CIFAR-10 with 250 labeled examples we reach $93.73\%$ accuracy (compared to MixMatch's accuracy of $93.58\%$ with $4{,}000$ examples) and a median accuracy of $84.92\%$ with just four labels per class. We make our code and data open-source at https://github.com/google-research/remixmatch.
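One way to realize the distribution alignment idea described in the abstract above is to rescale each prediction by the ratio of the label marginal to a running average of the model's predictions, then renormalize. The sketch below assumes that formulation. The function name `align_distribution` and the toy numbers are hypothetical.

```python
import numpy as np


def align_distribution(pred, label_marginal, running_pred_mean):
    """Rescale a predicted class distribution toward the label marginal.

    pred:              model prediction(s) on unlabeled data, shape (..., classes)
    label_marginal:    class frequencies among the labeled examples
    running_pred_mean: running average of the model's unlabeled predictions
    """
    scaled = pred * label_marginal / running_pred_mean
    return scaled / scaled.sum(axis=-1, keepdims=True)


label_marginal = np.array([0.5, 0.5])      # balanced classes (toy value)
running_pred_mean = np.array([0.8, 0.2])   # model currently over-predicts class 0
pred = np.array([0.6, 0.4])

aligned = align_distribution(pred, label_marginal, running_pred_mean)
print(aligned)
```

Because the model over-predicts class 0 on average in this toy setup, the aligned prediction shifts probability mass toward class 1.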

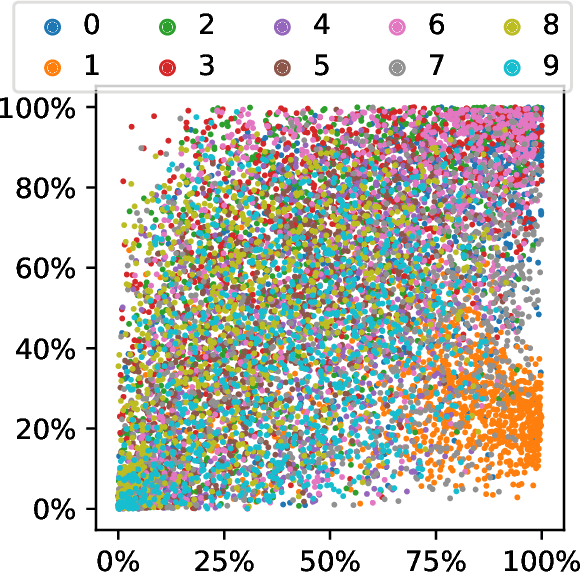

Distribution Density, Tails, and Outliers in Machine Learning: Metrics and Applications

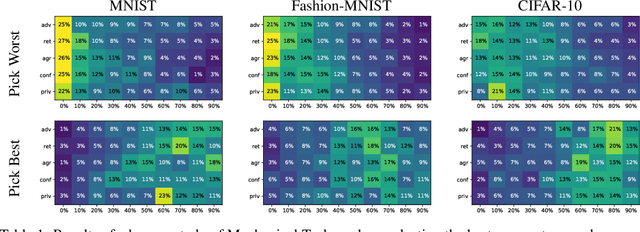

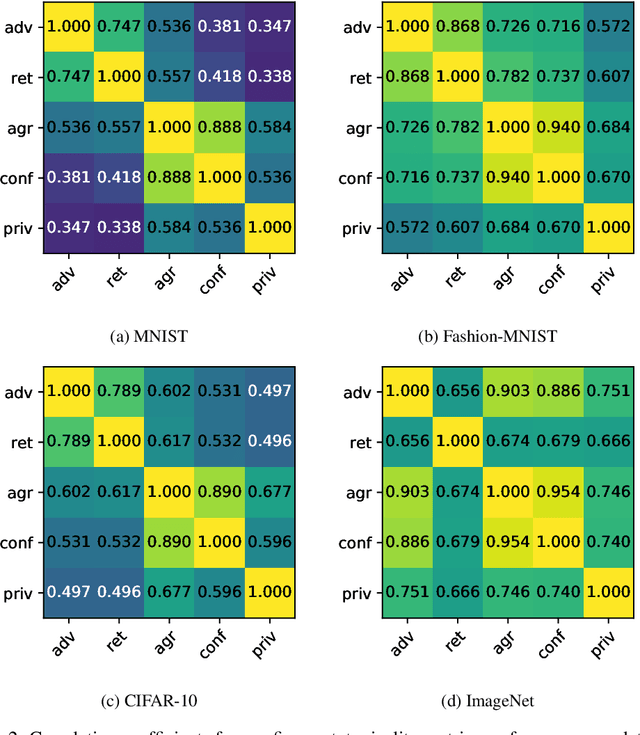

Oct 29, 2019 · Nicholas Carlini, Úlfar Erlingsson, Nicolas Papernot

We develop techniques to quantify the degree to which a given (training or testing) example is an outlier in the underlying distribution. We evaluate five methods to score examples in a dataset by how well-represented the examples are, for different plausible definitions of "well-represented", and apply these to four common datasets: MNIST, Fashion-MNIST, CIFAR-10, and ImageNet. Despite being independent approaches, we find all five are highly correlated, suggesting that the notion of being well-represented can be quantified. Among other uses, we find these methods can be combined to identify (a) prototypical examples (that match human expectations); (b) memorized training examples; and, (c) uncommon submodes of the dataset. Further, we show how we can utilize our metrics to determine an improved ordering for curriculum learning, and impact adversarial robustness. We release all metric values on training and test sets we studied.

High-Fidelity Extraction of Neural Network Models

Sep 03, 2019 · Matthew Jagielski, Nicholas Carlini, David Berthelot, Alex Kurakin, Nicolas Papernot

Model extraction allows an adversary to steal a copy of a remotely deployed machine learning model given access to its predictions. Adversaries are motivated to mount such attacks for a variety of reasons, ranging from reducing their computational costs, to eliminating the need to collect expensive training data, to obtaining a copy of a model in order to find adversarial examples, perform membership inference, or model inversion attacks. In this paper, we taxonomize the space of model extraction attacks around two objectives: *accuracy*, i.e., performing well on the underlying learning task, and *fidelity*, i.e., matching the predictions of the remote victim classifier on any input. To extract a high-accuracy model, we develop a learning-based attack which exploits the victim to supervise the training of an extracted model. Through analytical and empirical arguments, we then explain the inherent limitations that prevent any learning-based strategy from extracting a truly high-fidelity model, i.e., extracting a functionally-equivalent model whose predictions are identical to those of the victim model on all possible inputs. Addressing these limitations, we expand on prior work to develop the first practical functionally-equivalent extraction attack for direct extraction (i.e., without training) of a model's weights. We perform experiments both on academic datasets and a state-of-the-art image classifier trained with 1 billion proprietary images. In addition to broadening the scope of model extraction research, our work demonstrates the practicality of model extraction attacks against production-grade systems.
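The difference between the two objectives named in the abstract above can be shown with a small worked example. The sketch below measures both on a finite evaluation set, which only approximates the paper's "any input" notion of fidelity. The helper names and the toy predictions are assumptions.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the ground-truth labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def fidelity(extracted_predictions, victim_predictions):
    """Fraction of inputs on which the extracted model agrees with the victim."""
    agree = sum(e == v for e, v in zip(extracted_predictions, victim_predictions))
    return agree / len(victim_predictions)


# Toy evaluation set of five inputs (values are made up).
labels    = [0, 1, 1, 0, 1]
victim    = [0, 1, 0, 0, 1]   # the victim errs on the third input
extracted = [0, 1, 1, 0, 0]   # the copy errs on the fifth input instead

print(accuracy(victim, labels), accuracy(extracted, labels), fidelity(extracted, victim))
```

Here the extracted model is exactly as accurate as the victim, yet the two disagree on two of five inputs, so matching accuracy does not imply high fidelity.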

Stateful Detection of Black-Box Adversarial Attacks

Jul 12, 2019 · Steven Chen, Nicholas Carlini, David Wagner

The problem of adversarial examples, evasion attacks on machine learning classifiers, has proven extremely difficult to solve. This is true even when, as is the case in many practical settings, the classifier is hosted as a remote service and so the adversary does not have direct access to the model parameters. This paper argues that in such settings, defenders have a much larger space of actions than has been previously explored. Specifically, we deviate from the implicit assumption made by prior work that a defense must be a stateless function that operates on individual examples, and explore the possibility for stateful defenses. To begin, we develop a defense designed to detect the process of adversarial example generation. By keeping a history of the past queries, a defender can try to identify when a sequence of queries appears to be for the purpose of generating an adversarial example. We then introduce query blinding, a new class of attacks designed to bypass defenses that rely on such a detection approach. We believe that expanding the study of adversarial examples from stateless classifiers to stateful systems is not only more realistic for many black-box settings, but also gives the defender a much-needed advantage in responding to the adversary.
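The history-based detection idea in the abstract above can be sketched as a small monitor that flags a query when it lies unusually close to earlier ones. This is an illustrative sketch only: the class name `QueryMonitor`, the use of raw L2 distance, the mean distance to the `k` nearest past queries, and the threshold value are all assumptions and not the paper's configuration.

```python
import numpy as np


class QueryMonitor:
    """Flags a query whose k nearest past queries are, on average, very close to it."""

    def __init__(self, k=3, threshold=0.1):
        self.k = k
        self.threshold = threshold
        self.history = []

    def observe(self, query):
        q = np.asarray(query, dtype=float).ravel()
        suspicious = False
        if len(self.history) >= self.k:
            dists = np.linalg.norm(np.stack(self.history) - q, axis=1)
            knn_mean = np.sort(dists)[: self.k].mean()
            suspicious = bool(knn_mean < self.threshold)
        self.history.append(q)  # every query is remembered, flagged or not
        return suspicious


monitor = QueryMonitor(k=3, threshold=0.1)

# Unrelated queries that are far apart from each other.
benign = [[0, 0], [5, 5], [10, 10], [15, 15]]
benign_flags = [monitor.observe(q) for q in benign]

# A run of near-duplicate queries, as an iterative black-box attack would issue.
probes = [[1.00, 1], [1.01, 1], [1.02, 1], [1.03, 1]]
probe_flags = [monitor.observe(q) for q in probes]

print(benign_flags, probe_flags)
```

A stateless per-example defense would treat each of the four probes independently. The monitor flags the fourth because three nearly identical queries already sit in its history.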

A critique of the DeepSec Platform for Security Analysis of Deep Learning Models

May 17, 2019 · Nicholas Carlini

At IEEE S&P 2019, the paper "DeepSec: A Uniform Platform for Security Analysis of Deep Learning Model" aims to "systematically evaluate the existing adversarial attack and defense methods." While the paper's goals are laudable, it fails to achieve them and presents results that are fundamentally flawed and misleading. We explain the flaws in the DeepSec work, along with how its analysis fails to meaningfully evaluate the various attacks and defenses. Specifically, DeepSec (1) evaluates each defense obliviously, using attacks crafted against undefended models; (2) evaluates attacks and defenses using incorrect implementations that greatly under-estimate their effectiveness; (3) evaluates the robustness of each defense as an average, not based on the most effective attack against that defense; (4) performs several statistical analyses incorrectly and fails to report variance; and, (5) as a result of these errors draws invalid conclusions and makes sweeping generalizations.
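Point (3) in the abstract above can be illustrated with a small worked example contrasting an average over attacks with worst-case reporting. The table of outcomes below is invented for illustration, and the per-example worst case is an additional, stricter metric included here as an assumption and is not something the abstract itself specifies.

```python
import numpy as np

# correct[a, i] is True when the defended model still classifies example i
# correctly under attack a. Three attacks, four test examples (made-up data).
correct = np.array([
    [1, 1, 1, 1],   # attack 0: weak, never succeeds
    [1, 0, 1, 0],   # attack 1
    [0, 1, 1, 0],   # attack 2
], dtype=bool)

per_attack_accuracy = correct.mean(axis=1)

# Averaging over attacks lets weak attacks inflate the reported robustness.
average_over_attacks = float(per_attack_accuracy.mean())

# Robustness against the single most effective attack.
strongest_attack = float(per_attack_accuracy.min())

# Stricter still: an example counts only if it survives every attack.
per_example_worst_case = float(correct.all(axis=0).mean())

print(average_over_attacks, strongest_attack, per_example_worst_case)
```

In this toy table the averaged figure is about 0.67, the most effective single attack leaves 0.5, and only 0.25 of examples survive all three attacks.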
