Myeonghun Jeong

ASVspoof 5: Design, Collection and Validation of Resources for Spoofing, Deepfake, and Adversarial Attack Detection Using Crowdsourced Speech

Feb 13, 2025

Abstract: ASVspoof 5 is the fifth edition in a series of challenges which promote the study of speech spoofing and deepfake attacks as well as the design of detection solutions. We introduce the ASVspoof 5 database which is generated in crowdsourced fashion from data collected in diverse acoustic conditions (cf. studio-quality data for earlier ASVspoof databases) and from ~2,000 speakers (cf. ~100 earlier). The database contains attacks generated with 32 different algorithms, also crowdsourced, and optimised to varying degrees using new surrogate detection models. Among them are attacks generated with a mix of legacy and contemporary text-to-speech synthesis and voice conversion models, in addition to adversarial attacks which are incorporated for the first time. ASVspoof 5 protocols comprise seven speaker-disjoint partitions. They include two distinct partitions for the training of different sets of attack models, two more for the development and evaluation of surrogate detection models, and then three additional partitions which comprise the ASVspoof 5 training, development and evaluation sets. An auxiliary set of data collected from an additional 30k speakers can also be used to train speaker encoders for the implementation of attack algorithms. Also described herein is an experimental validation of the new ASVspoof 5 database using a set of automatic speaker verification and spoof/deepfake baseline detectors. With the exception of protocols and tools for the generation of spoofed/deepfake speech, the resources described in this paper, already used by participants of the ASVspoof 5 challenge in 2024, are now all freely available to the community.

SegINR: Segment-wise Implicit Neural Representation for Sequence Alignment in Neural Text-to-Speech

Oct 07, 2024

Abstract: We present SegINR, a novel approach to neural Text-to-Speech (TTS) that addresses sequence alignment without relying on an auxiliary duration predictor and complex autoregressive (AR) or non-autoregressive (NAR) frame-level sequence modeling. SegINR simplifies the process by converting text sequences directly into frame-level features. It leverages an optimal text encoder to extract embeddings, transforming each into a segment of frame-level features using a conditional implicit neural representation (INR). This method, named segment-wise INR (SegINR), models temporal dynamics within each segment and autonomously defines segment boundaries, reducing computational costs. We integrate SegINR into a two-stage TTS framework, using it for semantic token prediction. Our experiments in zero-shot adaptive TTS scenarios demonstrate that SegINR outperforms conventional methods in speech quality with computational efficiency.

High Fidelity Text-to-Speech Via Discrete Tokens Using Token Transducer and Group Masked Language Model

Jun 25, 2024

Abstract: We propose a novel two-stage text-to-speech (TTS) framework with two types of discrete tokens, i.e., semantic and acoustic tokens, for high-fidelity speech synthesis. It features two core components: the Interpreting module, which processes text and a speech prompt into semantic tokens focusing on linguistic content and alignment, and the Speaking module, which captures the timbre of the target voice to generate acoustic tokens from semantic tokens, enriching speech reconstruction. The Interpreting stage employs a transducer for its robustness in aligning text to speech. In contrast, the Speaking stage utilizes a Conformer-based architecture integrated with a Grouped Masked Language Model (G-MLM) to boost computational efficiency. Our experiments verify that this structure surpasses conventional models in the zero-shot scenario in terms of speech quality and speaker similarity.

MakeSinger: A Semi-Supervised Training Method for Data-Efficient Singing Voice Synthesis via Classifier-free Diffusion Guidance

Jun 10, 2024

Abstract: In this paper, we propose MakeSinger, a semi-supervised training method for singing voice synthesis (SVS) via classifier-free diffusion guidance. The challenge in SVS lies in the costly process of gathering aligned sets of text, pitch, and audio data. MakeSinger enables the training of the diffusion-based SVS model from any speech and singing voice data regardless of its labeling, thereby enhancing the quality of generated voices with a large amount of unlabeled data. At inference, our novel dual guiding mechanism gives text and pitch guidance on the reverse diffusion step by estimating the score of masked input. Experimental results show that the model trained in a semi-supervised manner outperforms other baselines trained only on the labeled data in terms of pronunciation, pitch accuracy and overall quality. Furthermore, we demonstrate that by adding Text-to-Speech (TTS) data in training, the model can synthesize the singing voices of TTS speakers even without their singing voices.
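
The dual guidance idea in the MakeSinger abstract can be illustrated with the usual classifier-free guidance combination rule. The sketch below is only an illustration, not the authors' implementation: the function name `dual_guided_noise`, the weights `w_text` and `w_pitch`, and the additive two-condition form are all assumptions, since the abstract does not state the exact formula.

```python
def dual_guided_noise(eps_uncond, eps_text, eps_pitch, w_text=1.0, w_pitch=1.0):
    """Combine noise estimates in the style of classifier-free guidance.

    eps_uncond: estimate with both text and pitch masked out
    eps_text:   estimate conditioned on text only (pitch masked)
    eps_pitch:  estimate conditioned on pitch only (text masked)
    All three are equal-length lists of floats. The additive combination
    below is an assumed form, not a formula taken from the paper.
    """
    return [
        u + w_text * (t - u) + w_pitch * (p - u)
        for u, t, p in zip(eps_uncond, eps_text, eps_pitch)
    ]


# With both weights at zero the unconditional estimate is returned unchanged.
baseline = dual_guided_noise([0.0, 1.0], [1.0, 1.0], [2.0, 3.0], w_text=0.0, w_pitch=0.0)

# Larger weights push the estimate further along each conditional direction.
guided = dual_guided_noise([0.0], [1.0], [2.0], w_text=2.0, w_pitch=0.5)
```

Keeping separate weights for text and pitch lets each condition be strengthened or weakened independently at inference time, which is the practical appeal of a dual guiding scheme.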

Utilizing Neural Transducers for Two-Stage Text-to-Speech via Semantic Token Prediction

Jan 03, 2024

Abstract: We propose a novel text-to-speech (TTS) framework centered around a neural transducer. Our approach divides the whole TTS pipeline into semantic-level sequence-to-sequence (seq2seq) modeling and fine-grained acoustic modeling stages, utilizing discrete semantic tokens obtained from wav2vec2.0 embeddings. For robust and efficient alignment modeling, we employ a neural transducer named token transducer for the semantic token prediction, benefiting from its hard monotonic alignment constraints. Subsequently, a non-autoregressive (NAR) speech generator efficiently synthesizes waveforms from these semantic tokens. Additionally, a reference speech controls temporal dynamics and acoustic conditions at each stage. This decoupled framework reduces the training complexity of TTS while allowing each stage to focus on semantic and acoustic modeling. Our experimental results on zero-shot adaptive TTS demonstrate that our model surpasses the baseline in terms of speech quality and speaker similarity, both objectively and subjectively. We also delve into the inference speed and prosody control capabilities of our approach, highlighting the potential of neural transducers in TTS frameworks.
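
The hard monotonic alignment that a transducer imposes can be seen in a generic greedy decoding loop. This is a toy sketch of standard transducer-style decoding, not the token transducer from the paper: `greedy_transducer_decode`, `toy_predict`, the `BLANK` id and the per-position cap are hypothetical names and choices made for illustration.

```python
BLANK = 0  # hypothetical id for the "advance to the next text position" symbol


def greedy_transducer_decode(num_text_positions, predict, max_tokens_per_position=10):
    """Greedy transducer-style decoding over text positions.

    `predict(pos, history)` returns either BLANK or a semantic token id.
    Emitting BLANK moves on to the next text position, so the recorded
    alignment can only move forward (it is monotonic by construction).
    """
    tokens, alignment = [], []
    for pos in range(num_text_positions):
        for _ in range(max_tokens_per_position):  # cap guards against endless emission
            symbol = predict(pos, tokens)
            if symbol == BLANK:
                break
            tokens.append(symbol)
            alignment.append(pos)
    return tokens, alignment


def toy_predict(pos, history):
    """Toy stand-in for a trained model: emits a fixed number of tokens per position."""
    durations = [2, 1, 3]
    emitted_here = len(history) - sum(durations[:pos])
    return 10 + pos if emitted_here < durations[pos] else BLANK


tokens, alignment = greedy_transducer_decode(3, toy_predict)
```

Here `alignment` records which text position produced each output token; because the position index never decreases, no separate duration predictor or attention alignment is needed in this toy setting.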

Efficient Parallel Audio Generation using Group Masked Language Modeling

Jan 02, 2024

Abstract: We present a fast and high-quality codec language model for parallel audio generation. While SoundStorm, a state-of-the-art parallel audio generation model, accelerates inference speed compared to autoregressive models, it still suffers from slow inference due to iterative sampling. To resolve this problem, we propose Group-Masked Language Modeling (G-MLM) and Group Iterative Parallel Decoding (G-IPD) for efficient parallel audio generation. Both the training and sampling schemes enable the model to synthesize high-quality audio with a small number of iterations by effectively modeling the group-wise conditional dependencies. In addition, our model employs a cross-attention-based architecture to capture the speaker style of the prompt voice and improves computational efficiency. Experimental results demonstrate that our proposed model outperforms the baselines in prompt-based audio generation.

Transduce and Speak: Neural Transducer for Text-to-Speech with Semantic Token Prediction

Nov 08, 2023

Abstract: We introduce a text-to-speech (TTS) framework based on a neural transducer. We use discretized semantic tokens acquired from wav2vec2.0 embeddings, which makes it easy to adopt a neural transducer for the TTS framework and benefit from its monotonic alignment constraints. The proposed model first generates aligned semantic tokens using the neural transducer, then synthesizes a speech sample from the semantic tokens using a non-autoregressive (NAR) speech generator. This decoupled framework alleviates the training complexity of TTS and allows each stage to focus on 1) linguistic and alignment modeling and 2) fine-grained acoustic modeling, respectively. Experimental results on zero-shot adaptive TTS show that the proposed model exceeds the baselines in speech quality and speaker similarity via objective and subjective measures. We also investigate the inference speed and prosody controllability of our proposed model, showing the potential of the neural transducer for TTS frameworks.

Towards single integrated spoofing-aware speaker verification embeddings

Jun 01, 2023

Abstract: This study aims to develop single integrated spoofing-aware speaker verification (SASV) embeddings that satisfy two requirements. First, they should reject non-target speakers' inputs as well as target speakers' spoofed inputs. Second, they should demonstrate competitive performance compared to the fusion of automatic speaker verification (ASV) and countermeasure (CM) embeddings, which outperformed single embedding solutions by a large margin in the SASV2022 challenge. Our analysis attributes the inferior performance of single SASV embeddings to an insufficient amount of training data and the distinct nature of the ASV and CM tasks. To this end, we propose a novel framework that includes multi-stage training and a combination of loss functions. Copy synthesis, combined with several vocoders, is also exploited to address the lack of spoofed data. Experimental results show dramatic improvements, achieving a SASV-EER of 1.06% on the evaluation protocol of the SASV2022 challenge.
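
As a rough illustration of the SASV-EER metric quoted above, the sketch below computes an equal error rate in which target trials are the positive class and both non-target and spoofed trials are pooled as negatives. The function names and the simple threshold sweep are assumptions for illustration; official challenge scoring tools may interpolate between operating points and give slightly different values.

```python
def compute_eer(positive_scores, negative_scores):
    """Approximate equal error rate via a sweep over observed score thresholds.

    A trial is accepted when its score is >= threshold. The operating point
    where false acceptance and false rejection rates are closest is reported
    as their average (no interpolation between thresholds).
    """
    thresholds = sorted(set(positive_scores) | set(negative_scores))
    best_gap, eer = float("inf"), 1.0
    for th in thresholds:
        frr = sum(s < th for s in positive_scores) / len(positive_scores)
        far = sum(s >= th for s in negative_scores) / len(negative_scores)
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return eer


def sasv_eer(target_scores, nontarget_scores, spoof_scores):
    """SASV-style EER: only bona fide target trials count as positives."""
    return compute_eer(target_scores, list(nontarget_scores) + list(spoof_scores))


# Made-up scores, for illustration only.
example = sasv_eer(
    target_scores=[0.9, 0.8, 0.7, 0.2],
    nontarget_scores=[0.1, 0.3],
    spoof_scores=[0.4, 0.75],
)
```

Pooling spoofed and non-target trials into one negative class is what forces a single embedding to handle both speaker mismatch and spoofing at once.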

SNAC: Speaker-normalized affine coupling layer in flow-based architecture for zero-shot multi-speaker text-to-speech

Nov 30, 2022

Abstract: Zero-shot multi-speaker text-to-speech (ZSM-TTS) models aim to generate a speech sample with the voice characteristics of an unseen speaker. The main challenge of ZSM-TTS is to increase the overall speaker similarity for unseen speakers. One of the most successful speaker conditioning methods for flow-based multi-speaker text-to-speech (TTS) models is to utilize functions which predict the scale and bias parameters of the affine coupling layers according to the given speaker embedding vector. In this letter, we improve on the previous speaker conditioning method by introducing a speaker-normalized affine coupling (SNAC) layer which allows for unseen speaker speech synthesis in a zero-shot manner by leveraging a normalization-based conditioning technique. The newly designed coupling layer explicitly normalizes the input by the parameters predicted from a speaker embedding vector while training, enabling an inverse process of denormalizing for a new speaker embedding at inference. The proposed conditioning scheme yields state-of-the-art performance in terms of speech quality and speaker similarity in a ZSM-TTS setting.
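
The normalize-then-denormalize idea behind the SNAC layer can be sketched with plain element-wise statistics. This is a simplified illustration under stated assumptions, not the layer from the paper: `predict_stats` is a hypothetical stand-in for the learned network that maps a speaker embedding to a mean and log standard deviation, and the coupling-layer split of the input is omitted.

```python
import math


def predict_stats(speaker_embedding):
    """Hypothetical stand-in for a learned predictor of (mean, log_std)."""
    mean = [0.5 * e for e in speaker_embedding]
    log_std = [0.1 * e for e in speaker_embedding]
    return mean, log_std


def speaker_normalize(x, mean, log_std):
    """Training direction: remove speaker-dependent shift and scale."""
    return [(xi - m) / math.exp(s) for xi, m, s in zip(x, mean, log_std)]


def speaker_denormalize(z, mean, log_std):
    """Inference direction: re-apply shift and scale for a (possibly new) speaker."""
    return [zi * math.exp(s) + m for zi, m, s in zip(z, mean, log_std)]


x = [1.0, -2.0, 0.5]
seen_speaker = [0.2, -0.4, 1.0]
new_speaker = [1.5, 0.3, -0.7]

z = speaker_normalize(x, *predict_stats(seen_speaker))
x_same = speaker_denormalize(z, *predict_stats(seen_speaker))  # recovers x
x_new = speaker_denormalize(z, *predict_stats(new_speaker))    # same content, new statistics
```

Because the two functions are exact inverses for a fixed embedding, the operation stays invertible as a flow requires, while swapping the embedding at inference changes only the speaker-dependent statistics.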

Adversarial Speaker-Consistency Learning Using Untranscribed Speech Data for Zero-Shot Multi-Speaker Text-to-Speech

Oct 12, 2022

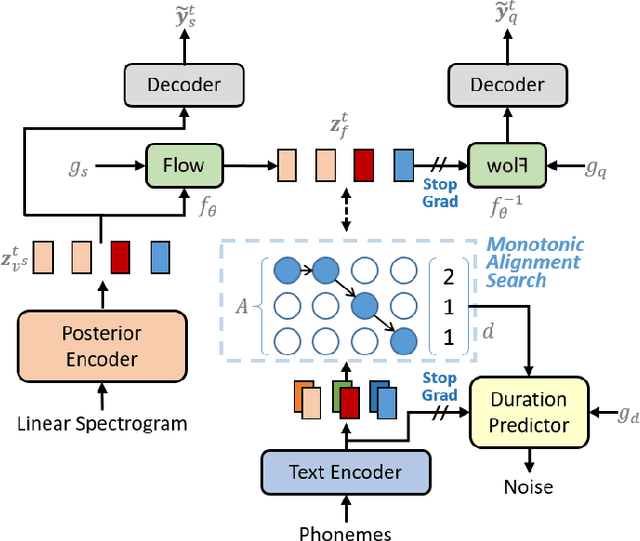

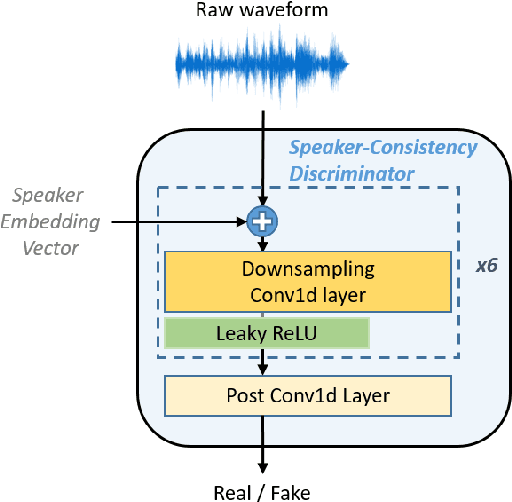

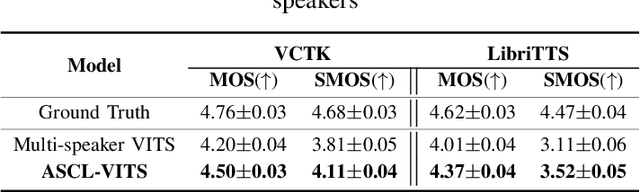

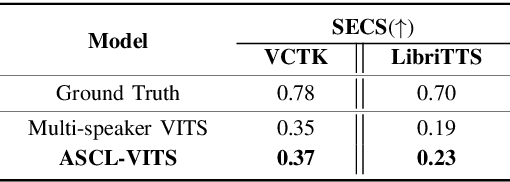

Abstract: Several recently proposed text-to-speech (TTS) models have succeeded in generating speech samples with human-level quality in single-speaker and multi-speaker TTS scenarios with a set of pre-defined speakers. However, synthesizing a new speaker's voice from a single reference audio, commonly known as zero-shot multi-speaker text-to-speech (ZSM-TTS), is still a very challenging task. The main challenge of ZSM-TTS is the speaker domain shift problem that arises when generating speech for a new speaker. To mitigate this problem, we propose adversarial speaker-consistency learning (ASCL). The proposed method first generates additional speech for a query speaker using external untranscribed datasets at each training iteration. Then, the model learns to consistently generate a speech sample of the same speaker as the corresponding speaker embedding vector by employing an adversarial learning scheme. The experimental results show that the proposed method is effective compared to the baseline in terms of quality and speaker similarity in ZSM-TTS.
