Moi Hoon Yap

R-MNet: A Perceptual Adversarial Network for Image Inpainting

Aug 11, 2020

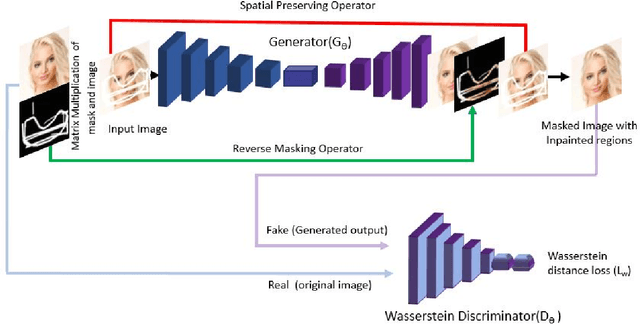

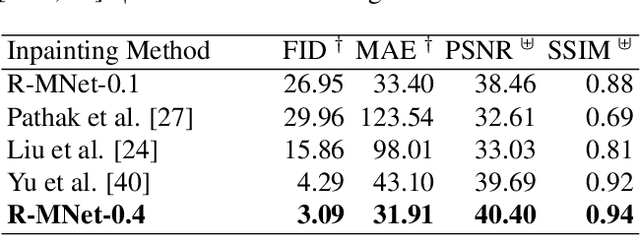

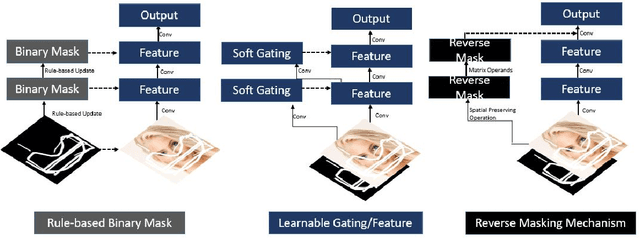

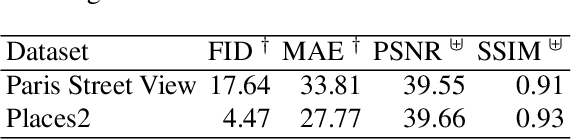

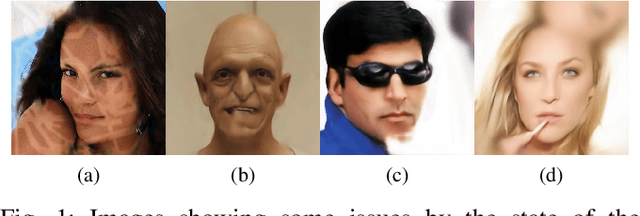

Abstract: Facial image inpainting is a widely studied problem, and in recent years the introduction of Generative Adversarial Networks has led to improvements in the field. Unfortunately, some issues persist, in particular when blending the missing pixels with the visible ones. We address the problem by proposing a Wasserstein GAN combined with a new reverse mask operator, namely the Reverse Masking Network (R-MNet), a perceptual adversarial network for image inpainting. The reverse mask operator transfers the reverse masked image to the end of the encoder-decoder network, leaving only valid pixels to be inpainted. Additionally, we propose a new loss function computed in feature space to target only valid pixels, combined with adversarial training. Together, these capture data distributions and generate images similar to those in the training data, with realistic and coherent output images. We evaluate our method on publicly available datasets and compare it with state-of-the-art methods. We show that our method is able to generalize to high-resolution inpainting tasks and produces more realistic outputs that are plausible to the human visual system when compared with the state-of-the-art methods.
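As a rough illustration of the reverse-mask idea described in the abstract above, the sketch below composites a predicted image with the visible pixels of the input. It is not the authors' implementation: the function name `composite_with_reverse_mask`, the mask convention (1 = visible pixel, 0 = missing pixel), and the toy 4x4 arrays are all assumptions made for this example.

```python
import numpy as np


def composite_with_reverse_mask(image, prediction, mask):
    """Blend visible input pixels with predicted pixels.

    Assumed convention (not taken from the paper): mask == 1 marks a
    visible pixel, mask == 0 marks a missing pixel to be inpainted.
    """
    reverse_mask = 1.0 - mask  # 1 where pixels are missing
    # Visible pixels come from the input, missing pixels from the prediction.
    return image * mask + prediction * reverse_mask


# Toy data: a uniform "input" image and a uniform "network output".
image = np.full((4, 4), 0.8)
prediction = np.full((4, 4), 0.2)

# Mark a 2x2 hole in the centre as missing.
mask = np.ones((4, 4))
mask[1:3, 1:3] = 0.0

result = composite_with_reverse_mask(image, prediction, mask)
```

In this sketch only the pixels inside the hole are taken from the prediction, so a loss computed on `prediction * reverse_mask` would ignore regions that are already known.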

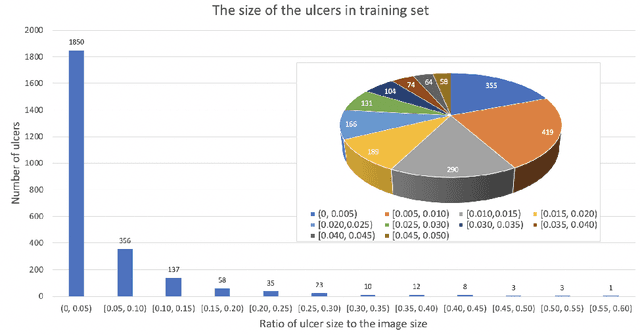

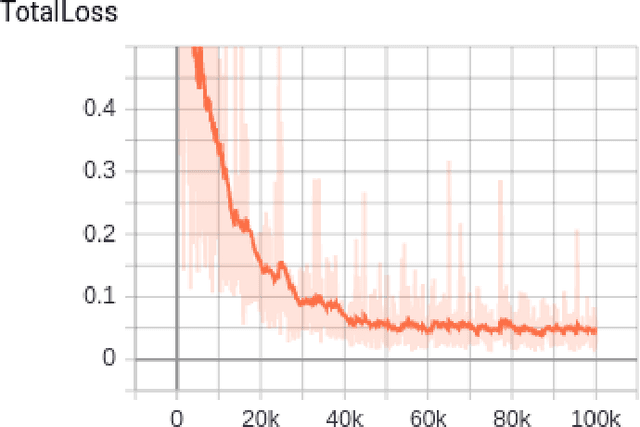

DFUC2020: Analysis Towards Diabetic Foot Ulcer Detection

Apr 24, 2020

Abstract: Every 20 seconds, a limb is amputated somewhere in the world due to diabetes. This is a global health problem that requires a global solution. The MICCAI challenge discussed in this paper, which concerns the detection of diabetic foot ulcers, will accelerate the development of innovative healthcare technology to address this unmet medical need. In an effort to improve patient care and reduce the strain on healthcare systems, recent research has focused on the creation of cloud-based detection algorithms that can be consumed as a service by a mobile app, which patients (or a carer, partner or family member) could use to monitor their condition and detect the appearance of a diabetic foot ulcer (DFU). Collaborative work between Manchester Metropolitan University, Lancashire Teaching Hospital and the Manchester University NHS Foundation Trust has created a repository of 4000 DFU images to support research toward more advanced methods of DFU detection. Based on a joint effort involving lead scientists from the UK, US, India and New Zealand, this challenge will solicit original work and promote interactions between researchers and interdisciplinary collaborations. This paper presents a dataset description and analysis, assessment methods, benchmark algorithms and initial evaluation results. It facilitates the challenge by providing useful insights into state-of-the-art and ongoing research.

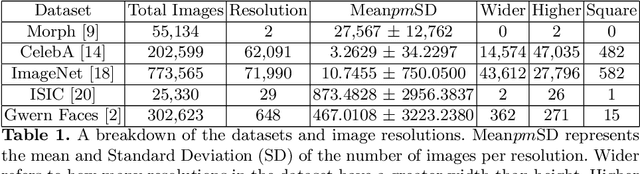

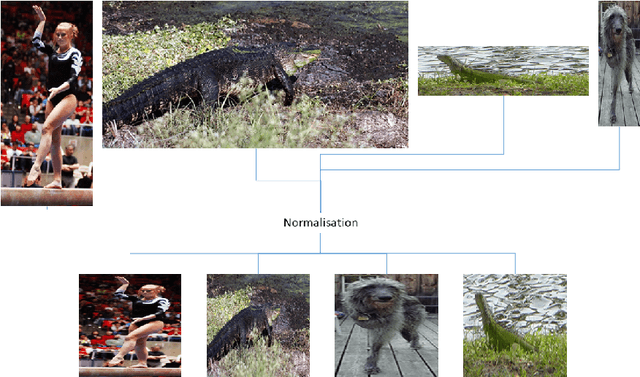

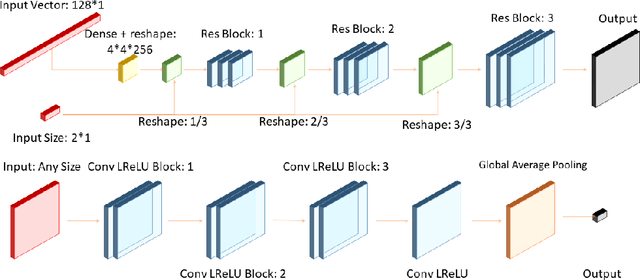

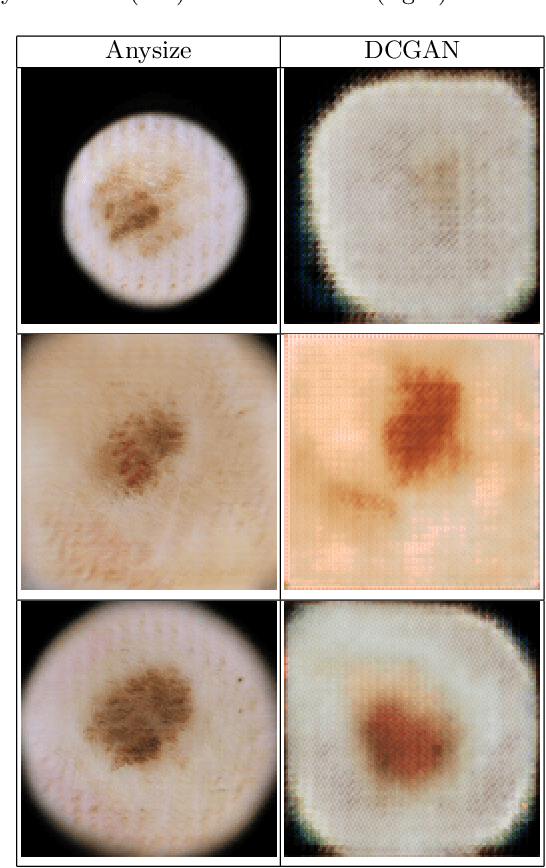

Anysize GAN: A solution to the image-warping problem

Mar 06, 2020

Abstract: We propose a new type of Generative Adversarial Network (GAN) to resolve a common issue with deep learning. We develop a novel architecture that can be applied to existing latent-vector-based GAN structures and allows them to generate images of any size on the fly. Existing GANs for image generation require uniform images of matching dimensions. However, publicly available datasets such as ImageNet contain images of thousands of different sizes. Resizing images causes deformation and changes the image data, whereas our network does not require this preprocessing step. We make significant changes to the standard data loading techniques to enable images of any size to be loaded for training. We also modify the network in two ways, by adding multiple inputs and a novel dynamic resizing layer. Finally, we adjust the discriminator to work on multiple resolutions. These changes allow multiple-resolution datasets to be trained on without any resizing, if memory allows. We validate our results on the ISIC 2019 skin lesion dataset. We demonstrate that our method can successfully generate realistic images at different sizes, preserving and understanding spatial relationships while maintaining feature relationships. We will release the source code upon paper acceptance.

Spotting Macro- and Micro-expression Intervals in Long Video Sequences

Feb 05, 2020

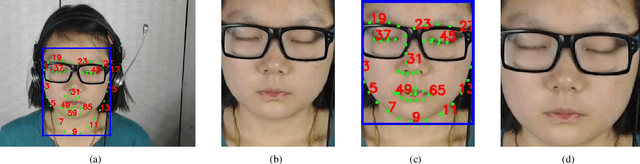

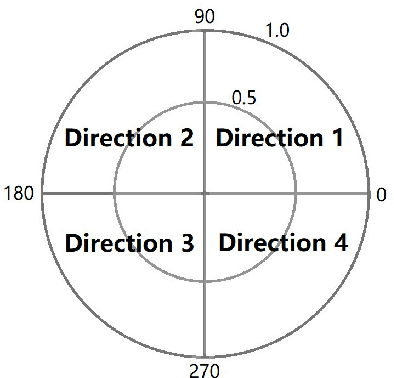

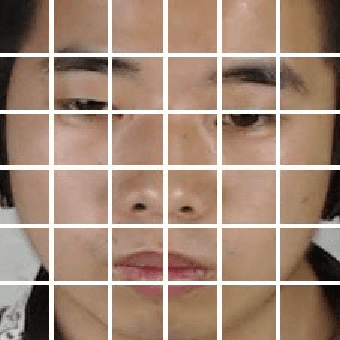

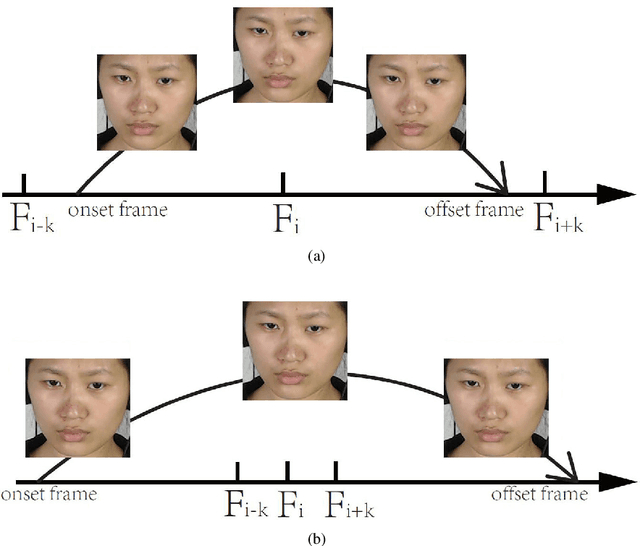

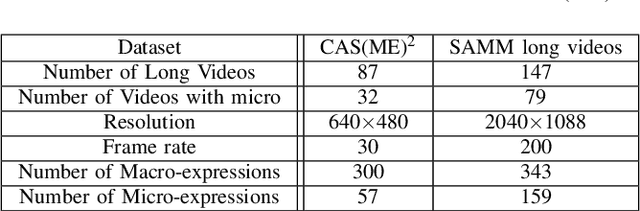

Abstract: This paper presents baseline results for the Third Facial Micro-Expression Grand Challenge (MEGC 2020). Both macro- and micro-expression intervals in CAS(ME)$^2$ and SAMM Long Videos are spotted by employing the method of Main Directional Maximal Difference Analysis (MDMD). The MDMD method uses the maximal difference in magnitude along the main direction of optical flow features to spot facial movements. The single-frame prediction results of the original MDMD method are post-processed into reasonable video intervals. The baseline results are evaluated using the F1-score: for CAS(ME)$^2$, the F1-scores are 0.1196 and 0.0082 for macro- and micro-expressions respectively, and the overall F1-score is 0.0376; for SAMM Long Videos, the F1-scores are 0.0629 and 0.0364 for macro- and micro-expressions respectively, and the overall F1-score is 0.0445. The baseline project code is publicly available at https://github.com/HeyingGithub/Baseline-project-for-MEGC2020_spotting.

Symmetric Skip Connection Wasserstein GAN for High-Resolution Facial Image Inpainting

Jan 11, 2020

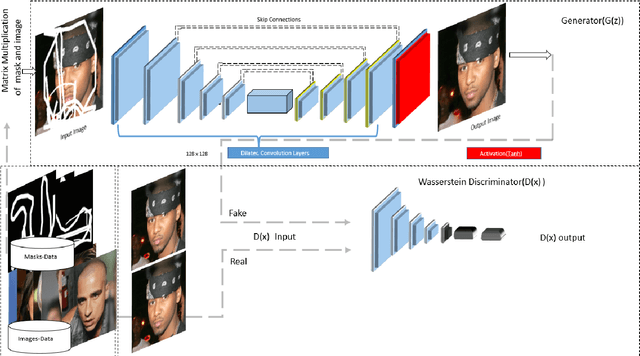

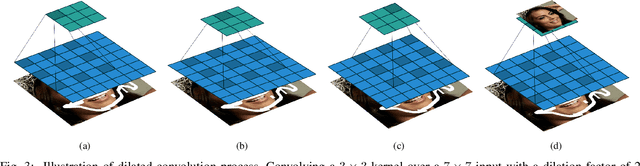

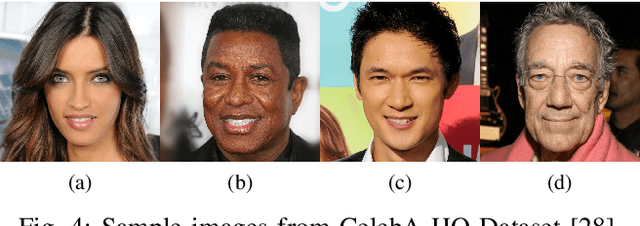

Abstract: We propose a Symmetric Skip Connection Wasserstein Generative Adversarial Network (S-WGAN) for high-resolution facial image inpainting. The architecture is an encoder-decoder with convolutional blocks, linked by skip connections. The encoder is a feature extractor that captures data abstractions of an input image to learn an end-to-end mapping from an input (binary masked image) to the ground truth. The decoder uses the learned abstractions to reconstruct the image. With skip connections, S-WGAN transfers image details to the decoder. We also propose a Wasserstein-Perceptual loss function to preserve colour and maintain realism in the reconstructed image. We evaluate our method and the state-of-the-art methods on the CelebA-HQ dataset. Our results show that S-WGAN produces sharper and more realistic images when visually compared with other methods. The quantitative measures show that our proposed S-WGAN achieves the best Structural Similarity Index Measure, 0.94.

SAMM Long Videos: A Spontaneous Facial Micro- and Macro-Expressions Dataset

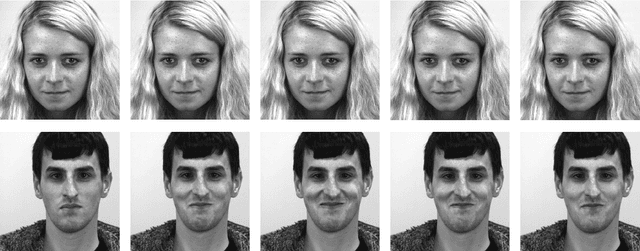

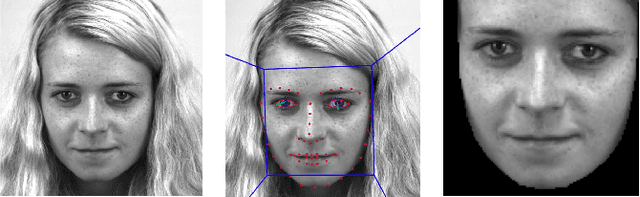

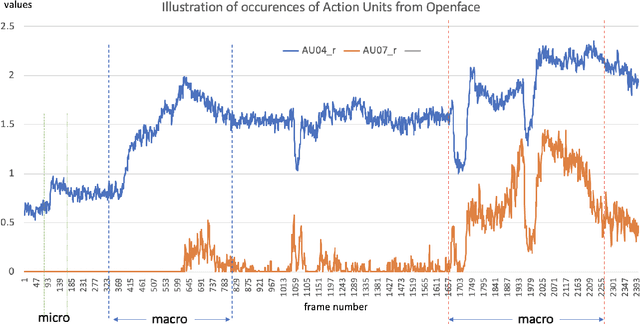

Nov 04, 2019

Abstract: With the growing popularity of facial micro-expressions in recent years, the demand for long videos with micro- and macro-expressions is increasing. Extended from SAMM, a micro-expressions dataset released in 2016, this short report presents the SAMM Long Videos dataset for spontaneous micro- and macro-expression recognition and spotting. The SAMM Long Videos dataset consists of 147 long videos with 343 macro-expressions and 159 micro-expressions. The dataset is FACS-coded with detailed Action Units (AUs). We compare our dataset with the Chinese Academy of Sciences Macro-Expressions and Micro-Expressions (CAS(ME)$^2$) dataset, which is the only available fully annotated dataset with micro- and macro-expressions. Further, we preprocess the long videos using OpenFace, which includes face alignment and detection of facial movements based on the AUs. We will release the long videos for the next micro- and macro-expression spotting challenge and for future research use.

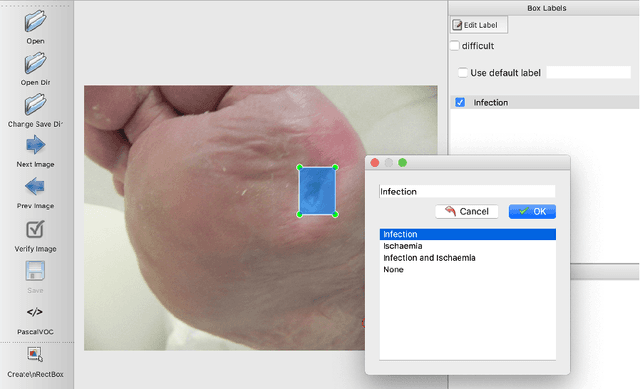

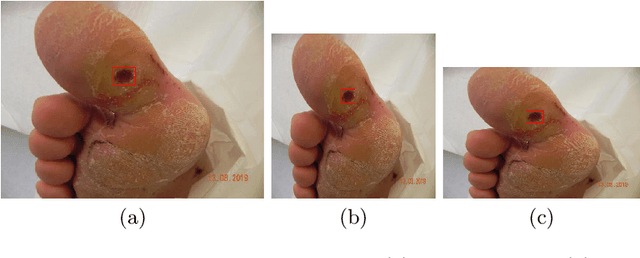

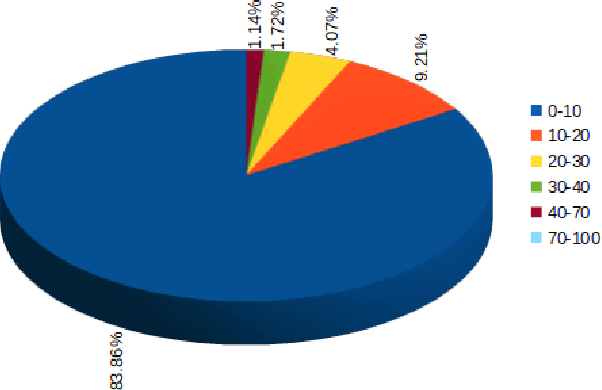

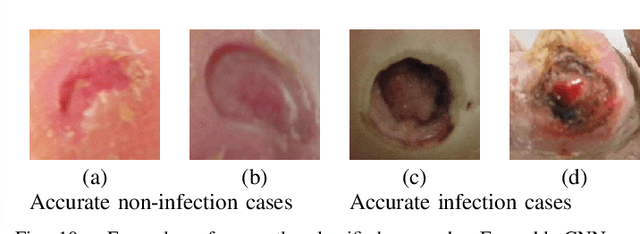

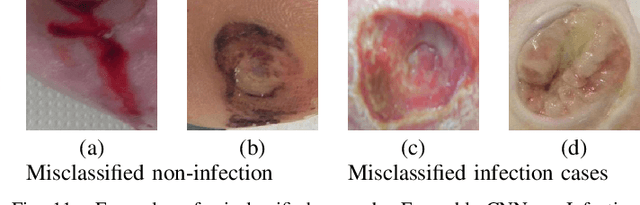

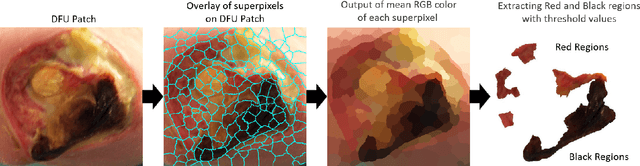

Recognition of Ischaemia and Infection in Diabetic Foot Ulcers: Dataset and Techniques

Sep 11, 2019

Abstract: Detection of Diabetic Foot Ulcers (DFU) using computerized methods is an emerging research area with the evolution of machine learning algorithms. However, existing research focuses on detecting and segmenting the ulcers. According to DFU medical classification systems, i.e. the University of Texas Classification and the SINBAD Classification, the presence of infection (bacteria in the wound) and ischaemia (inadequate blood supply) has important clinical implications for DFU assessment and is used to predict the risk of amputation. In this work, we propose a new dataset and novel techniques to identify the presence of infection and ischaemia. We introduce a very comprehensive DFU dataset with ground truth labels of ischaemia and infection cases. For the hand-crafted machine learning approach, we propose a new feature descriptor, namely the Superpixel Color Descriptor. Then, we propose a technique using an Ensemble Convolutional Neural Network (CNN) model for ischaemia and infection recognition. The novelty lies in our proposed natural data-augmentation method, which clearly identifies the region of interest on foot images and focuses on finding the salient features existing in this area. Finally, we evaluate the performance of our proposed techniques on binary classification, i.e. ischaemia versus non-ischaemia and infection versus non-infection. Overall, our proposed method performs better in the classification of ischaemia than infection. We found that our proposed Ensemble CNN deep learning algorithms performed better for both classification tasks than hand-crafted machine learning algorithms, with 90% accuracy in ischaemia classification and 73% in infection classification.

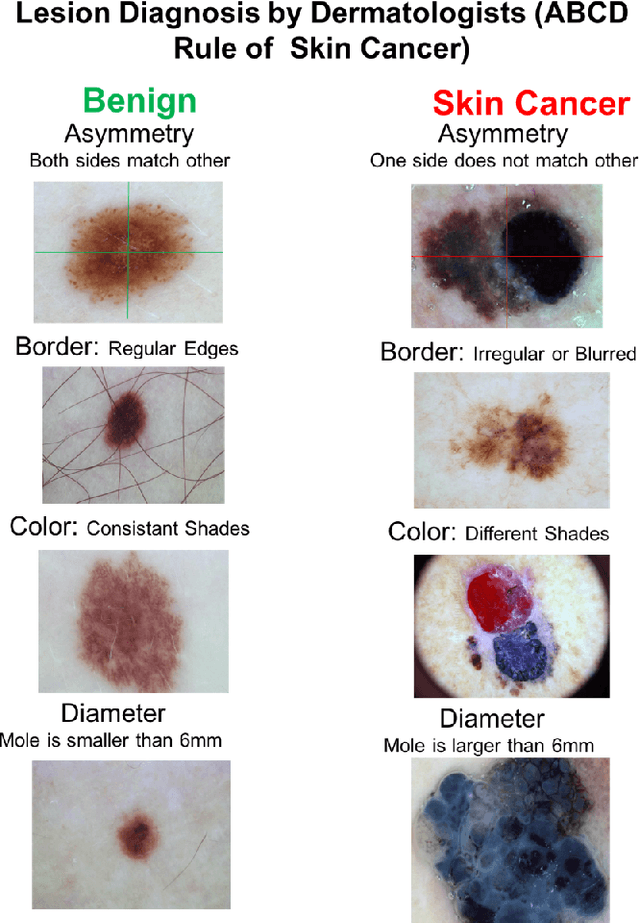

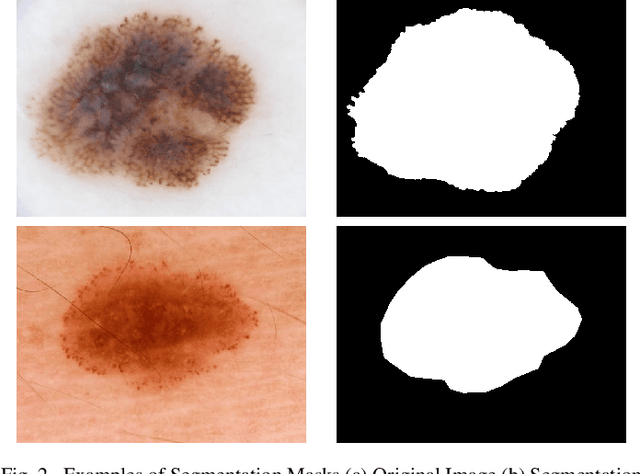

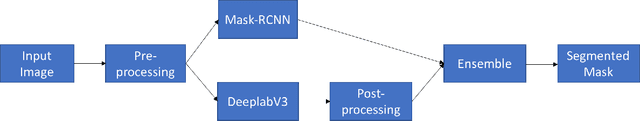

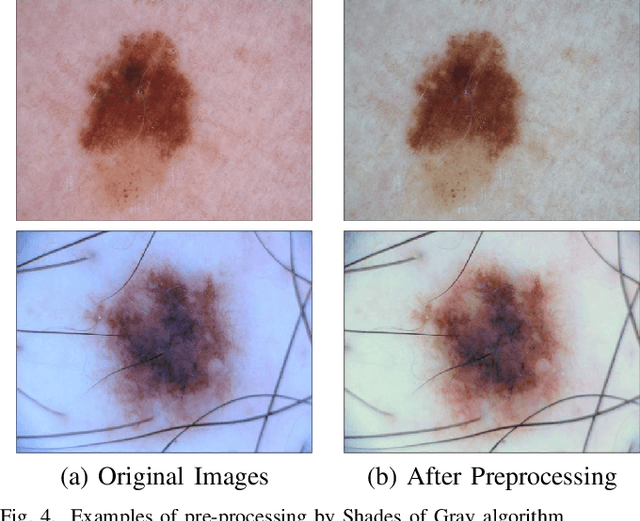

Automatic Lesion Boundary Segmentation in Dermoscopic Images with Ensemble Deep Learning Methods

Feb 02, 2019

Abstract: Early detection of skin cancer, particularly melanoma, is crucial to enable advanced treatment. Due to the rapid growth of skin cancers, there is a growing need for computerized analysis of skin lesions. These processes include detection, classification, and segmentation. The state-of-the-art publicly available datasets for skin lesions are often accompanied by a very limited amount of segmentation ground truth labeling, as it is laborious and expensive. Lesion boundary segmentation is vital to locate the lesion accurately in dermoscopic images and to diagnose different skin lesion types. In this work, we propose the use of fully automated deep learning ensemble methods for accurate lesion boundary segmentation in dermoscopic images. We trained the Mask-RCNN and DeeplabV3+ methods on the ISIC-2017 segmentation training dataset and evaluated the performance of various ensembles of both networks on the ISIC-2017 testing set and the PH2 dataset. Our results showed that the proposed ensemble method segmented the skin lesions with a Jaccard index of 79.58% for the ISBI 2017 test dataset. In comparison to FrCN, FCN, U-Net, and SegNet, the proposed ensemble method outperformed them by 2.48%, 7.42%, 17.95%, and 9.96% for the Jaccard index, respectively. Furthermore, the proposed ensemble method achieved a segmentation accuracy of 95.6% for some representative clinical benign cases, 90.78% for the melanoma cases, and 91.29% for the seborrheic keratosis cases in the ISBI 2017 test dataset, exhibiting better performance than FrCN, FCN, U-Net, and SegNet.
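The Jaccard index reported in the abstract above is the ratio of the intersection to the union of the predicted and ground-truth lesion masks. The following is a minimal sketch of that calculation on binary masks stored as nested lists; the function name `jaccard_index`, the toy 3x3 masks, and the choice to return 1.0 when both masks are empty are assumptions for illustration, not part of the paper's evaluation code.

```python
def jaccard_index(pred, truth):
    """Intersection over union of two binary masks (nested lists of 0/1)."""
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Assumption for this sketch: two empty masks count as a perfect match.
    return intersection / union if union else 1.0


# Toy masks: 4 predicted pixels, 4 ground-truth pixels, 3 in common.
pred_mask = [
    [0, 1, 1],
    [0, 1, 1],
    [0, 0, 0],
]
truth_mask = [
    [0, 1, 1],
    [0, 1, 0],
    [0, 1, 0],
]

score = jaccard_index(pred_mask, truth_mask)  # 3 shared / 5 in the union
```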

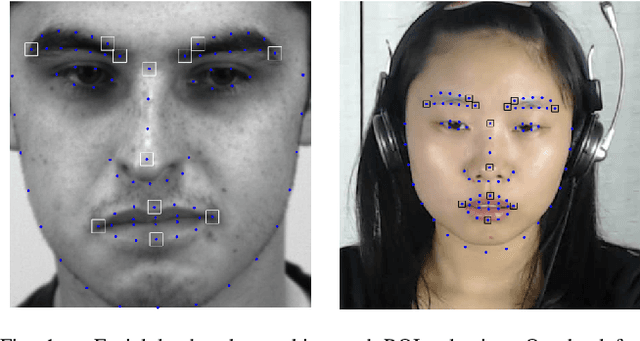

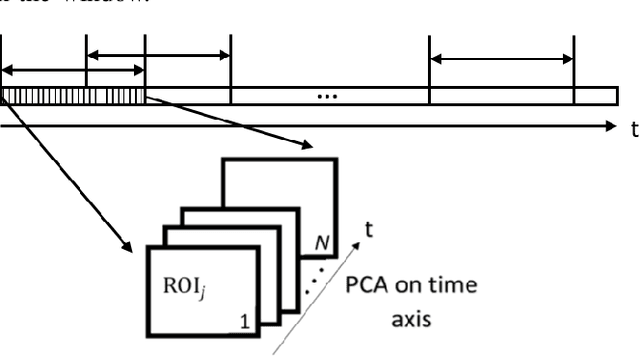

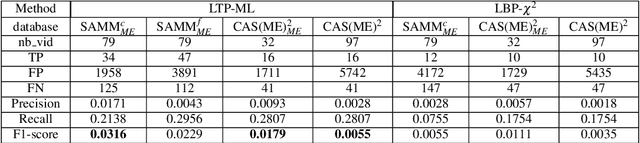

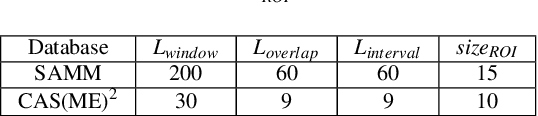

Spotting Micro-Expressions on Long Videos Sequences

Dec 26, 2018

Abstract: This paper presents baseline results for the first Micro-Expression Spotting Challenge 2019 by evaluating local temporal pattern (LTP) on SAMM and CAS(ME)$^2$. The proposed LTP patterns are extracted by applying PCA in a temporal window on several local facial regions. The micro-expression sequences are then spotted by a local classification of LTP and a global fusion. The performance is evaluated by Leave-One-Subject-Out cross-validation. Furthermore, we define the criterion for determining true positives in one video by overlap rate and use the F1-score as the metric for spotting performance over the whole database. The baseline F1-scores for SAMM and CAS(ME)$^2$ are 0.0316 and 0.0179, respectively.
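To make the evaluation described in the abstract above concrete, the sketch below counts a spotted interval as a true positive when its overlap rate with an unmatched ground-truth interval reaches a threshold, then computes the F1-score. The abstract does not state the threshold, so the value 0.5 is an assumption, as are the inclusive `(onset, offset)` frame convention, the greedy one-to-one matching, and the function names.

```python
def overlap_rate(a, b):
    """Intersection over union of two inclusive frame intervals (onset, offset)."""
    intersection = max(0, min(a[1], b[1]) - max(a[0], b[0]) + 1)
    union = (a[1] - a[0] + 1) + (b[1] - b[0] + 1) - intersection
    return intersection / union


def spotting_f1(predicted, ground_truth, threshold=0.5):
    """F1-score for interval spotting; threshold=0.5 is an assumed value."""
    matched = set()  # indices of ground-truth intervals already matched
    true_positives = 0
    for pred in predicted:
        for index, truth in enumerate(ground_truth):
            if index not in matched and overlap_rate(pred, truth) >= threshold:
                matched.add(index)
                true_positives += 1
                break
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(predicted)
    recall = true_positives / len(ground_truth)
    return 2 * precision * recall / (precision + recall)


# Toy example: one of three spotted intervals matches one of two true intervals.
ground_truth = [(10, 29), (100, 119)]
predicted = [(12, 31), (50, 60), (200, 210)]

f1 = spotting_f1(predicted, ground_truth)  # precision 1/3, recall 1/2
```

With many false positives and missed intervals, precision and recall are both small, which is consistent with the low baseline F1-scores reported for long videos.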

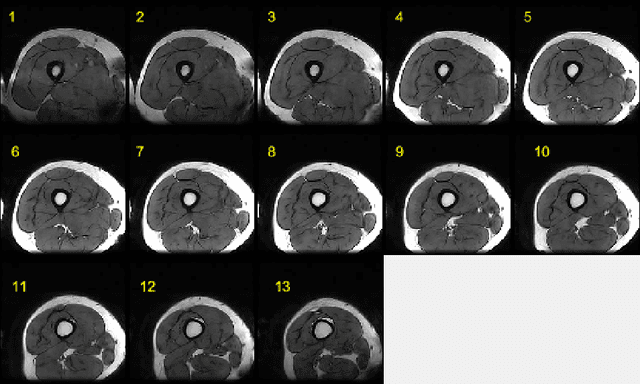

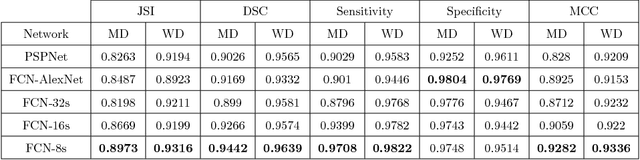

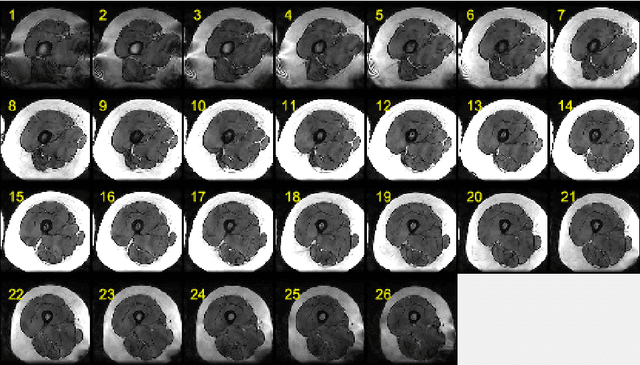

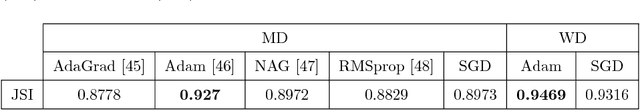

Semantic Segmentation of Human Thigh Quadriceps Muscle in Magnetic Resonance Images

Aug 09, 2018

Abstract: This paper presents an end-to-end solution for MRI thigh quadriceps segmentation. This is the first attempt to use deep learning methods for the MRI thigh segmentation task. We use state-of-the-art Fully Convolutional Networks with a transfer learning approach for the semantic segmentation of regions of interest in MRI thigh scans. To further improve the performance of the segmentation, we propose a post-processing technique using basic image processing methods. With our proposed method, we have established a new benchmark for MRI thigh quadriceps segmentation, with a mean Jaccard Similarity Index of 0.9502 and a processing time of 0.117 seconds per image.
