Moi Hoon Yap

V-LinkNet: Learning Contextual Inpainting Across Latent Space of Generative Adversarial Network

Jan 02, 2022

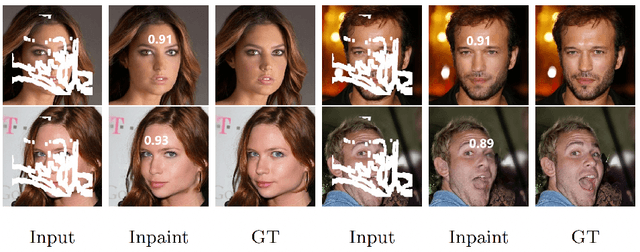

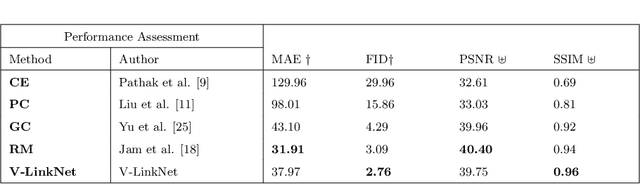

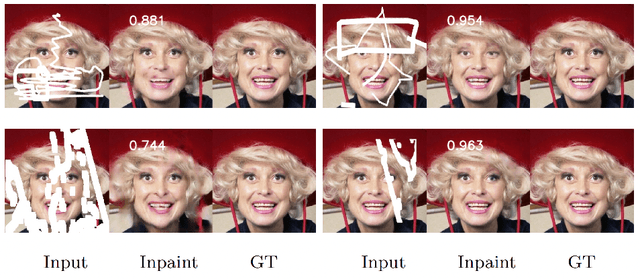

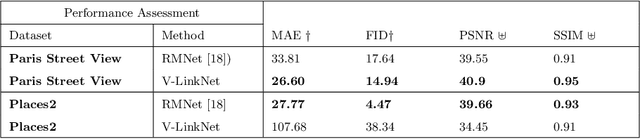

Abstract: Deep learning methods outperform traditional methods in image inpainting. In order to generate contextual textures, researchers are still working to improve on existing methods and propose models that can extract, propagate, and reconstruct features similar to ground-truth regions. Furthermore, the lack of a high-quality feature transfer mechanism in deeper layers contributes to persistent aberrations on generated inpainted regions. To address these limitations, we propose the V-LinkNet cross-space learning strategy network. To improve learning on contextualised features, we design a loss model that employs both encoders. In addition, we propose a recursive residual transition layer (RSTL). The RSTL extracts high-level semantic information and propagates it down the layers. Finally, we compare inpainting performance on the same face with different masks and on different faces with the same masks. To improve image inpainting reproducibility, we propose a standard protocol to overcome biases with various masks and images. We investigate the V-LinkNet components using experimental methods. Our results surpass the state of the art when evaluated on CelebA-HQ with the standard protocol. In addition, our model generalises well when evaluated on the Paris Street View and Places2 datasets with the standard protocol.

Development of Diabetic Foot Ulcer Datasets: An Overview

Jan 01, 2022

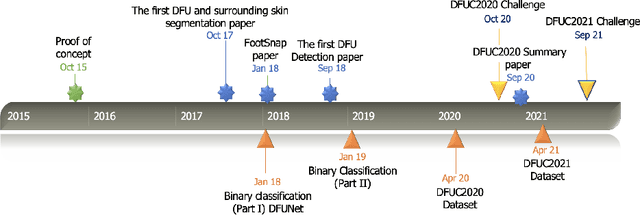

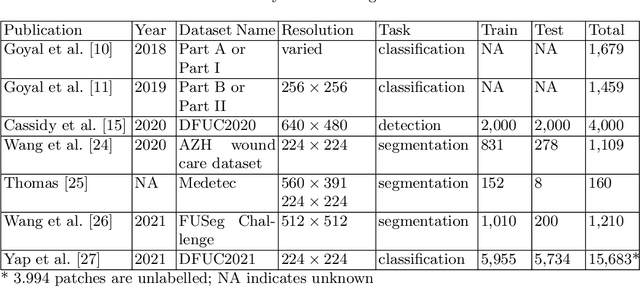

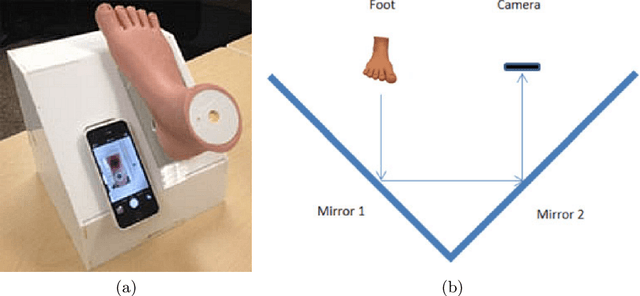

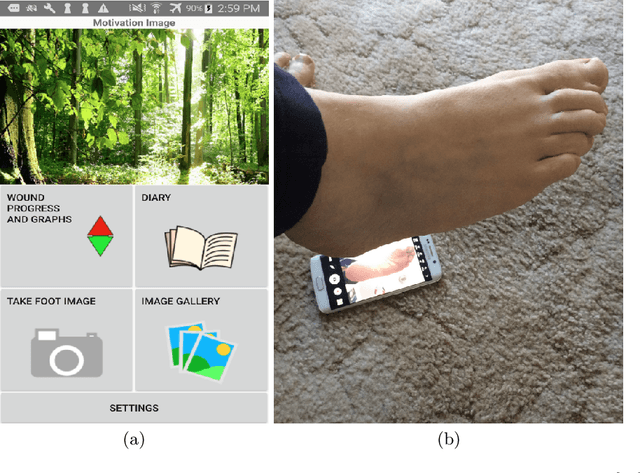

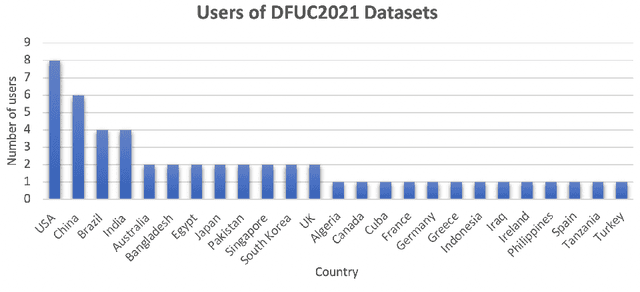

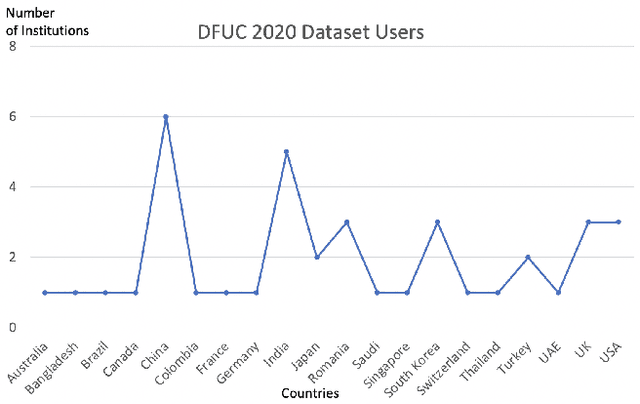

Abstract: This paper presents the conceptual foundation and procedures used in the development of diabetic foot ulcer datasets over the past decade, with a timeline to demonstrate progress. We survey data capturing methods for foot photographs and give an overview of research in developing private and public datasets, the related computer vision tasks (detection, segmentation and classification), the diabetic foot ulcer challenges and the future direction of dataset development. We report the distribution of dataset users by country and year. Our aim is to share the technical challenges that we encountered together with good practices in dataset development, and to motivate other researchers to participate in data sharing in this domain.

Diabetic Foot Ulcer Grand Challenge 2021: Evaluation and Summary

Nov 19, 2021

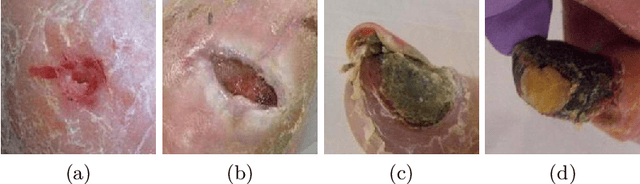

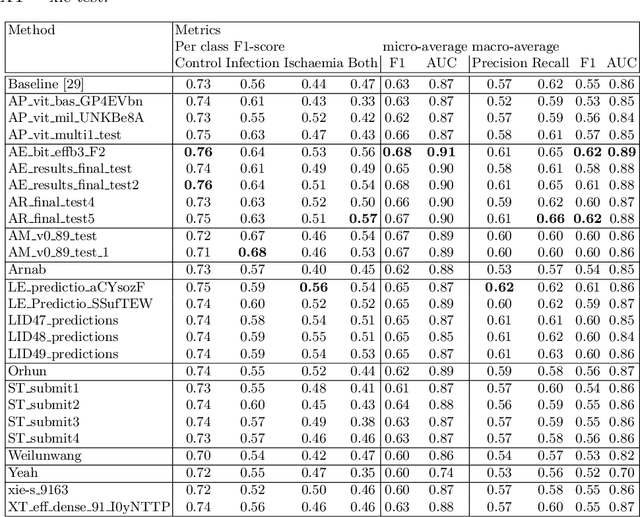

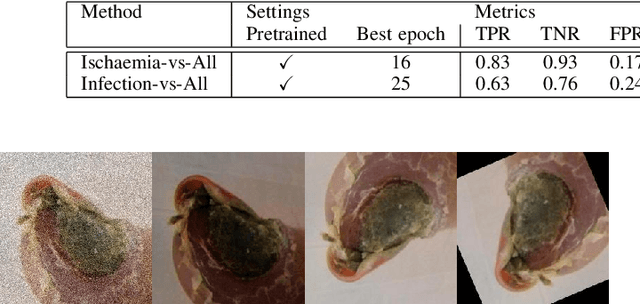

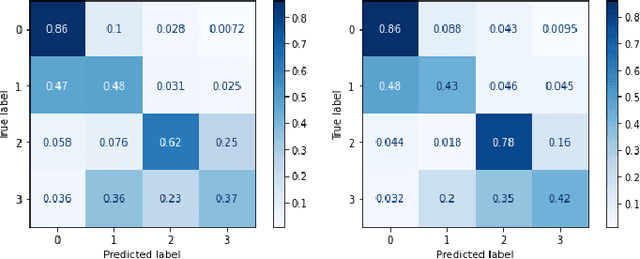

Abstract: Diabetic foot ulcer classification systems use the presence of wound infection (bacteria present within the wound) and ischaemia (restricted blood supply) as vital clinical indicators for treatment and prediction of wound healing. Studies investigating the use of automated computerised methods of classifying infection and ischaemia within diabetic foot wounds are limited due to a paucity of publicly available datasets and severe data imbalance in those few that exist. The Diabetic Foot Ulcer Challenge 2021 provided participants with a more substantial dataset comprising a total of 15,683 diabetic foot ulcer patches, with 5,955 used for training, 5,734 used for testing and an additional 3,994 unlabelled patches to promote the development of semi-supervised and weakly-supervised deep learning techniques. This paper provides an evaluation of the methods used in the Diabetic Foot Ulcer Challenge 2021, and summarises the results obtained from each network. The best performing network was an ensemble of the results of the top 3 models, with a macro-average F1-score of 0.6307.
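For readers unfamiliar with the metric reported above, the following is a minimal sketch of how a macro-average F1-score is computed: F1 is calculated per class and the per-class scores are averaged with equal weight, which is why the metric is sensitive to performance on rare classes in an imbalanced dataset. The function name `macro_f1` and the toy labels are illustrative assumptions and are not taken from the challenge's evaluation code.

```python
def macro_f1(y_true, y_pred, labels):
    """Unweighted mean of per-class F1 scores (illustrative helper)."""
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        # Guard against empty denominators by scoring the class as 0.
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        if precision + recall:
            scores.append(2 * precision * recall / (precision + recall))
        else:
            scores.append(0.0)
    return sum(scores) / len(scores)


# Toy example with hypothetical labels, not challenge data.
y_true = ["infection", "infection", "ischaemia", "ischaemia"]
y_pred = ["infection", "ischaemia", "ischaemia", "ischaemia"]
score = macro_f1(y_true, y_pred, ["infection", "ischaemia"])
```

Because each class contributes equally regardless of how many samples it has, a model that ignores a minority class is penalised more heavily than it would be under plain accuracy.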

FacialGAN: Style Transfer and Attribute Manipulation on Synthetic Faces

Oct 18, 2021

Abstract: Facial image manipulation is a generation task where the output face is shifted towards an intended target direction in terms of facial attributes and styles. Recent works have achieved great success in various editing techniques such as style transfer and attribute translation. However, current approaches focus either on pure style transfer or on the translation of predefined sets of attributes with restricted interactivity. To address this issue, we propose FacialGAN, a novel framework enabling simultaneous rich style transfers and interactive facial attribute manipulation. While preserving the identity of a source image, we transfer the diverse styles of a target image to the source image. We then incorporate the geometry information of a segmentation mask to provide fine-grained manipulation of facial attributes. Finally, a multi-objective learning strategy is introduced to optimize the loss of each specific task. Experiments on the CelebA-HQ dataset, with CelebAMask-HQ as semantic mask labels, show our model's capacity to produce visually compelling results in style transfer, attribute manipulation, diversity and face verification. For reproducibility, we provide an interactive open-source tool to perform facial manipulations, and the PyTorch implementation of the model.

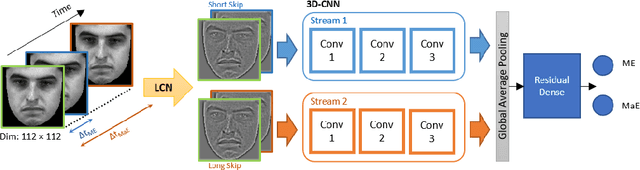

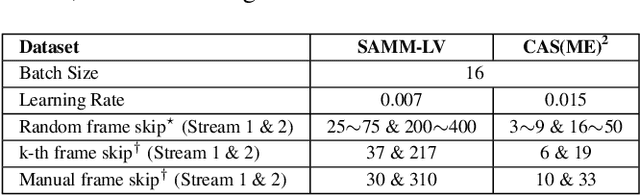

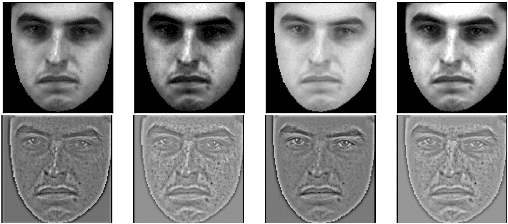

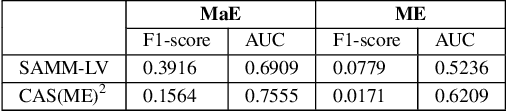

3D-CNN for Facial Micro- and Macro-expression Spotting on Long Video Sequences using Temporal Oriented Reference Frame

Jun 10, 2021

Abstract: Facial expression spotting is the preliminary step for micro- and macro-expression analysis. The task of reliably spotting such expressions in video sequences is currently unsolved. The current best systems depend upon optical flow methods to extract regional motion features, before categorisation of that motion into a specific class of facial movement. Optical flow is susceptible to drift error, which introduces a serious problem for motions with long-term dependencies, such as high frame-rate macro-expression. We propose a purely deep learning solution which, rather than tracking frame-differential motion, compares each frame, via a convolutional model, with two temporally local reference frames. Reference frames are sampled according to calculated micro- and macro-expression durations. We show that our solution achieves state-of-the-art performance (F1-score of 0.126) on a dataset of high frame-rate (200 fps) long video sequences (SAMM-LV) and is competitive on a low frame-rate (30 fps) dataset (CAS(ME)2). In this paper, we document our deep learning model and parameters, including how we use local contrast normalisation, which we show is critical for optimal results. We surpass a limitation in existing methods, and advance the state of deep learning in the domain of facial expression spotting.

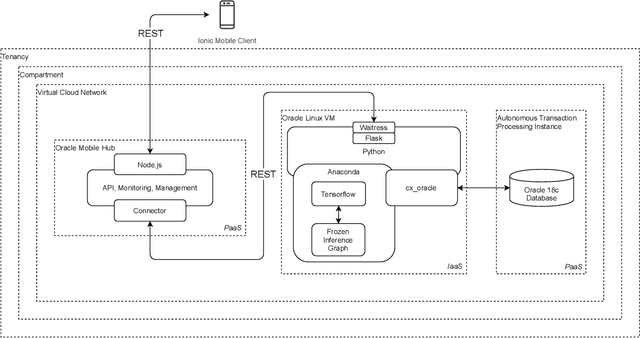

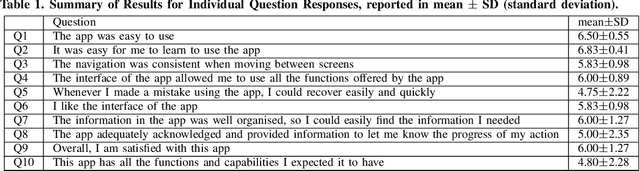

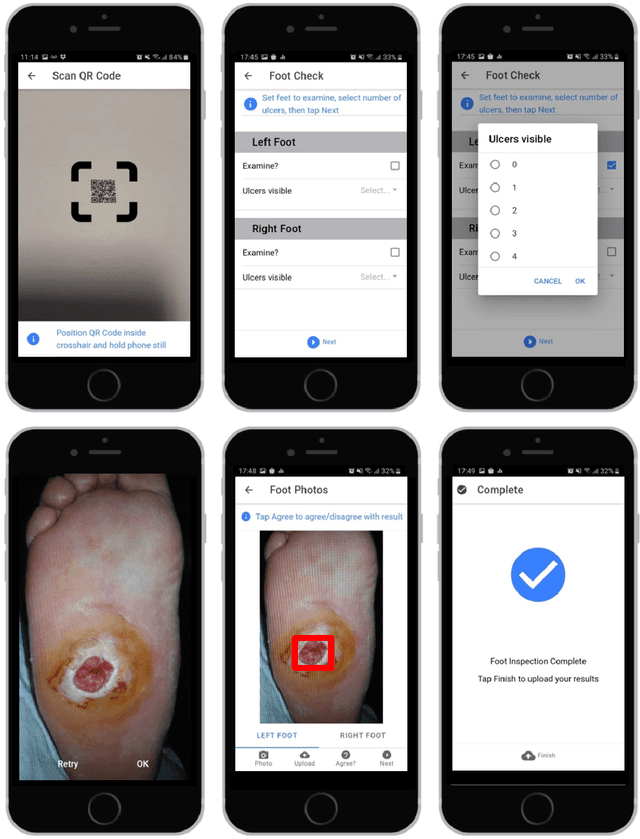

A Cloud-based Deep Learning Framework for Remote Detection of Diabetic Foot Ulcers

May 17, 2021

Abstract: This research proposes a mobile and cloud-based framework for the automatic detection of diabetic foot ulcers and conducts an investigation of its performance. The system uses a cross-platform mobile framework which enables the deployment of mobile apps to multiple platforms using a single TypeScript code base. A deep convolutional neural network was deployed to a cloud-based platform where the mobile app could send photographs of patients' feet for inference to detect the presence of diabetic foot ulcers. The functionality and usability of the system were tested in two clinical settings: Salford Royal NHS Foundation Trust and Lancashire Teaching Hospitals NHS Foundation Trust. The benefits of the system, such as the potential use of the app by patients to identify and monitor their condition, are discussed.

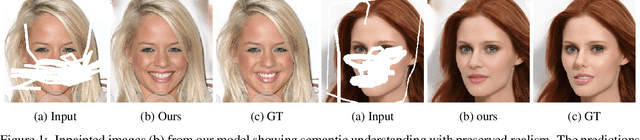

Foreground-guided Facial Inpainting with Fidelity Preservation

May 07, 2021

Abstract: Facial image inpainting, with high-fidelity preservation for image realism, is a very challenging task. This is due to the subtle texture in key facial features (components) that are not easily transferable. Many image inpainting techniques have been proposed with outstanding capabilities and high quantitative performances recorded. However, with facial inpainting, the features are more conspicuous and the visual quality of the blended inpainted regions is more important qualitatively. Based on these facts, we design a foreground-guided facial inpainting framework that can extract and generate facial features using convolutional neural network layers. It introduces the use of foreground segmentation masks to preserve the fidelity. Specifically, we propose a new loss function with semantic reasoning capability for facial expressions and natural and unnatural features (make-up). We conduct our experiments using the CelebA-HQ dataset, segmentation masks from CelebAMask-HQ (for foreground guidance) and Quick Draw Mask (for missing regions). Our proposed method achieved comparable quantitative results when compared to the state of the art but qualitatively, it demonstrated high-fidelity preservation of facial components.

Analysis Towards Classification of Infection and Ischaemia of Diabetic Foot Ulcers

Apr 07, 2021

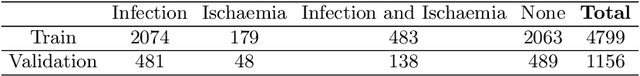

Abstract: This paper introduces the Diabetic Foot Ulcers dataset (DFUC2021) for analysis of pathology, focusing on infection and ischaemia. We describe the data preparation of DFUC2021 for ground truth annotation, data curation and data analysis. The final release of DFUC2021 consists of 15,683 DFU patches, with 5,955 for training, 5,734 for testing and 3,994 unlabeled DFU patches. The ground truth labels comprise four classes, i.e. control, infection, ischaemia and both conditions. We curate the dataset using image hashing techniques and analyse the separability using UMAP projection. We benchmark the performance of five key deep learning backbones, i.e. VGG16, ResNet101, InceptionV3, DenseNet121 and EfficientNet, on DFUC2021. We report the optimised results of these key backbones with different strategies. Based on our observations, we conclude that EfficientNetB0 with data augmentation and transfer learning provided the best results for multi-class (4-class) classification with macro-average Precision, Recall and F1-score of 0.57, 0.62 and 0.55, respectively. In ischaemia and infection recognition, when trained on one-versus-all, EfficientNetB0 achieved comparable results with the state of the art. Finally, we interpret the results with statistical analysis and Grad-CAM visualisation.

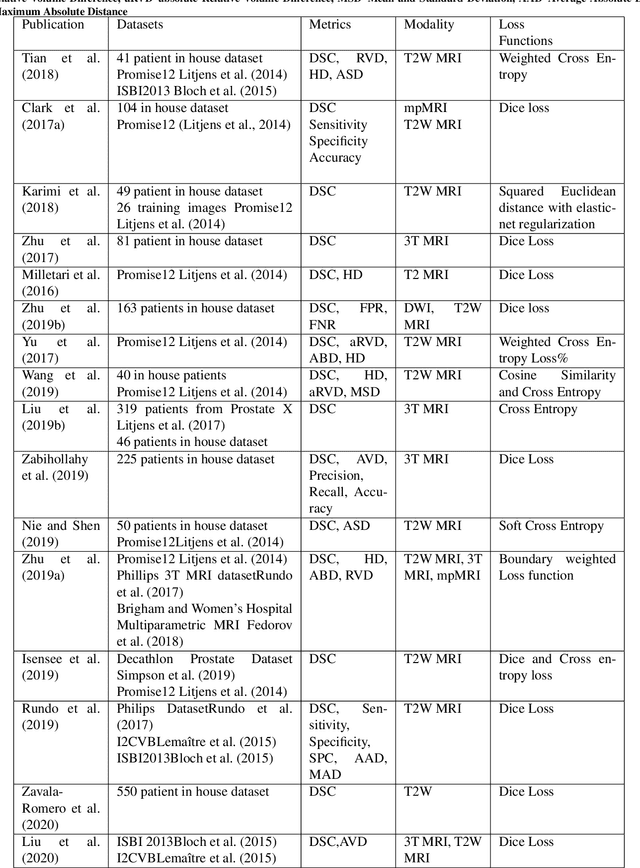

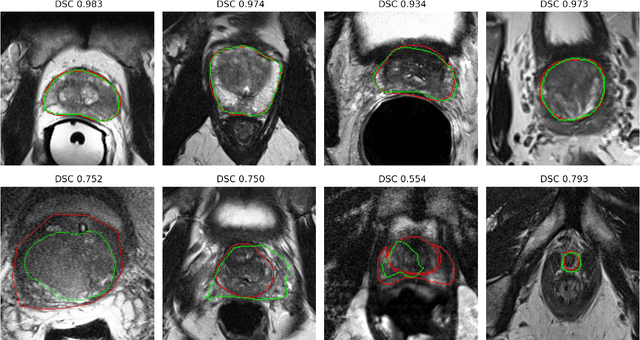

Deep learning in magnetic resonance prostate segmentation: A review and a new perspective

Nov 16, 2020

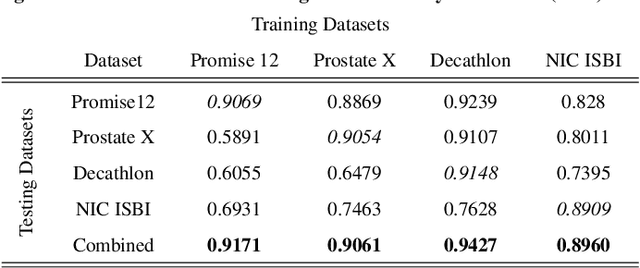

Abstract: Prostate radiotherapy is a well-established curative oncology modality, which in future will use Magnetic Resonance Imaging (MRI)-based radiotherapy for daily adaptive radiotherapy target definition. However, accurately delineating the prostate from MRI data is a time-consuming process. Deep learning has been identified as a potential new technology for the delivery of precision radiotherapy in prostate cancer, where accurate prostate segmentation helps in cancer detection and therapy. However, the trained models can be limited in their application to clinical settings due to differing acquisition protocols and the limited number of publicly available datasets, which are relatively small. Therefore, to explore the field of prostate segmentation and to discover a generalisable solution, we review the state-of-the-art deep learning algorithms in MR prostate segmentation; provide insights to the field by discussing their limitations and strengths; and propose an optimised 2D U-Net for MR prostate segmentation. We evaluate the performance on four publicly available datasets using Dice Similarity Coefficient (DSC) as the performance metric. Our experiments include within-dataset evaluation and cross-dataset evaluation. The best result is achieved by composite evaluation (DSC of 0.9427 on Decathlon test set) and the poorest result is achieved by cross-dataset evaluation (DSC of 0.5892, Prostate X training set, Promise 12 testing set). We outline the challenges and provide recommendations for future work. Our research provides a new perspective to MR prostate segmentation and more importantly, we provide standardised experiment settings for researchers to evaluate their algorithms. Our code is available at https://github.com/AIEMMU/MRI\_Prostate.
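As a point of reference for the DSC values quoted above, here is a minimal sketch of the Dice Similarity Coefficient for two binary masks, DSC = 2|A ∩ B| / (|A| + |B|). It is not taken from the authors' repository; the function name `dice_coefficient`, the flat 0/1 list representation, and the convention of returning 1.0 when both masks are empty are assumptions made for illustration.

```python
def dice_coefficient(pred, target):
    """Dice Similarity Coefficient for two flat binary masks (0/1 values)."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    # Assumed convention: two empty masks count as a perfect match.
    if total == 0:
        return 1.0
    return 2.0 * intersection / total


# Toy 4-pixel masks: one foreground pixel overlaps out of two in each mask.
pred_mask = [1, 1, 0, 0]
true_mask = [1, 0, 1, 0]
dsc = dice_coefficient(pred_mask, true_mask)
```

A DSC of 1 indicates perfect overlap and 0 indicates none, so the gap between the within-dataset and cross-dataset figures reflects how much segmentation overlap is lost when training and testing data come from different sources.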

Deep Learning in Diabetic Foot Ulcers Detection: A Comprehensive Evaluation

Oct 15, 2020

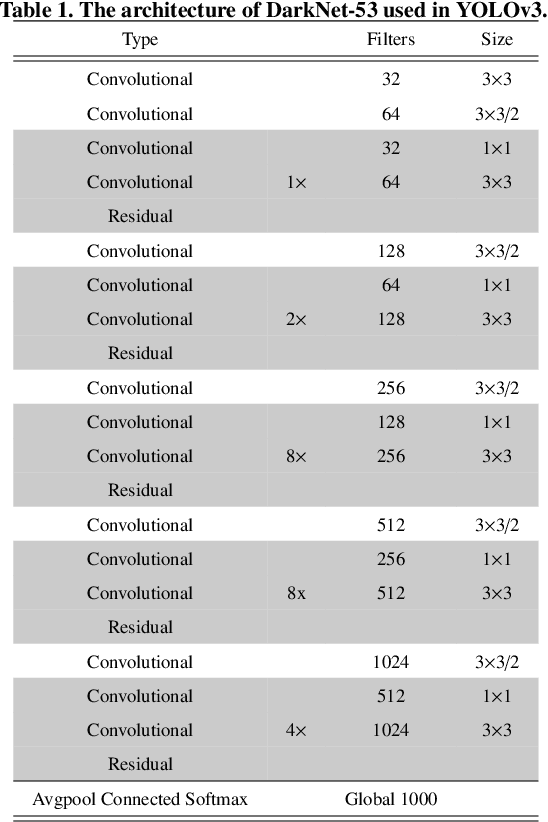

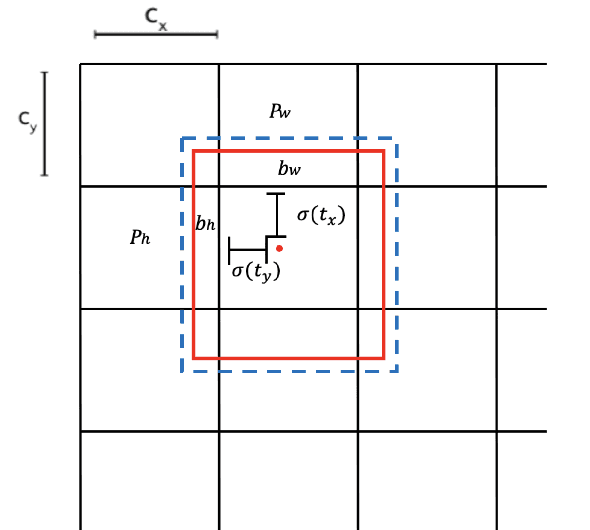

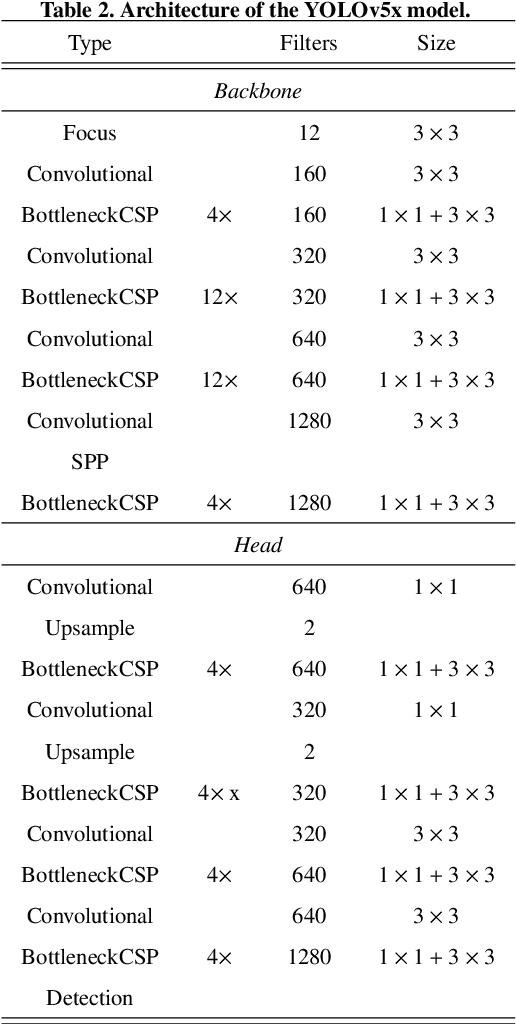

Abstract: There has been a substantial amount of research on computer methods and technology for the detection and recognition of diabetic foot ulcers (DFUs), but there is a lack of systematic comparisons of state-of-the-art deep learning object detection frameworks applied to this problem. With recent development and data sharing performed as part of the DFU Challenge (DFUC2020), such a comparison becomes possible: DFUC2020 provided participants with a comprehensive dataset consisting of 2,000 images for training each method and 2,000 images for testing them. The following deep learning-based algorithms are compared in this paper: Faster R-CNN, three variants of Faster R-CNN and an ensemble method; YOLOv3; YOLOv5; EfficientDet; and a new Cascade Attention Network. For each deep learning method, we provide a detailed description of model architecture, parameter settings for training and additional stages including pre-processing, data augmentation and post-processing. We provide a comprehensive evaluation for each method. All the methods required a data augmentation stage to increase the number of images available for training and a post-processing stage to remove false positives. The best performance is obtained by Deformable Convolution, a variant of Faster R-CNN, with a mAP of 0.6940 and an F1-Score of 0.7434. Finally, we demonstrate that the ensemble method based on different deep learning methods can enhance the F1-Score but not the mAP. Our results show that state-of-the-art deep learning methods can detect DFU with some accuracy, but there are many challenges ahead before they can be implemented in real world settings.