Mohammad Abu-Shaira

Online Domain-aware LLM Decoding for Continual Domain Evolution

Feb 08, 2026

Abstract: LLMs are typically fine-tuned offline on domain-specific data, assuming a static domain. In practice, domain knowledge evolves continuously through new regulations, products, services, and interaction patterns. Retraining or fine-tuning LLMs for every new instance is computationally infeasible. Real-world environments also exhibit temporal dynamics with shifting data distributions. Disregarding this phenomenon, commonly referred to as concept drift, can significantly diminish a model's predictive accuracy. This mismatch between evolving domains and static adaptation pipelines highlights the need for efficient, real-time adaptation without costly retraining. In response, we introduce the Online Domain-aware Decoding (ODD) framework. ODD performs probability-level fusion between a base LLM and a prefix-tree prior, guided by adaptive confidence modulation using disagreement and continuity signals. Empirical evaluation under diverse drift scenarios demonstrates that ODD consistently surpasses LLM-Greedy and LLM-Temp Scaled across all syntactic and semantic NLG metrics. It yields an absolute ROUGE-L gain of 0.065 and a 13.6% relative improvement in Cosine Similarity over the best baseline. These results demonstrate ODD's robustness to evolving lexical and contextual patterns, making it suitable for dynamic LLM applications.
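To make "probability-level fusion" concrete, here is a minimal sketch that linearly mixes a next-token distribution from a base LLM with one from a domain prior. It is an illustration only: the abstract does not give ODD's fusion rule or how the disagreement and continuity signals set the confidence, so the function name `fuse_distributions`, the fixed mixing weight `lam`, and the toy distributions are all assumptions.

```python
def fuse_distributions(p_llm, p_prior, lam):
    """Mix two next-token distributions: (1 - lam) * p_llm + lam * p_prior.

    `lam` is a hypothetical confidence weight for the prior; in an adaptive
    scheme it would be recomputed at every decoding step.
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must be in [0, 1]")
    tokens = set(p_llm) | set(p_prior)
    fused = {
        t: (1.0 - lam) * p_llm.get(t, 0.0) + lam * p_prior.get(t, 0.0)
        for t in tokens
    }
    total = sum(fused.values())
    if total == 0.0:
        raise ValueError("fused distribution has no probability mass")
    # Renormalise in case either input did not sum exactly to 1.
    return {t: p / total for t, p in fused.items()}


# Toy distributions (made up for illustration).
p_llm = {"policy": 0.5, "plan": 0.3, "product": 0.2}
p_prior = {"plan": 0.9, "product": 0.1}  # e.g. counts from a prefix tree, normalised

fused = fuse_distributions(p_llm, p_prior, lam=0.5)
best = max(fused, key=fused.get)
print(best, round(fused[best], 3))
```

With these toy numbers the base model alone would pick "policy", while the fused distribution prefers "plan" because the prior strongly supports it.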

OLR-WA: Online Weighted Average Linear Regression in Multivariate Data Streams

Dec 16, 2025

Abstract: Online learning updates models incrementally with new data, avoiding large storage requirements and costly model recalculations. In this paper, we introduce "OLR-WA: OnLine Regression with Weighted Average", a novel and versatile multivariate online linear regression model. We also investigate scenarios involving drift, where the underlying patterns in the data evolve over time, conduct convergence analysis, and compare our approach with existing online regression models. The results show that OLR-WA achieves performance comparable to batch regression, and comparable or superior performance relative to other state-of-the-art online models, thus establishing its effectiveness. Moreover, OLR-WA converges rapidly, surpassing other online models by consistently achieving high R² values from the first iteration to the last, even when initialized with as little as 1% to 10% of the total data points. In addition to handling time-based (temporal drift) scenarios, OLR-WA stands out as the only model capable of effectively managing challenging confidence-based scenarios. It achieves this by adopting a conservative approach in its updates, giving priority to older data points with higher confidence levels. In summary, OLR-WA's performance further solidifies its versatility and utility across different contexts, making it a valuable solution for online linear regression tasks.

Unveiling Statistical Significance of Online Regression over Multiple Datasets

Dec 14, 2025

Abstract: Despite extensive focus on techniques for evaluating the performance of two learning algorithms on a single dataset, the critical challenge of developing statistical tests to compare multiple algorithms across various datasets has been largely overlooked in most machine learning research. Additionally, in the realm of Online Learning, ensuring statistical significance is essential to validate continuous learning processes, particularly for achieving rapid convergence and managing concept drift in a timely manner. Robust statistical methods are needed to assess the significance of performance differences as data evolves over time. This article examines the state-of-the-art online regression models and empirically evaluates several suitable tests. To compare multiple online regression models across various datasets, we employed the Friedman test along with corresponding post-hoc tests. For a thorough evaluation, we use both real and synthetic datasets with 5-fold cross-validation and seed averaging, which ensures comprehensive assessment across various data subsets. Our tests generally confirmed the performance of competitive baselines as consistent with their individual reports. However, some statistical test results also indicate that there is still room for improvement in certain aspects of state-of-the-art methods.
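As a rough illustration of the Friedman test mentioned above, the sketch below ranks models within each dataset and computes the standard Friedman chi-square statistic from the mean ranks. The scores are invented for the example, the helper names are hypothetical, and the statistic is shown without a tie correction; it is not the paper's evaluation code.

```python
def average_ranks(row):
    """Rank the scores of one dataset (1 = highest score); ties share the mean rank."""
    order = sorted(range(len(row)), key=lambda i: -row[i])
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied scores.
        while j + 1 < len(order) and row[order[j + 1]] == row[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks


def friedman_statistic(scores):
    """scores[d][m] is the score of model m on dataset d (higher is better)."""
    n, k = len(scores), len(scores[0])
    rank_rows = [average_ranks(row) for row in scores]
    mean_ranks = [sum(r[m] for r in rank_rows) / n for m in range(k)]
    chi2 = 12 * n / (k * (k + 1)) * (
        sum(r * r for r in mean_ranks) - k * (k + 1) ** 2 / 4
    )
    return chi2, mean_ranks


# Made-up R² scores: 4 datasets (rows) x 3 online regression models (columns).
scores = [
    [0.90, 0.85, 0.80],
    [0.88, 0.86, 0.70],
    [0.95, 0.90, 0.85],
    [0.80, 0.82, 0.75],
]
chi2, mean_ranks = friedman_statistic(scores)
print(chi2, mean_ranks)
```

The statistic is compared against a chi-square distribution with k - 1 degrees of freedom (k being the number of models); a post-hoc test is only meaningful when that null hypothesis of equal ranks is rejected.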

OLR-WAA: Adaptive and Drift-Resilient Online Regression with Dynamic Weighted Averaging

Dec 14, 2025

Abstract: Real-world datasets frequently exhibit evolving data distributions, reflecting temporal variations and underlying shifts. Overlooking this phenomenon, known as concept drift, can substantially degrade the predictive performance of the model. Furthermore, the presence of hyperparameters in online models exacerbates this issue, as these parameters are typically fixed and lack the flexibility to dynamically adjust to evolving data. This paper introduces "OLR-WAA: An Adaptive and Drift-Resilient Online Regression with Dynamic Weighted Average", a hyperparameter-free model designed to tackle the challenges of non-stationary data streams and enable effective, continuous adaptation. The objective is to strike a balance between model stability and adaptability. OLR-WAA incrementally updates its base model by integrating incoming data streams, utilizing an exponentially weighted moving average. It further introduces a unique optimization mechanism that dynamically detects concept drift, quantifies its magnitude, and adjusts the model based on real-time data characteristics. Rigorous evaluations show that it matches batch regression performance in static settings and consistently outperforms or rivals state-of-the-art online models, confirming its effectiveness. Concept drift datasets reveal a performance gap that OLR-WAA effectively bridges, setting it apart from other online models. In addition, the model effectively handles confidence-based scenarios through a conservative update strategy that prioritizes stable, high-confidence data points. Notably, OLR-WAA converges rapidly, consistently yielding higher R² values compared to other online models.

DAO-GP Drift Aware Online Non-Linear Regression Gaussian-Process

Dec 09, 2025

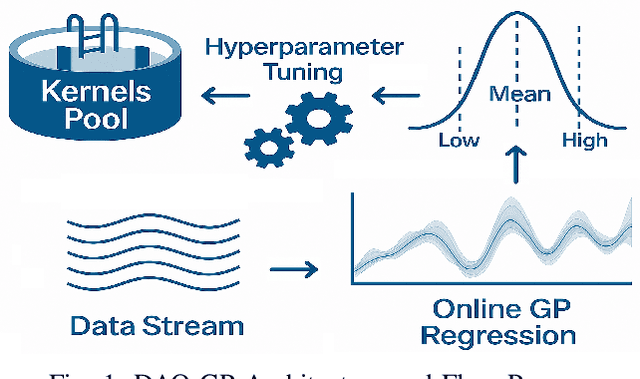

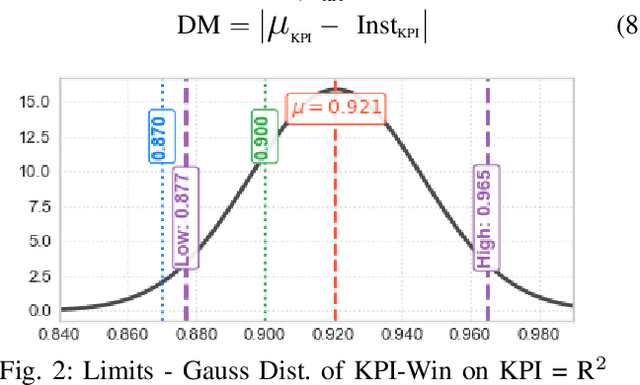

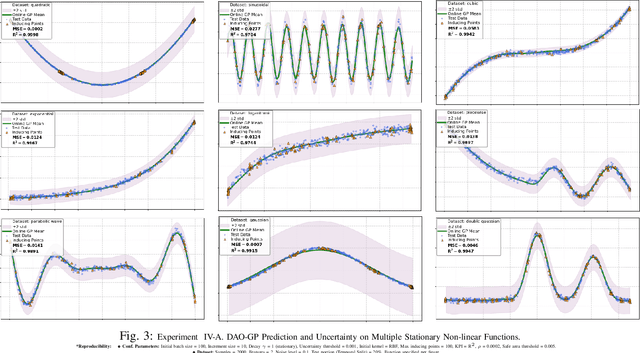

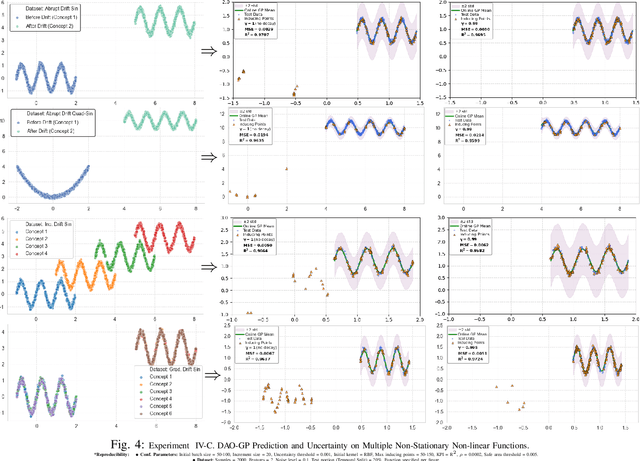

Abstract: Real-world datasets often exhibit temporal dynamics characterized by evolving data distributions. Disregarding this phenomenon, commonly referred to as concept drift, can significantly diminish a model's predictive accuracy. Furthermore, the presence of hyperparameters in online models exacerbates this issue. These parameters are typically fixed and cannot be dynamically adjusted by the user in response to the evolving data distribution. Gaussian Process (GP) models offer powerful non-parametric regression capabilities with uncertainty quantification, making them ideal for modeling complex data relationships in an online setting. However, conventional online GP methods face several critical limitations, including a lack of drift-awareness, reliance on fixed hyperparameters, vulnerability to data snooping, absence of a principled decay mechanism, and memory inefficiencies. In response, we propose DAO-GP (Drift-Aware Online Gaussian Process), a novel, fully adaptive, hyperparameter-free, decayed, and sparse non-linear regression model. DAO-GP features a built-in drift detection and adaptation mechanism that dynamically adjusts model behavior based on the severity of drift. Extensive empirical evaluations confirm DAO-GP's robustness across stationary conditions, diverse drift types (abrupt, incremental, gradual), and varied data characteristics. Analyses demonstrate its dynamic adaptation, efficient in-memory and decay-based management, and evolving inducing points. Compared with state-of-the-art parametric and non-parametric models, DAO-GP consistently achieves superior or competitive performance, establishing it as a drift-resilient solution for online non-linear regression.

OLR-WA Online Regression with Weighted Average

Jul 06, 2023

Abstract: Machine Learning requires a large amount of training data in order to build accurate models. Sometimes the data arrives over time, requiring significant storage space and recalculating the model to account for the new data. Online learning addresses these issues by incrementally modifying the model as data is encountered, and then discarding the data. In this study, we introduce a new online linear regression approach. Our approach combines newly arriving data with a previously existing model to create a new model. The introduced model, named OLR-WA (OnLine Regression with Weighted Average), uses user-defined weights to provide flexibility in the face of changing data, biasing the results in favor of old or new data. We have conducted 2-D and 3-D experiments comparing OLR-WA to a static model using the entire data set. The results show that for consistent data, OLR-WA and the static batch model perform similarly, and for varying data, the user can set OLR-WA to adapt more quickly or to resist change.
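The idea of biasing a model toward old or new data with user-defined weights can be sketched as follows. This is a deliberately simplified stand-in, not the OLR-WA algorithm: the abstract does not state how the two models are combined, so this example simply averages the slope and intercept of two least-squares lines. The helper names `fit_line` and `blend`, the weights, and the data are all assumptions.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept (1-D input)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def blend(old_model, new_model, w_old, w_new):
    """Weighted average of two coefficient tuples (assumed combination rule)."""
    total = w_old + w_new
    return tuple(
        (w_old * o + w_new * n) / total for o, n in zip(old_model, new_model)
    )


# Existing model fitted on earlier data, then a new mini-batch whose slope has drifted.
old_model = fit_line([0, 1, 2, 3], [1, 3, 5, 7])       # y = 2x + 1
new_model = fit_line([4, 5, 6, 7], [13, 16, 19, 22])   # y = 3x + 1

adapt_fast = blend(old_model, new_model, w_old=1, w_new=3)    # favour new data
resist_change = blend(old_model, new_model, w_old=3, w_new=1)  # favour old data
print(adapt_fast, resist_change)
```

After blending, the mini-batch can be discarded and only the coefficients kept, which is the storage saving the abstract refers to.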
