Mingzhe Sui

NR-DFERNet: Noise-Robust Network for Dynamic Facial Expression Recognition

Jun 10, 2022

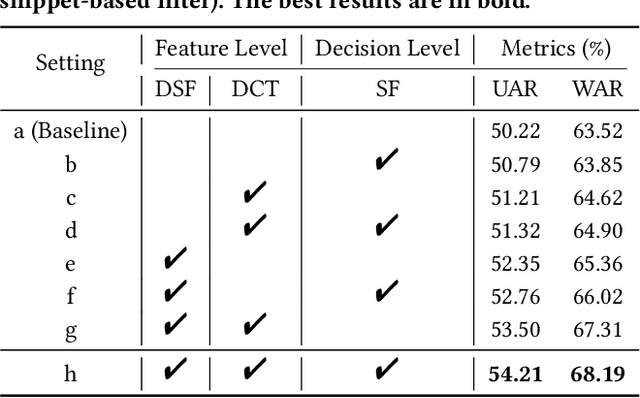

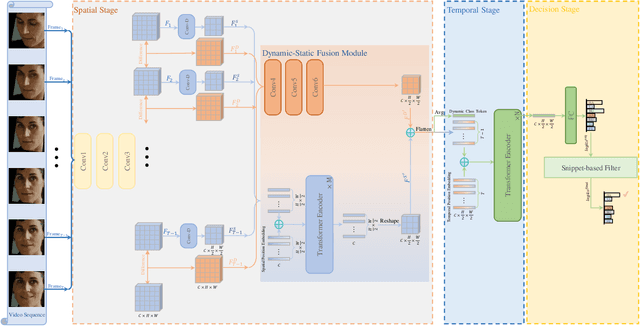

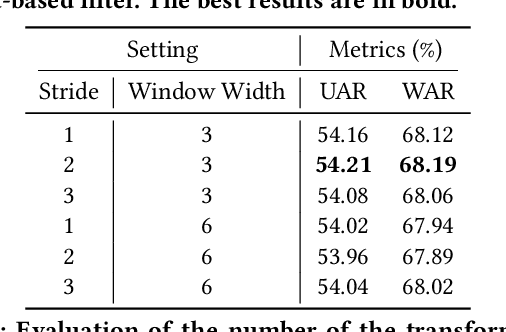

Abstract: Dynamic facial expression recognition (DFER) in the wild is an extremely challenging task because video sequences contain a large number of noisy frames. Previous works focus on extracting more discriminative features but neglect to distinguish the key frames from the noisy frames. To tackle this problem, we propose a noise-robust dynamic facial expression recognition network (NR-DFERNet), which can effectively reduce the interference of noisy frames on the DFER task. Specifically, at the spatial stage, we devise a dynamic-static fusion module (DSF) that introduces dynamic features to static features for learning more discriminative spatial features. To suppress the impact of target-irrelevant frames, we introduce a novel dynamic class token (DCT) for the transformer at the temporal stage. Moreover, we design a snippet-based filter (SF) at the decision stage to reduce the effect of too many neutral frames on non-neutral sequence classification. Extensive experimental results demonstrate that our NR-DFERNet outperforms the state-of-the-art methods on both the DFEW and AFEW benchmarks.

AFNet-M: Adaptive Fusion Network with Masks for 2D+3D Facial Expression Recognition

May 24, 2022

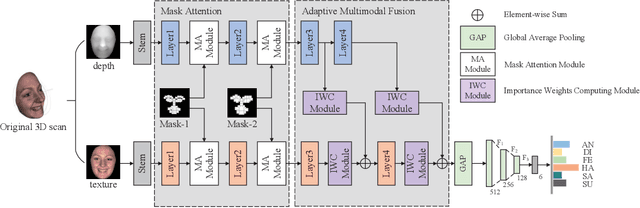

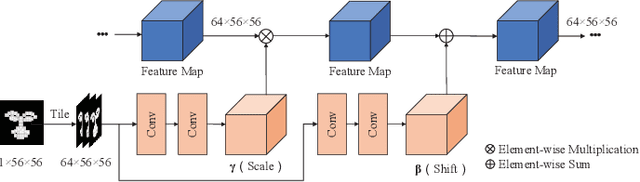

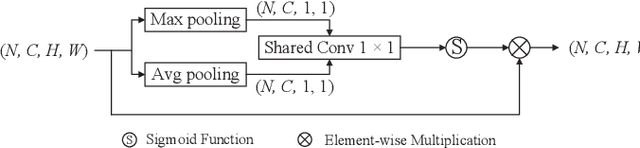

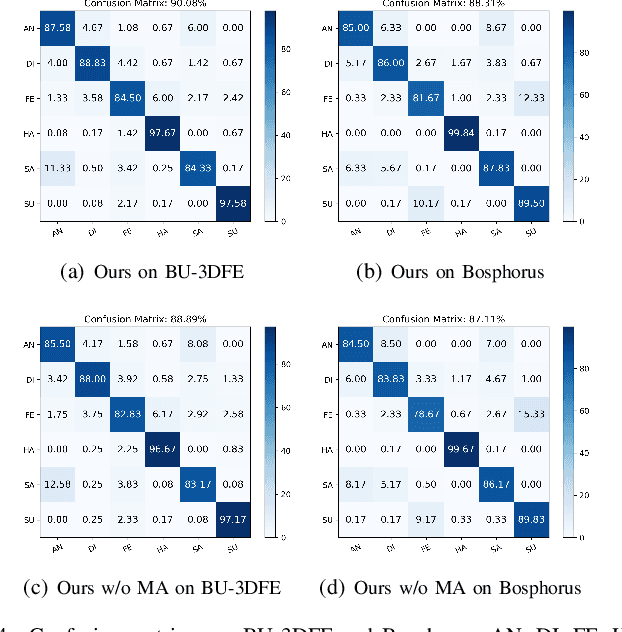

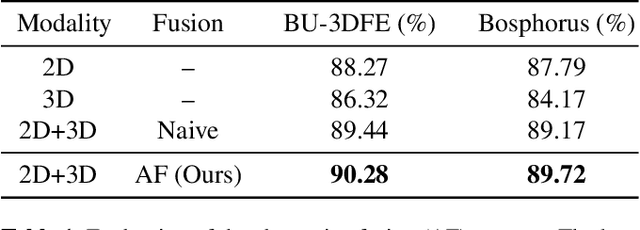

Abstract: 2D+3D facial expression recognition (FER) can effectively cope with illumination changes and pose variations by simultaneously merging 2D texture and more robust 3D depth information. Most deep learning-based approaches employ a simple fusion strategy that concatenates the multimodal features directly after fully-connected layers, without considering the different degrees of significance of each modality. Meanwhile, how to focus on both 2D and 3D local features in salient regions is still a great challenge. In this letter, we propose the adaptive fusion network with masks (AFNet-M) for 2D+3D FER. To enhance 2D and 3D local features, we take the masks annotating salient regions of the face as prior knowledge and design the mask attention module (MA), which can automatically learn two modulation vectors to adjust the feature maps. Moreover, we introduce a novel fusion strategy that can perform adaptive fusion at convolutional layers through the designed importance weights computing module (IWC). Experimental results demonstrate that our AFNet-M achieves state-of-the-art performance on the BU-3DFE and Bosphorus datasets and requires fewer parameters than other models.

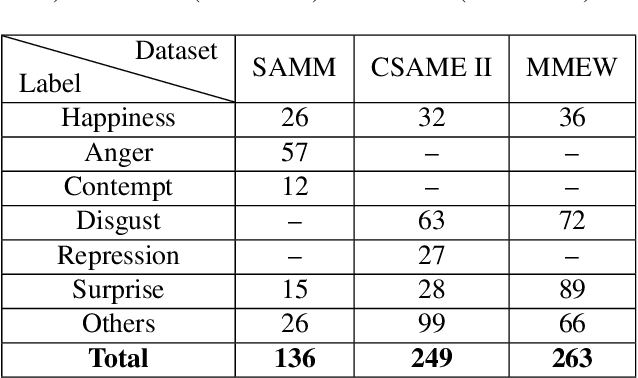

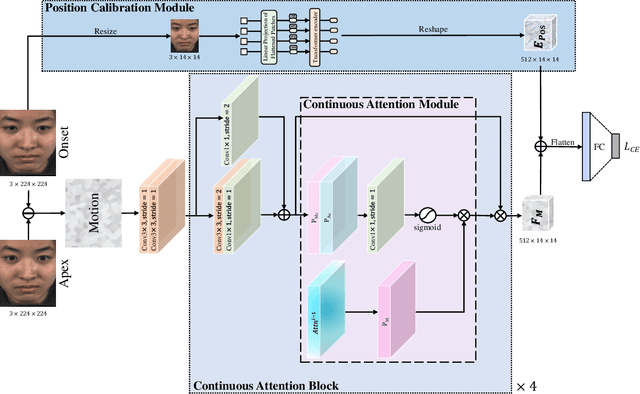

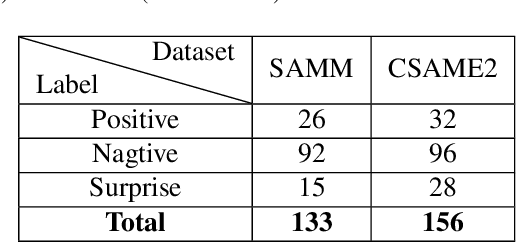

MMNet: Muscle motion-guided network for micro-expression recognition

Jan 14, 2022

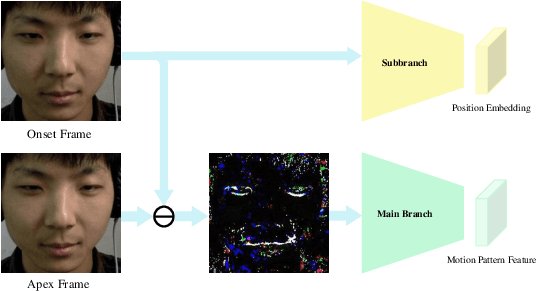

Abstract: Facial micro-expressions (MEs) are involuntary facial motions that reveal people's real feelings and play an important role in the early intervention of mental illness, national security, and many human-computer interaction systems. However, existing micro-expression datasets are limited and usually pose some challenges for training good classifiers. To model the subtle facial muscle motions, we propose a robust micro-expression recognition (MER) framework, namely muscle motion-guided network (MMNet). Specifically, a continuous attention (CA) block is introduced to focus on modeling local subtle muscle motion patterns with little identity information, which is different from most previous methods that directly extract features from complete video frames with much identity information. Besides, we design a position calibration (PC) module based on the vision transformer. By adding the position embeddings of the face generated by the PC module at the end of the two branches, we add position information to the facial muscle motion pattern features used for MER. Extensive experiments on three public micro-expression datasets demonstrate that our approach outperforms state-of-the-art methods by a large margin.

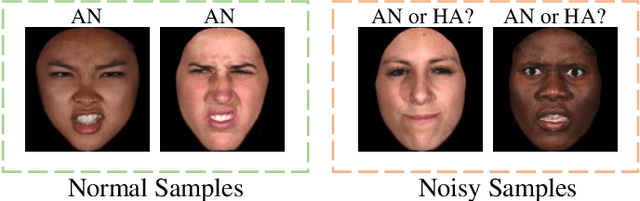

MFEViT: A Robust Lightweight Transformer-based Network for Multimodal 2D+3D Facial Expression Recognition

Sep 20, 2021

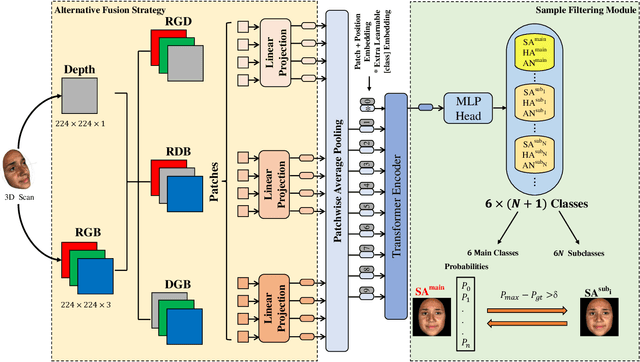

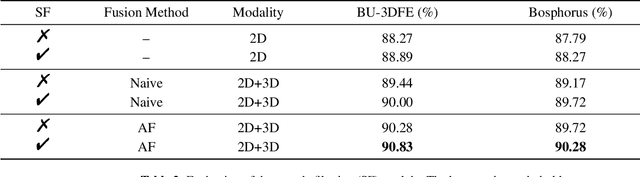

Abstract: Vision transformer (ViT) has been widely applied in many areas due to its self-attention mechanism, which helps obtain a global receptive field from the first layer. It even achieves surprising performance exceeding CNNs in some vision tasks. However, there is an issue when applying the vision transformer to 2D+3D facial expression recognition (FER): ViT training needs a massive amount of data, yet the number of samples in public 2D+3D FER datasets is far from sufficient for evaluation. How to utilize a ViT pre-trained on RGB images to handle 2D+3D data becomes a challenge. To solve this problem, we propose a robust lightweight pure transformer-based network for multimodal 2D+3D FER, namely MFEViT. To narrow the gap between RGB and multimodal data, we devise an alternative fusion strategy, which replaces each of the three channels of an RGB image with the depth-map channel and fuses them before feeding them into the transformer encoder. Moreover, the designed sample filtering module adds several subclasses for each expression and moves the noisy samples to their corresponding subclasses, thus eliminating their disturbance on the network during the training stage. Extensive experiments demonstrate that our MFEViT outperforms state-of-the-art approaches with an accuracy of 90.83% on BU-3DFE and 90.28% on Bosphorus. The proposed MFEViT is also a lightweight model, requiring far fewer parameters than multi-branch CNNs. To the best of our knowledge, this is the first work to introduce the vision transformer into multimodal 2D+3D FER. The source code of our MFEViT will be publicly available online.
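The channel-replacement idea in the MFEViT abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `depth_substituted_variants` and `fuse_by_mean` are hypothetical, and because the abstract does not say how the three depth-substituted images are fused, the element-wise mean used here is only a placeholder assumption.

```python
import numpy as np


def depth_substituted_variants(rgb, depth):
    """Build three images, each with one RGB channel replaced by the depth map.

    rgb:   array of shape (H, W, 3)
    depth: array of shape (H, W), assumed to be scaled like the RGB channels
    Returns an array of shape (3, H, W, 3).
    """
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("rgb must have shape (H, W, 3)")
    if depth.shape != rgb.shape[:2]:
        raise ValueError("depth must have shape (H, W)")

    variants = []
    for channel in range(3):
        variant = rgb.astype(np.float32)  # astype returns a fresh copy
        variant[..., channel] = depth     # swap one colour channel for depth
        variants.append(variant)
    return np.stack(variants)


def fuse_by_mean(variants):
    """Placeholder fusion (assumption): average the three variants."""
    return variants.mean(axis=0)


if __name__ == "__main__":
    rgb = np.zeros((2, 2, 3), dtype=np.float32)
    depth = np.ones((2, 2), dtype=np.float32)
    variants = depth_substituted_variants(rgb, depth)
    fused = fuse_by_mean(variants)
    print(variants.shape, fused.shape)
```

The fused result keeps the three-channel layout expected by a ViT pre-trained on RGB images, which is the motivation the abstract gives for this kind of input construction.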

MViT: Mask Vision Transformer for Facial Expression Recognition in the wild

Jun 08, 2021

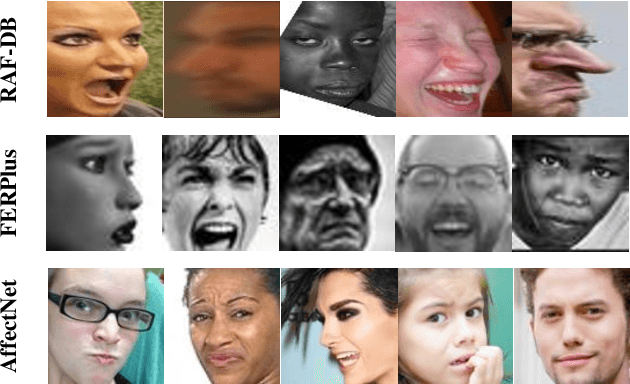

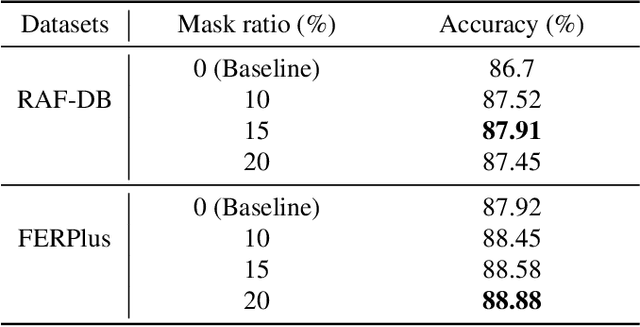

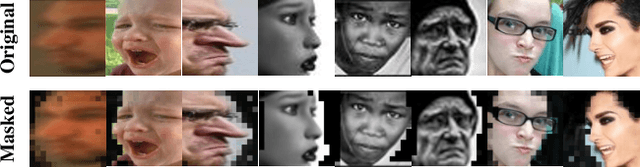

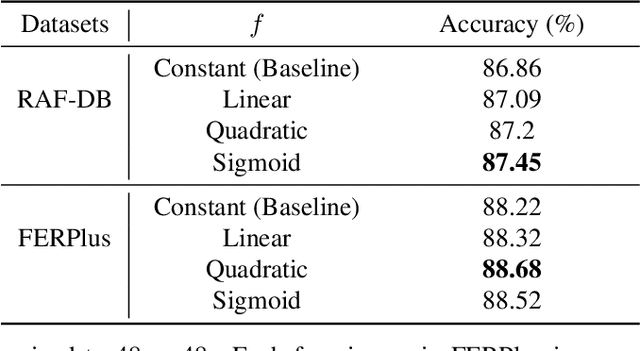

Abstract: Facial Expression Recognition (FER) in the wild is an extremely challenging task in computer vision due to varying backgrounds, low-quality facial images, and the subjectiveness of annotators. These uncertainties make it difficult for neural networks to learn robust features on limited-scale datasets. Moreover, the networks can be easily disturbed by the above factors and make incorrect decisions. Recently, the vision transformer (ViT) and data-efficient image transformers (DeiT) have shown strong performance in traditional classification tasks. The self-attention mechanism gives transformers a global receptive field in the first layer, which dramatically enhances the feature extraction capability. In this work, we propose a novel pure transformer-based mask vision transformer (MViT) for FER in the wild, which consists of two modules: a transformer-based mask generation network (MGN) to generate a mask that can filter out complex backgrounds and occlusion of face images, and a dynamic relabeling module to rectify incorrect labels in FER datasets in the wild. Extensive experimental results demonstrate that our MViT outperforms state-of-the-art methods on RAF-DB with 88.62%, FERPlus with 89.22%, and AffectNet-7 with 64.57%, and achieves a comparable result on AffectNet-8 with 61.40%.
