Michelle Zhu

Conversion and Implementation of State-of-the-Art Deep Learning Algorithms for the Classification of Diabetic Retinopathy

Oct 07, 2020

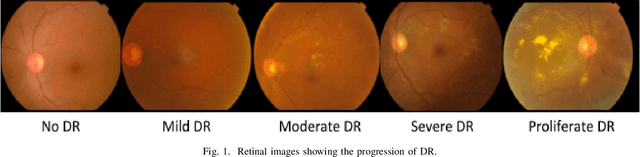

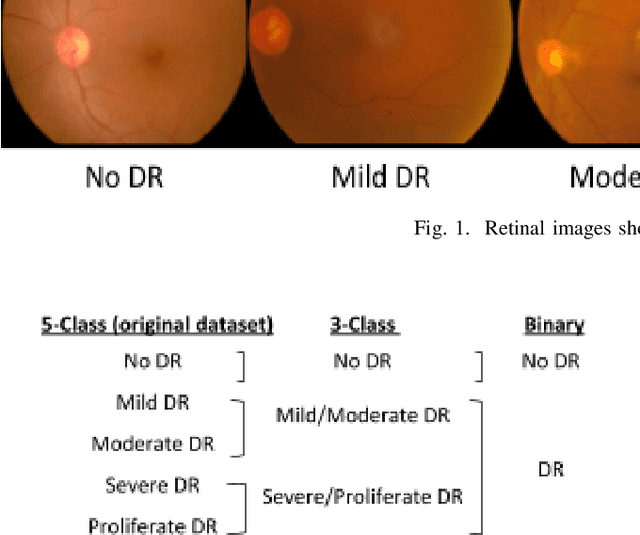

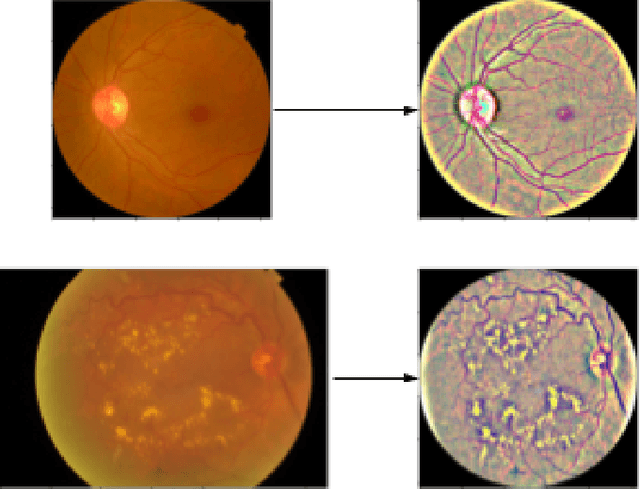

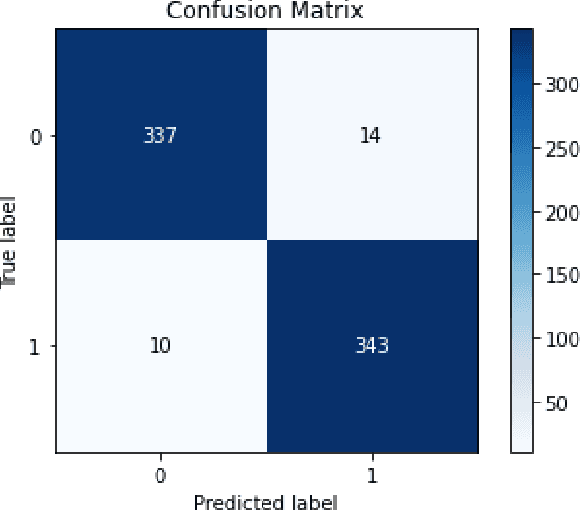

Abstract: Diabetic retinopathy (DR) is a retinal microvascular condition that emerges in diabetic patients. DR will continue to be a leading cause of blindness worldwide, with a predicted 191.0 million diagnosed patients globally in 2030. Microaneurysms, hemorrhages, exudates, and cotton wool spots are common signs of DR, but they can be small and hard for the human eye to detect. Early detection of DR is crucial for effective clinical treatment. Existing image classification methods spend considerable time on feature extraction and selection and are limited in their performance. Convolutional Neural Networks (CNNs), an emerging deep learning (DL) method, have proven their potential in image classification tasks. In this paper, comprehensive experimental studies of state-of-the-art CNNs for the detection and classification of DR are conducted in order to determine the top-performing classifiers for the task. Five CNN classifiers, namely Inception-V3, VGG19, VGG16, ResNet50, and InceptionResNetV2, are evaluated through experiments. They categorize medical images into five different classes based on DR severity. Data augmentation and transfer learning techniques are applied because annotated medical images are limited and imbalanced. Experimental results indicate that the ResNet50 classifier performs best for binary classification and that the InceptionResNetV2 classifier performs best for multi-class DR classification.
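The abstract mentions both a five-class and a binary task, along with data augmentation, but does not spell out the label encoding or the augmentation operations. The following is a minimal sketch under stated assumptions: severity grades are taken to be integers 0 to 4 with 0 meaning "no DR", and augmentation is illustrated with simple flips on an image stored as a nested list. The function names are hypothetical and are not taken from the paper.

```python
def to_binary_label(grade: int) -> int:
    """Collapse a five-level severity grade into a binary label.

    Assumption (not stated in the abstract): grades run 0..4 and
    grade 0 means "no DR", so any grade above 0 maps to 1.
    """
    if not 0 <= grade <= 4:
        raise ValueError(f"grade must be in 0..4, got {grade}")
    return 0 if grade == 0 else 1


def flip_horizontal(image):
    """Mirror each row of a 2-D image (list of rows) left to right."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Reverse the row order of a 2-D image (top to bottom)."""
    return [row[:] for row in image[::-1]]


def augment(image):
    """Return the original image plus two flipped copies.

    Flips are only one possible augmentation; the operations used
    in the paper are not specified in the abstract.
    """
    return [image, flip_horizontal(image), flip_vertical(image)]
```

Applying `augment` only to under-represented grades would be one way to reduce class imbalance, although whether the authors balanced classes this way is not stated.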

Instance-based Deep Transfer Learning

Sep 08, 2018

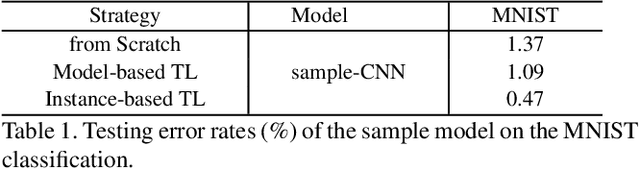

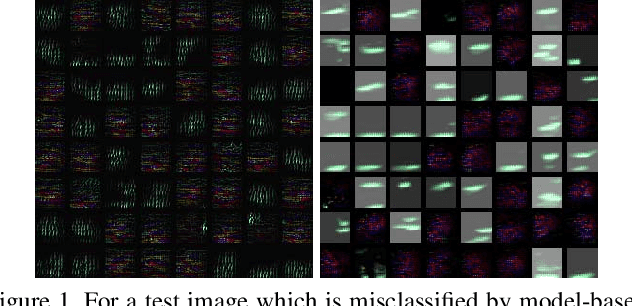

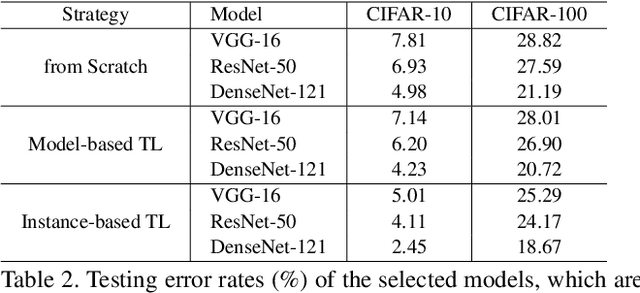

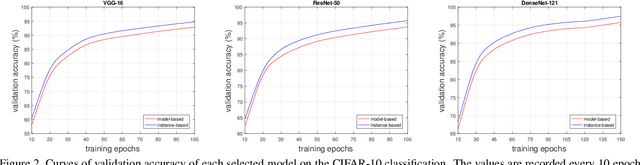

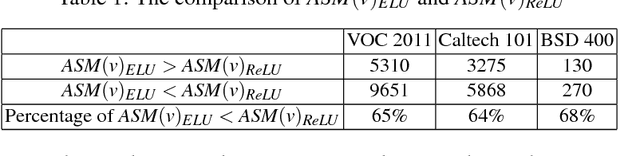

Abstract: Deep transfer learning has attracted significant research interest. It makes use of pre-trained models that are learned from a source domain and applies these models to tasks in a target domain. Model-based deep transfer learning is arguably the most frequently used method. However, very little work has been devoted to enhancing deep transfer learning by focusing on the influence of data. In this work, we propose an instance-based approach to improve deep transfer learning in the target domain. Specifically, we choose a pre-trained model that is learned from a source domain and use this model to estimate the influence of each training sample in the target domain. We then optimize the training data of the target domain by removing the training samples that would lower the performance of the pre-trained model. Next, we either fine-tune the pre-trained model with the optimized training data in the target domain, or build a new model that is partially initialized from the pre-trained model and fine-tune it with the optimized training data in the target domain. Using this approach, transfer learning can help deep learning models learn more useful features. Extensive experiments demonstrate the effectiveness of our approach in further boosting deep learning models for typical high-level computer vision tasks, such as image classification.
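The data-optimization step described above can be sketched as a simple filter. This is only an illustration under assumptions: the abstract does not say how influence is estimated, so the scores are treated as precomputed inputs, and the sign convention (a negative score means the sample is expected to hurt the pre-trained model) and the `threshold` parameter are hypothetical.

```python
def filter_training_set(samples, influence_scores, threshold=0.0):
    """Keep only the samples whose influence score is at least `threshold`.

    Assumption: a higher score means the sample is expected to help the
    pre-trained model on the target task, and a score below `threshold`
    marks a sample for removal. How the scores are computed is outside
    the scope of this sketch.
    """
    if len(samples) != len(influence_scores):
        raise ValueError("samples and influence_scores must have equal length")
    return [s for s, score in zip(samples, influence_scores) if score >= threshold]


# Usage: the second sample has a negative score and is dropped.
kept = filter_training_set(["img_a", "img_b", "img_c"], [0.2, -0.1, 0.0])
```

The filtered set would then be used for fine-tuning in place of the full target-domain training set.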

An ELU Network with Total Variation for Image Denoising

Aug 14, 2017

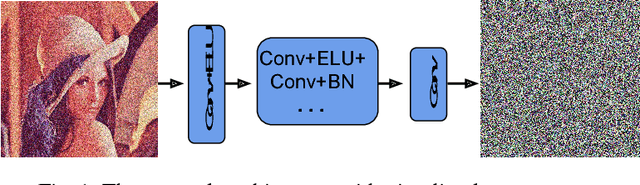

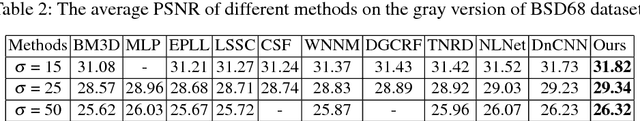

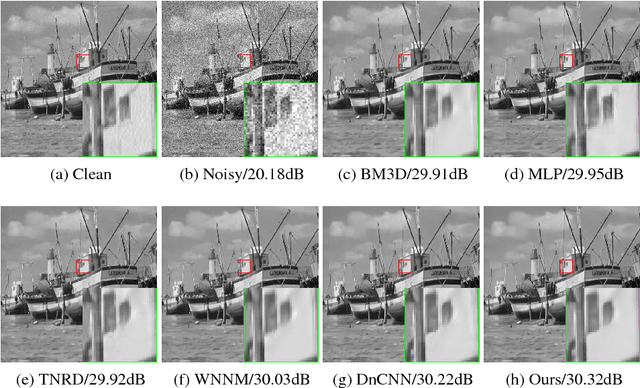

Abstract: In this paper, we propose a novel convolutional neural network (CNN) for image denoising that uses the exponential linear unit (ELU) as the activation function. We investigate its suitability by analyzing ELU's connection with the trainable nonlinear reaction diffusion (TNRD) model and with residual denoising. In addition, batch normalization (BN) is indispensable for residual denoising and for convergence. However, directly stacking BN and ELU degrades the performance of the CNN. To mitigate this issue, we design an innovative combination of activation layer and normalization layer to exploit the ELU network, and discuss the corresponding rationale. Moreover, inspired by the fact that minimizing total variation (TV) can be applied to image denoising, we propose a TV-regularized L2 loss to evaluate the training effect during the iterations. Finally, we conduct extensive experiments showing that our model outperforms some recent and popular approaches on Gaussian denoising with specific or randomized noise levels for both gray and color images.
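For readers unfamiliar with the two ingredients named above, here is a minimal sketch of the ELU activation and of an L2 loss with a total variation penalty. The ELU definition is the standard one. The loss is an assumption-laden illustration rather than the paper's exact formulation: it uses the anisotropic form of TV, applies it to the denoised estimate `pred`, and weights it by a hypothetical coefficient `lam`.

```python
import math


def elu(x, alpha=1.0):
    """Standard ELU: identity for x > 0, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def total_variation(img):
    """Anisotropic TV of a 2-D image (list of rows): sum of absolute
    differences between vertically and horizontally adjacent pixels."""
    h, w = len(img), len(img[0])
    tv = 0.0
    for i in range(h):
        for j in range(w):
            if i + 1 < h:
                tv += abs(img[i + 1][j] - img[i][j])
            if j + 1 < w:
                tv += abs(img[i][j + 1] - img[i][j])
    return tv


def tv_l2_loss(pred, target, lam=0.01):
    """Mean squared error between pred and target plus lam * TV(pred).

    Assumptions: `pred` is the denoised image estimate, and `lam` is a
    hypothetical weight; neither detail is given in the abstract.
    """
    h, w = len(pred), len(pred[0])
    sq_err = sum(
        (pred[i][j] - target[i][j]) ** 2 for i in range(h) for j in range(w)
    )
    return sq_err / (h * w) + lam * total_variation(pred)
```

The TV term penalizes high-frequency oscillation in the estimate, which is why it acts as a smoothness prior alongside the pixel-wise L2 term.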
