Michelle Ho

Model Identification Adaptive Control with $\rho$-POMDP Planning

May 14, 2025

Abstract: Accurate system modeling is crucial for safe, effective control, as misidentification can lead to accumulated errors, especially under partial observability. We address this problem by formulating informative input design (IID) and model identification adaptive control (MIAC) as belief space planning problems, modeled as partially observable Markov decision processes with belief-dependent rewards ($\rho$-POMDPs). We treat system parameters as hidden state variables that must be localized while simultaneously controlling the system. We solve this problem with an adapted belief-space iterative Linear Quadratic Regulator (BiLQR). We demonstrate it on fully and partially observable tasks for cart-pole and steady aircraft flight domains. Our method outperforms baselines such as regression, filtering, and local optimal control methods, even under instantaneous disturbances to system parameters.

Comparing the Complexity of Robotic Tasks

Feb 20, 2022

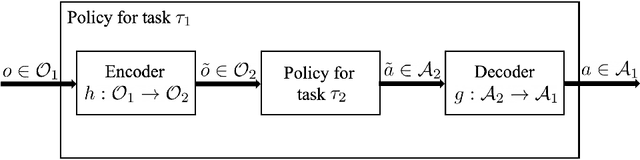

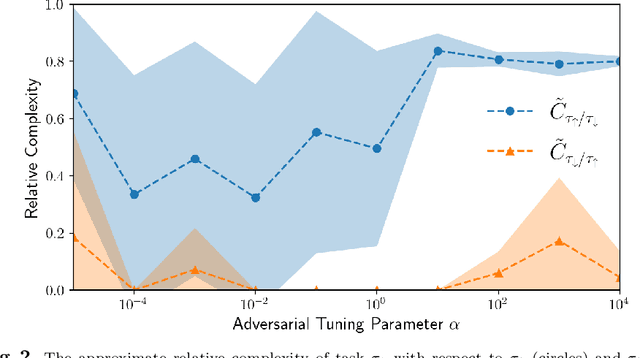

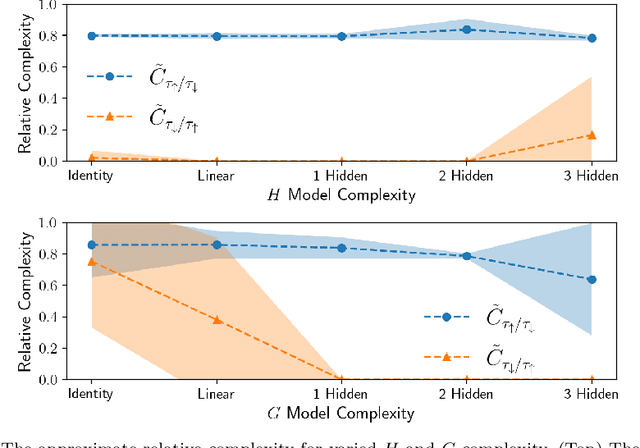

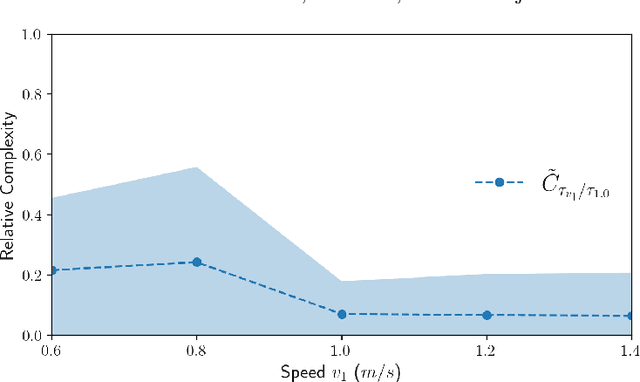

Abstract: We are motivated by the problem of comparing the complexity of one robotic task relative to another. To this end, we define a notion of reduction that formalizes the following intuition: Task 1 reduces to Task 2 if we can efficiently transform any policy that solves Task 2 into a policy that solves Task 1. We further define a quantitative measure of the relative complexity between any two tasks for a given robot. We prove useful properties of our notion of reduction (e.g., reflexivity, transitivity, and antisymmetry) and relative complexity measure (e.g., nonnegativity and monotonicity). In addition, we propose practical algorithms for estimating the relative complexity measure. We illustrate our framework for comparing robotic tasks using (i) examples where one can analytically establish reductions, and (ii) reinforcement learning examples where the proposed algorithm can estimate the relative complexity between tasks.
