Michele Lombardi

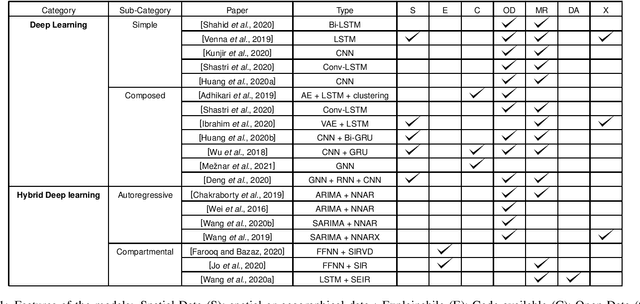

Deep Learning for Virus-Spreading Forecasting: a Brief Survey

Mar 03, 2021

Abstract:The advent of the coronavirus pandemic has sparked the interest in predictive models capable of forecasting virus-spreading, especially for boosting and supporting decision-making processes. In this paper, we will outline the main Deep Learning approaches aimed at predicting the spreading of a disease in space and time. The aim is to show the emerging trends in this area of research and provide a general perspective on the possible strategies to approach this problem. In doing so, we will mainly focus on two macro-categories: classical Deep Learning approaches and Hybrid models. Finally, we will discuss the main advantages and disadvantages of different models, and underline the most promising development directions to improve these approaches.

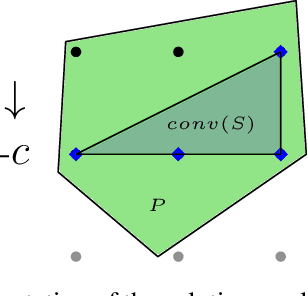

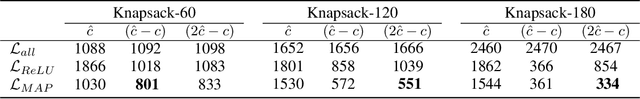

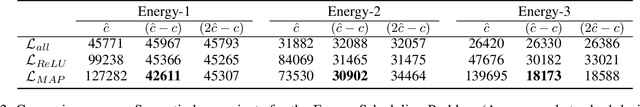

Discrete solution pools and noise-contrastive estimation for predict-and-optimize

Nov 10, 2020

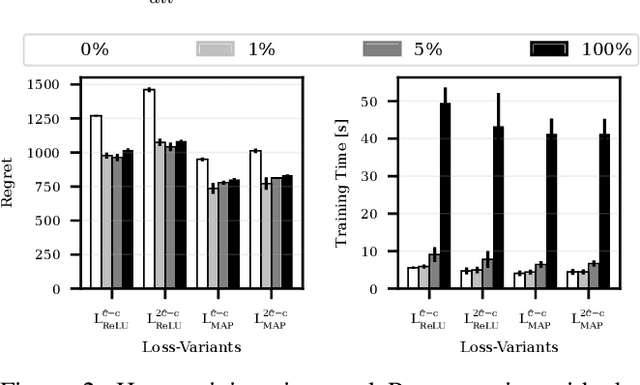

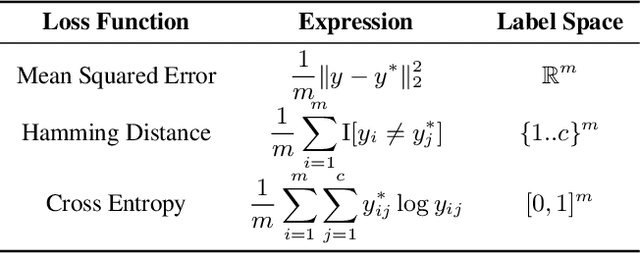

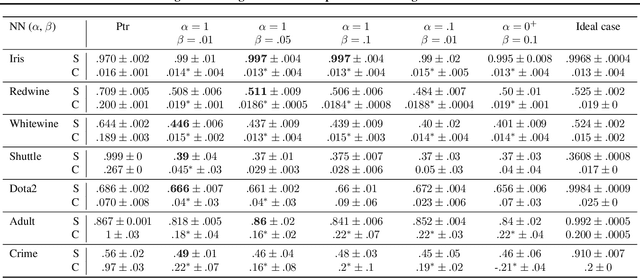

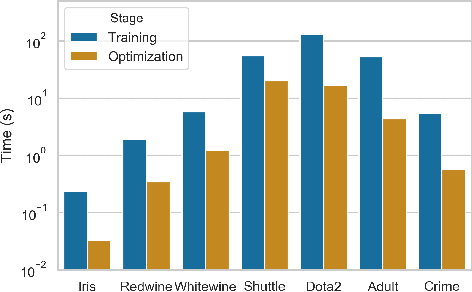

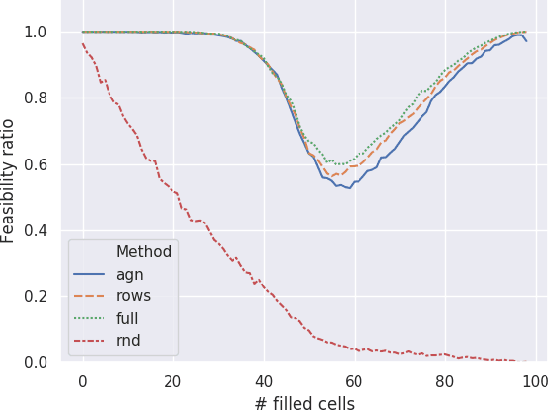

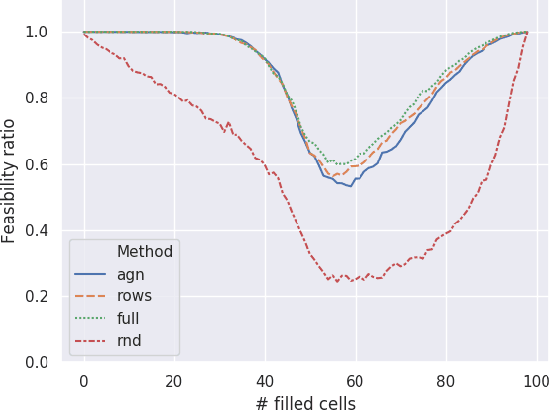

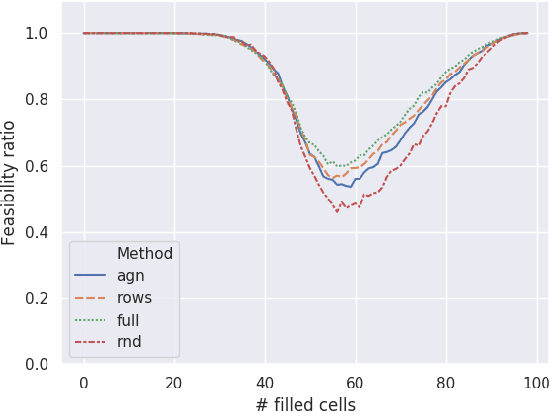

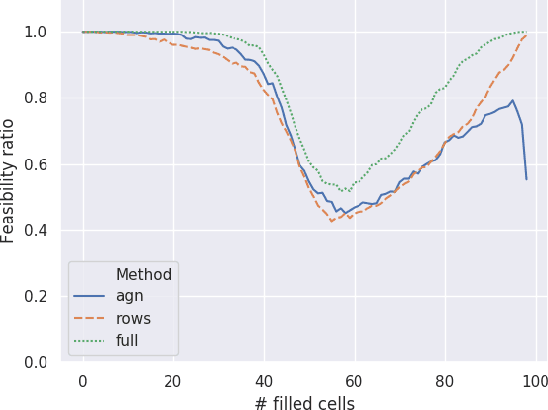

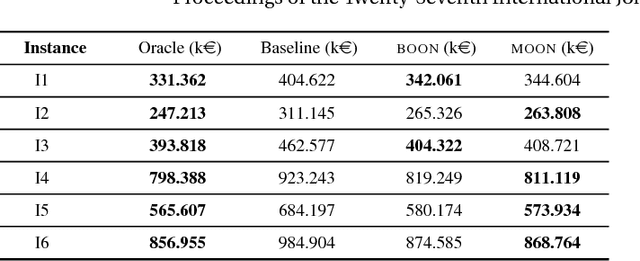

Abstract:Numerous real-life decision-making processes involve solving a combinatorial optimization problem with uncertain input that can be estimated from historic data. There is a growing interest in decision-focused learning methods, where the loss function used for learning to predict the uncertain input uses the outcome of solving the combinatorial problem over a set of predictions. Different surrogate loss functions have been identified, often using a continuous approximation of the combinatorial problem. However, a key bottleneck is that to compute the loss, one has to solve the combinatorial optimisation problem for each training instance in each epoch, which is computationally expensive even in the case of continuous approximations. We propose a different solver-agnostic method for decision-focused learning, namely by considering a pool of feasible solutions as a discrete approximation of the full combinatorial problem. Solving is now trivial through a single pass over the solution pool. We design several variants of a noise-contrastive loss over the solution pool, which we substantiate theoretically and empirically. Furthermore, we show that by dynamically re-solving only a fraction of the training instances each epoch, our method performs on par with the state of the art, whilst drastically reducing the time spent solving, hence increasing the feasibility of predict-and-optimize for larger problems.
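The idea of replacing a solver call with a single pass over a pool of feasible solutions can be sketched as follows. This is only an illustration under stated assumptions: the linear objective, the tiny hand-written pool, and the particular contrastive term (a mean objective gap between the true optimum and the other pooled solutions) are hypothetical choices made here, not necessarily the loss variants designed in the paper.

```python
from typing import List, Sequence

Solution = Sequence[int]  # e.g. a 0/1 selection vector (assumed encoding)


def objective(solution: Solution, costs: Sequence[float]) -> float:
    """Linear objective f(v, c) = c . v (assumed form for this sketch)."""
    return sum(c * v for c, v in zip(costs, solution))


def best_in_pool(pool: List[Solution], costs: Sequence[float]) -> Solution:
    """'Solve' the discrete approximation: one pass over the pool, keep the minimizer."""
    return min(pool, key=lambda s: objective(s, costs))


def contrastive_loss(pool: List[Solution], true_best: Solution,
                     predicted_costs: Sequence[float]) -> float:
    """Illustrative contrastive term: mean gap, under the predicted costs,
    between the true optimum and every other pooled solution.
    Lower values mean the predictions make the true optimum look better.
    Assumes the pool holds at least one solution besides true_best."""
    others = [s for s in pool if tuple(s) != tuple(true_best)]
    f_star = objective(true_best, predicted_costs)
    return sum(f_star - objective(s, predicted_costs) for s in others) / len(others)


# Hypothetical pool: pick exactly one of three items.
pool = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]
true_best = best_in_pool(pool, [3.0, 1.0, 2.0])
good = contrastive_loss(pool, true_best, [2.5, 0.5, 2.0])
bad = contrastive_loss(pool, true_best, [1.0, 3.0, 2.0])
```

Here `good` is lower than `bad`, because the first prediction ranks the true optimum first while the second ranks it last. No solver is called at any point; the pool lookup is a plain `min`.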

An Analysis of Regularized Approaches for Constrained Machine Learning

May 20, 2020

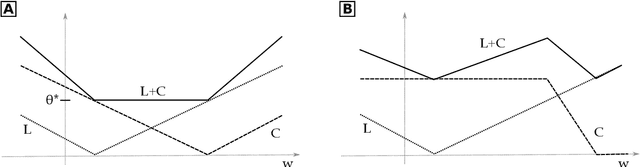

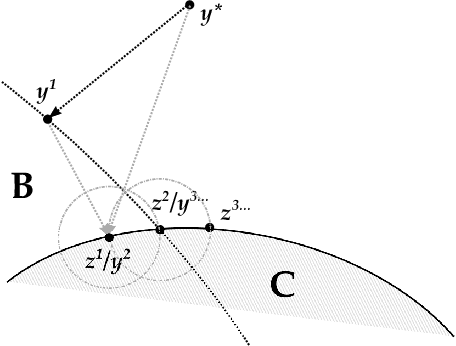

Abstract:Regularization-based approaches for injecting constraints in Machine Learning (ML) were introduced to improve a predictive model via expert knowledge. We tackle the issue of finding the right balance between the loss (the accuracy of the learner) and the regularization term (the degree of constraint satisfaction). The key result of this paper is the formal demonstration that this type of approach cannot guarantee finding all optimal solutions. In particular, in the non-convex case there might be optima for the constrained problem that do not correspond to any multiplier value.
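A toy example may help make the last claim concrete. The sketch below is a construction made up for illustration, not the paper's proof: it assumes three hypothetical candidate models, each summarized by a (loss, violation) pair, a constrained problem that bounds the violation by a tolerance, and a regularized problem that minimizes loss plus multiplier times violation.

```python
# Hypothetical candidates, each summarized as (loss, constraint violation).
CANDIDATES = {"A": (0.0, 2.0), "B": (1.5, 1.0), "C": (2.0, 0.0)}


def constrained_optimum(candidates, max_violation):
    """Minimum-loss candidate among those with violation <= max_violation."""
    feasible = {k: v for k, v in candidates.items() if v[1] <= max_violation}
    return min(feasible, key=lambda k: feasible[k][0])


def regularized_optimum(candidates, multiplier):
    """Candidate minimizing loss + multiplier * violation."""
    return min(candidates, key=lambda k: candidates[k][0] + multiplier * candidates[k][1])


# Sweep the multiplier over [0, 10] in steps of 0.01 and record every winner.
winners = {regularized_optimum(CANDIDATES, step / 100) for step in range(1001)}
target = constrained_optimum(CANDIDATES, max_violation=1.0)
```

With a tolerance of 1.0 the constrained optimum is `B`, yet `B` never appears in `winners`: it would need a multiplier of at least 1.5 to beat `A` and at most 0.5 to beat `C`, which is impossible. `B` sits in a non-convex part of the loss/violation trade-off, so no multiplier value recovers it.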

Teaching the Old Dog New Tricks: Supervised Learning with Constraints

Feb 25, 2020

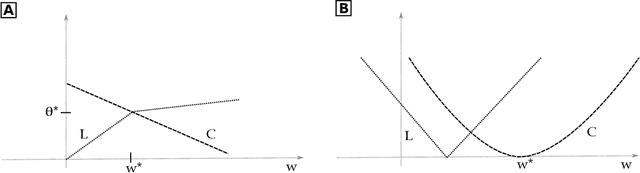

Abstract:Methods for taking into account external knowledge in Machine Learning models have the potential to address outstanding issues in data-driven AI methods, such as improving safety and fairness, and can simplify training in the presence of scarce data. We propose a simple, but effective, method for injecting constraints at training time in supervised learning, based on decomposition and bi-level optimization: a master step is in charge of enforcing the constraints, while a learner step takes care of training the model. The process leads to approximate constraint satisfaction. The method is applicable to any ML approach for which the concept of label (or target) is well defined (most regression and classification scenarios), and allows existing training algorithms to be reused with no modifications. We require no assumption on the constraints, although their properties affect the shape and complexity of the master problem. Convergence guarantees are hard to provide, but we found that the approach performs well on ML tasks with fairness constraints and on classical datasets with synthetic constraints.
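The alternation between a master step and a learner step can be sketched on a very small problem. Everything specific below is an assumption made for illustration: the learner is a least-squares line, the constraint is a simple upper bound `cap` on the targets, and the master step blends labels and predictions with a hypothetical weight `alpha` before projecting onto the bound. The paper's actual master problem is more general.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Learner step: ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def master_step(labels: List[float], preds: List[float],
                cap: float, alpha: float) -> List[float]:
    """Master step: adjusted targets that satisfy target <= cap while staying
    close to both the labels (weight alpha) and the current predictions."""
    return [min(cap, (alpha * y + p) / (alpha + 1.0)) for y, p in zip(labels, preds)]


def train_with_constraint(xs: List[float], ys: List[float], cap: float,
                          alpha: float = 1.0, iterations: int = 10) -> Tuple[float, float]:
    slope, intercept = fit_line(xs, ys)  # unconstrained starting point
    for _ in range(iterations):
        preds = [slope * x + intercept for x in xs]
        targets = master_step(ys, preds, cap, alpha)  # enforce the constraint
        slope, intercept = fit_line(xs, targets)      # retrain on adjusted targets
    return slope, intercept


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [0.0, 1.0, 2.0, 3.0, 4.0]
slope, intercept = train_with_constraint(xs, ys, cap=2.0)
```

The learner is an unmodified least-squares fit; only its targets change between iterations. On this data the largest prediction drops well below the unconstrained value of 4.0 but still exceeds the cap slightly, which matches the abstract's remark that constraint satisfaction is approximate.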

Injecting Domain Knowledge in Neural Networks: a Controlled Experiment on a Constrained Problem

Feb 25, 2020

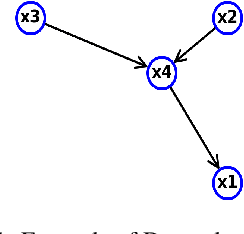

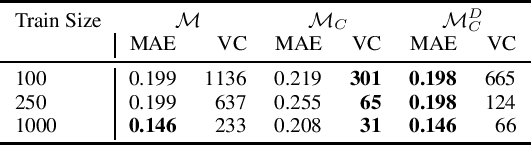

Abstract:Given enough data, Deep Neural Networks (DNNs) are capable of learning complex input-output relations with high accuracy. In several domains, however, data is scarce or expensive to retrieve, while a substantial amount of expert knowledge is available. It seems reasonable that if we can inject this additional information in the DNN, we could ease the learning process. One such case is that of Constraint Problems, for which declarative approaches exist and pure ML solutions have obtained mixed success. Using a classical constrained problem as a case study, we perform controlled experiments to probe the impact of progressively adding domain and empirical knowledge in the DNN. Our results are very encouraging, showing that (at least in our setup) embedding domain knowledge at training time can have a considerable effect and that a small amount of empirical knowledge is sufficient to obtain practically useful results.

Injective Domain Knowledge in Neural Networks for Transprecision Computing

Feb 24, 2020

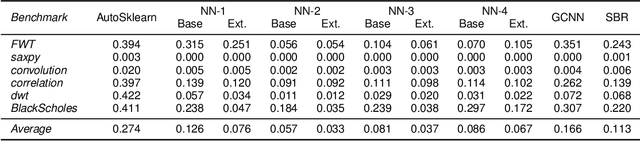

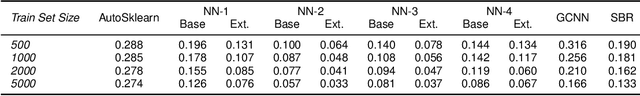

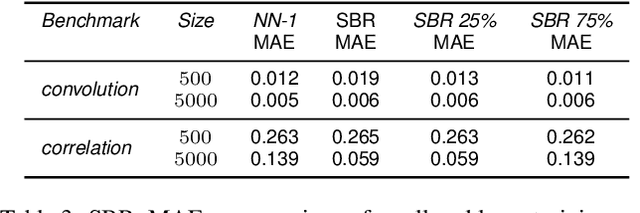

Abstract:Machine Learning (ML) models are very effective in many learning tasks, due to the capability to extract meaningful information from large data sets. Nevertheless, there are learning problems that cannot be easily solved relying on pure data, e.g. scarce data or very complex functions to be approximated. Fortunately, in many contexts domain knowledge is explicitly available and can be used to train better ML models. This paper studies the improvements that can be obtained by integrating prior knowledge when dealing with a non-trivial learning task, namely precision tuning of transprecision computing applications. The domain information is injected in the ML models in different ways: I) additional features, II) ad-hoc graph-based network topology, III) regularization schemes. The results clearly show that ML models exploiting problem-specific information outperform the purely data-driven ones, with an average accuracy improvement around 38%.

A Lagrangian Dual Framework for Deep Neural Networks with Constraints

Jan 26, 2020

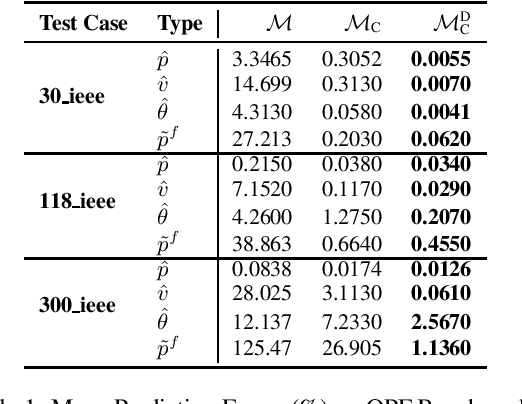

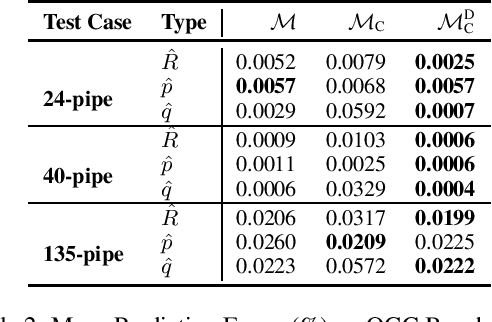

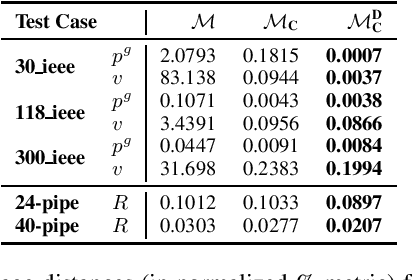

Abstract:A variety of computationally challenging constrained optimization problems in several engineering disciplines are solved repeatedly under different scenarios. In many cases, they would benefit from fast and accurate approximations, either to support real-time operations or large-scale simulation studies. This paper aims at exploring how to leverage the substantial data being accumulated by repeatedly solving instances of these applications over time. It introduces a deep learning model that exploits Lagrangian duality to encourage the satisfaction of hard constraints. The proposed method is evaluated on a collection of realistic energy networks, by enforcing non-discriminatory decisions on a variety of datasets, and a transprecision computing application. The results illustrate the effectiveness of the proposed method that dramatically decreases constraint violations by the predictors and, in some applications, increases the prediction accuracy.
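The general mechanism of encouraging constraint satisfaction through Lagrangian duality can be sketched with a single scalar parameter. This is a minimal sketch under assumptions, not the paper's model: the quadratic loss `(w - 3)^2` stands in for a prediction loss, the constraint `w <= bound` is hypothetical, and the step sizes and iteration counts are arbitrary. The primal step minimizes the loss plus the multiplier times the violation; the dual step raises the multiplier in proportion to the remaining violation.

```python
def violation(w: float, bound: float) -> float:
    """Degree of violation of the constraint w <= bound."""
    return max(0.0, w - bound)


def train_lagrangian_dual(bound: float = 1.0, primal_lr: float = 0.1,
                          dual_step: float = 0.5, outer_iters: int = 60,
                          inner_iters: int = 50):
    w, multiplier = 0.0, 0.0
    for _ in range(outer_iters):
        # Primal step: gradient descent on (w - 3)^2 + multiplier * violation(w).
        for _ in range(inner_iters):
            grad = 2.0 * (w - 3.0) + (multiplier if w > bound else 0.0)
            w -= primal_lr * grad
        # Dual step: subgradient ascent on the multiplier.
        multiplier += dual_step * violation(w, bound)
    return w, multiplier


w, multiplier = train_lagrangian_dual()
```

With these settings `w` ends up very close to the bound of 1.0 and the multiplier close to 4.0, the value at which the penalty gradient exactly balances the loss gradient at the constraint boundary. Unlike a fixed penalty weight, the multiplier is learned during training.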

Anomaly Detection using Autoencoders in High Performance Computing Systems

Nov 13, 2018

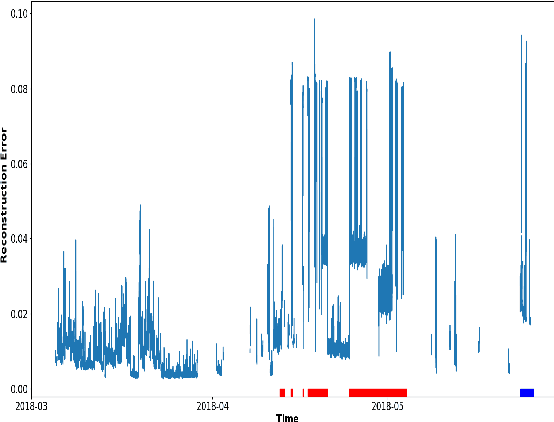

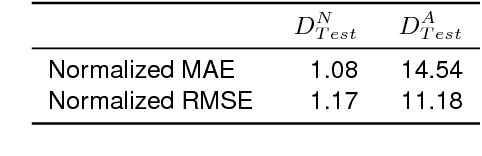

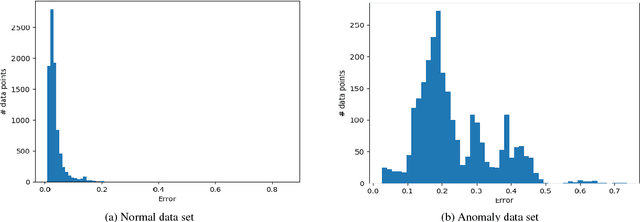

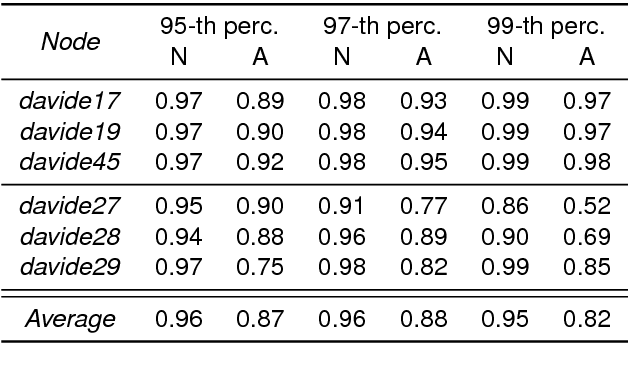

Abstract:Anomaly detection in supercomputers is a very difficult problem due to the large scale of the systems and the high number of components. The current state of the art for automated anomaly detection employs Machine Learning methods or statistical regression models in a supervised fashion, meaning that the detection tool is trained to distinguish among a fixed set of behaviour classes (healthy and unhealthy states). We propose a novel approach for anomaly detection in High Performance Computing systems based on a Machine (Deep) Learning technique, namely a type of neural network called autoencoder. The key idea is to train a set of autoencoders to learn the normal (healthy) behaviour of the supercomputer nodes and, after training, use them to identify abnormal conditions. This is different from previous approaches, which were based on learning the abnormal conditions, for which there are much smaller datasets (since they are very hard to identify to begin with). We test our approach on a real supercomputer equipped with a fine-grained, scalable monitoring infrastructure that can provide a large amount of data to characterize the system behaviour. The results are extremely promising: after the training phase to learn the normal system behaviour, our method is capable of detecting anomalies that have never been seen before with very good accuracy (values ranging between 88% and 96%).

Boosting Combinatorial Problem Modeling with Machine Learning

Jul 15, 2018

Abstract:In the past few years, the area of Machine Learning (ML) has witnessed tremendous advancements, becoming a pervasive technology in a wide range of applications. One area that can significantly benefit from the use of ML is Combinatorial Optimization. The three pillars of constraint satisfaction and optimization problem solving, i.e., modeling, search, and optimization, can exploit ML techniques to boost their accuracy, efficiency and effectiveness. In this survey we focus on the modeling component, whose effectiveness is crucial for solving the problem. The modeling activity has been traditionally shaped by optimization and domain experts, interacting to provide realistic results. Machine Learning techniques can tremendously ease the process, and exploit the available data to either create models or refine expert-designed ones. In this survey we cover approaches that have been recently proposed to enhance the modeling process by learning either single constraints, objective functions, or the whole model. We highlight common themes to multiple approaches and draw connections with related fields of research.

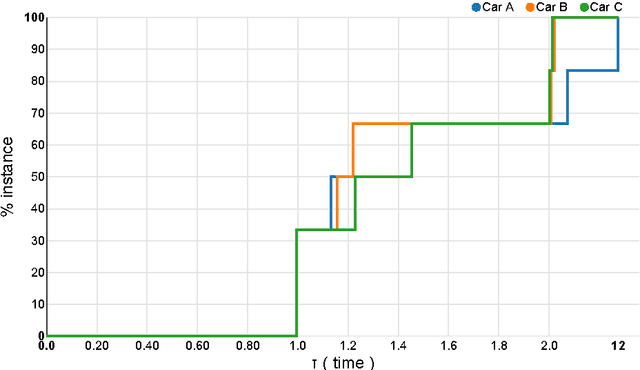

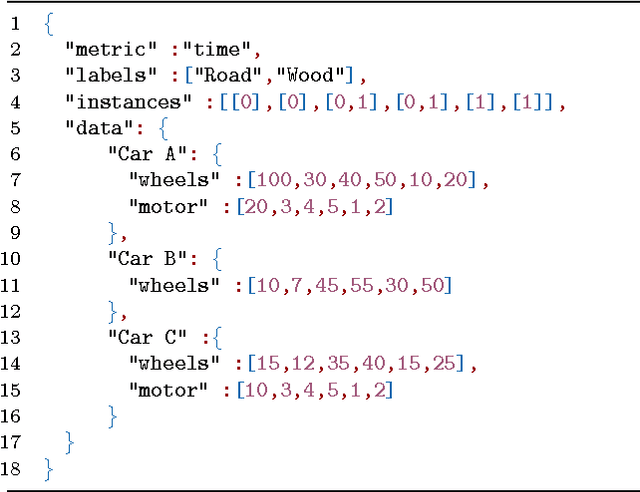

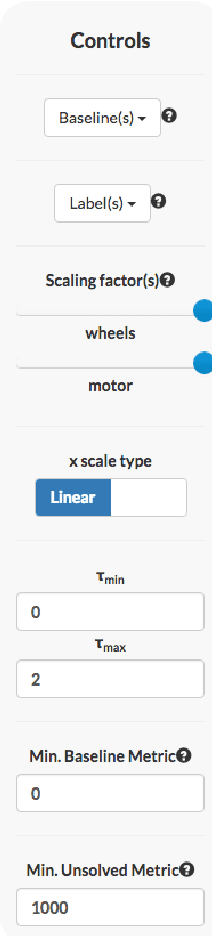

A Visual Web Tool to Perform What-If Analysis of Optimization Approaches

Mar 16, 2017

Abstract:In Operations Research, practical evaluation is essential to validate the efficacy of optimization approaches. This paper promotes the usage of performance profiles as a standard practice to visualize and analyze experimental results. It introduces a Web tool to construct and export performance profiles as SVG or HTML files. In addition, the application relies on a methodology to estimate the benefit of hypothetical solver improvements. Therefore, the tool allows one to employ what-if analysis to screen possible research directions, and identify those having the best potential. The approach is showcased on two Operations Research technologies: Constraint Programming and Mixed Integer Linear Programming.
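A performance profile, in its commonly used form, reports for each solver the fraction of problems it solves within a factor tau of the best solver on that problem. The sketch below assumes that definition, made-up run times, and a deliberately naive what-if scenario (uniformly halving one solver's times); the tool's actual estimation methodology is not described in the abstract and may differ.

```python
from typing import Dict, List


def performance_ratios(times: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Ratio of each solver's time to the best time on the same problem."""
    n_problems = len(next(iter(times.values())))
    best = [min(t[p] for t in times.values()) for p in range(n_problems)]
    return {s: [t[p] / best[p] for p in range(n_problems)] for s, t in times.items()}


def profile(ratios: List[float], tau: float) -> float:
    """Fraction of problems solved within a factor tau of the best solver."""
    return sum(r <= tau for r in ratios) / len(ratios)


# Hypothetical run times (seconds) of two solvers on three problems.
times = {"cp": [1.0, 4.0, 2.0], "milp": [2.0, 2.0, 2.0]}
baseline = profile(performance_ratios(times)["cp"], tau=1.0)

# Naive what-if: suppose the "cp" solver became twice as fast everywhere.
what_if_times = {**times, "cp": [0.5 * t for t in times["cp"]]}
improved = profile(performance_ratios(what_if_times)["cp"], tau=1.0)
```

In this example `baseline` is 2/3, since `cp` is the fastest (or tied for fastest) on two of three problems, and `improved` is 1.0. Note that the ratios must be recomputed after the hypothetical speed-up, because the per-problem best time changes as well.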
