Mayank Vatsa

Subclass Contrastive Loss for Injured Face Recognition

Aug 05, 2020

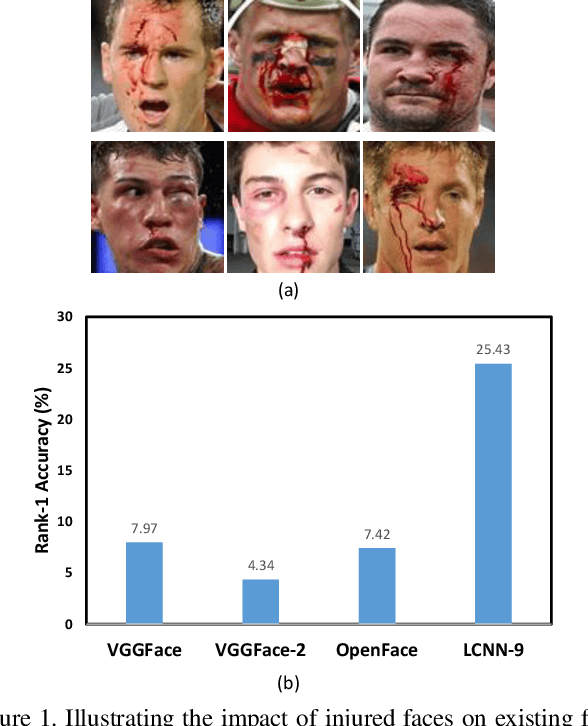

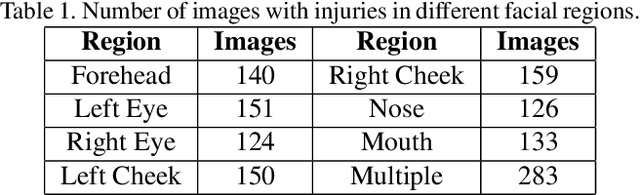

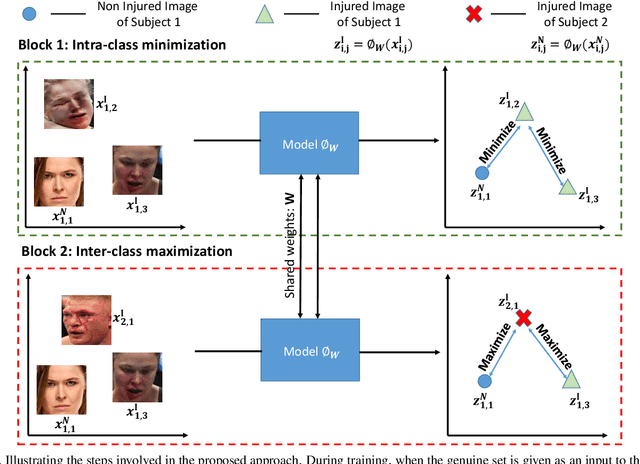

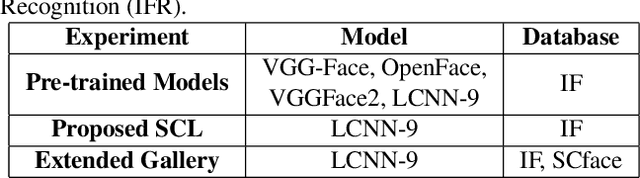

Abstract: Deaths and injuries are common in road accidents, violence, and natural disasters. In such cases, one of the main tasks of responders is to retrieve the identity of the victims to reunite families and ensure proper identification of deceased/injured individuals. Apart from this, identification of unidentified dead bodies due to violence and accidents is crucial for the police investigation. In the absence of identification cards, current practices for this task include DNA profiling and dental profiling. Face is one of the most commonly used and widely accepted biometric modalities for recognition. However, face recognition is challenging in the presence of facial injuries such as swelling, bruises, blood clots, laceration, and avulsion, which affect the features used in recognition. In this paper, for the first time, we address the problem of injured face recognition and propose a novel Subclass Contrastive Loss (SCL) for this task. A novel database, termed the Injured Face (IF) database, is also created to instigate research in this direction. Experimental analysis shows that the proposed loss function surpasses existing algorithms for injured face recognition.

Multi-Task Driven Explainable Diagnosis of COVID-19 using Chest X-ray Images

Aug 03, 2020

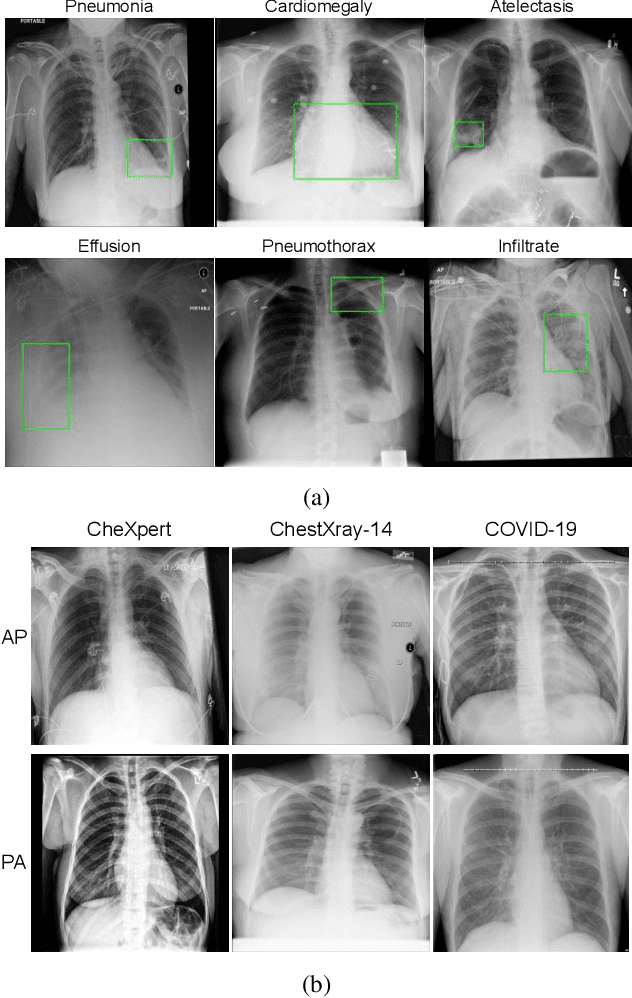

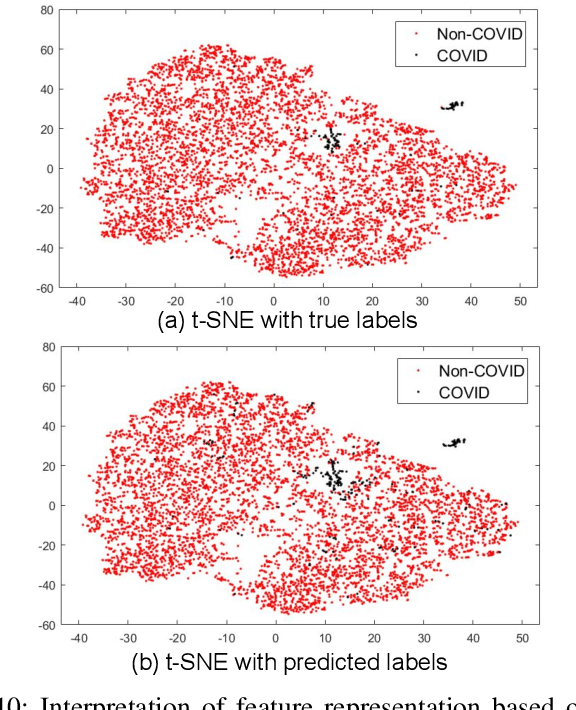

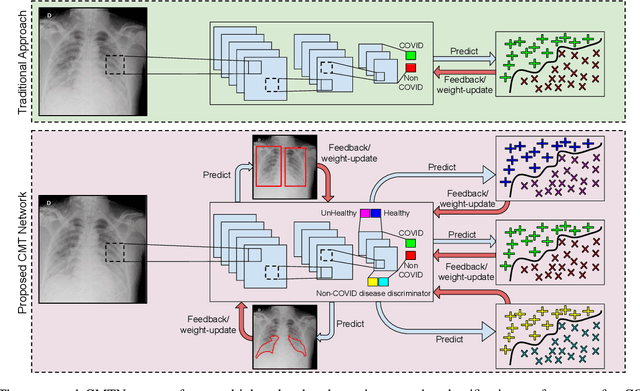

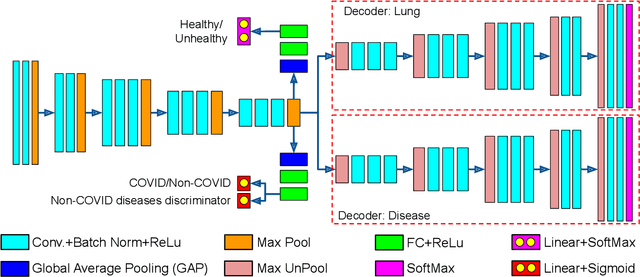

Abstract: With the increasing number of COVID-19 cases globally, all countries are ramping up their testing numbers. While RT-PCR kits are available in sufficient quantity in several countries, others are facing challenges with limited availability of testing kits and processing centers in remote areas. This has motivated researchers to find alternate methods of testing which are reliable, easily accessible, and faster. Chest X-ray (CXR) is one of the modalities gaining acceptance for screening. In this direction, the paper has two primary contributions. Firstly, we present the COVID-19 Multi-Task Network, which is an automated end-to-end network for COVID-19 screening. The proposed network not only predicts whether the CXR has COVID-19 features present or not, it also performs semantic segmentation of the regions of interest to make the model explainable. Secondly, with the help of medical professionals, we manually annotate the lung regions of 9000 frontal chest radiographs taken from ChestXray-14, CheXpert, and a consolidated COVID-19 dataset. Further, 200 chest radiographs pertaining to COVID-19 patients are also annotated for semantic segmentation. This database will be released to the research community.

Generalized Zero-Shot Learning Via Over-Complete Distribution

Apr 01, 2020

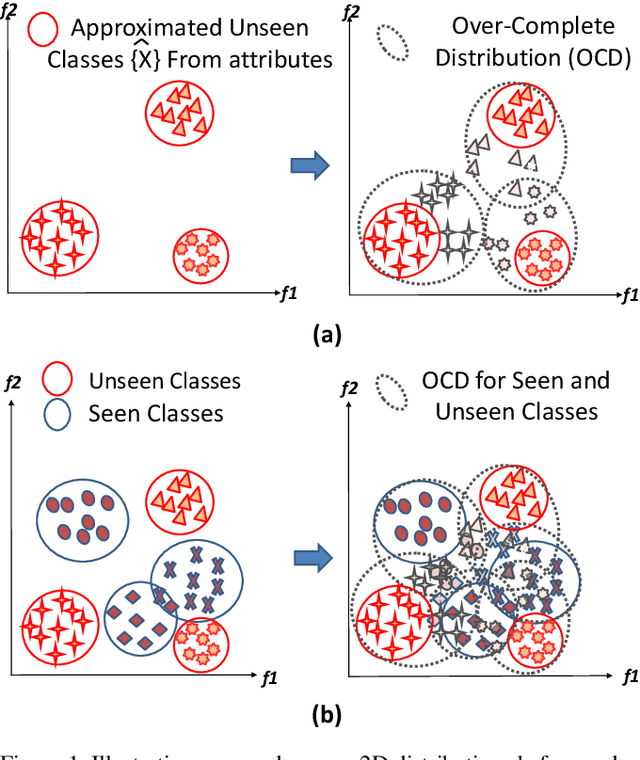

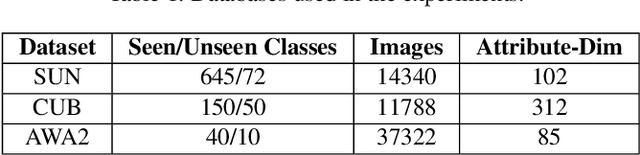

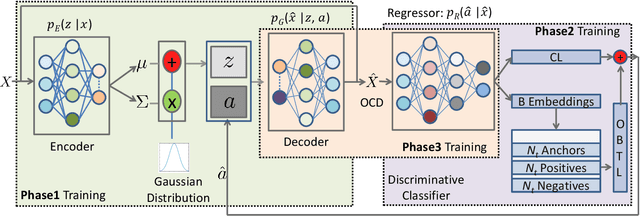

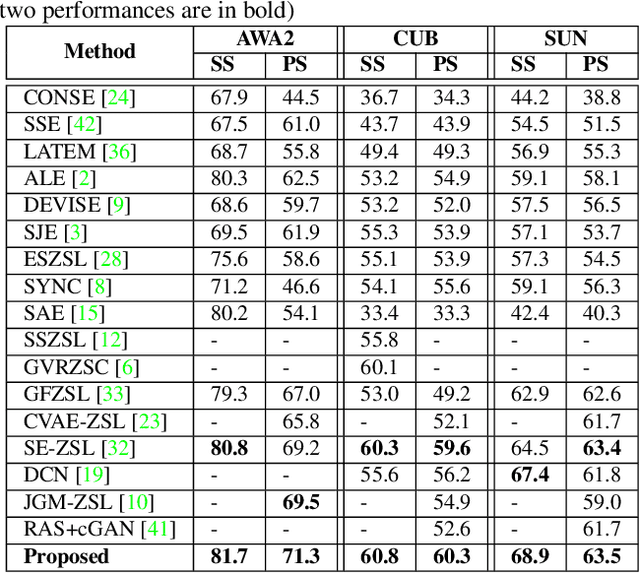

Abstract: A well trained and generalized deep neural network (DNN) should be robust to both seen and unseen classes. However, the performance of most of the existing supervised DNN algorithms degrades for classes which are unseen in the training set. To learn a discriminative classifier which yields good performance in Zero-Shot Learning (ZSL) settings, we propose to generate an Over-Complete Distribution (OCD) using a Conditional Variational Autoencoder (CVAE) of both seen and unseen classes. In order to enforce the separability between classes and reduce the class scatter, we propose the use of Online Batch Triplet Loss (OBTL) and Center Loss (CL) on the generated OCD. The effectiveness of the framework is evaluated using both Zero-Shot Learning and Generalized Zero-Shot Learning protocols on three publicly available benchmark databases: SUN, CUB, and AWA2. The results show that generating over-complete distributions and enforcing the classifier to learn a transform function from overlapping to non-overlapping distributions can improve the performance on both seen and unseen classes.
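The abstract above pairs a batch triplet loss (to separate classes) with a center loss (to reduce within-class scatter). The following is a minimal, dependency-free sketch of that general idea, not the paper's implementation: the batch-hard mining rule, the `margin` value, the use of per-batch class means as centers, and all function names are assumptions made for illustration.

```python
import math


def _dist(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def batch_hard_triplet_loss(embeddings, labels, margin=0.2):
    """Mean hinge loss over anchors, using the hardest positive and negative.

    Assumption: "batch-hard" mining (farthest same-class sample, closest
    other-class sample); the paper's OBTL may mine triplets differently.
    """
    losses = []
    for i, (anchor, label) in enumerate(zip(embeddings, labels)):
        pos = [_dist(anchor, e) for j, e in enumerate(embeddings)
               if labels[j] == label and j != i]
        neg = [_dist(anchor, e) for j, e in enumerate(embeddings)
               if labels[j] != label]
        if not pos or not neg:
            continue  # anchor has no valid triplet in this batch
        losses.append(max(0.0, max(pos) - min(neg) + margin))
    return sum(losses) / len(losses) if losses else 0.0


def center_loss(embeddings, labels):
    """Half the mean squared distance of each sample to its class center.

    Assumption: centers are the per-batch class means; the original center
    loss formulation keeps learnable centers updated across batches.
    """
    by_class = {}
    for e, l in zip(embeddings, labels):
        by_class.setdefault(l, []).append(e)
    centers = {l: [sum(col) / len(v) for col in zip(*v)]
               for l, v in by_class.items()}
    total = sum(_dist(e, centers[l]) ** 2 for e, l in zip(embeddings, labels))
    return 0.5 * total / len(embeddings)
```

With well separated classes the triplet term vanishes and only the center term remains, which is the behavior the two losses are meant to complement: one pushes classes apart, the other tightens each class.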

On the Robustness of Face Recognition Algorithms Against Attacks and Bias

Feb 07, 2020

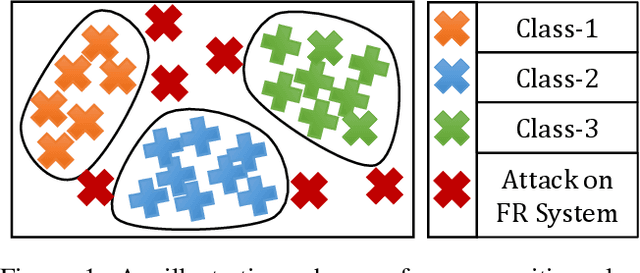

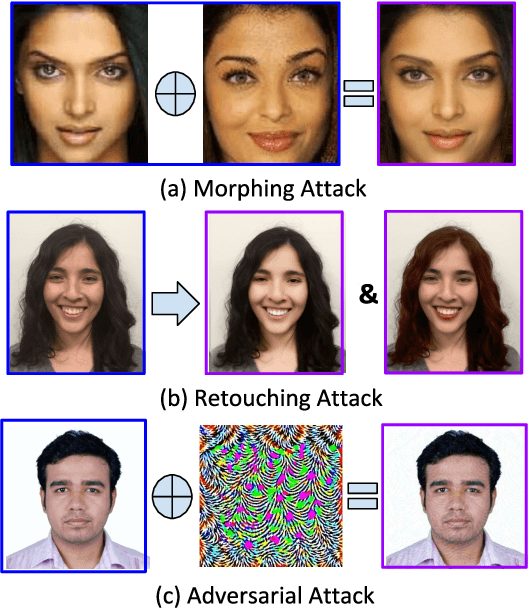

Abstract: Face recognition algorithms have demonstrated very high recognition performance, suggesting suitability for real world applications. Despite the enhanced accuracies, the robustness of these algorithms against attacks and bias has been challenged. This paper summarizes different ways in which the robustness of a face recognition algorithm is challenged, which can severely affect its intended working. Different types of attacks, such as physical presentation attacks, disguise/makeup, digital adversarial attacks, and morphing/tampering using GANs, are discussed. We also present a discussion on the effect of bias on face recognition models and showcase that factors such as age and gender variations affect the performance of modern algorithms. The paper also presents the potential reasons for these challenges and some of the future research directions for increasing the robustness of face recognition models.

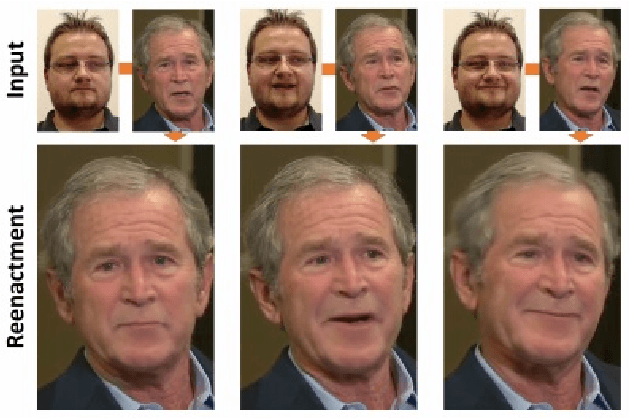

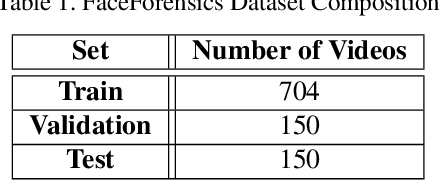

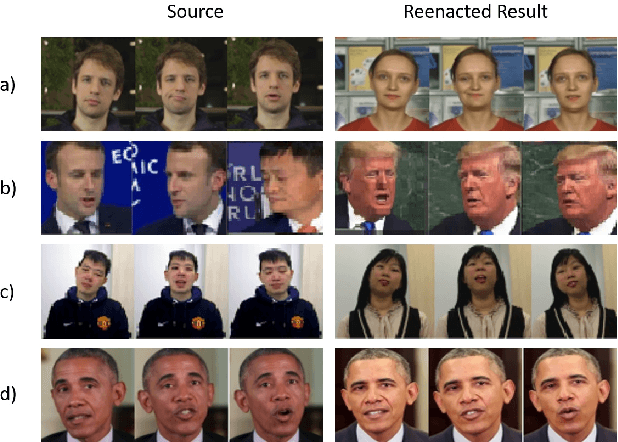

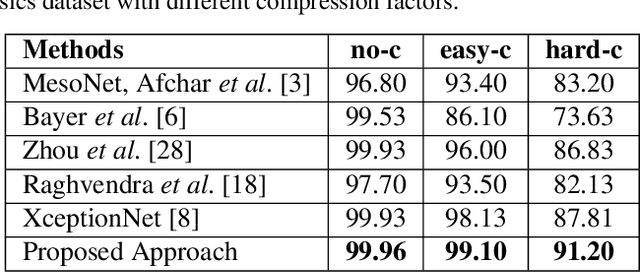

Detecting Face2Face Facial Reenactment in Videos

Jan 21, 2020

Abstract: Visual content has become the primary source of information, as evident in the billions of images and videos shared and uploaded on the Internet every single day. This has led to an increase in alterations in images and videos to make them more informative and eye-catching for viewers worldwide. Some of these alterations are simple, like copy-move, and are easily detectable, while other sophisticated alterations like reenactment based DeepFakes are hard to detect. Reenactment alterations allow the source to change the target expressions and create photo-realistic images and videos. While this technology can potentially be used for several applications, the malicious usage of automatic reenactment has very large social implications. It is therefore important to develop detection techniques to distinguish real images and videos from the altered ones. This research proposes a learning-based algorithm for detecting reenactment based alterations. The proposed algorithm uses a multi-stream network that learns regional artifacts and provides robust performance at various compression levels. We also propose a loss function for the balanced learning of the streams for the proposed network. The performance is evaluated on the publicly available FaceForensics dataset. The results show state-of-the-art classification accuracy of 99.96%, 99.10%, and 91.20% for no, easy, and hard compression factors, respectively.

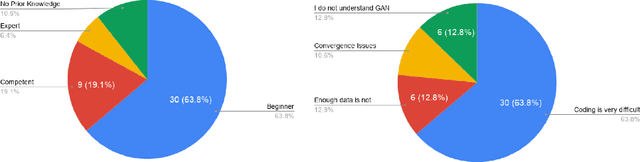

AuthorGAN: Improving GAN Reproducibility using a Modular GAN Framework

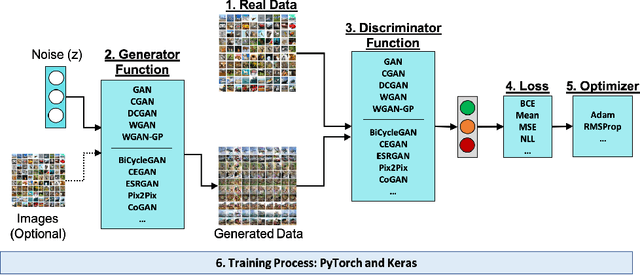

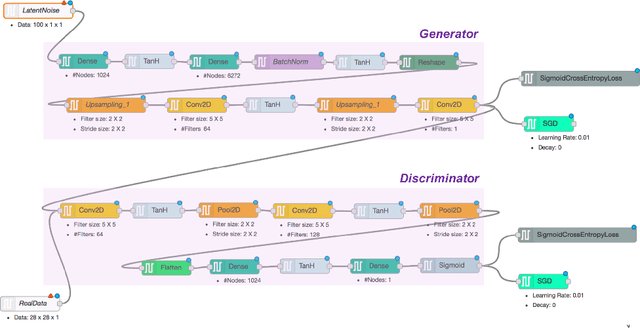

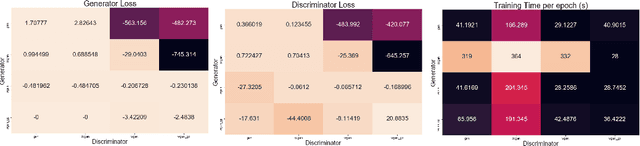

Nov 26, 2019

Abstract: Generative models are becoming increasingly popular in the literature, with Generative Adversarial Networks (GANs) being the most successful variant yet. With this increasing demand and popularity, it is becoming equally difficult and challenging to implement and consume GAN models. A qualitative user survey conducted across 47 practitioners shows that expert level skill is required to use a GAN model for a given task, despite the presence of various open source libraries. In this research, we propose a novel system called AuthorGAN, aiming to achieve true democratization of GAN authoring. A highly modularized, library-agnostic representation of a GAN model is defined to enable interoperability of GAN architectures across different libraries such as Keras, TensorFlow, and PyTorch. An intuitive drag-and-drop based visual designer is built using the Node-RED platform to enable custom architecture design without the need for writing any code. Five different GAN models are implemented as a part of this framework and the performance of the different GAN models is shown using the benchmark MNIST dataset.

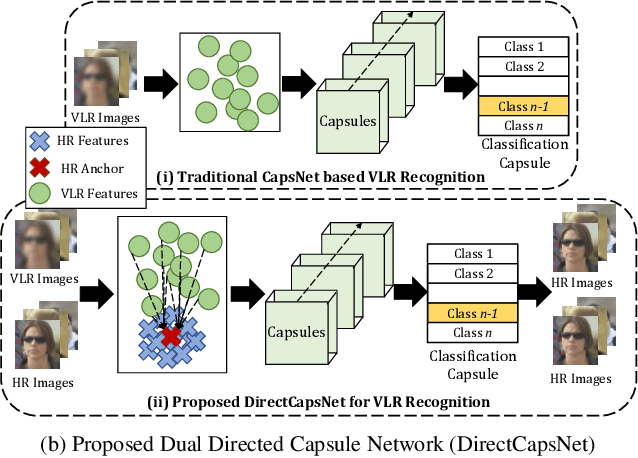

Dual Directed Capsule Network for Very Low Resolution Image Recognition

Aug 27, 2019

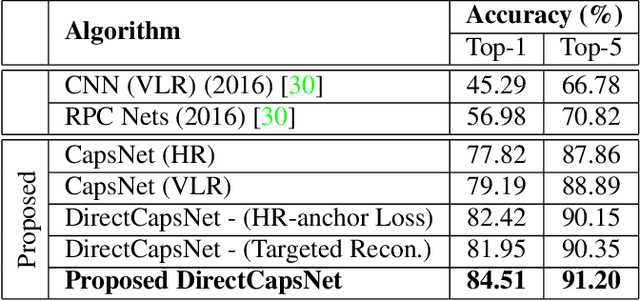

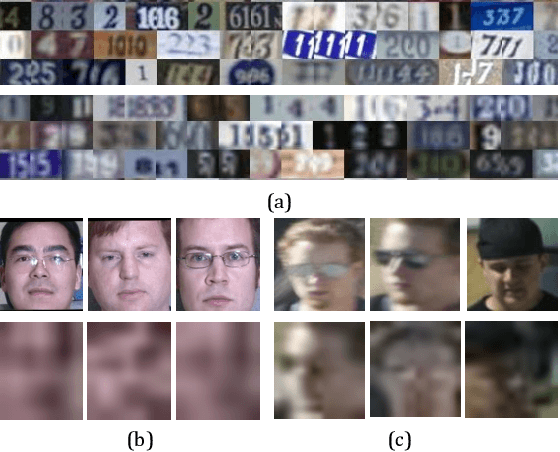

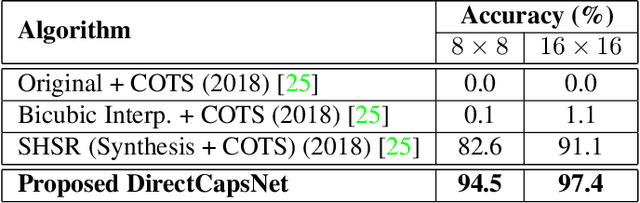

Abstract: Very low resolution (VLR) image recognition corresponds to classifying images with resolution 16x16 or less. Though it has widespread applicability when objects are captured at a very large stand-off distance (e.g. surveillance scenario) or from wide angle mobile cameras, it has received limited attention. This research presents a novel Dual Directed Capsule Network model, termed DirectCapsNet, for addressing VLR digit and face recognition. The proposed architecture utilizes a combination of capsule and convolutional layers for learning an effective VLR recognition model. The architecture also incorporates two novel loss functions: (i) the proposed HR-anchor loss and (ii) the proposed targeted reconstruction loss, in order to overcome the challenges of limited information content in VLR images. The proposed losses use high resolution images as auxiliary data during training to "direct" discriminative feature learning. Multiple experiments for VLR digit classification and VLR face recognition are performed along with comparisons with state-of-the-art algorithms. The proposed DirectCapsNet consistently showcases state-of-the-art results; for example, on the UCCS face database, it shows over 95% face recognition accuracy when 16x16 images are matched with 80x80 images.

On Learning Density Aware Embeddings

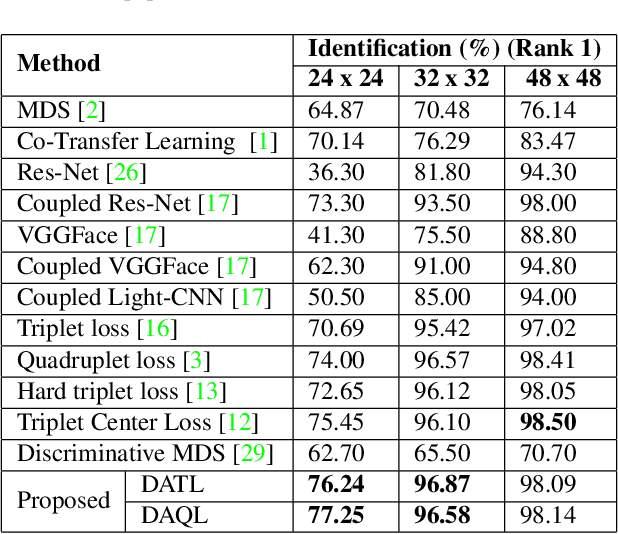

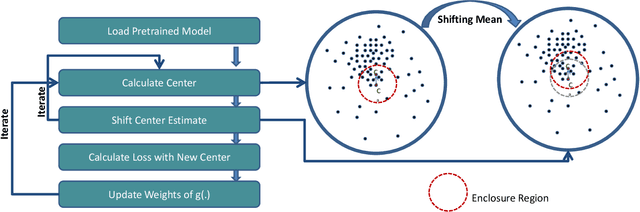

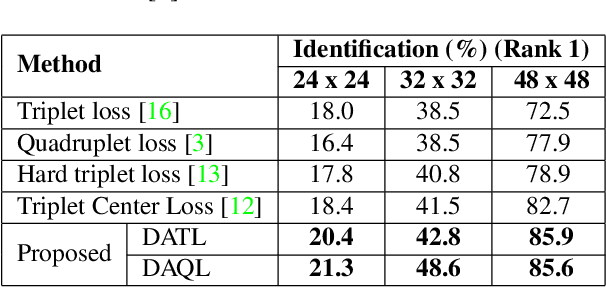

Apr 08, 2019

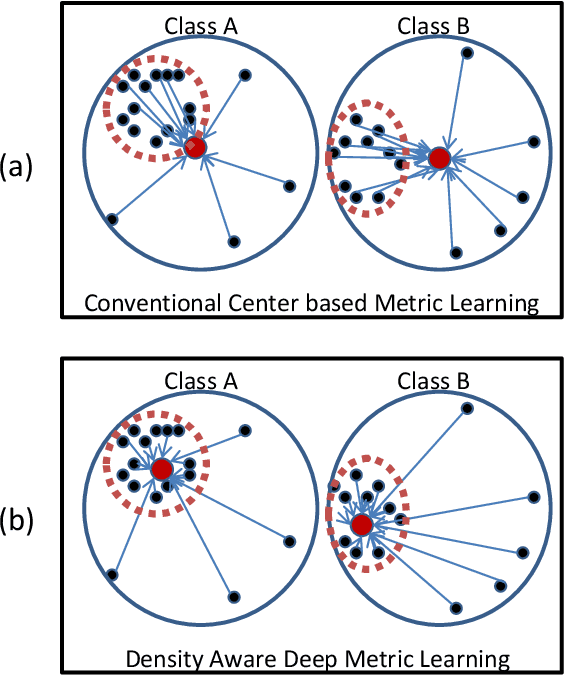

Abstract: Deep metric learning algorithms have been utilized to learn discriminative and generalizable models which are effective for classifying unseen classes. In this paper, a novel noise tolerant deep metric learning algorithm is proposed. The proposed method, termed Density Aware Metric Learning, forces the model to learn embeddings that are pulled towards the densest region of the cluster for each class. This is achieved by iteratively shifting the estimate of the center towards the dense region of the cluster, thereby leading to faster convergence and higher generalizability. In addition, the approach is robust to noisy samples in the training data, often present as outliers. Detailed experiments and analysis on two challenging cross-modal face recognition databases and two popular object recognition databases exhibit the efficacy of the proposed approach. It has superior convergence, requires less training time, and yields better accuracies than several popular deep metric learning methods.
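The abstract above describes iteratively shifting a class-center estimate towards the dense region of its cluster so that outliers have little influence. One way to illustrate that idea is a Gaussian-weighted (mean-shift style) update, sketched below. This is an assumption chosen for illustration, not the update rule from the paper; the function name, the `bandwidth` parameter, and the iteration count are all hypothetical.

```python
import math


def density_aware_center(points, bandwidth=1.0, iters=20):
    """Shift a center estimate toward the densest region of a cluster.

    Starts at the plain mean and repeatedly recomputes a Gaussian-weighted
    mean, so distant (likely noisy) samples contribute less at each step.
    Assumption: a mean-shift style update, used here only as an illustration.
    """
    dim = len(points[0])
    center = [sum(p[d] for p in points) / len(points) for d in range(dim)]
    for _ in range(iters):
        weights = []
        for p in points:
            sq_dist = sum((p[d] - center[d]) ** 2 for d in range(dim))
            weights.append(math.exp(-sq_dist / (2.0 * bandwidth ** 2)))
        total = sum(weights)
        center = [sum(w * p[d] for w, p in zip(weights, points)) / total
                  for d in range(dim)]
    return center


# Five samples near the origin plus one far outlier.
samples = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (-0.1, 0.0), (0.0, -0.1), (5.0, 5.0)]
center = density_aware_center(samples)
```

The plain mean of `samples` is pulled well away from the origin by the single outlier, whereas the weighted estimate settles close to the five clustered points.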

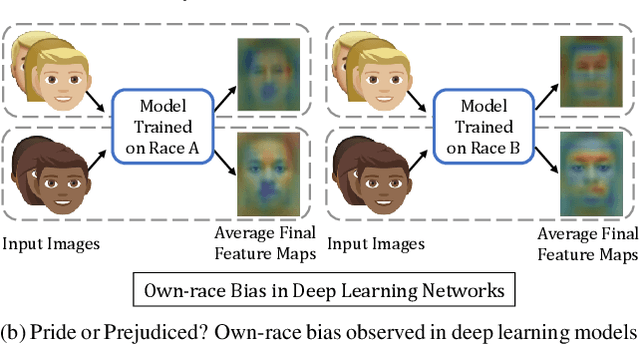

Deep Learning for Face Recognition: Pride or Prejudiced?

Apr 02, 2019

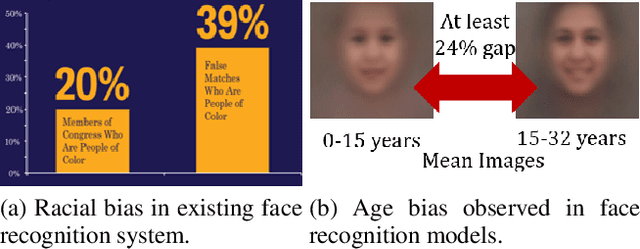

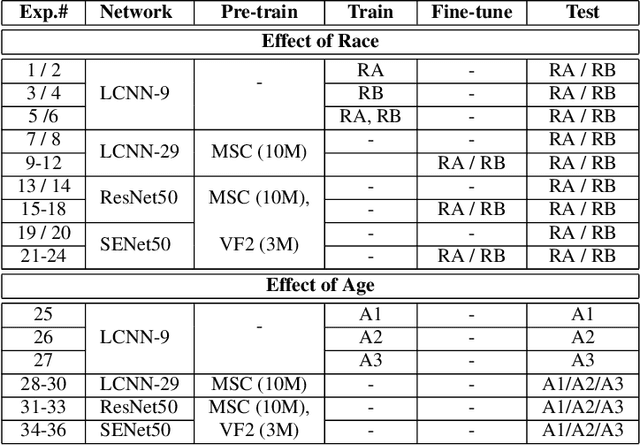

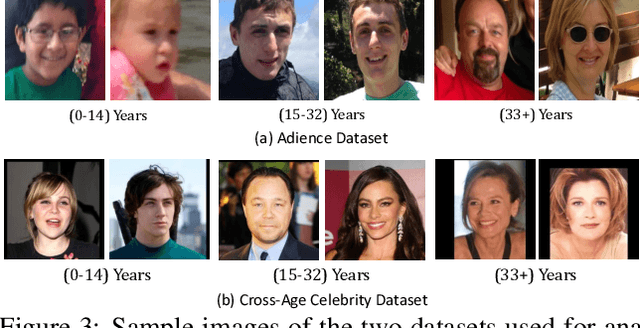

Abstract: Do very high accuracies of deep networks suggest pride of effective AI or are deep networks prejudiced? Do they suffer from in-group biases (own-race-bias and own-age-bias), and mimic human behavior? Is in-group specific information being encoded sub-consciously by the deep networks? This research attempts to answer these questions and presents an in-depth analysis of 'bias' in deep learning based face recognition systems. This is the first work which decodes if and where bias is encoded for face recognition. Taking cues from cognitive studies, we inspect if deep networks are also affected by social in- and out-group effects. Networks are analyzed for own-race and own-age bias, both of which have been well established in human beings. The sub-conscious behavior of face recognition models is examined to understand if they encode race or age specific features for face recognition. Analysis is performed based on 36 experiments conducted on multiple datasets. Four deep learning networks, either trained from scratch or pre-trained on over 10M images, are used. Variations across class activation maps and feature visualizations provide novel insights into the functioning of deep learning systems, suggesting behavior similar to humans. It is our belief that a better understanding of state-of-the-art deep learning networks would enable researchers to address the given challenge of bias in AI, and develop fairer systems.

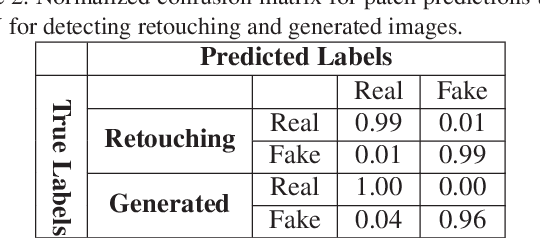

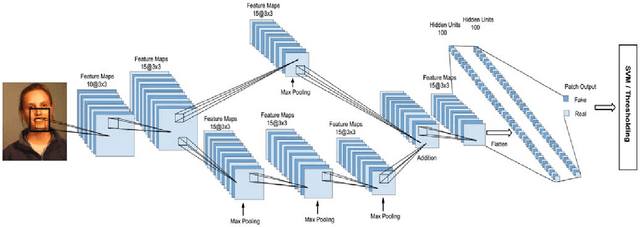

On Detecting GANs and Retouching based Synthetic Alterations

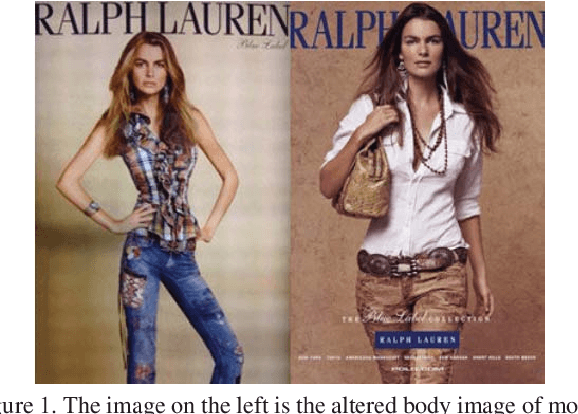

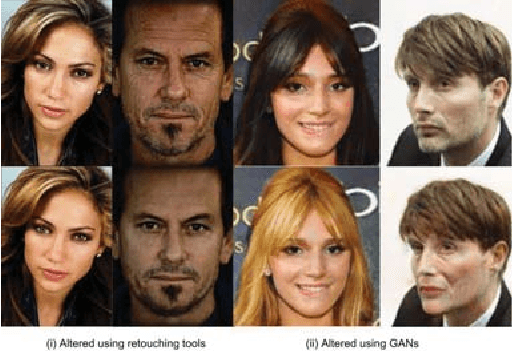

Jan 26, 2019

Abstract: Digitally retouching images has become a popular trend, with people posting altered images on social media and even magazines posting flawless facial images of celebrities. Further, with advancements in Generative Adversarial Networks (GANs), changing attributes and retouching have now become very easy. Such synthetic alterations have an adverse effect on face recognition algorithms. While researchers have proposed methods to detect image tampering, detecting GAN-generated images has still not been explored. This paper proposes a supervised deep learning algorithm using Convolutional Neural Networks (CNNs) to detect synthetically altered images. The algorithm yields an accuracy of 99.65% on detecting retouching on the ND-IIITD dataset. It outperforms the previous state of the art, which reported an accuracy of 87% on the database. For distinguishing between real images and images generated using GANs, the proposed algorithm yields an accuracy of 99.83%.
